Many inequalities in information theory have entropies or mutual informations appearing as linear additive terms (e.g. Shannon-type inequalities). It’s also not uncommon to see entropies appearing as exponents (e.g. entropy power inequality). But perhaps it’s not immediately seen how products of mutual informations make sense.

Recently I have encountered an inequality involving a product of mutual informations, which I cannot find a good way to prove (or disprove, though some numerics and asymptotic analysis seem to suggest its validity). I would much appreciate it if someone could be smart and gracious enough to provide a proof, or counter example, or generalization.

The formulation is quite simple: suppose are binary random variables with

, and

is another random variable such that

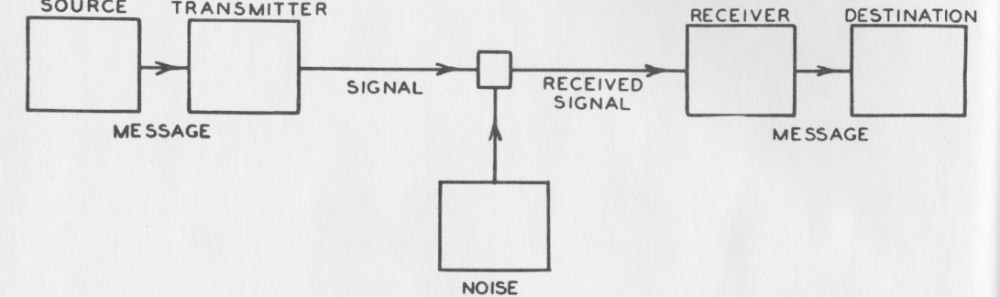

forms a Markov chain. The claim is that

. (Note that the left side is also upper bounded by either one of the two factors on the right side, by the well-known data processing inequality.

At first glance this inequality seems absurd because different units appear on the two sides; but this may be resolved by considering that has only one bit of information.

For a given joint distribution of and

, the equality can be achieved when

is an injective function of

.

———————————-

Update: the inequality has been proved, thanks very much for the help by Sudeep Kamath (see comments). An alternative proof (which avoids computing the monotonicity of is sketched as follows: by convexity of the function

(see for example, proof of Mrs Gerbers Lemma), we have

for any

, where

. Averaging over

under

gives

, and the claim follows after rearrangements.