Group 11 – Don’t Worry About It

Daniel, Krithin, Amy, Thomas, Jonathan

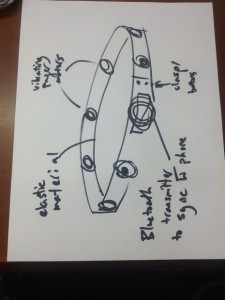

The NavBelt provides discreet and convenient navigational directions to the user.

Testing Discussion

Consent

We read each participant a script that listed the potential risks and outlined how the device would work. We felt that this was appropriate because it made any risks or problems with the experiment clear, and the worst plausible accident was minor. In addition, we gave our participants the option of leaving the experiment at any time.

Scripts HERE.

Participants

All participants were female undergraduates.

Since our target audience was “people who are in an unfamiliar area”, we chose the E-Quad as an easily accessible region which many Princeton students would not be familiar with, and used only non-engineers as participants in the experiment. While it would have been ideal to use participants who were miles from familiar territory, rather than just across the road, convincing visitors to Princeton to allow us to strap a belt with lots of wires to them and follow them around seemed infeasible.

- Participant 1 was a female senior majoring in English who had been in the E-Quad a few times, but had only ever entered the basement.

- Participant 2 was a female junior majoring in psychology.

- Participant 3 was a female junior majoring in EEB.

We selected these participants first by availability during the same block of time as our team, and second by unfamiliarity with the E-Quad.

Prototype

The tests were conducted in the E-Quad. We prepped the participants and obtained their consent in a lounge area in front of the E-Quad cafe. From that starting point, the participants had to traverse hallways and a staircase to reach the final destination, which was a courtyard. The prototype was a belt with vibrating motors taped to it. Each motor had alligator clips that could be connected to a battery to complete the circuit and make the motor vibrate. A pair of team members trailed each participant, closing circuits as needed to vibrate a motor and send the appropriate direction cue.

This setup is a significant deviation from our earlier paper prototype: it moves away from pure paper and manual control to include some minimal electrical signaling. We feel, however, that this was necessary to carry out testing. In our original setup, one team member physically prodded the tester to simulate haptic feedback, and the team members who tried it reported feeling that their personal space was violated. That might have made it very difficult to recruit participants and have them complete the test. We therefore obtained approval from Reid before carrying out tests with our modified not-quite-paper prototype. The simulated mobile phone was unchanged and did not include any non-paper parts.

Procedure

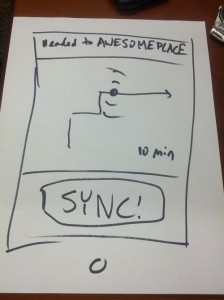

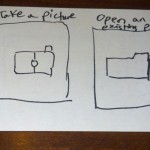

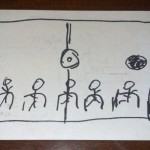

Our testing procedure involved two team members (Daniel and Thomas) following the participant and causing the belt to vibrate by manually closing circuits in a Wizard-of-Oz manner. Another team member (Krithin) videotaped the entire testing procedure while two others (Amy and Jonathan) took notes. We first asked the participants to select a destination on the mobile interface, then to follow the directions given by the belt. To evaluate their performance on task 3, we explicitly asked them to rely on the buzzer signals rather than the map to the extent they were comfortable.

- Prototype Overhead View

- Participant Using Belt with Testers Navigating

Scripts HERE.

Results Summary

All three participants successfully found the courtyard. The first participant had trouble navigating at first, but this was due to confusion among the team members operating the Wizard-of-Oz buzzers. Most participants found inputting the destination to be the most difficult part and, once the belt started buzzing, reported that the haptic directions were “easy” to follow. Fine-grained distinctions between paths were difficult at first, though: Participant 1 was confused when we arrived at a staircase that led both up and down and the belt simply told her to walk forward. (In later tests, we resolved this by using the left buzzer to tell participants to take the staircase on the left, which led down.) Finally, all participants appeared to enjoy the testing process, and two of the three reported that they would use the belt in real life; the third called it “degrading to the human condition”.

One of the participants glanced at the paper map repeatedly (at least 5 times) while en route to the destination, though she still reported being confident in the directions the belt gave her.

One aspect of the system that all the participants had trouble with was knowing when navigation had actually started after they finished inputting the destination on the phone interface.

Results Discussion

Many of the difficulties the participants experienced were due to problems in the test, and not in the prototype itself. For instance, Participant 1 found herself continually being steered into glass walls while the team members handling the alligator clips tried to figure out which one to connect to the battery. Similarly, while we were testing with Participant 3, one of the buzzers malfunctioned, so she did not correctly receive the three-buzzer end-of-journey signal.

Two participants preferred that directions be given at the moment they needed to turn. The third suggested that it would be less confusing if directions were given in advance, or if some signal announced that a direction was forthcoming. Her reasoning was that it was easy to imagine a vibration that was not there and, conversely, easy to become habituated to a constant vibration and stop noticing it.

The difficulty in using the paper prototype for the phone interface was probably caused at least in part by the fact that only one of the participants regularly used a smartphone. In hindsight, we might have used familiarity with Google Maps on a smartphone, or with GPS units, as a screening factor when selecting participants. The fact that some participants glanced at the map seems unavoidable: while relative, immediate directions are all that one needs to navigate, many people find it comforting to know where they are on a larger scale, and we cannot provide that information through the belt. However, using the map as an auxiliary device and the navigation belt as the main source of information is still a better method than the current standard navigation method of relying solely on a smartphone.

To address the fact that users were not sure when the navigation system had started, we might in the actual product either have an explicit button in the interface for the user to indicate that they are done inputting the destination, or have the belt start buzzing right away, thus providing immediate feedback that the destination has been successfully set.
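As a rough illustration of that fix, the sketch below combines both ideas: an explicit "done" action that stores the destination and immediately fires the first direction cue. Everything in it is hypothetical and not part of our prototype. The names `Buzzer`, `CUES`, and `NavSession` are made up for this example, the left/front/right layout is an assumed reading of the three buzzers, and the "forward" and "right" entries are assumed by symmetry with the left-buzzer cue we used in testing.

```python
from enum import Enum


class Buzzer(Enum):
    # Assumed belt layout: three motors (hypothetical names).
    LEFT = "left"
    FRONT = "front"
    RIGHT = "right"


# Cue-to-buzzer mapping. "left" and "arrived" follow the cues used in the
# tests; "forward" and "right" are assumptions made for this sketch.
CUES = {
    "left": {Buzzer.LEFT},
    "forward": {Buzzer.FRONT},
    "right": {Buzzer.RIGHT},
    "arrived": {Buzzer.LEFT, Buzzer.FRONT, Buzzer.RIGHT},
}


class NavSession:
    """Hypothetical navigation session, used only to illustrate the idea."""

    def __init__(self):
        self.destination = None
        self.fired = []  # buzzer sets, in the order they were fired

    def buzz(self, cue):
        """Record and return the set of buzzers for a named cue."""
        buzzers = CUES[cue]
        self.fired.append(buzzers)
        return buzzers

    def confirm_destination(self, destination, first_cue):
        """Explicit 'done' action: store the destination, then buzz at once
        so the user knows that navigation has started."""
        self.destination = destination
        return self.buzz(first_cue)


session = NavSession()
session.confirm_destination("courtyard", "forward")
```

In this sketch the user never has to guess whether the belt is active, because confirming the destination and receiving the first buzz are a single step.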

Higher-Fidelity Prototype

We are ready to build without further testing.