Group Number and Name

Group 14, Team Chewbacca

Eugene, Stephen, Jean, Karena

Description

Our project is a system consisting of a bowl, dog collar, and mobile app that helps busy owners take care of their dog by collecting and analyzing data about the dog’s diet and fitness, and optionally sending the owner notifications when they should feed or exercise their dog.

Projects

P1: Group Brainstorming

http://blogs.princeton.edu/humancomputerinterface/2013/02/22/p1-team-chewbacca/

P2: Contextual Inquiry and Task Analysis

http://blogs.princeton.edu/humancomputerinterface/2013/03/12/p2chewbacca/

P3: Low-Fidelity Prototype

http://blogs.princeton.edu/humancomputerinterface/2013/03/29/p3-lab-group-14/

P4: Usability Test with Lo-Fi Prototype

http://blogs.princeton.edu/humancomputerinterface/2013/04/08/p4-team-chewbacca/

P5: Working Prototype

http://blogs.princeton.edu/humancomputerinterface/2013/04/22/p5-group-14-team-chewbacca/

P6: Pilot Usability Study

http://blogs.princeton.edu/humancomputerinterface/2013/05/06/p6-team-chewbacca/

Project Video

http://www.youtube.com/watch?v=b1t13_luvkc&feature=em-upload_owner

Changes

1. Added the “generate report” button to the top “action bar” of the application. This way, users can see the button much more easily. We found in our testing that this was a significant problem for users who were inexperienced with the hardware of the Samsung Galaxy S3, where the settings button appears on the top right in that position.

2. Changed the home screen panels to actual buttons that would automate a swipe motion instead of doing nothing upon clicking. This makes it all right if the user identifies one of the panels as a button instead of swiping, since both actions will lead to the same place.

3. Instead of triggering the “Wizard of Oz” functionality by pressing the panels five times, we moved the trigger to the settings menu.

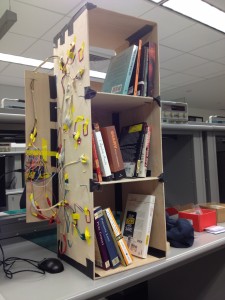

4. Redesigned the collar to be more compact.

Evolution of Goals/Design

Overall goal

When we began brainstorming the design of our system, we thought about making a convenient pet owner system that would integrate any feature related to owning a pet. This included features like a GPS tracking device to detect the location of the dog, and a physical dog feeder that would release food at certain times of the day. As we began developing our project, we realized that we should make our app much more focused, and not just add any feature related to pet-owning. As we performed contextual inquiry and interviews with our users, busy dog owners, we realized that maintaining the dog’s health should be our primary focus: they were too busy to keep track of their dog’s activity and health habits on a day-to-day basis, but they also did not want to be overwhelmed with data. Therefore, our overall goal for the pet-owner system changed from making pet-owning more convenient for the dog owner to helping the user maintain the dog’s health through three tasks: tracking the dog’s diet pattern (how much it has eaten every day), tracking the dog’s activity level (how many steps it has taken every day), and sending the data to a veterinarian who can add further insight on the dog’s behavior.

Design changes to system

The design of our project also evolved considerably over the course of the semester. In our initial sketches and prototypes, our mobile app functioned more as a data log than as a tool for data interpretation. For example, in our paper prototype in P3, the homepage of our mobile app only included a “total health score” and the time the dog bowl was last filled. It did not include suggestions about how to respond to or improve the health score, or provide feedback to the user about whether or not they should feed or walk their dog. During our prototype testing in P4, users brought this limitation to our attention: they told us that they wanted direct, actionable feedback and recommendations from the mobile app. Another major design change we made to our mobile app was to “hide” our more detailed data in separate pages linked from the main diet and activity pages, as prototype testers said they were overwhelmed by the long-term data and would not access it very often. This also helped us keep the most relevant information “above the fold,” in keeping with Nielsen’s Heuristics (H8: Aesthetic and Minimalist Design). Finally, we changed the initial designs of both the dog bowl and collar by eliminating the time display panel on the dog bowl and the LEDs on both the bowl and collar. We made this change because these elements did not communicate much information to the user, and we felt that removing them created a more minimalist design.

Critical Evaluation

As our system is currently implemented, there are several design obstacles that we need to address in order to turn this final prototype into a useful real-world system. First, we need to fully integrate Bluetooth into our app: we currently signal another application, Amarino, to run in the background and connect over Bluetooth instead of handling the connection in the DogSmart application itself. We must also consider how the device scales to multiple users. Our current system only allows one smartphone to control the device, but in the future we want users to access the data from their computers and to let other caretakers view and manage it. We can also consider how we might adapt the device for other pets, such as cats, and what other constraints we might need to add to our app to make supporting other animals feasible.

However, we believe that these design changes are all feasible, and that with a reasonable amount of further iteration we could turn DogSmart into a real-world system. At Communiversity, we spoke with a woman who runs a small dog-care business and asked her a few questions. First, she commented that our system could be very useful for detecting sickness in dogs, since one can usually tell whether a dog is sick by checking its health habits over an extended period of time. Additionally, she noted that this device could be very popular with dog owners, since they are often people who care very much about their dogs and are willing to purchase a variety of products to help their dog stay happy and healthy.

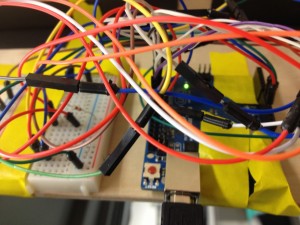

The process of creating and testing low- and high-fidelity prototypes has taught us a lot about both dog care and the technology requirements of this application space. For example, by interviewing dog owners through contextual inquiry and prototype testing we learned that dog care is mostly carried out by habit and routine, so any application or system in this space must integrate seamlessly into the user’s everyday life, running in the background and enhancing their normal routine without being an intrusive presence. Thus, this application space is an appropriate environment for ubiquitous computing systems, and throughout the development process for DogSmart we have worked to fit our system to this model of computing. In addition, developing our system taught us how to integrate several different hardware/software systems; this included using Bluetooth communication to send data from Arduino hardware/software to an Android app. During this process, we also realized the limitations of Bluetooth and the complications that arise from them. The Bluetooth device has a limited range, meaning that data can only be transferred when the Bluetooth device is in the vicinity of the Android phone. This meant we had to either ensure the dog was always within range of the phone, or configure a database in the Arduino software so that data stored on the Arduino could be transferred to the Android app later, once the phone was back in range.
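The second option above, storing readings until the phone is back in range, can be sketched as a small store-and-forward buffer. This is only an illustration in Python rather than our Arduino or Android code; the `ReadingBuffer` class, its capacity, and the `send` callback are hypothetical names chosen for the example, and the sample readings are made up.

```python
from collections import deque


class ReadingBuffer:
    """Holds sensor readings while the phone is out of Bluetooth range."""

    def __init__(self, capacity):
        # Bounded, like a small on-device store: when full, the oldest
        # reading is dropped to make room for the newest one.
        self._pending = deque(maxlen=capacity)

    def record(self, timestamp, steps):
        """Queue one (timestamp, steps) reading for later transfer."""
        self._pending.append((timestamp, steps))

    def flush(self, send):
        """Send pending readings oldest-first; stop at the first failure.

        `send` is a hypothetical callback that returns True when the phone
        acknowledged the reading. Returns the number of readings sent.
        """
        sent = 0
        while self._pending:
            if not send(self._pending[0]):
                break  # out of range again; keep the rest for next time
            self._pending.popleft()
            sent += 1
        return sent


# Four made-up readings go into a buffer that only holds three.
buffer = ReadingBuffer(capacity=3)
for timestamp, steps in [(0, 10), (60, 25), (120, 5), (180, 40)]:
    buffer.record(timestamp, steps)

# Simulate the phone coming back into range and accepting everything.
received = []
buffer.flush(lambda reading: received.append(reading) or True)
```

A bounded buffer reflects the small amount of storage available on a microcontroller; whether dropping the oldest reading is acceptable would depend on how the data is used.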

Moving Forward

If we had more time, we would definitely focus on giving our mobile app the ability to store data over time. Our app currently runs only in real time, and its outputs are based on sensor data from events as they occur. To incorporate persistent storage, we would have the option of using EEPROM storage on the Arduino or SQLite on Android. We would also focus on incorporating real-world data about dog health into the program. This would mean identifying important measures of dog health, including the recommended level of exercise for different dogs and the corresponding amount of food they should eat based on their exercise level. If this data did not exist, we would likely have to talk to veterinarians or experts in animal health who could give reasonable answers. This data would allow us to give the user more accurate feedback about their dog’s overall health, and more accurate recommendations. Alternatively, we could establish a “baseline testing” period of using the system, during which the device would collect data about the dog’s movement and eating levels and incorporate it into a baseline measurement. When the dog deviates from this baseline, the device could report it as non-healthy behavior.
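As a rough sketch of the “baseline testing” idea, the example below builds a baseline from a few days of step counts and flags a day that falls far from it. The two-standard-deviation tolerance, the function names, and the step counts are all assumptions made for illustration; they are not part of our prototype and are not based on veterinary guidance.

```python
from statistics import mean, stdev


def build_baseline(daily_steps):
    """Summarize the baseline period as (average, spread).

    Needs at least two days of data, since stdev is undefined for one value.
    """
    return mean(daily_steps), stdev(daily_steps)


def is_unusual(steps_today, baseline, tolerance=2.0):
    """Flag a day whose step count is far from the baseline average.

    `tolerance` is a hypothetical cutoff measured in standard deviations.
    """
    average, spread = baseline
    return abs(steps_today - average) > tolerance * spread


# Made-up step counts for a five-day baseline period.
baseline = build_baseline([4000, 4200, 3900, 4100, 3800])

typical_day = is_unusual(4100, baseline)  # close to the average
quiet_day = is_unusual(2500, baseline)    # far below the average
```

The same approach could be applied to the amount of food eaten per day. A real system would probably need a longer baseline period and some allowance for normal variation, such as weekends or bad weather.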

More specifically, we would need to replace all the features of our app for which we are currently using “Wizard-of-Oz” techniques. This includes showing actual graphs of activity level and amounts of food that correspond to data collected over time. It also means having the color changes reflect how urgent the situation is, based on data collected over time (the last time the dog was fed and the dog’s activity level). We would also like to have a valid way of assessing the dog’s health and calculating the health score. This is related to having real-world data stored in our app or a “baseline” data set, as mentioned above. In the future, we would like our “generate report” feature to compile a nicely formatted PDF of the data collected over time and e-mail it to the veterinarian. Currently the app does not have the data, so we could not actually implement this feature. We would also like to be able to send reminder notifications to the user, telling them that their dog needs to be fed. This could be done either as a text message or as an Android reminder message. We would also like the app to be customized to different types of dogs: the user would enter the type of dog they have, and the threshold for steps and the recommended food and activity levels would be calibrated accordingly. Finally, we would like to make the picture on the home screen of the app customizable, so that the user can upload a picture of their own dog. If we were to test users in the future, we would like to test our app with several types of dogs to see how it responds to them. Furthermore, we would get feedback from users on how they might actually use the long-term data and, from testing, identify how we would like to display the data collected. Lastly, we would likely talk to dog experts who could help with the back-end analysis of data and identify which features are useful for a veterinarian and which are not.
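To make the color changes and per-dog calibration described above more concrete, here is a minimal sketch of how an urgency color might be computed. The profile names, feeding intervals, step goals, and cutoffs are all hypothetical placeholders; real values would have to come from veterinarians or from the baseline data.

```python
# Hypothetical per-dog settings, keyed by a type of dog the user selects.
PROFILES = {
    "small": {"feed_interval_hours": 8, "daily_step_goal": 3000},
    "large": {"feed_interval_hours": 12, "daily_step_goal": 8000},
}


def urgency_color(hours_since_fed, steps_last_24h, profile):
    """Map time since the last feeding and recent activity to a color.

    Both inputs are compared against the selected profile, so the same
    raw numbers can mean different things for different dogs.
    """
    feed_ratio = hours_since_fed / profile["feed_interval_hours"]
    step_ratio = steps_last_24h / profile["daily_step_goal"]

    # Placeholder cutoffs: well overdue for food, or very little activity.
    if feed_ratio >= 1.5 or step_ratio < 0.25:
        return "red"
    # Due for food, or somewhat under the activity goal.
    if feed_ratio >= 1.0 or step_ratio < 0.75:
        return "yellow"
    return "green"


fed_and_active = urgency_color(4, 3000, PROFILES["small"])
due_for_food = urgency_color(9, 3000, PROFILES["small"])
long_overdue = urgency_color(20, 9000, PROFILES["large"])
```

Using the last 24 hours of steps rather than the steps since midnight avoids showing a warning every morning before the dog has had a chance to move.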

Source Code

https://dl.dropboxusercontent.com/u/85418085/CanineCare.zip

Third Party Code

Arduino

MeetAndroid.h library

http://code.google.com/p/amarino/source/browse/trunk/arduino_library/MeetAndroid/MeetAndroid.h?r=14

helps establish the Bluetooth connection between the Arduino and the Android device

Time.h library

http://playground.arduino.cc/Code/time

allows us to get the current time reading for the dog bowl updates

Android

Amarino libraries

http://www.amarino-toolkit.net

code that allows Android to establish a Bluetooth connection with the Arduino

SensorGraph

http://www.amarino-toolkit.net/index.php/download

part of the Amarino library that graphs real-time data collected via Bluetooth

GraphView

https://github.com/jjoe64/GraphView

a library that is used by the Sensor Graph code in the Amarino library

Date Format Library

http://developer.android.com/reference/java/text/DateFormat.html

a library that allows the date printed on the screen to be formatted

Date library

http://developer.android.com/reference/java/util/Date.html

allows the date to be read in as an object, and then manipulated easily

Tutorial for Sensor Graph

http://www.bygriz.com/portfolio/sensor-graph/#more-1344

Tutorial for Android Beginners

http://developer.android.com/guide/topics/ui/controls.html

Presentation Information