Group 17-EyeWrist

Evan Strasnick, Joseph Bolling, Jacob Simon, and Xin Yang Yak

Evan organized and conducted the interviews for the assignment, brainstormed ideas, and wrote the descriptions of our three tasks.

Joseph helped conduct interviews, researched Bluetooth and RFID as tech possibilities, and worked on the task analysis questions.

Xin Yang took detailed notes during interviews, brainstormed ideas, wrote the summaries of the interviews, and helped write the task analysis questions.

Jacob brainstormed ideas, helped take notes during interviews, wrote the description of the idea, and drew the storyboards and diagrams.

Problem and solution overview

The blind and visually disabled face unique challenges when navigating. Without visual information, simply staying oriented to the environment enough to walk in a straight line can be challenging. Many blind people choose to use a cane when walking to avoid obstacles and follow straight features such as walls or sidewalk edges, but this limits their ability to use both hands for other tasks, such as carrying bags and interacting with the environment. When navigating in an unknown environment, the visually impaired are even further limited by the need to hold an audible GPS system with their second hand. Our device will integrate the GPS interface and the cane into a single item, freeing a hand when navigating. When the user is not actively navigating by GPS, the compass in our device will help them maintain their orientation and sense of direction. All of this will be achieved using an intuitive touch/haptic interface that will allow the blind to navigate discreetly and effectively.

Users:

We began our project hoping to improve the quality of life of the visually impaired, and thus our primary user group is the blind. This group provides great opportunities for our design purposes for a number of reasons: first, as discussed in class, “standard” technologies tend to interface by and large in a visual way, and therefore working with the blind encourages exploration of new modalities of interaction. In addition, the blind endure a series of problems of which the majority of the sighted population (ourselves included) are not even aware. This motivated us to attempt to identify and hopefully solve these problems to noticeably improve the lives of the visually impaired.

Blind users are difficult to encounter around the Princeton area, but we did not want to compromise the quality of our contextual inquiry by observing users who were not actually familiar with the difficulties of visual impairment. We therefore contacted the New Jersey Commission for the Blind and Visually Impaired and used their listserv to get in contact with various users. As we waited for IRB approval to actually interview these users, we gathered background information by watching YouTube videos of blind people and the strategies that they have adopted in order to navigate the world safely and autonomously. We taught ourselves about the various pros and cons of the traditional cane, and practiced the actual techniques that the blind themselves learn to navigate. This alone provided dozens of insights without which we could not have hoped to understand the tasks we are addressing. (One such video can be found here: http://www.youtube.com/watch?v=VV9XFzKo1aE)

Finally, after approval was given, we arranged interviews with three very promising users. All were themselves blind – two born without vision and one who lost vision later in life – and each not only faced the challenges of navigation in their own lives, but also had some interest or occupation in assisting other blind people with their autonomy. Their familiarity with technology varied from slight to expert. Because of the difficulties the users faced in traveling and their distances from campus, we were limited to phone interviews.

Our first user, blind since birth, teaches other visually impaired people how to use assistive technologies and basic Microsoft applications. He had a remarkable knowledge of existing technologies in the area of navigation, and was able to point us in many directions. His familiarity with other users’ ability and desire to adopt new technologies was invaluable in guiding our designs.

Our second user, whose decline in vision began in childhood, is the head of a foundation which advocates for the rights of the blind and helps the visually impaired learn to become more autonomous. While her technological knowledge was limited, she was able to teach us a great deal about the various ins and outs of cane travel, and which problems remain to be solved. She was a musician prior to losing her sight, and knew a great deal about dealing with the loss of certain capabilities through inventive solutions and a positive attitude.

Finally, our third user is directly involved with the Commission for the Blind and Visually Impaired, and works in developing and adapting technologies for blind users. He already had a wealth of ideas regarding areas of need in blind technologies, and, with his numerous connections to users with visual impairment, understood which features would be most needed in solving various tasks. In addition to his day job, he takes part in triathlons, refusing to believe that there is any opportunity in life which the blind are unable to enjoy.

The CI Interview

All of our CI interviews took place over the phone, as none of our interviewees lived near campus or could travel easily. Prior to our interviews, we watched an instructional video on cane travel to better understand its difficulties, and identified navigation and tasks requiring the use of both hands as areas that we could potentially improve. Based on what we learned, we asked our users about general difficulties for the visually impaired, problems associated with cane travel and navigation, and how they would like to interact with their devices.

Our interviewees all indicated that indoor navigation is more difficult than outdoor navigation, as the GPS and iPhone have solved most of their outdoor navigation problems. Being blind also means having one less hand free, since one hand would almost always be holding the cane. Our interviewees also emphasized the importance of being able to hear ambient sound. The fact that all our interviewees are involved with teaching other blind users (through technology or otherwise) may have contributed to these similarities in their responses – one of our interviewees mentioned that people over the age of 50 tend to have more difficulty coping with going blind because of their reluctance to use new technologies.

There were also some differences between the issues the interviewees brought up. Our first user was particularly interested in an iPhone-app-based solution for indoor navigation. He brought up how the smartphone had drastically lowered the cost of assistive technologies for blind people. Our second interviewee felt that many problems associated with navigation can be overcome with better cane travel and more confidence; the problem she raised instead was reading sheet music. Our third user suggested a device to help blind swimmers swim straight. These differences could be due to the differences in the interviewees’ backgrounds – for example, the second interviewee has a music background, while the third interviewee takes part in triathlons.

Task Analysis

1. Who is going to use the system?

We are designing our product with the blind and visually impaired as our primary user group. The vast majority of computing interfaces today operate primarily in the visual data stream, making them effectively useless to the blind and visually impaired. We are hoping to improve the quality of life of those who have difficulties navigating their physical environment by developing a simple, unobtrusive, but effective touch-based navigation interface. These users will likely already have skills in navigating using haptic exploration (i.e. working with a cane), but they may not have much experience with the types of technology that we will be presenting to them. They can be of any age or education, and have any level of comfort with technology, but they share in common the fact that they wish to reduce the stress, inconvenience, and stigma involved with navigating their day-to-day space and thereby increase autonomy.

2. What tasks do they now perform?

The blind and visually impaired face numerous difficulties in performing tasks that seeing individuals might find trivial. These include:

-Maintaining a sense of direction while walking

-Navigating through unfamiliar environments

-Walking while carrying bags or other items

Notably, it is impossible for seeing individuals like us to understand the wide range of tasks that we take for granted. Typically these tasks are solved using:

-A cane, with which the user may or may not already have an established “relationship”

-Audio-based dedicated GPS devices

-Navigation applications for smartphones, developed for use by the blind or used along with some form of access software, such as a screen reader

-Seeing-eye aides (e.g. dogs)

-Caretakers

-Friends/Relatives

-Strangers

The current solutions used in completing the tasks all require the use of more resources, in terms of auditory attention, hands, and help from others, than we believe are necessary.

3. What tasks are desired?

We wish to introduce a more intuitive, less obtrusive system that will allow the blind to navigate quickly and confidently. Namely, users should be able to:

-Traverse an area more safely and more quickly than before

-Maintain a sense of direction at all times while walking

-Navigate unfamiliar territory using only one hand to interact with navigation aids

-Navigate unfamiliar territory while maintaining auditory focus on the environment, and not a GPS device

-Feel more confident in their ability to get around, allowing them a greater freedom to travel about their world

4. How are the tasks learned?

The blind and visually impaired spend years learning and practicing means of navigating and handling daily tasks without vision. These skills can be developed from birth for the congenitally blind, or can be taught by experts who specialize in providing services and teaching for the visually impaired. In the case of phone applications and handheld GPS devices, a friend or family member may help the user learn to interact with the technology. For this reason, it will be especially important that the users themselves guide the design of our system.

5. Where are the tasks performed?

The tasks are performed quite literally everywhere. There is a distinction between navigating familiar environments, where the user has been before, and navigating new spaces. The latter task, which may be performed in busy streets, in stores, in schools, or when traveling, involves a much greater degree of uncertainty, stress, and potential danger. There is also a distinction between indoor and outdoor navigation. Outside, GPS technology can be used to help the blind locate themselves and navigate. Indoors, where GPS is less useful, navigation becomes a much more difficult task. In both environments, the blind are frequently forced to request help from sighted bystanders, which can be embarrassing or inconvenient.

6. What’s the relationship between user & data?

Our current designs do not involve storing or handling user data per se. As we proceed through the various phases of user testing, however, we will be recording data about our participants, and we believe that the visually impaired have a particular right to privacy because their results may be stressful or embarrassing for them. For this reason, we hope to learn from specialists the proper way to interact with our users and record data from testing.

7. What other tools does the user have?

The primary tool which serves to make navigation possible is the cane. This is an important and familiar tool to the user, and has the natural advantages of intuitive use and immediate haptic response. However, users must dedicate a hand to its use and transportation. Another tool that they frequently use is the GPS functionality of the smartphone – given the current location and destination, the user can receive turn-by-turn auditory directions. This has the advantage of allowing the user to navigate to an unfamiliar destination without asking for directions. The disadvantage is that the GPS is not always reliable, and does not provide directions indoors. The user also needs a hand to hold the smartphone. Users might also rely on aides, whether human or animal, although this further decreases the user’s autonomy.

8. How do users communicate with each other?

Barring any other deficits in hearing, the blind are able to communicate in person through normal speech; however, they are unable to detect the various visual cues and nuances that accompany normal speech. The blind have a number of special accessibility modifications to technology (text-to-speech, etc.) that increasingly allow the use of the same communications devices (smartphones, computers) that seeing individuals employ.

9. How often are the tasks performed?

Blind people navigate every day. In day-to-day scenarios, the cane allows users to navigate safely and confidently in familiar surroundings. Blind people navigate to unfamiliar places less often, but doing so involves more uncertainty and is more intimidating, so this is a problem worth solving.

10. What are the time constraints on the tasks?

Navigation takes much longer without the use of vision. The specific time constraints on the task vary with where and why someone is travelling, but are often based on the times associated with events or meetings that the navigator wishes to attend. We hope to make the process of communicating with a navigation system much more efficient, in terms of time and mental energy required. Ideally, we believe our system can allow a blind user to navigate as quickly as a sighted person equipped with a standard GPS system, by eliminating the delay associated with conveying information solely through the audio channel.

11. What happens when things go wrong?

The hazards of wrongly or unsafely navigating space are not merely inconvenient; they are potentially life-threatening. Safety is the number one consideration that limits the ability of the user to be confident in their navigational skills. Outside of the safety concerns associated with being unable to navigate smoothly and efficiently, visually impaired people who become lost or disoriented often have to rely on the kindness of strangers to point them to their correct destination. This can be embarrassing and stressful, as the user loses his or her autonomy to a complete stranger. Not only this, but even when things “go right,” and users manage to get from point A to point B, the psychological stress of staying found and oriented without visual information makes travel unpleasant.

Specific Tasks:

1) Walking home from a shopping trip: This task is moderately difficult with existing means, but will likely be much easier and safer with our proposed technology. We describe a scenario in which the user begins at a mall or grocery store and walks a potentially unfamiliar path which involves staying on sidewalks, crossing a few streets, and avoiding obstacles such as other pedestrians while staying on route to their destination by identifying particular landmarks. This must be accomplished all while carrying the purchased items and possibly manipulating a GPS device.

Currently, such a task involves utilizing a cane or similar tool to identify obstacles and landmarks. While basic cane travel is an art that has been developed over generations and has many invaluable merits, it also carries several drawbacks. Firstly, the cane must be carried around constantly and kept track of throughout the day, limiting the hands that the user has to carry other items (or interact with objects or people). If the user is utilizing a GPS device to guide them to their destination (as most blind people have now become accustomed to doing with their smartphones), they must use their other hand to manipulate the device. Thus, unless the user fumbles to carry everything at once, they will have to stop and set things down simply to operate their GPS. Conversely, because the cane can only help guide the user relative to their mentally tracked position, a user who has no GPS device and loses track of their cardinal orientation has few means by which to reorient themselves without asking for help.

With our proposed system, the user will no longer need to worry about tracking their cardinal orientation, because the system can immediately point them in the right direction through intuitive haptic feedback. Because the cane itself will integrate with GPS technology via Bluetooth, the user will not have to manipulate their phone in order to query directions or be guided along their route. This frees up a hand for the user to carry their items as needed.
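To make the idea of "pointing the user in the right direction" concrete, here is a minimal sketch of the arithmetic involved. It is only an illustration under our own assumptions, not a description of the final device: we assume the phone supplies the user's latitude and longitude and the next waypoint, and that the cane's compass supplies a heading in degrees clockwise from north. The function names `bearing_to` and `turn_angle` are hypothetical.

```python
import math

def bearing_to(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 to point 2,
    in degrees clockwise from north (0 <= result < 360)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlon))
    return math.degrees(math.atan2(y, x)) % 360.0

def turn_angle(heading, target_bearing):
    """Signed turn in degrees needed to face the target:
    negative means turn left, positive means turn right.
    The result is wrapped into the range [-180, 180)."""
    return (target_bearing - heading + 180.0) % 360.0 - 180.0

# Hypothetical example: user faces 350 degrees, waypoint lies due east.
heading = 350.0
target = bearing_to(0.0, 0.0, 0.0, 1.0)
print(turn_angle(heading, target))
```

The wrap-around in `turn_angle` matters because compass headings are circular: a user facing 350 degrees who needs to face 10 degrees should be told to turn slightly right, not almost all the way around to the left.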

2) Following a route in a noisy area: This is another fairly difficult task which will become significantly easier using our system. An example of this task is getting from one place to another in a busy city area such as Manhattan. Because the user must receive their navigation directions via audible commands, the user has trouble navigating if they cannot hear those commands. Currently, aside from straining to hear, the main option for a blind person in this situation is to use headphones. However, most blind users prefer not to use headphones, as doing so diminishes their ability to hear environmental cues, on which they heavily rely to navigate.

Our system solves this problem by relaying directional commands in a tactile manner, allowing the person to clearly receive guidance even in the noisiest of environments. The need for headphones is likewise eliminated, so a GPS message never interrupts the person’s perception of environmental cues. Guidance is continuous and silent, allowing the user to constantly know where they are headed and how to get there.

3) Navigating an unfamiliar indoor space: Despite the preconceptions we might have about the outdoors being hazardous, this task is actually the most difficult of all. Because most GPS technologies do not function well indoors, our interviewees agreed that unfamiliar indoor spaces are the most intimidating and difficult to navigate.

With current means, blind people typically must ask for help in locating features of an indoor space (the door leading somewhere, the water fountain, etc.), and build a mental map of where everything is relative to themselves and the entrance of the building. The cane can be used to tap alongside walls (“shorelining”) or to identify basic object features (e.g. to locate a doorknob). Unfortunately, if the person loses their orientation even momentarily, their previous sense of location is entirely lost, and help must again be sought. For this reason, any indoor space from a bathroom to a ballroom poses the threat of getting “lost.”

Our system uses a built-in compass to constantly keep the user cardinally oriented, or oriented in the direction of a destination if they so choose. As a result, a user can build their mental schema relative to absolute directions and never worry about losing track of, e.g., where North is located. The user need not draw attention to themselves through audible means, carry a separate indoor device such as a compass, or manipulate their smartphone with their only free hand. Most importantly, the user’s autonomy is not limited, because the directional functionality integrated into their cane gives them the ability to navigate these otherwise intimidating spaces on their own.
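One way the compass reading might be turned into a vibration cue is sketched below. This is a rough illustration only; we have not settled on how vibration will be encoded, so the function name `haptic_cue`, the 10-degree tolerance, and the linear strength scale are all assumptions made for the example. The target defaults to 0 degrees (North) but could equally be the bearing of a chosen destination.

```python
def haptic_cue(heading, target=0.0, tolerance=10.0):
    """Map a compass heading to a hypothetical vibration cue.

    heading and target are in degrees clockwise from north.
    Returns (side, strength), where side is 'left', 'right' or
    'ahead', and strength is a value between 0.0 and 1.0.
    """
    # Signed error wrapped into [-180, 180): positive means the
    # target lies to the user's right, negative to the left.
    error = (target - heading + 180.0) % 360.0 - 180.0
    if abs(error) <= tolerance:
        # Close enough to the target direction: no correction cue.
        return ("ahead", 0.0)
    side = "right" if error > 0 else "left"
    # Stronger vibration the further the user has drifted.
    strength = min(abs(error) / 180.0, 1.0)
    return (side, strength)

# A user facing due east (90 degrees) who wants to face North
# would be cued to turn left at half strength.
print(haptic_cue(90.0))
```

A tolerance band keeps the cane from buzzing continuously while the user is walking roughly the right way, which matters given how strongly our interviewees stressed not being distracted from their surroundings.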

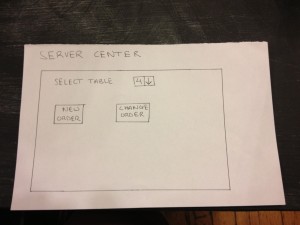

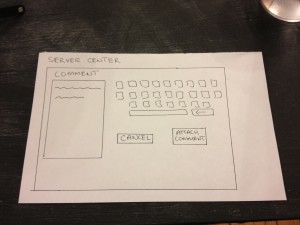

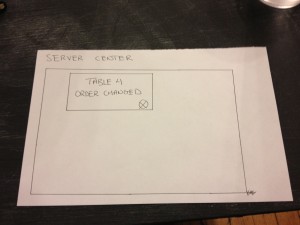

Interface Design

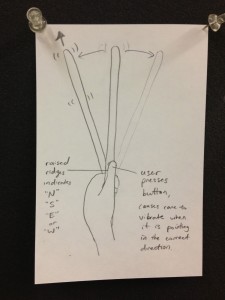

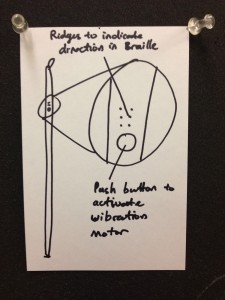

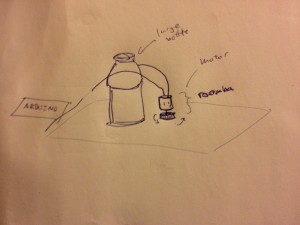

Description: It was apparent from our interviews that our device should not impede users’ ability to receive tactile and auditory feedback from their environment. By augmenting the cane with haptic feedback and directional intelligence, we hope to create a dramatically improved interface for navigation while preserving those aspects that have become customary for the blind. Specifically, the “Bluecane” will be able to intelligently identify cardinal directions and orient the user through vibration feedback. Bluetooth connectivity will enable the cane to communicate with the user’s existing Android or iOS device and receive information about the user’s destination. A series of multipurpose braille-like ridges could communicate any contextually relevant information, including simple navigational directions and other path-finding help. The greatest advantage of an improved cane is that, unlike an audible navigation system, it would not disrupt or distract the user, and it would give the user a free hand while walking.

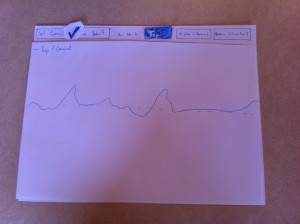

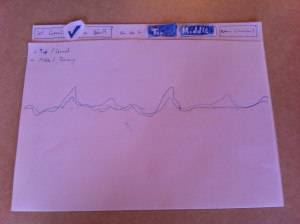

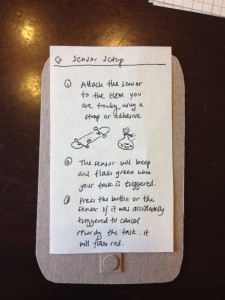

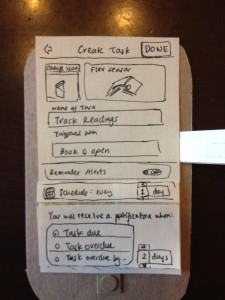

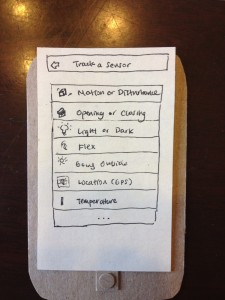

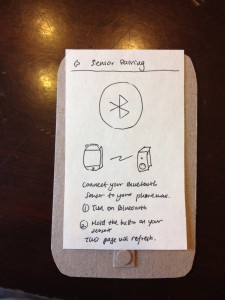

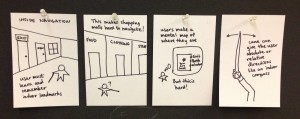

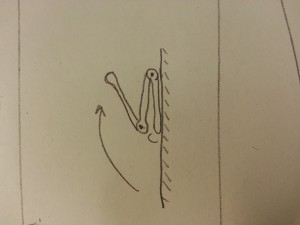

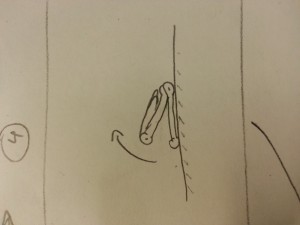

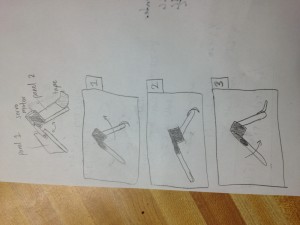

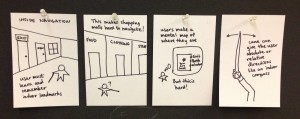

Storyboard for Task 1

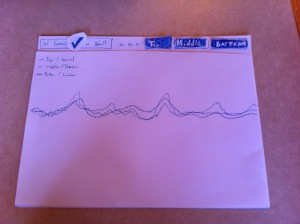

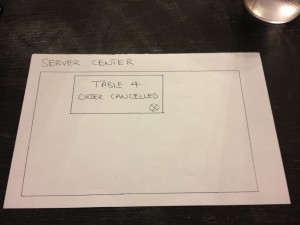

Storyboard for Task 2

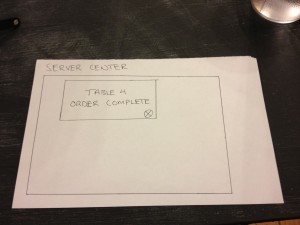

Storyboard for Task 3

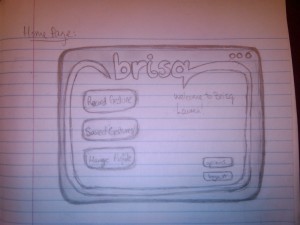

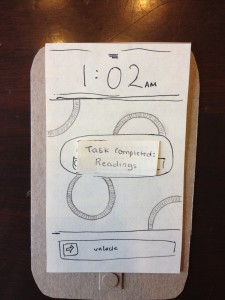

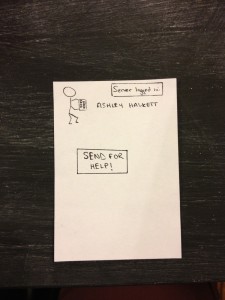

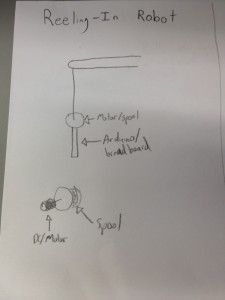

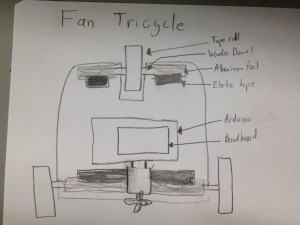

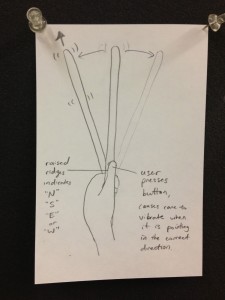

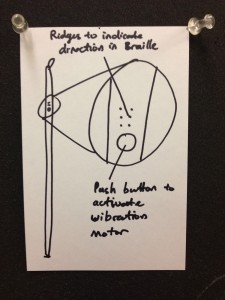

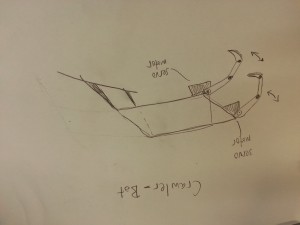

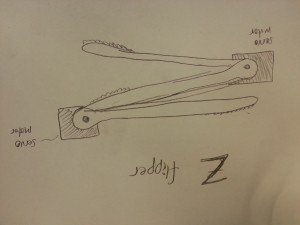

Design sketch

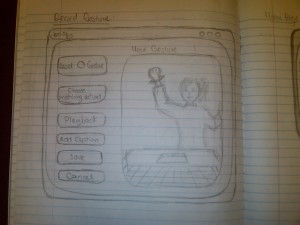

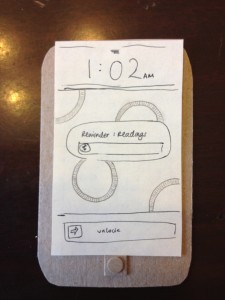

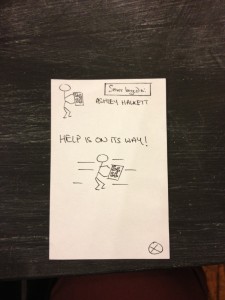

Design sketch

![solo_bracelet[1]](https://blogs.princeton.edu/humancomputerinterface/wp-content/uploads/sites/127/2013/03/solo_bracelet1-300x225.jpg)

![on_hand_bracelet[1]](https://blogs.princeton.edu/humancomputerinterface/wp-content/uploads/sites/127/2013/03/on_hand_bracelet1-300x225.jpg)