Today I’m going to talk about a variant of Mirror Descent invented by Nemirovski in 2004 (see this paper). This new algorithm is called Mirror Prox and it is designed to minimize a smooth convex function ![]() over a compact convex set

over a compact convex set ![]() . We assume that the smoothness is measured in some arbitrary norm

. We assume that the smoothness is measured in some arbitrary norm ![]() , precisely we assume that

, precisely we assume that ![]() .

.

Let ![]() be a mirror map on

be a mirror map on ![]() (see this post for a definition) and let

(see this post for a definition) and let ![]() . Mirror Prox is described by the following equations:

. Mirror Prox is described by the following equations:

In words the algorithm first makes a step of Mirror Descent to go from ![]() to

to ![]() , and then it makes a similar step to obtain

, and then it makes a similar step to obtain ![]() , starting again from

, starting again from ![]() but this time using the gradient of

but this time using the gradient of ![]() evaluated at

evaluated at ![]() (instead of

(instead of ![]() ). The following result justifies the procedure.

). The following result justifies the procedure.

Theorem. Let

be a mirror map

-strongly convex on

with respect to

. Let

and

be convex and

-smooth w.r.t.

. Then Mirror Prox with

satisfies (with

)

Proof: Let ![]() . We write

. We write

We will now bound separately these three terms. For the first one we have (by definition of the method, first-order optimality condition of the projection, and the definition of the Bregman divergence)

For the second term using the same properties than above and the strong-convexity of the mirror map one has

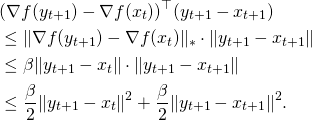

Finally for the last term, using H\”older’s inequality, ![]() -smoothness, and the fact

-smoothness, and the fact ![]() one has

one has

Thus summing up these three terms and using that ![]() one gets

one gets

![]()

and this inequality is also true for ![]() (see the bound for the first term). The proof is then concluded as usual.

(see the bound for the first term). The proof is then concluded as usual.

![]()

Mirror Prox for Saddle Points

Following the generalization of Mirror Descent for Saddle Points (MD-SP, see this post) one can propose a version of Mirror Prox for Saddle Points (MP-SP). In fact the importance of Mirror Prox lies primarily in its application to compute the saddle point of a smooth convex-convave function. Indeed, the key observation is that many non-smooth convex functions admit a smooth saddle-point representation (see examples below). With such a representation one can then use MP-SP to obtain a rate of convergence of order ![]() , despite the fact that the original function is non-smooth! Recall that we proved that for non-smooth convex functions the optimal black-box rate is of order

, despite the fact that the original function is non-smooth! Recall that we proved that for non-smooth convex functions the optimal black-box rate is of order ![]() . However MP-SP is not a black-box method in the sense that it makes use of the structure of the function (by representing it as a saddle-point). Nonetheless MP-SP still enjoys the nice time-complexity property of black-box methods since it is a first-order method. It is a win-win situation!

. However MP-SP is not a black-box method in the sense that it makes use of the structure of the function (by representing it as a saddle-point). Nonetheless MP-SP still enjoys the nice time-complexity property of black-box methods since it is a first-order method. It is a win-win situation!

Let us now describe more precisely MP-SP. Let ![]() be a compact and convex set endowed with some norm

be a compact and convex set endowed with some norm ![]() and a mirror map

and a mirror map ![]() (defined on

(defined on ![]() ), and let

), and let ![]() be a compact and convex set endowed with some norm

be a compact and convex set endowed with some norm ![]() and a mirror map

and a mirror map ![]() (defined on

(defined on ![]() ). Let

). Let ![]() be a continuously differentiable function such that

be a continuously differentiable function such that ![]() is convex and

is convex and ![]() is concave. We assume that

is concave. We assume that ![]() is

is ![]() -smooth in the sense that:

-smooth in the sense that:

We consider the mirror map ![]() on

on ![]() (defined on

(defined on ![]() ). Let

). Let ![]() . Then for

. Then for ![]() , let

, let ![]() and

and ![]() be defined by the following equations (with

be defined by the following equations (with ![]() ):

):

Theorem. Assume that

is

-strongly convex on

with respect to

and let

(with similar definition for

). Let

. Then SP Mirror Descent with

satisfies

Proof: First we endow ![]() with the norm defined by

with the norm defined by

![]()

It is immediate that ![]() is

is ![]() -strongly convex with respect to

-strongly convex with respect to ![]() on

on ![]() . Furthermore one can easily check that

. Furthermore one can easily check that

![]()

and thus the vector fields ![]() used in MP-SP satisfy:

used in MP-SP satisfy:

![]()

It suffices now to use the exact same proof than for the standard Mirror Prox starting with:

![]()

Smooth saddle-point representation of non-smooth functions

Assume that the function ![]() to be optimized is of the form:

to be optimized is of the form:

![]()

where the functions ![]() are convex,

are convex, ![]() -smooth and

-smooth and ![]() -Lipschitz in some norm

-Lipschitz in some norm ![]() . Minimizing

. Minimizing ![]() over

over ![]() is equivalent to finding a saddle point over

is equivalent to finding a saddle point over ![]() since one has:

since one has:

![]()

Thus we are looking for the saddle point of ![]() which is convex in

which is convex in ![]() , and concave (in fact linear!) in

, and concave (in fact linear!) in ![]() . Furthermore

. Furthermore ![]() is smooth and it is an easy exercise to verify that in this case one has

is smooth and it is an easy exercise to verify that in this case one has ![]() ,

, ![]() ,

, ![]() , and

, and ![]() . Thus a rate of convergence (to the minimizer of

. Thus a rate of convergence (to the minimizer of ![]() ) of order

) of order ![]() can be obtained by applying MP-SP with appropriate mirror maps (in particular the update in the

can be obtained by applying MP-SP with appropriate mirror maps (in particular the update in the ![]() -space has to be done with the negative entropy). Note that is rate is particularly striking since the oracle lower bound of

-space has to be done with the negative entropy). Note that is rate is particularly striking since the oracle lower bound of ![]() was proved precisely for this type of function! Here we are able to get around this lower bound by making use of the structure of the function. Remark also that if you think about what this algorithm is doing to optimize the function

was proved precisely for this type of function! Here we are able to get around this lower bound by making use of the structure of the function. Remark also that if you think about what this algorithm is doing to optimize the function ![]() you get a very very elegant procedure for which it is highly non-trivial a priori to prove something about its rate of convergence.

you get a very very elegant procedure for which it is highly non-trivial a priori to prove something about its rate of convergence.

Finally note that if ![]() and

and ![]() are of different order of magnitudes one can obtain a better result by considering the mirror map

are of different order of magnitudes one can obtain a better result by considering the mirror map ![]() and optimizing over the values of

and optimizing over the values of ![]() and

and ![]() .

.

Another interesting example arises when ![]() with

with ![]() ,

, ![]() and

and ![]() . With the

. With the ![]() -norm on both spaces it is easy to see that

-norm on both spaces it is easy to see that ![]() (the entrywise max norm), and of course

(the entrywise max norm), and of course ![]() . Thus we obtain here a convergence rate of order

. Thus we obtain here a convergence rate of order ![]() . Again if

. Again if ![]() and

and ![]() are of different order of magnitudes one can improve the result to obtain a rate of order

are of different order of magnitudes one can improve the result to obtain a rate of order ![]() by considering the mirror map

by considering the mirror map ![]() and optimizing over the values of

and optimizing over the values of ![]() and

and ![]() .

.

Many more interesting examples can be derived and we refer the reader to the original paper of Nemirovski as well as this book chapter by Juditsky and Nemirovski.

![Rendered by QuickLaTeX.com \[f\bigg(\frac{1}{t} \sum_{s=1}^t y_s \bigg) - f(x^*) \leq \frac{\beta R^2}{\kappa t} .\]](https://web.archive.org/web/20210425194914im_/https://i0.wp.com/blogs.princeton.edu/imabandit/wp-content/ql-cache/quicklatex.com-b757fd6564a43e1c7be59b84a8840481_l3.png?resize=225%2C53&ssl=1)

![Rendered by QuickLaTeX.com \[\max_{y \in \mathcal{Y}} \phi\left( \frac1{t} \sum_{s=1}^t u_s,y \right) - \min_{x \in \mathcal{X}} \phi\left(x, \frac1{t} \sum_{s=1}^t v_s \right) \leq \frac{2 \beta (R^2_{\mathcal{X}} + R^2_{\mathcal{X}})}{t}.\]](https://web.archive.org/web/20210425194914im_/https://i0.wp.com/blogs.princeton.edu/imabandit/wp-content/ql-cache/quicklatex.com-2fe12cc382b0a40371b5c9d7ec97d2bd_l3.png?resize=469%2C54&ssl=1)

By Alexander Gasnikov (MIPT) January 9, 2014 - 3:01 pm

The same results can be obtained by the Nesterov’s smoothing technique

http://luthuli.cs.uiuc.edu/~daf/courses/Optimization/MRFpapers/nesterov05.pdf

By Sebastien Bubeck March 15, 2014 - 11:51 am

Yes I agree! However it is not clear to me under what circumstances Nesterov’s smoothing should be preferred to Mirror Prox. Do you have any intuition about this?