A Mind at Play: How Claude Shannon Invented the Information Age by Jimmy Soni and Rob Goodman

Advance praise from the Amazon website:

“Claude Shannon wrote ‘the Magna Carta of the Information Age’ and conceived of the basic concept underlying all digital computers. Jimmy Soni and Rob Goodman offer a long overdue, insightful, and humane portrait of this eccentric and towering genius.” (Walter Isaacson, author of Steve Jobs, The Innovators, and Einstein)

“An exceptionally elegant and authoritative portrait of a man of few words but many big ideas. Soni and Goodman’s elucidations of Claude Shannon’s theories are gems of conciseness and clarity, and their case for placing him in the same pantheon as Turing and von Neumann is compelling.” (Sylvia Nasar, author of A Beautiful Mind, winner of the National Book Critics Circle Award)

“Jimmy Soni and Rob Goodman have written a fascinating, readable, and necessary biography of a true American genius. This is the book that finally explains Claude Shannon’s character and career as well as the context of his extraordinary life and times.” (Jon Gertner, author of The Idea Factory: Bell Labs and the Great Age of American Innovation)

“An avid biography full of freewheeling curiosity and fun. It’s a pleasure getting to know you, Claude Shannon!” (Siobhan Roberts, author of Genius at Play: The Curious Mind of John Horton Conway)

“Shannon was to information and communication what Newton was to physics. By following his curiosity through the playground of science, he discovered mathematical laws that govern our digital age. The Shannon I worked with comes alive in these pages.” (Edward O. Thorp, author of A Man for All Markets and Beat the Dealer)

“At last a biography of a man who shaped the Information Age we live in, and a thinker who combined the playfulness of Richard Feynman with the genius of Albert Einstein. For anyone interested in living both a playful and a thoughtful life, there is no better model than Claude Shannon—and no better writing team than Soni and Goodman to have written the book on it.” (Ryan Holiday, bestselling author of The Daily Stoic and The Obstacle Is the Way)

“A brilliant treatment of the life of Claude Shannon, one of the 20th century’s most remarkable scientists in the field of information technology. This giant of a man launched the digital world we now inhabit, but his is not the household name it deserves to be. Jimmy Soni and Rob Goodman have corrected this with their superb new book presenting Shannon’s amazing personal and professional life.” (Professor Leonard Kleinrock, Distinguished Professor of Computer Science, UCLA, and winner, 2007 National Medal of Science)

“We are familiar with the bright young stars who brought us the web, Google and Facebook, but this engaging book demystifies the digital communications revolution and shows how it really began! In telling the story of Claude Shannon, Jimmy Soni and Rob Goodman have given a fascinating introduction to the ideas and the people who made our digital age possible.” (Robyn Arianrhod, author of Seduced by Logic: Émilie Du Châtelet, Mary Somerville and the Newtonian Revolution)

“In this fine biography of Claude Shannon, Soni and Goodman make accessible the origins of digital communications while revealing how engineers think deeply not only about things but through things; it was through tinkering that Shannon was able to bring us the modern digital world.” (W. Bernard Carlson, Professor and Chair, Engineering & Society Department, University of Virginia)

“The biography of one of the towering geniuses of the 20th century we have been awaiting for decades. In this veritable labor of love by Jimmy Soni and Rob Goodman, one has on offer an enthralling and beautifully rendered portrait of Claude Shannon, the mathematician, the engineer, the inventor, the tinkerer, and, above all, the enigmatic man who became the intellectual father of the vital lifeblood of our age: information.” (Professor Sergio Verdu, Eugene Higgins Professor of Electrical Engineering at Princeton University)

“The fact that there has never been a comprehensive biography of Claude Shannon, ‘The Father of Information Theory,’ has seemed a particularly egregious oversight as the world has hurtled further and further into the Information Age. Jimmy Soni and Rob Goodman have finally rectified this injustice. They have woven comprehensive research into a compelling and personal narrative, accessible to non-specialists but also of interest to people in the field for whom Shannon is an almost mythical figure. A Mind at Play is an insightful and moving portrait of the very original genius whose work affects nearly every aspect of the modern age.” (Dr. Mark Levinson, Director, Particle Fever)

“A Mind at Play bubbles over with energy and verve and insight. This is biography as it should be, but seldom is.” (Edward Dolnick, author of The Clockwork Universe)

“A welcome and inspiring account of a largely unsung hero—unsung because, the authors suggest, he accomplished something so fundamental that it’s difficult to imagine a world without it.” (Kirkus Reviews)

“A key figure in the development of digital technology has his achievements, if not his personality, burnished in this enlightening biography. . . . The authors’ rundown of the science behind these advances, probing everything from the structure of language to the transatlantic telegraph, is lucid and fascinating. . . . Soni and Goodman open an engrossing window onto what a mind hard at work can do.” (Publishers Weekly)

“A Mind at Play takes its readers through the extraordinary life of someone so deserving of this well-researched and smooth-reading biography. Read it. Lose yourself in the pages. For just a few worthwhile hours, you will become a shadow following Shannon’s life and playful mind.” (Joseph Mazur, author of Fluke: The Math and Myth of Coincidences)

“Terrific. A Mind at Play is fluidly written, thoroughly researched, and important. It brings to our attention the fascinating life of Claude Shannon, considered by his colleagues to be the Einstein of our information age. It is a story we should all know, and it is a read that you all will enjoy.” (Martin J. Sherwin, coauthor (with Kai Bird) of the Pulitzer Prize-winning biography American Prometheus: The Triumph and Tragedy of J. Robert Oppenheimer)

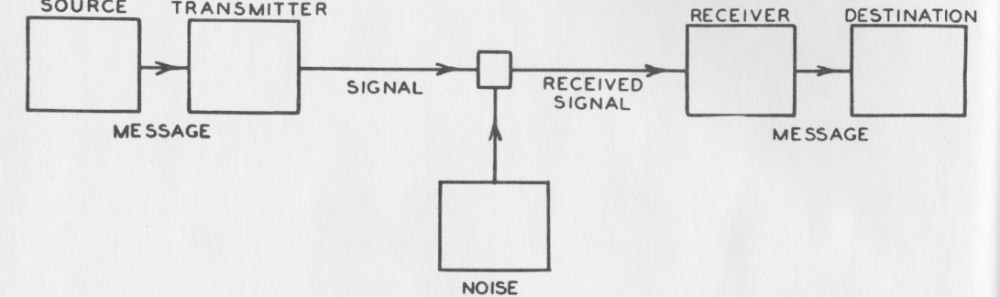

1953 film “Communications Primer”

by Charles Eames and Ray Eames

https://archive.org/details/communications_primer

David Donoho’s “Fifty Years of Data Science”, Sep. 2015

Claude Shannon’s “A Rubric on Rubik’s Cubics”

http://blogs.scientificamerican.com/cross-check/poetic-masterpiece-of-claude-shannon-father-of-information-theory-published-for-the-first-time/

New: The Princeton Companion to Applied Mathematics

http://press.princeton.edu/titles/10592.html

Richard W. Hamming: “You and Your Research”

Talk at Bell Communications Research, March 1986

Alexander Holevo, recipient of the 2016 Claude E. Shannon Award

https://en.wikipedia.org/wiki/Alexander_Holevo

Panel on the history of information theory, Jerusalem, April 30, 2015

http://itw2015.eew.technion.ac.il/photos/videos/

Postdoc openings at Tel Aviv University

http://www.itsoc.org/news-events/recent-news/postdoctoral-fellowships-in-information-theory-and-interactive-communications-at-tel-aviv-university-israel-1