In this blog post, I will detail how I set out to design a way to make use of the 10-minute gaps that crop up here and there in the average Princeton student's day.

Author Archives: Kiran Vodrahalli

P1: Runway

Group Name: CAKE

We are not a lie.

Team members:

Connie Wan (cwan), Angela Dai (adai), Kiran Vodrahalli (knv), Edward Zhang (edwardz)

Brainstorming List:

- 3D modelling with stereoscopic output overlapping with gestural input space for natural user interaction (perhaps a Maze game where you’re inside a cube)

- Enhanced music stand for rehearsal and concerts, with gestural/automatic page turning, automatically jumping to sections based on conductor’s decisions, and easy part markup

- Natural navigation in virtual worlds/virtual reality using gestural input.

- Solve the problem of limited screen real estate by projecting a virtual desktop onto every surface in the room, which you can interact with using pen, hands, and gesture.

- 3D data exploration with a visor: you put the visor on your head and see streams of data everywhere, like you’re in the Matrix. Just kidding; you should be able to interact with graphs with your hands, move them around, set axes differently, see different kinds of graphs for the same data, see Google Maps with 3D cities, manipulate molecular models, etc.

- Hands-free transportation for the lazy – Segway chair

- Make an enhanced web browser with a head-mounted display: instead of hidden tabs with no relationships, you can drag different pages around in the space surrounding you and organize them into your own mini-web (using gesture, of course).

- Modern laser tag: computers know where “portals” are, players carry gun-like thingies with cameras, and a shot you “fire” into one portal comes out of an associated portal to hit someone.

- Screenless navigation assistance in new environments using an audio and tactile interface, e.g. vibrating elements in clothing or gloves that warn of obstacles, or audio signals that get louder or softer as you face places of interest. Useful for the blind.

- Piano Tutor – projection onto actual piano keys that can light up keys or recognize when keys are played, synchronized with page turning.

- Living-room-aware computing: point at the “TV” and it turns on; the room knows where you are. We would use a model room and put hardware in the walls, chairs, etc. Simulate “The Living Room of 2040” or something like that.

- Smart food storage (refrigerator): texts you if it shuts off, keeps an updated shopping list, keeps track of when food goes bad, organizes meals and moves ingredients to the front of the fridge when you need to use them, and talks to your computer to get your search history (what food have you been searching for or looking at recently?), then compiles a list of ingredients and sends it to you so you can go get them.

- Smart food cooking (toaster, stove, microwave): control it from your phone or computer, or via speech; it cooks food the way you want it (learned over time), alerts you if something burns, and can be shut off remotely (say you leave the house and realize you left the stove on: you could shut it off with an app on your phone).

- Smart dishwasher: control it remotely; it automatically knows when to wash dishes and uses rotating water jets that sense dish shapes to make sure everything is hit. Could integrate it with your calendar.

- Kitchen sink that automatically or hands-free turns on and off depending on when it’s needed, precisely filling up containers.

- Smart closet and smart clothes hangers: keeps everything sorted for you, automatically sets out clothing for the day based on the weather (thicker clothing for cold days) and your calendar (a bathing suit for beach days), keeps your wardrobe updated online so friends can like your clothes, and maybe even suggests fashionable combos to impress everyone (this may sort of exist already?). It could also be helpful as a shopping app: you would automatically know whether you already own a certain type of clothing, so you don’t buy too much of the same thing.

- Smart mirror that allows you to virtually try on clothes (already exists?): a large screen with a camera that can zoom in on parts, bring up clothing images, etc. Post to Facebook/Twitter if you think you’re looking pretty good today, wink wink.

- Enhanced clothing, e.g. integrated music player and cellphone with speakers and microphone in hood, control system in the sleeves (combine with those t-shirts that have displays in the front).

- Smart desk in schools – automatically sense who you are, directly integrated with your student account at a university. Personalized instructions in class/lab

- Augmented window with touch and display capabilities – use to display widgets like weather, news, etc. Automatically raises and lowers blinds

- Smart lamp: could double as a projector (display internet pages on your desk, act as part of a tabletop desk UI, or double as a disco ball for room parties), and automatically turns off when you get in bed.

- Smart gym: dumbbells that keep track of your weight-lifting abilities and prompt you sarcastically (“do u evn lft”, “friends don’t let friends skip leg day”); all the devices talk to each other and give you suggestions about what to do next for an ideal workout.

- Smart bike: knows directions and your schedule, and is “automatic”, i.e. changes gears for you.

- Smart backpack: knows what’s inside and is connected to your calendar, so it knows your schedule and can alert you if you are missing a book or notebook you need for a class. It can also remind you to pick up packages and let you know if your water bottle is running low. You interact with it through an interface strapped on (or built into a strap); plug in an Android phone or iPhone to give the backpack computing power and access to your calendar. It could pop out stuff you need immediately, with an organizational system that lets you put stuff into only one compartment of the backpack.

- E-readers that track your gaze and turn pages automatically

- Smart bookshelf (like the one in Google NY, but better) that links to a site like shelfari or goodreads (maybe tie to online living room) and allows you to browse books that you might like; you can insert an e-reader or tablet where a book is “displayed” and it downloads that book for you to read

- Voice and camera on computer (like Jarvis). Framework that connects with your whole house and provides an interface to turn off the lights, make toast, open your garage, anything you might have to walk to do, you can do it from your computer/voice

- Smart toilet cubicle with a projected display and hands-free navigation: no more magazines/books, no more phones dropping in the toilet.

- Sassy oven: tell it what to bake and it talks back (maybe it tells you that you’re getting a bit chubby, so hold off on the cookies). The point is to have even more fun with baking and cooking in general. Good for lonely grandparents who don’t have anything else to do.

- Go-go-gadget arm – Quadrocopter that fetches faraway things for you.

- Sunburn warning: a hat with a timer that knows what the weather is like in your area (temperature sensor, maybe some sort of UV sensor based on smart glass, maybe it knows your skin color/type), calculates when you’d be exposed to too much sun, and notifies you by beeping 10 minutes beforehand.

- Device that senses if you are falling asleep in class and subtly wakes you up: something in your clothing that vibrates, or a device in your ear that makes a small beep straight into it.

- Gestural Video Editor – view video as rectangular prism, make operations such as cuts/transitions/timing control more natural than with mouse. You can stretch out a square prism to slow down the video, compress it to speed it up, color different parts with different music, slice the prism and rearrange it for scene editing, etc.

- Gestural Music Editor – can be either for notation/composition or for mixing/mastering. Use gesture contours to input notation with pitch, time and dynamics. Or make cuts/EQ/effects more natural with gestures instead of mouse+dialogs.

- Ultimate student: always keeps you working on something (uber productivity) based on how long tasks will take; it brings up appropriate tasks on the computer, pops out homework/problem sets from your binder, etc. (might be better as a desktop application?). It could have a printer built in: a new form factor for the “laptop”, a portable desk instead of a tablet (maybe it folds up).

- Advanced video conferencing – 3D images, room-sized conference room space, locational audio.

- Whisper/subvocalization translator (like in the Ender’s Game sequels): understands speech without you having to vocalize, for private conversations. A sensor on the throat or tongue detects vibrations and translates them to speech.

- Educational AI (speech, personality): teaches math to elementary school kids through interactive games, learns on the fly what sort of games work well for specific individuals, and encourages kids. This could be through voice, and the games could be gesture/voice based.

- Medical auto-diagnosis: can tell your heart rate, your cholesterol level, and how “sick” you look (maybe you have the flu); tells you in advance if you’re catching a cold and what to do to stop it; contacts a doctor if it detects something serious (would use computer vision to detect things, maybe other sensors as well).

- Device that finds and removes split ends from hair, either using visual or physical processing of hair strands

- Invisible mouse – instead of trackpad for laptops, just use your hand as a mouse (activated when you put your hand on the table).

- Make a better specialized pen: write on any old paper and it updates stuff in the cloud (write the subject on top, and it knows which document to edit for you). Already exists.

- Artist’s pen that can change ink color / thickness / type / etc. with small gestures/indications

- 2D character rigging with a drawing-based interface or an action figure.

- Tactile interface – deeply integrate vibrating actuators into laptop/mouse/clothing for another output modality (like in game controllers or in phone games). E.g. use it in photo editors (indicating alpha value of pixels) or in web browsing.

- Forcing you to exercise: a treadmill-activated computer that only runs while you run

- 3D game that requires actual running to move

- Desk that categorizes any object you put on it and lights it up in a different color and/or actually moves it to group it with other similar objects

- mood lamps/screens/etc. that change color/brightness/temperature/etc. with the environment’s temperature/sound/brightness/general color/etc.

- touchpad that can sense when finger hovers above, gives 3rd dimension special functionality (e.g. switch between stack of applications/windows/tabs)

- origami tutor: camera finds where the paper is on the table and projects dotted lines where folds should go directly onto the paper, plus a ghost of how the paper should be folded next

- actual (new or invisible) instrument embedded in clothing (e.g. invisible violin, or instrument that depends on how your limbs/body are positioned)

- virtual interior decorator that sees what is in a room and projects things/colors from a store’s inventory that would go with them onto walls/furniture/etc

- Projector that can project onto any surface and still look flat

- Virtual tape measure based on hands for distance or start/end points

- Camera/projector combo that lets you highlight portions of a book with a finger, then can display your highlights by figuring out what page you’re on

- Building blocks for small children with letters on them that say any word that they are stacked into

- Taste-tester (can be used in cooking) – tells you how much seasoning to add, or whether or not you might enjoy something based on how similar it is to known foods. Does it really taste like chicken? http://www.digikey.com/us/en/techzone/sensors/resources/articles/The-Five-Senses-of-SensorsTaste.html

- Roomba++ – knows when to do vacuuming, as well as mopping/sweeping. Also cleans up counters, and intelligently puts objects on the floor into appropriate places.

- actual shadow boxing game: your shadows fight with each other (with tactile feedback)

- Smart socks: text with people in class without anyone noticing (use flex sensors for Morse-code-style communication; could be marketed to elementary school students to teach them Morse code!)

- completely virtual board game playing and tutoring like chess (gesture/ 3d display based)

- object recognition chess (camera identifies when you move a physical piece, updates internet board in real time)

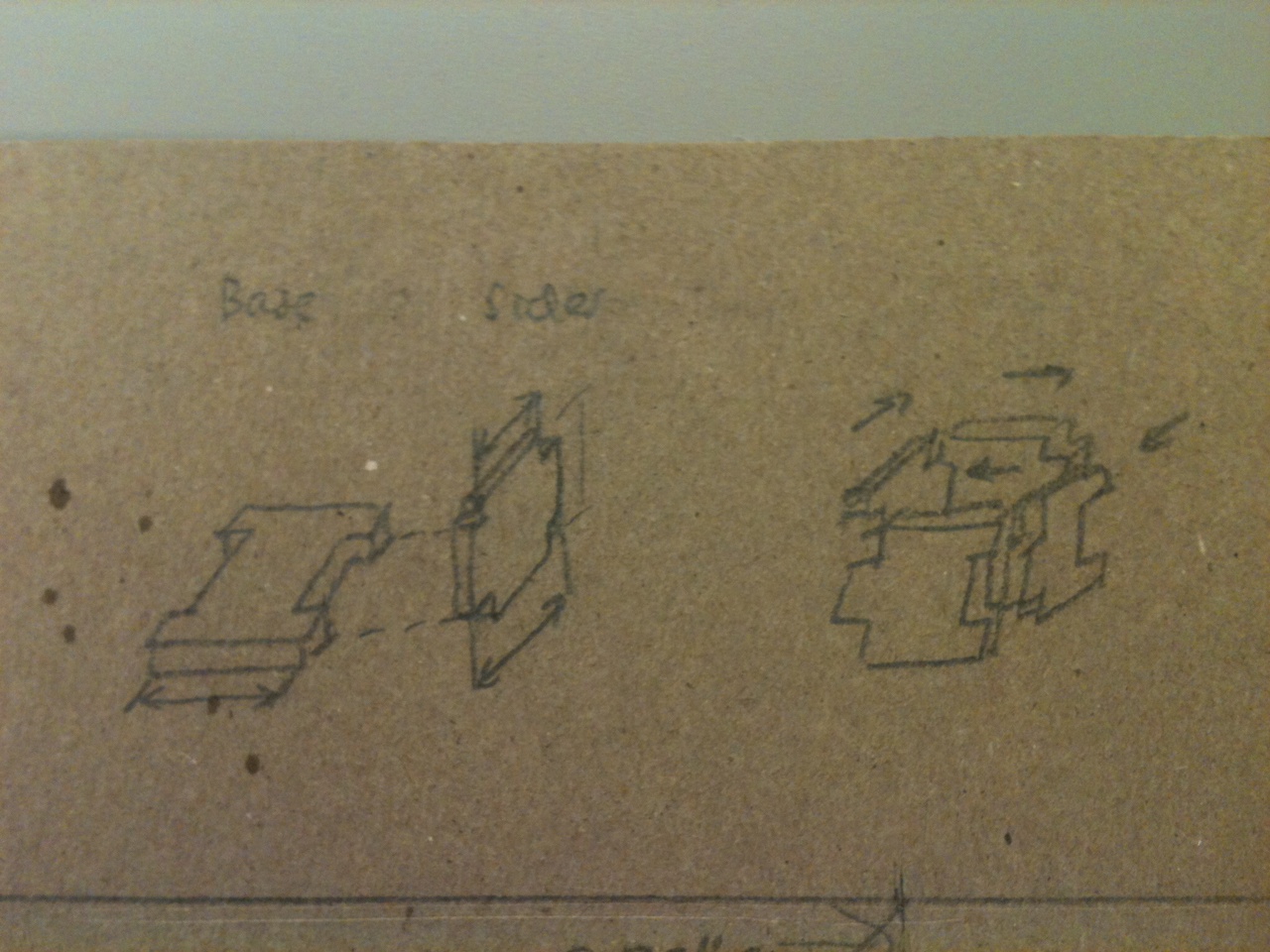

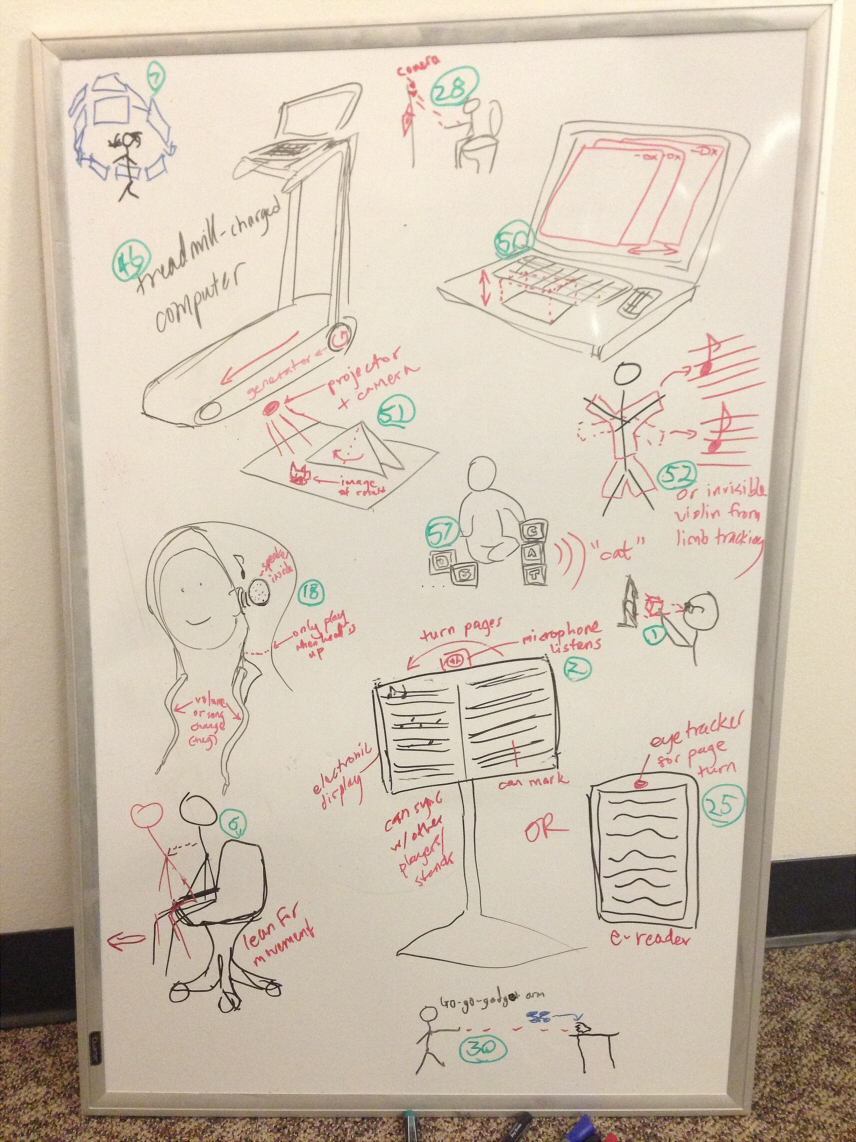

Sketches

Segway Chair, Auto-flipping Music Stand, Exercise-powered Computer, Origami Teacher, Wearable electronics, Building Blocks that spell out words to toddlers, Gestural Internet Browser (see windows in 3D and move them around!), Invisible Instrument, Go-go-gadget Arm [with Quadrocopter], Eye-tracking e-reader, Toilet Cubicle Entertainment System

Project Description:

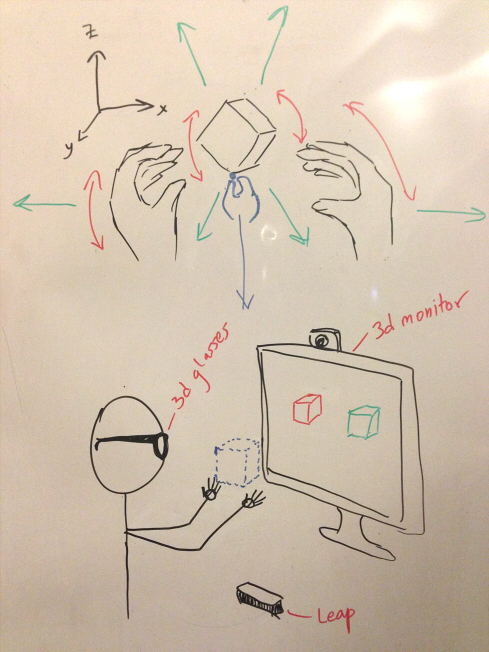

The manipulation environment consists of a 3D stereoscopic monitor that makes objects “pop out” and look like they are floating in mid-air. A gesture camera (the Leap) has its input space aligned with the output space. We also use a webcam to track the primary user’s viewpoint, so that users can move their heads and see different views of a scene. All in all, users can translate objects by pinching them and moving them directly to where they want them to go, scale objects by stretching their hands apart, select objects by touching them, and so on.
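Colocating the Leap’s input space with the display’s output space comes down to mapping hand positions into scene coordinates, after which the gestures become plain vector arithmetic. The following is a minimal sketch, not our implementation: it assumes a hypothetical per-axis affine `Calibration` (a scale and offset, e.g. found by touching known points on the display) and treats pinch-to-translate and stretch-to-scale as operations on positions already converted into scene coordinates. The Leap itself reports positions in millimeters.

```python
import math
from dataclasses import dataclass

# (x, y, z) positions; raw Leap positions are in millimeters.
Vec3 = tuple[float, float, float]


@dataclass
class Calibration:
    """Hypothetical per-axis affine map from Leap space to scene space."""
    scale: Vec3
    offset: Vec3

    def to_scene(self, p: Vec3) -> Vec3:
        # scene = scale * leap + offset, applied independently on each axis
        return tuple(s * c + o for s, c, o in zip(self.scale, p, self.offset))


def translate(obj_pos: Vec3, pinch_start: Vec3, pinch_now: Vec3) -> Vec3:
    """Move a pinched object by the hand's displacement (all in scene coordinates)."""
    return tuple(o + (n - s) for o, s, n in zip(obj_pos, pinch_start, pinch_now))


def stretch_scale(left_start: Vec3, right_start: Vec3,
                  left_now: Vec3, right_now: Vec3) -> float:
    """Scale factor from how far apart the two hands have moved."""
    d0 = math.dist(left_start, right_start)
    d1 = math.dist(left_now, right_now)
    return d1 / d0 if d0 > 0 else 1.0  # avoid dividing by zero
```

Keeping the calibration separate from the gesture math means the same gesture code would work whether the output is a stereoscopic monitor or a head-mounted display; only the calibration values would change.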

Why we chose our idea:

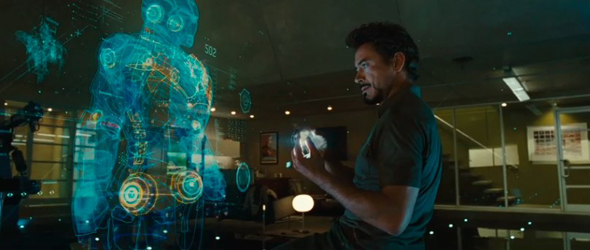

For starters, the concept is super cool! We’ve all been inspired by this picture:

Tony Stark using gestures!

Since we already have some pretty awesome hardware (a Leap, a stereoscopic monitor, and a Kinect), we think it would be feasible to implement a neat prototype of a 3D gesture interface that interacts with virtual, floating 3D objects.

Also, our idea is very modular: it’s possible to create more specific subsets of this project by building more functionality on top of the core structure. Specifically, it might make sense to add speech recognition and processing later on (if we have time) to improve the naturalness of the user interface.

Furthermore, it’s just a really cool user interface that hasn’t been properly built yet! We envision that in the future, computers will move away from the screen paradigm and become more immersive.

Target User Group(s): Artists, architects, designers, and animators who do 3D modelling, as well as scientists who manipulate 3D data, such as researchers in 3D capture who have to deal with point clouds or geneticists who manipulate and fold protein models.

Problem Description and Context: Current 3D modelling applications, such as Blender and Maya, though powerful, are unintuitive and awkward to navigate, because they rely on a 2D monitor and a two-degree-of-freedom mouse to represent and manipulate 3D objects. Because of these restrictions, it is very difficult to select arbitrary points in 3D space and to perform rotations. By displaying objects stereoscopically, we can make them look like holograms floating in the real world. Users could then interact with these objects as if they were real (as with Iron Man’s Jarvis), making full use of their physical intuition. Although experimental gestural interfaces for 3D modelling exist, they have been shown in academic studies to be less efficient than mouse interfaces. By aligning the gestural input space with the stereoscopic output space, we make a fully natural interface for 3D modelling.

Justification of Technology Platform: The fundamental parts of our system will be a stereoscopic display and a gesture camera. We will most likely use a standard stereoscopic monitor for output, although we are also considering a head-mounted display. For the gesture camera, we intend to use the Leap because of its low data latency. We also need an additional webcam to perform viewpoint tracking. These input and output technologies enable the fundamentally novel part of our system: colocated input and output spaces. We would also like to incorporate tactile feedback, namely a simple glove with vibrating actuators (which, for example, vibrate when your hands intersect an object), to enhance the visual feedback provided by the 3D display. Together, these technologies add to the illusion that the 3D objects are in fact “real”, which makes them uniquely suited to our application of manipulating 3D objects.
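Deciding when a glove actuator should vibrate is essentially a collision test between fingertip positions and virtual objects. A minimal sketch, assuming two hypothetical simplifications: each object is approximated by an axis-aligned bounding box in scene coordinates, and each fingertip has its own actuator.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class Box:
    """Axis-aligned bounding box of a virtual object, in scene coordinates."""
    lo: Vec3
    hi: Vec3

    def contains(self, p: Vec3) -> bool:
        # Inside means within [lo, hi] on every axis (boundary counts as touching).
        return all(low <= c <= high for low, c, high in zip(self.lo, p, self.hi))


def actuators_to_fire(fingertips: dict[str, Vec3], objects: list[Box]) -> set[str]:
    """Names of fingertips (one hypothetical actuator each) touching any object."""
    return {name for name, tip in fingertips.items()
            if any(box.contains(tip) for box in objects)}
```

Bounding boxes keep the per-frame check cheap; a real modelling tool would probably test against the actual mesh once a box test passes.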

Design Sketch:

A user manipulates a virtual cube in front of a screen! The Leap Motion tracks the user’s hands, and the depth camera helps out.

Lab 0: The Wondrous Weather Cube (our very own Companion Cube!)

- Team members: Kiran Vodrahalli, Collin Stedman, Raymond Zhong, Dale Markowitz

02/19/2013

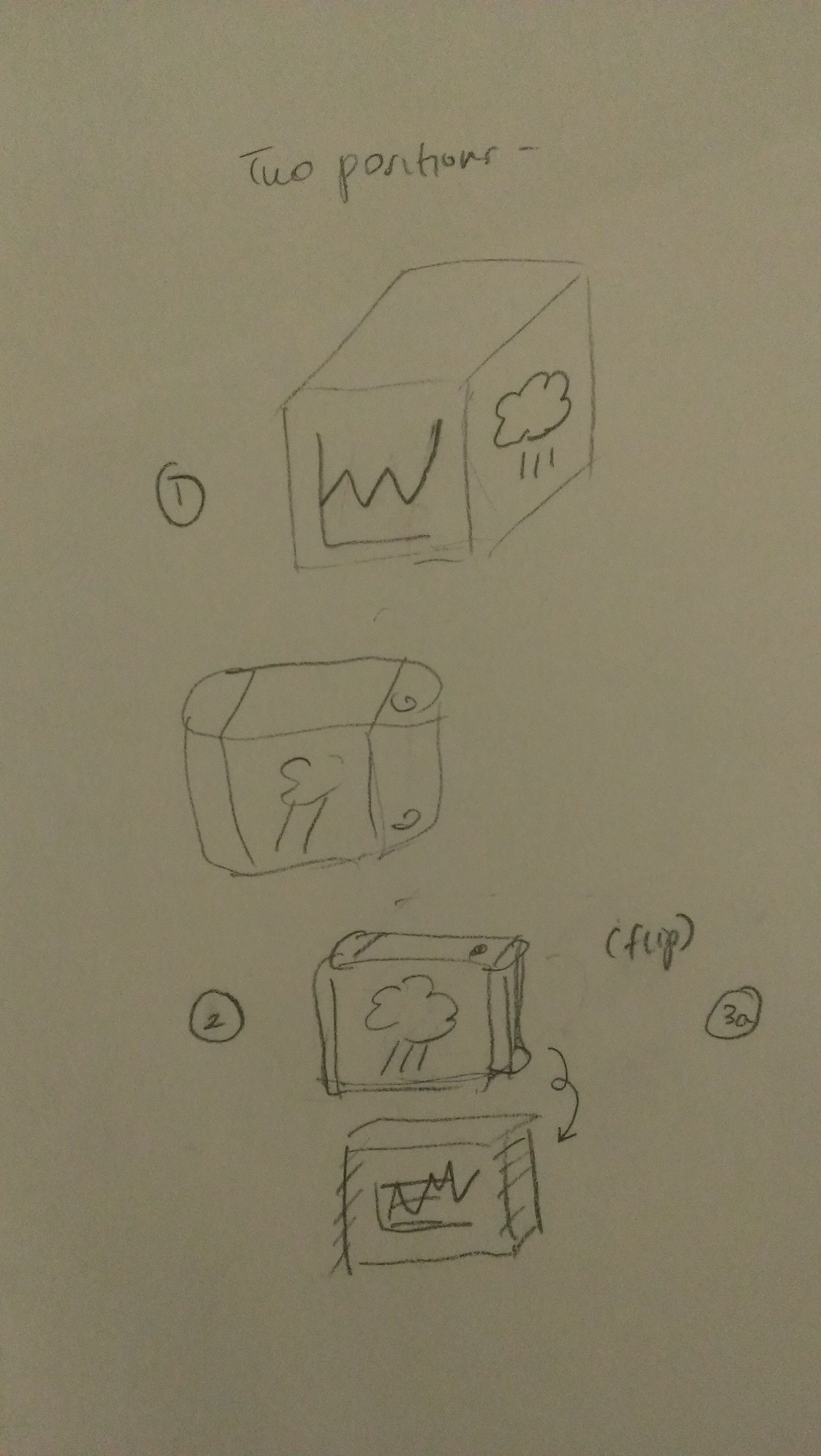

We created an Internet-enabled companion cube for your computer, which displays different kinds of information by glowing red or green. Depending on its orientation, that is, which labeled face is turned upward, our companion cube displays either NASDAQ stock readings or the outside temperature in Princeton. As the temperature or stock index changes, the Arduino inside the cube fetches updated data from the host computer it is connected to and modulates the brightness of the red and green LED arrays inside. We thought it would be a perfect match for the topic of the first lab, LED diffusers, and that it would also make a useful and elegant desktop accent.
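Modulating red and green brightness amounts to mapping a reading onto two PWM duty cycles. A minimal sketch of one way to compute them, assuming a linear blend between hypothetical `low` and `high` thresholds, with values near `high` shown green (for temperature you might prefer to swap the colors), and the Arduino’s 0 to 255 `analogWrite` range:

```python
def red_green_pwm(value: float, low: float, high: float) -> tuple[int, int]:
    """Map a reading onto (red, green) PWM duty cycles in 0..255.

    At or below `low` the cube is fully red, at or above `high` fully green,
    and in between the two colors blend linearly. Thresholds are hypothetical.
    """
    if high <= low:
        raise ValueError("high must be greater than low")
    t = min(max((value - low) / (high - low), 0.0), 1.0)  # clamp to [0, 1]
    green = round(255 * t)
    return 255 - green, green
```

For example, `red_green_pwm(5, 0, 10)` lands halfway and gives `(127, 128)`.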

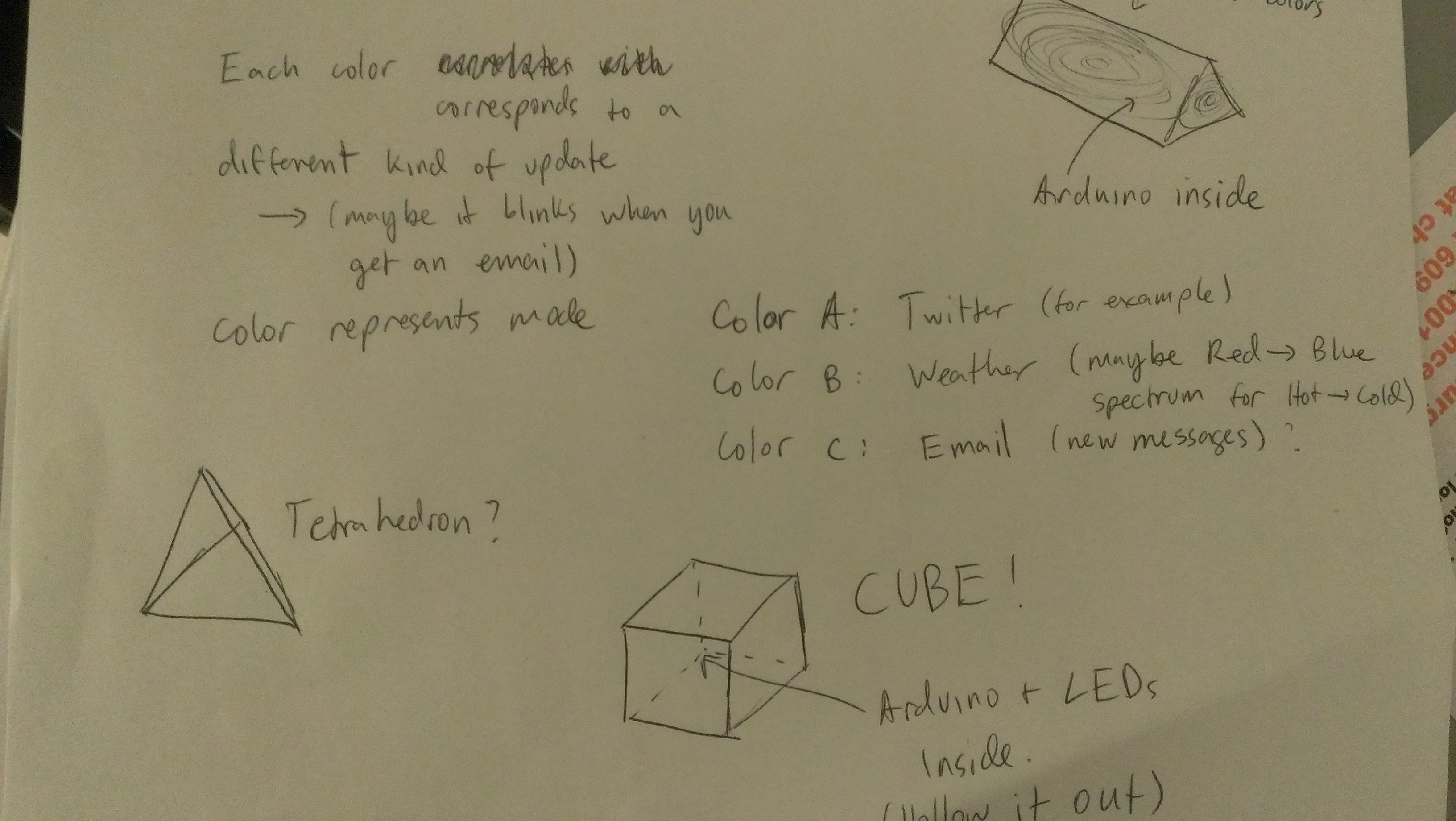

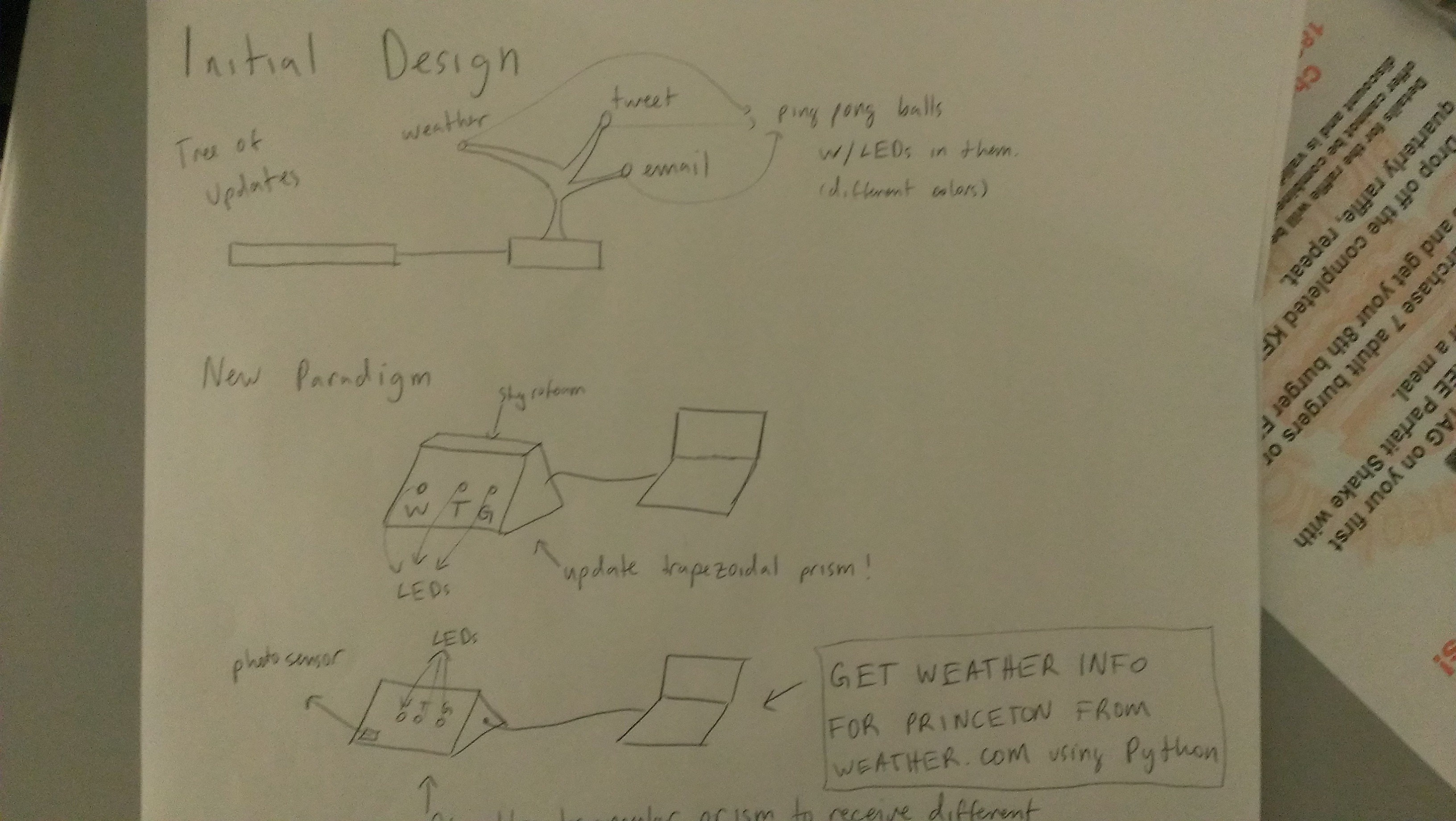

Ideation and Design

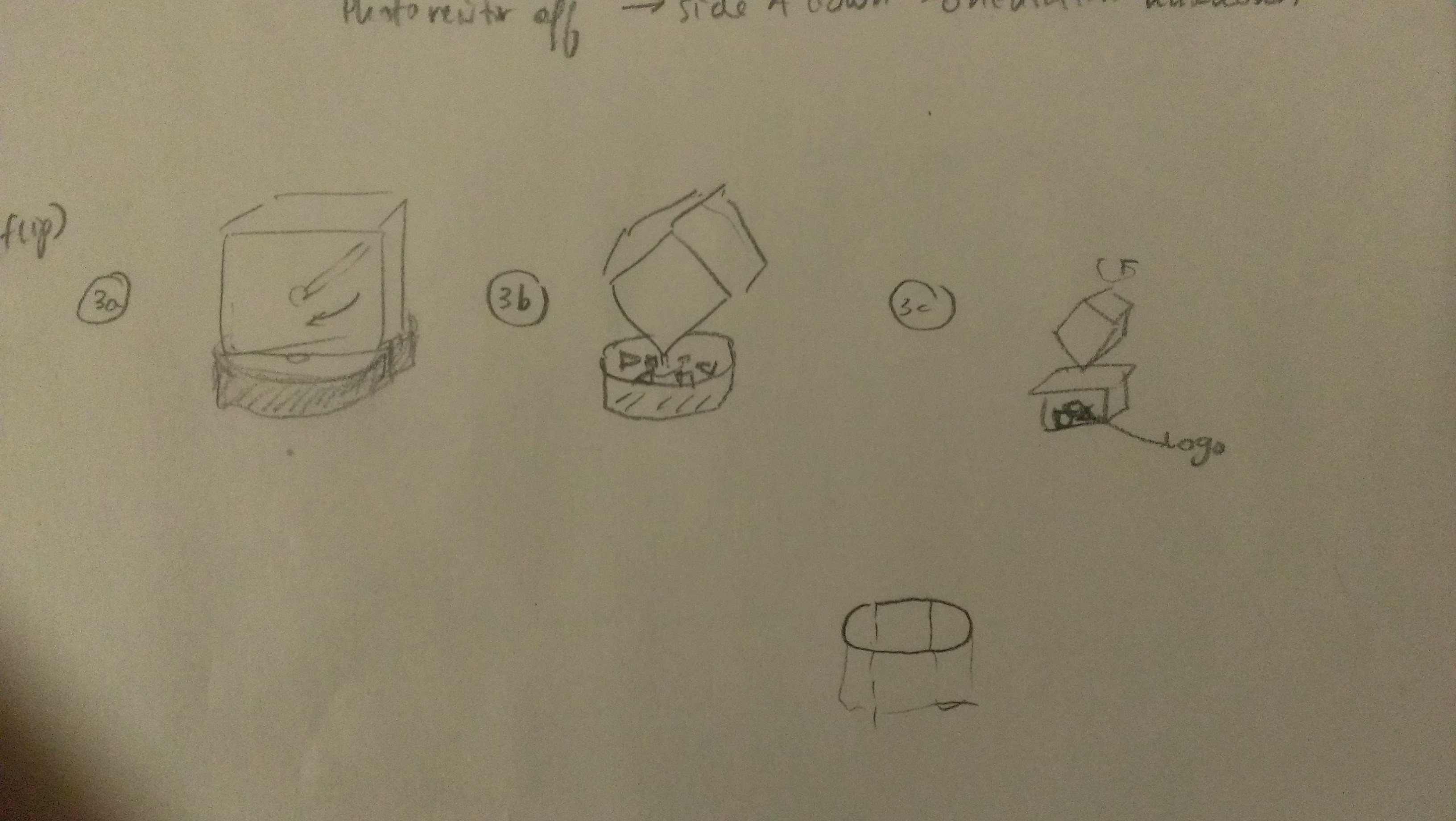

We started by thinking about LED diffusers, and found that the most interesting applications for us related to using them to display or convey information. We then considered a number of physical interfaces, including a tree, a panel, and various geometric shapes. We settled on a cube because we expected users to want multiple sources of information from the cube, and we thought rotating the cube was a better interface than configuring it by computer, since it would make use of the different sides of the cube. Since we have some Arduino experience, adding Internet connectivity so the Arduino and computer could talk to each other followed rather naturally.

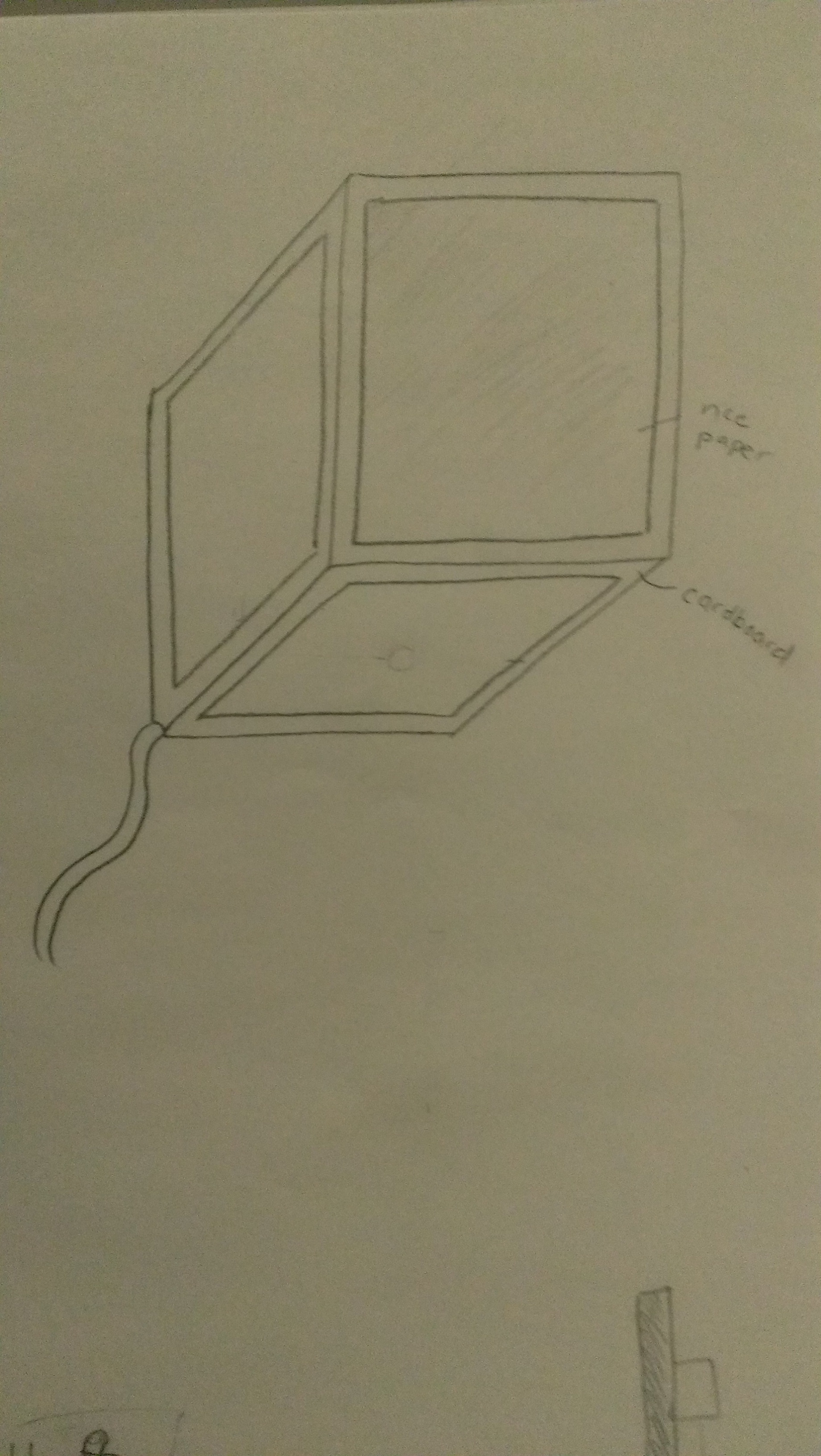

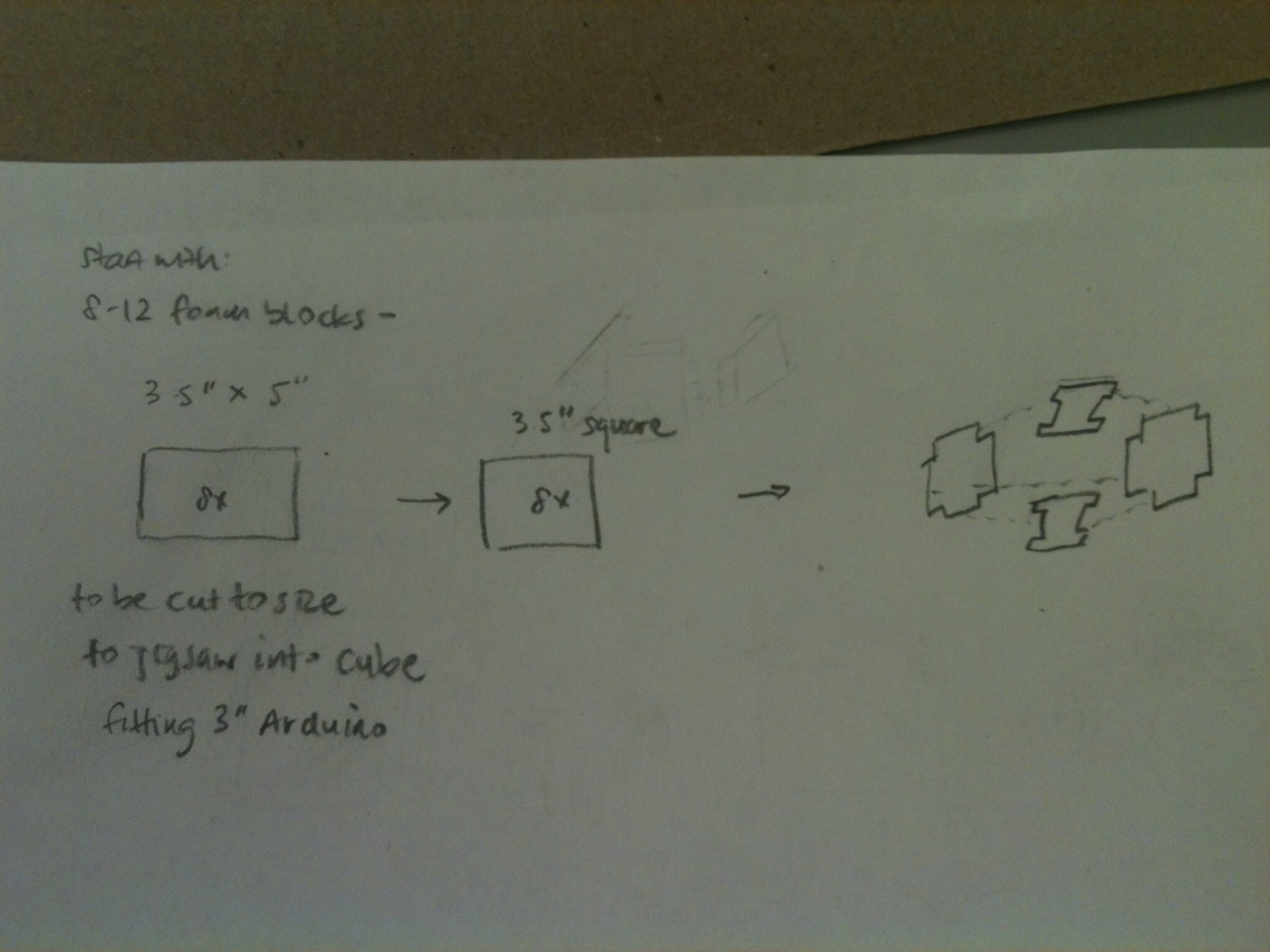

Construction

We found that there was scrap foam in many different places we looked – the upper levels of the E-Quad, the Architecture building, and Frist among others. We went with that, instead of making our cube out of paper, because foam seemed like an excellent prototyping material. It was easy to cut, already pre-formed into sheets, and most importantly fit together like a jigsaw puzzle, allowing us to assemble the foam simply by pushing metal leads into the joints. Eventually we would want to use a laser-cut enclosure for this kind of box.

Concerns and Conclusions

We would have preferred a better spectrum of colors from our cube, but we did not have enough tricolored LEDs to produce enough light to be visible through our cube. We ran into a number of problems because of the differences in brightness and voltage between red and green LEDs and between indicator and lighting LEDs, but we managed to get around most of them because foam was such a great diffusing material and blocked little light. Finally, we observed that the cube is sometimes slow to respond because of the latency of scraping data from the web, so we’d like to make it faster if possible.

We think our companion cube is pretty and works well. If we had developed the cube further, it would have more applications (possibly one for each side of the cube, including email notifications, Facebook notifications, etc.), and it would use an orientation sensor rather than photoresistors on each side to figure out its orientation.
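With a photoresistor on each labeled face, picking the active mode can be as simple as finding the brightest face, since the face resting on the desk is dark. A hedged sketch, assuming readings where a larger value means more light, plus hypothetical face labels and a hypothetical darkness threshold:

```python
from typing import Dict, Optional

# Hypothetical mapping from labeled face to display mode.
FACE_MODES = {"weather": "temperature", "nasdaq": "stocks"}


def active_mode(readings: Dict[str, int], dark_threshold: int = 100) -> Optional[str]:
    """Return the mode for the brightest face, or None if every face is dark.

    `readings` maps face labels to light levels (larger = brighter).
    """
    if not readings:
        return None
    face, level = max(readings.items(), key=lambda item: item[1])
    if level < dark_threshold:
        return None  # e.g. the room is dark, so no face can be trusted
    return FACE_MODES.get(face)
```

An orientation sensor would replace the light readings with a gravity vector, but the mode lookup would stay the same.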

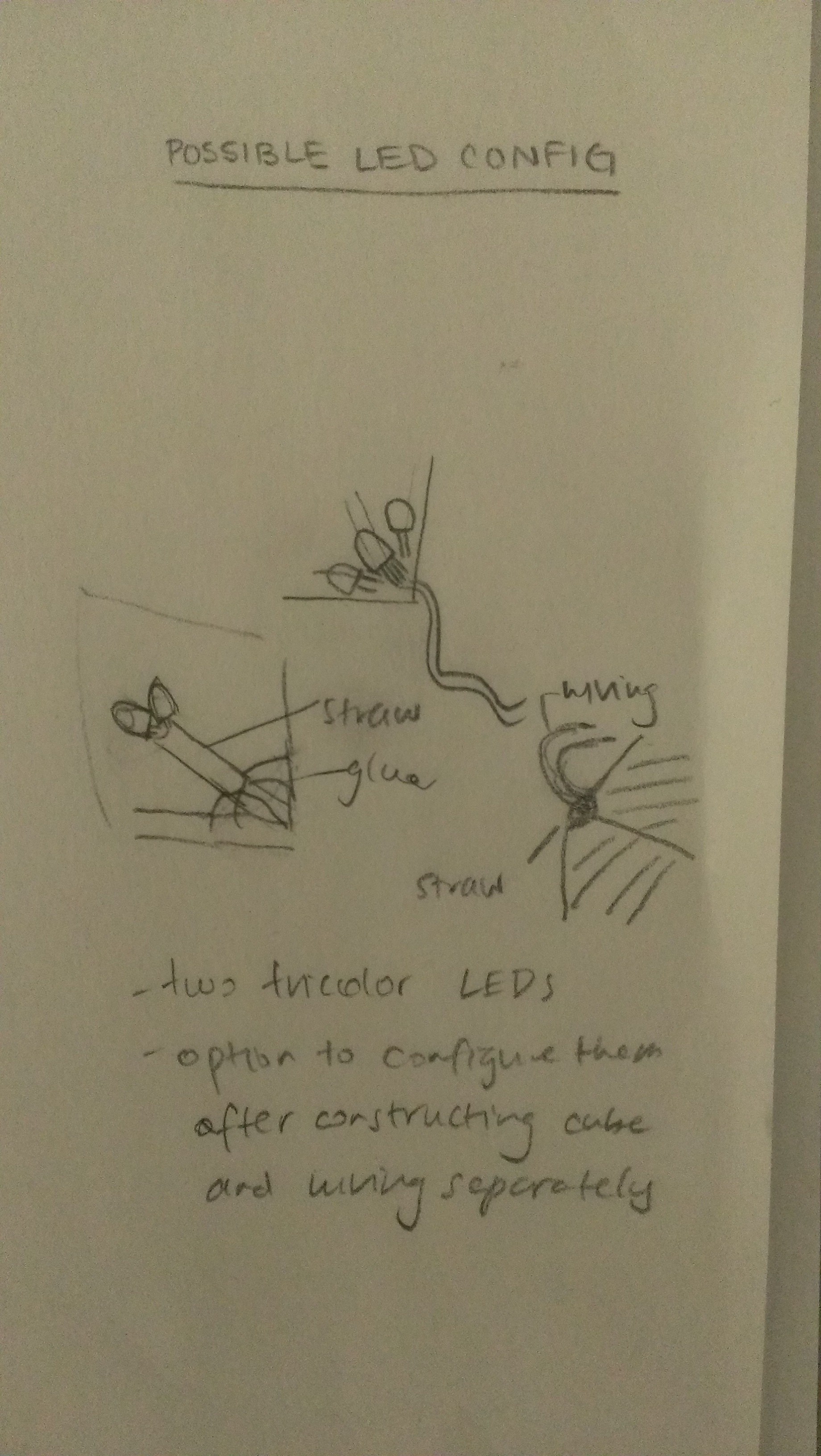

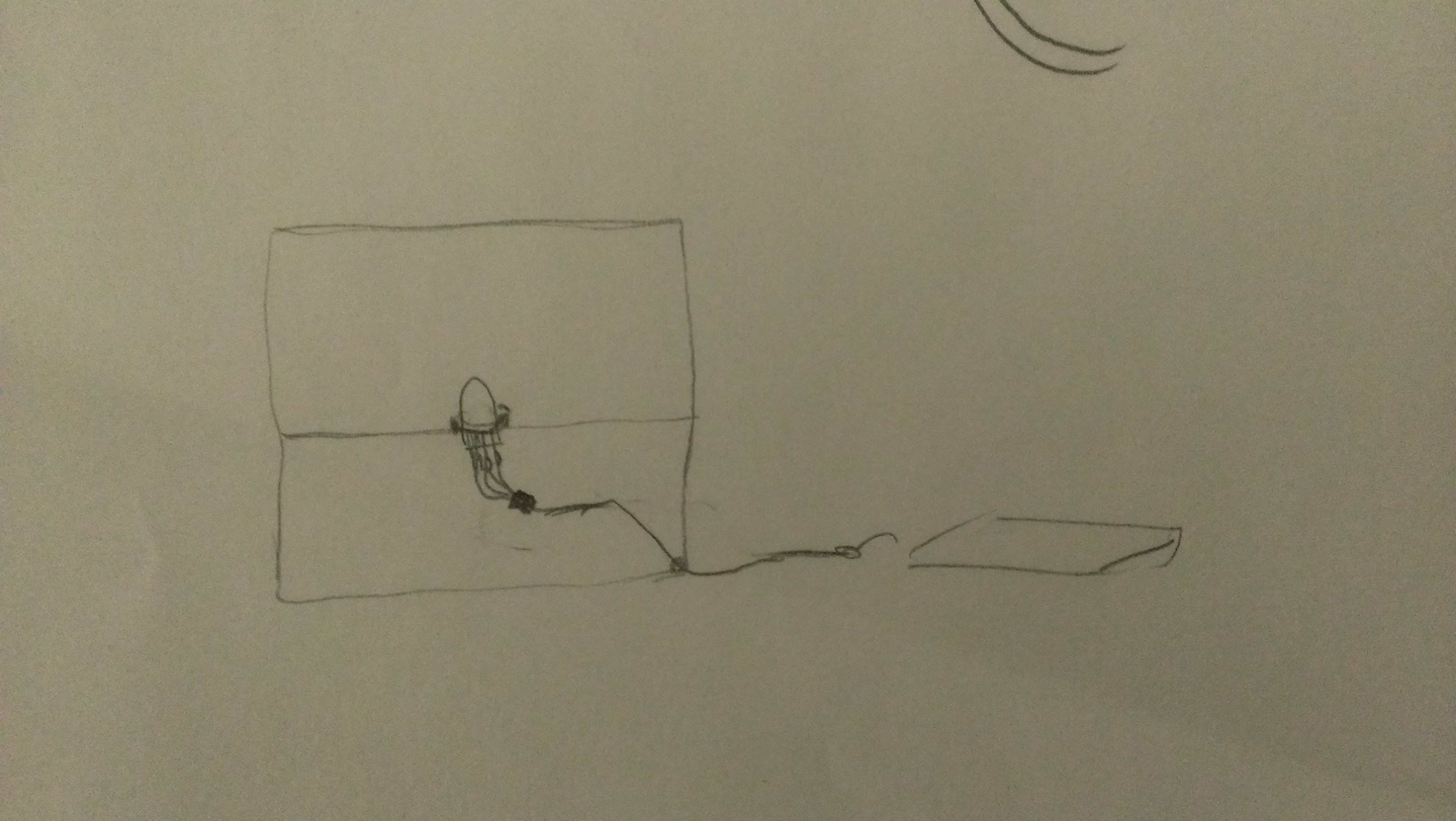

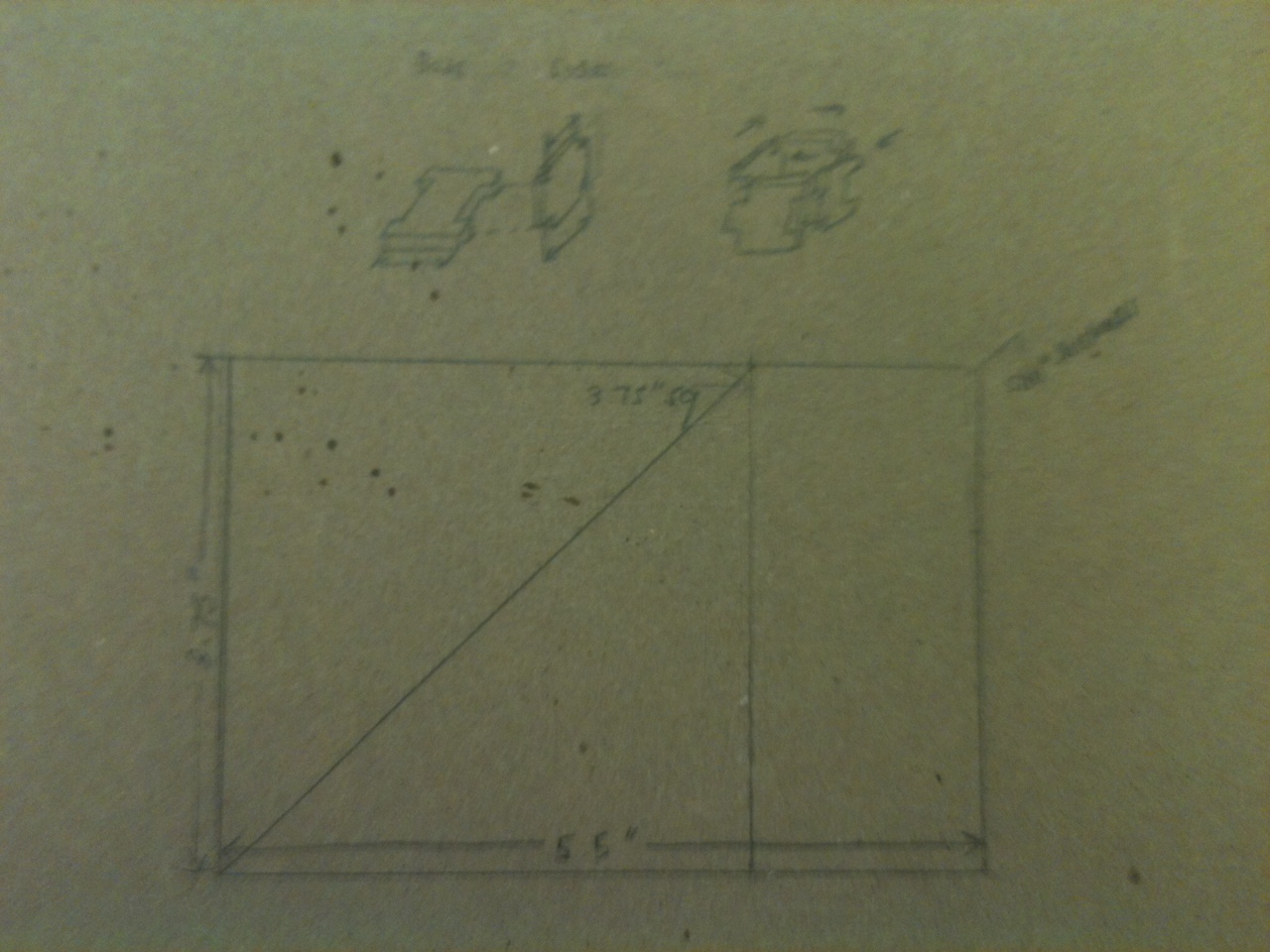

Design Sketches

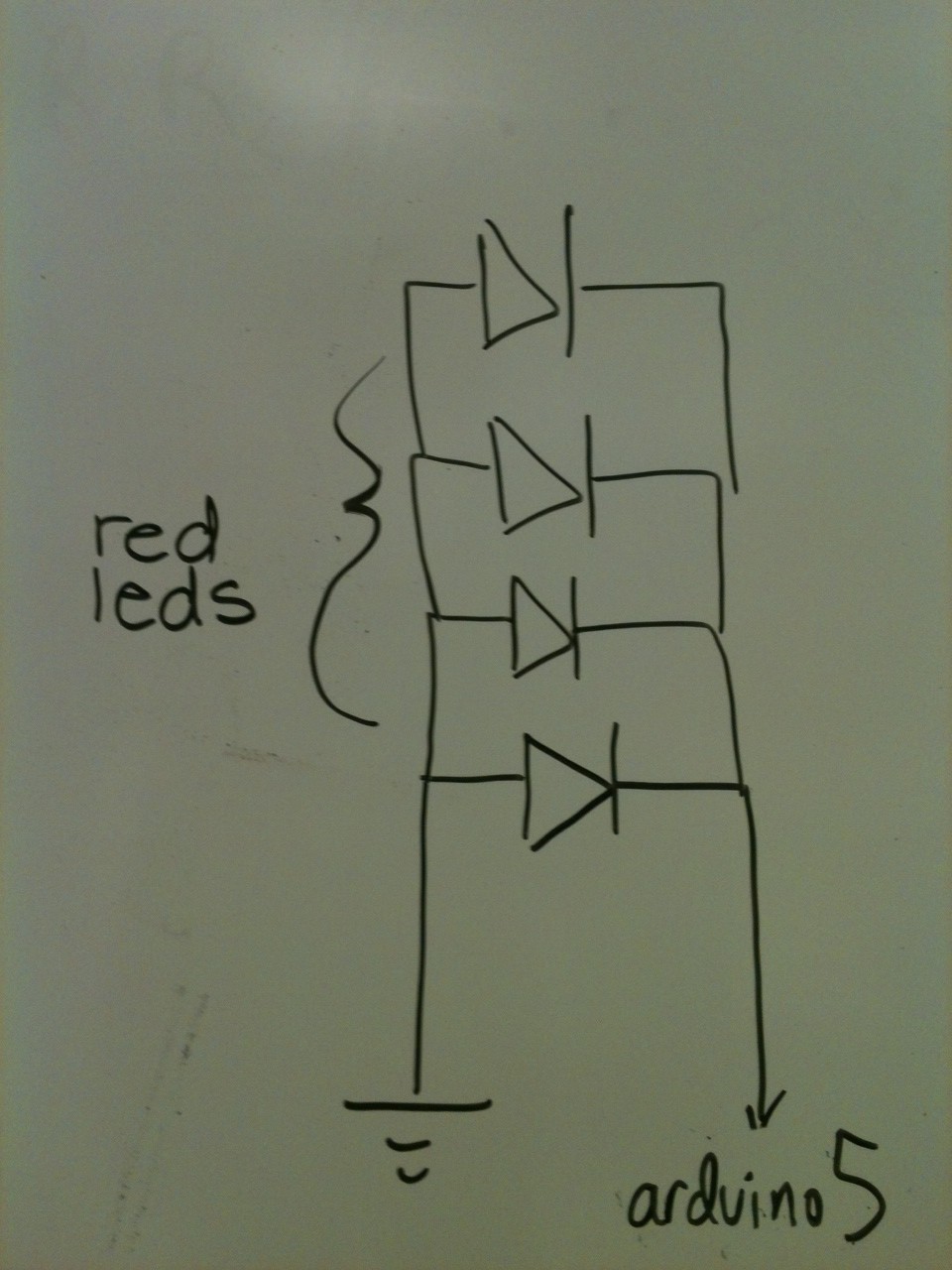

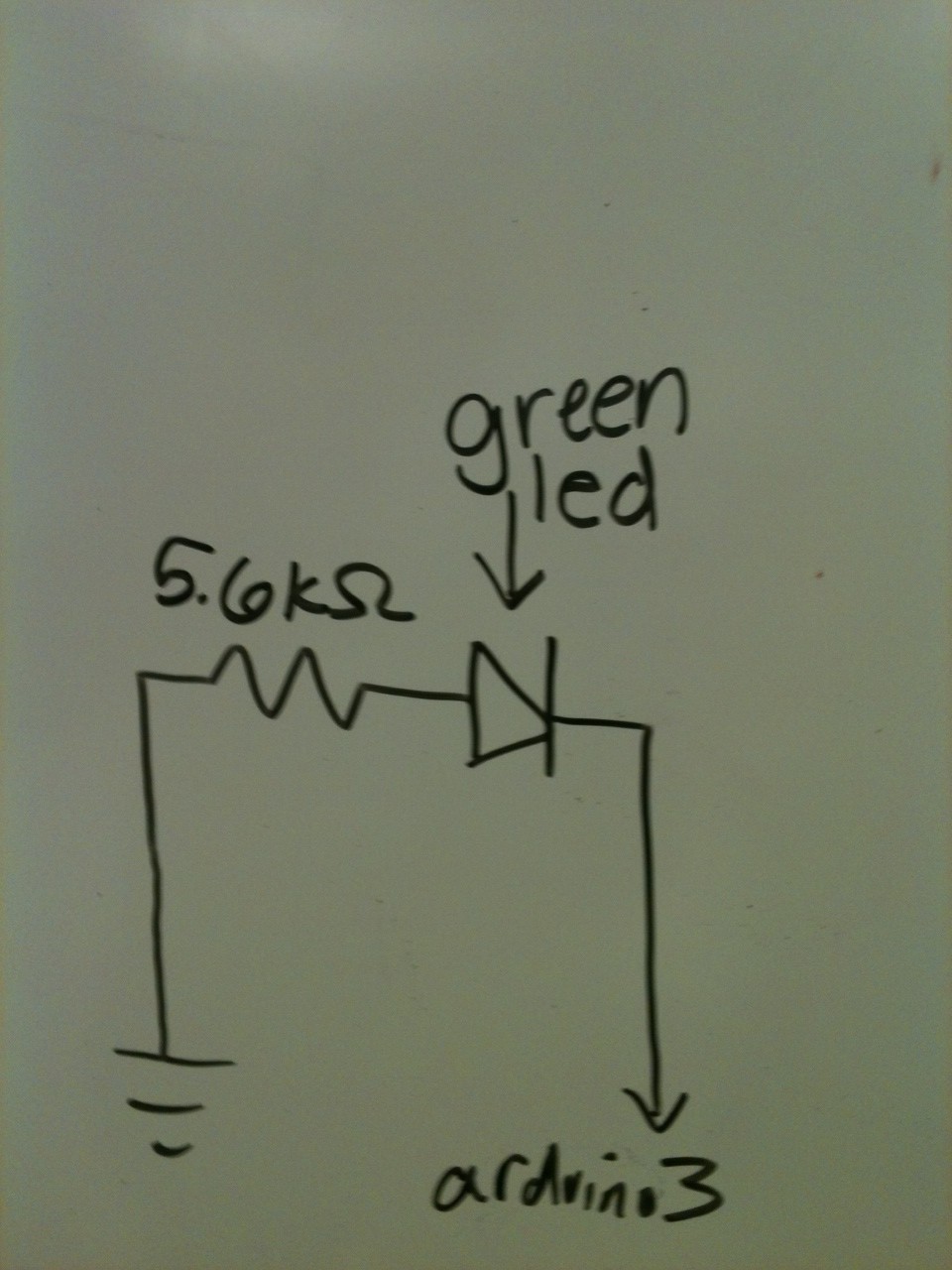

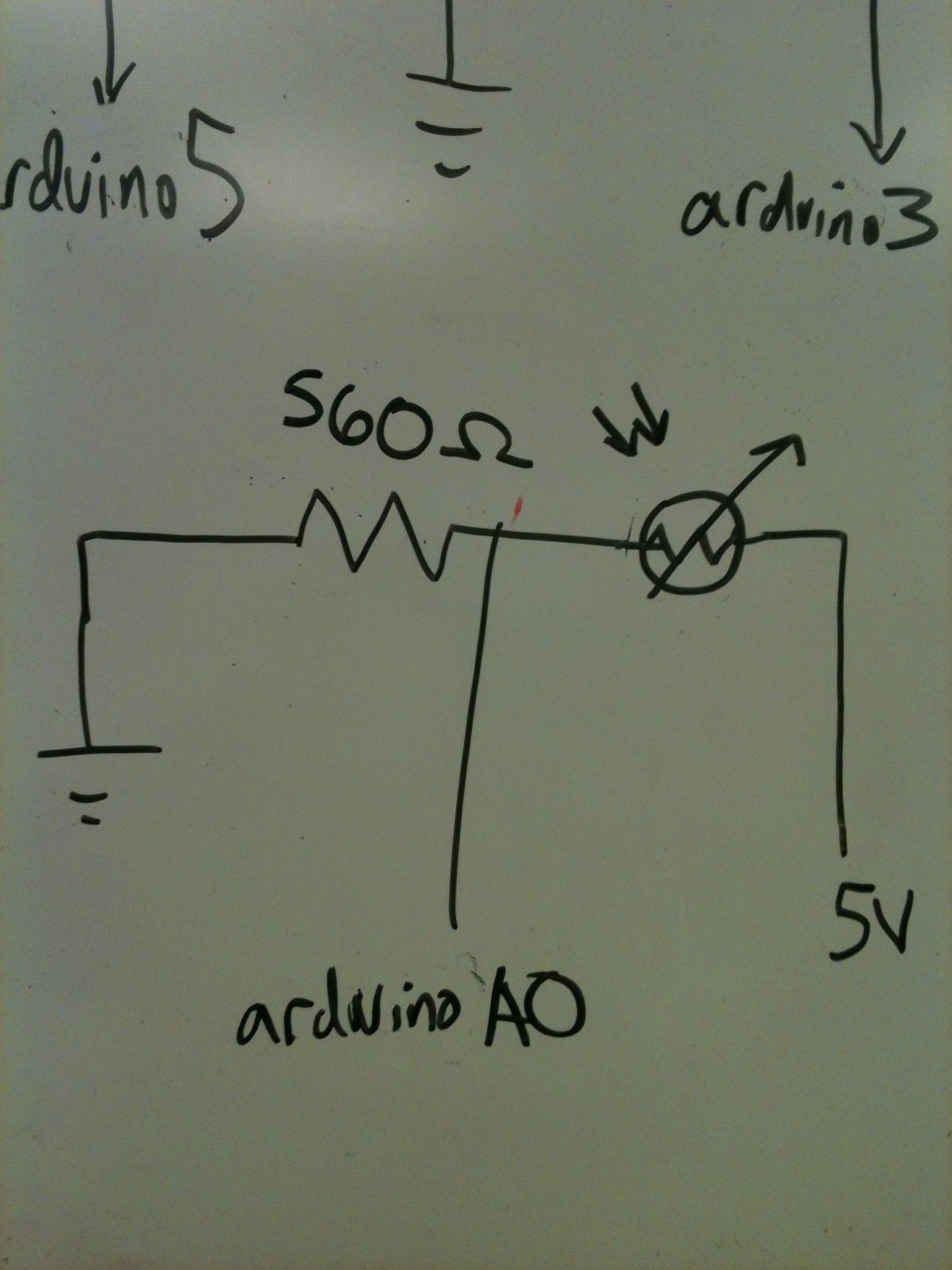

Circuit Design:

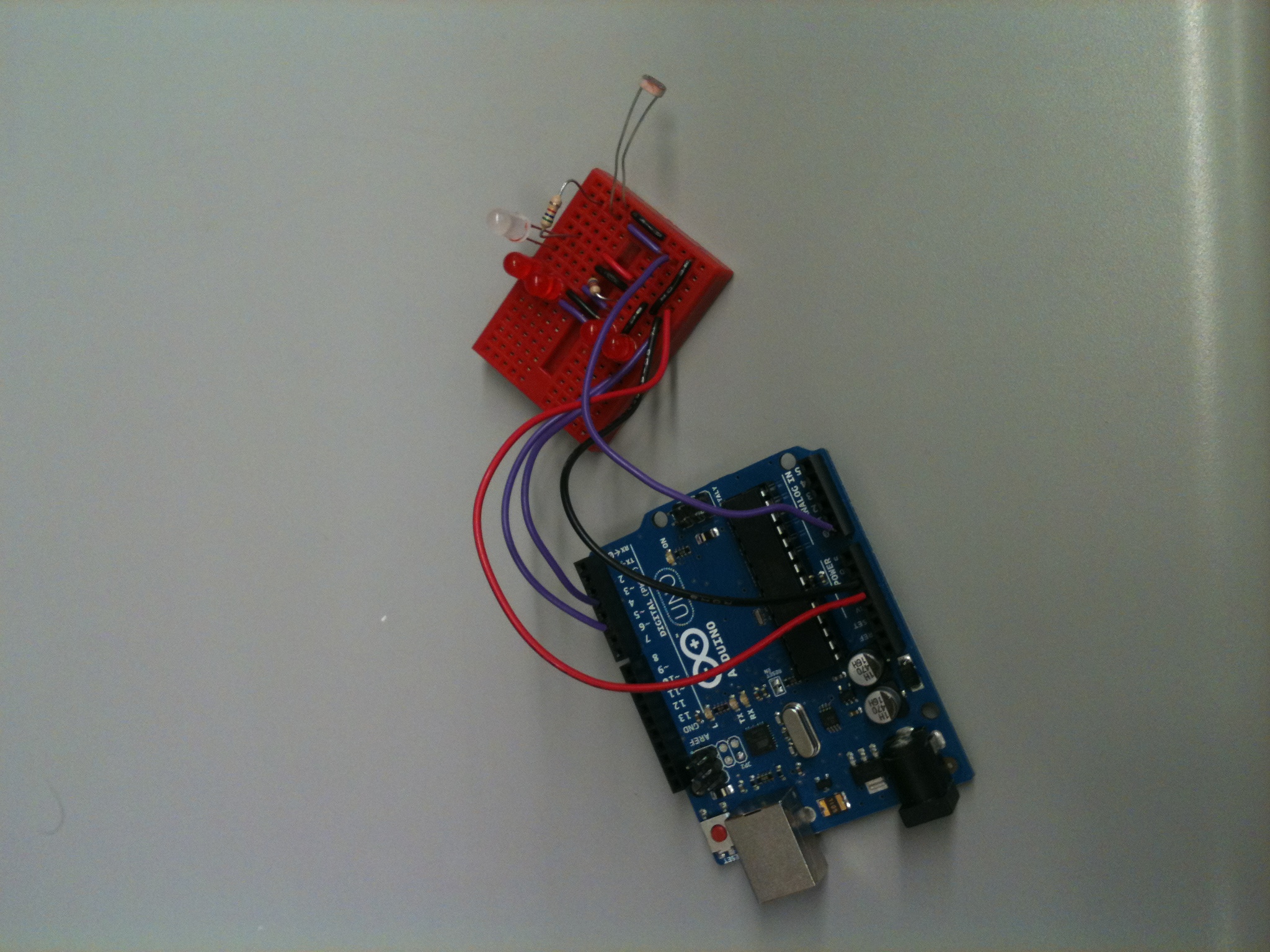
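As a rough sanity check on the two resistors in the parts list, here is the arithmetic under assumptions this post does not state: a 5 V Arduino supply, a red-LED forward voltage $V_f$ of about 2 V, and the photosensor on the low side of a voltage divider with the 5.6 kΩ resistor, feeding an analog pin (called A0 here purely for illustration).

```latex
\begin{aligned}
I_{\text{LED}} &= \frac{V_{\text{supply}} - V_f}{R}
  = \frac{5\,\text{V} - 2\,\text{V}}{560\,\Omega} \approx 5.4\,\text{mA} \\
V_{\text{A0}} &= 5\,\text{V} \cdot \frac{R_{\text{photo}}}{R_{\text{photo}} + 5.6\,\text{k}\Omega},
  \qquad \text{reading} \approx 1023 \cdot \frac{V_{\text{A0}}}{5\,\text{V}}
\end{aligned}
```

If several LEDs share the 560 Ω resistor, that current is split among them. In this divider arrangement, a photoresistor whose resistance drops in light gives a lower reading as its face gets brighter.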

The Final Product!


Parts we used:

4 red LEDs, 1 white LED, green marker, Arduino board, USB cable, MacBook Air, wires, 5.6 kΩ resistor, 560 Ω resistor, photosensor, glue, Styrofoam, breadboard

Tools we used: Wire strippers, Multimeter, Scissors, Razor

We also used some Python libraries, as documented in the code.
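One of the sources below is the documentation for Python’s `re` module. As a hedged sketch of how a regular expression can pull a number out of a scraped page, here is a version with a made-up HTML fragment and pattern; the real pages and patterns used in the repository may look quite different.

```python
import re
from typing import Optional

# Hypothetical page fragment; the real page layout is not documented here.
SAMPLE_HTML = '<span class="temp">41&deg;F</span>'

# Captures an optionally negative integer or decimal right after the tag.
TEMP_PATTERN = re.compile(r'class="temp">\s*(-?\d+(?:\.\d+)?)')


def extract_temperature(html: str) -> Optional[float]:
    """Return the first temperature matched by TEMP_PATTERN, or None."""
    match = TEMP_PATTERN.search(html)
    return float(match.group(1)) if match else None
```

Regex scraping is brittle if the page layout changes; a structured data feed would be more robust, but a regex is quick for a prototype.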

How to make your very own Wondrous Weather Cube:

You’ll need the parts listed above, as well as the code in the GitHub repo linked below. Assemble the circuit shown in the circuit diagram above; it should look similar to the picture of the completed circuit. Then build the cube by cutting the Styrofoam squares as shown in the design sketches, and glue the sides of the foam together. You may have to cut a small hole for the USB cable. Next, put the Arduino into the cube and connect it to a computer. First upload and run the Arduino code (weather_nasdaqCube.ino), then run the Python code (cube_handler.py). Flip the cube over to test the modes. Voila! You now have your very own working Wondrous Weather Cube!
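This post doesn’t spell out how cube_handler.py and weather_nasdaqCube.ino talk to each other over USB, so the following is only a hypothetical sketch of a simple line-based protocol: the host sends two comma-separated brightness values per line, and the Arduino parses them back.

```python
def encode_command(red: int, green: int) -> bytes:
    """Encode two PWM values as one ASCII line, e.g. (200, 55) -> '200,55' + newline."""
    for v in (red, green):
        if not 0 <= v <= 255:
            raise ValueError("PWM values must be in 0..255")
    return f"{red},{green}\n".encode("ascii")


def decode_command(line: bytes) -> tuple[int, int]:
    """Parse a line produced by encode_command back into (red, green)."""
    red, green = (int(part) for part in line.decode("ascii").strip().split(","))
    return red, green
```

On the host, pyserial could send such a line with something like `serial.Serial(port, 9600).write(encode_command(200, 55))`, where the port name and the 9600 baud rate are placeholders that must match the Arduino sketch; on the Arduino side, `Serial.parseInt()` can read the two numbers back.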

Some sources:

- http://apexlogic.net/code-bank/python/pyserial-example/
- http://docs.python.org/2/library/re.html
- http://pyserial.sourceforge.net/pyserial_api.html#module-serial

Documented Source code: https://github.com/kiranvodrahalli/WondrousWeatherCube