Cereal Killers, Group 24

Bereket, Andrew, Ryan, Lauren

We are building a gesture bracelet that triggers computer shortcuts.

Tasks:

We continued with our three tasks from last time, with modifications based on our testing in P4:

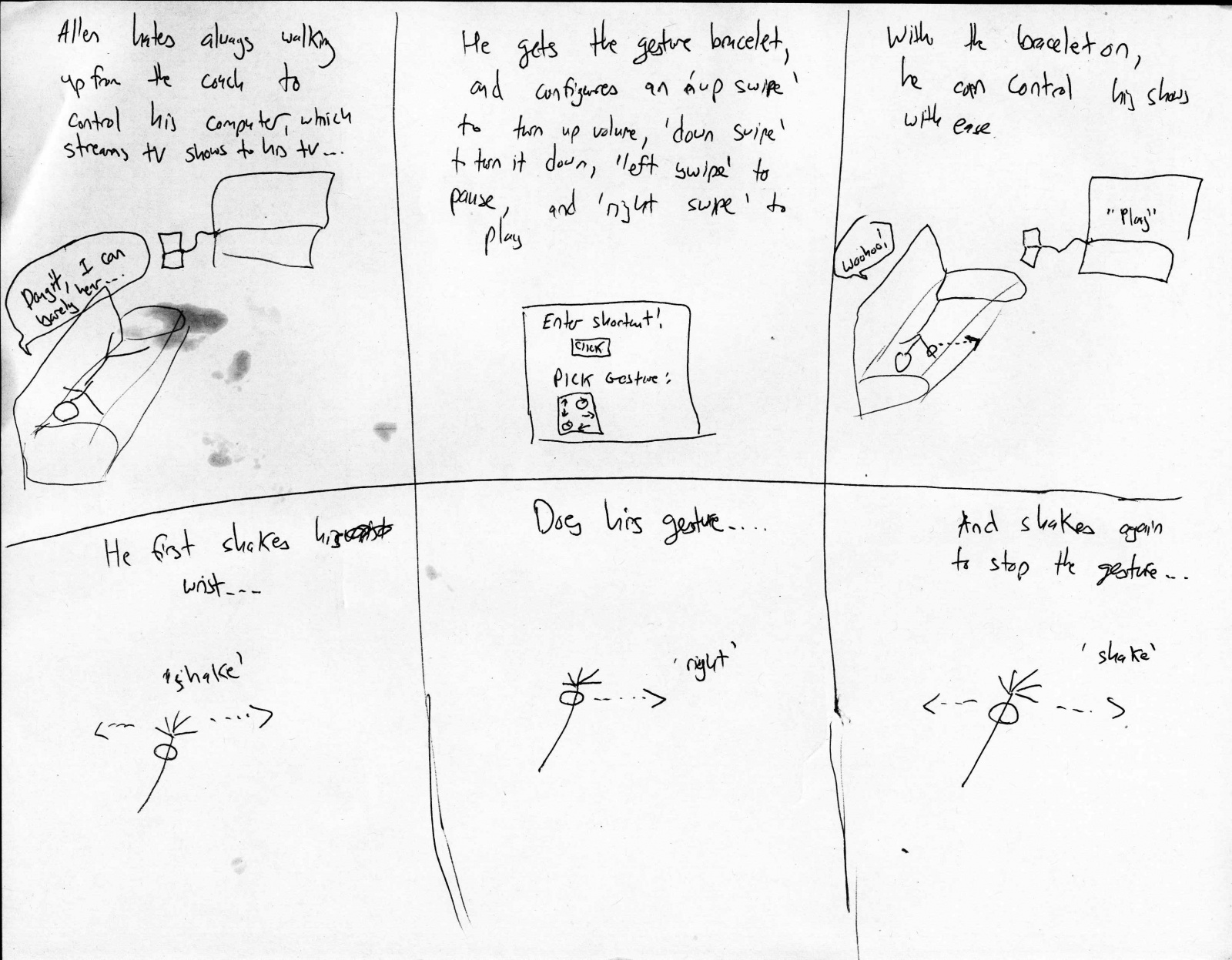

1. Controlling a computer hooked up to a TV without having to get up

This is our easy task. A person uses the gesture bracelet to control a video stream on a laptop hooked up to a TV. They can pause, play, and adjust the volume.

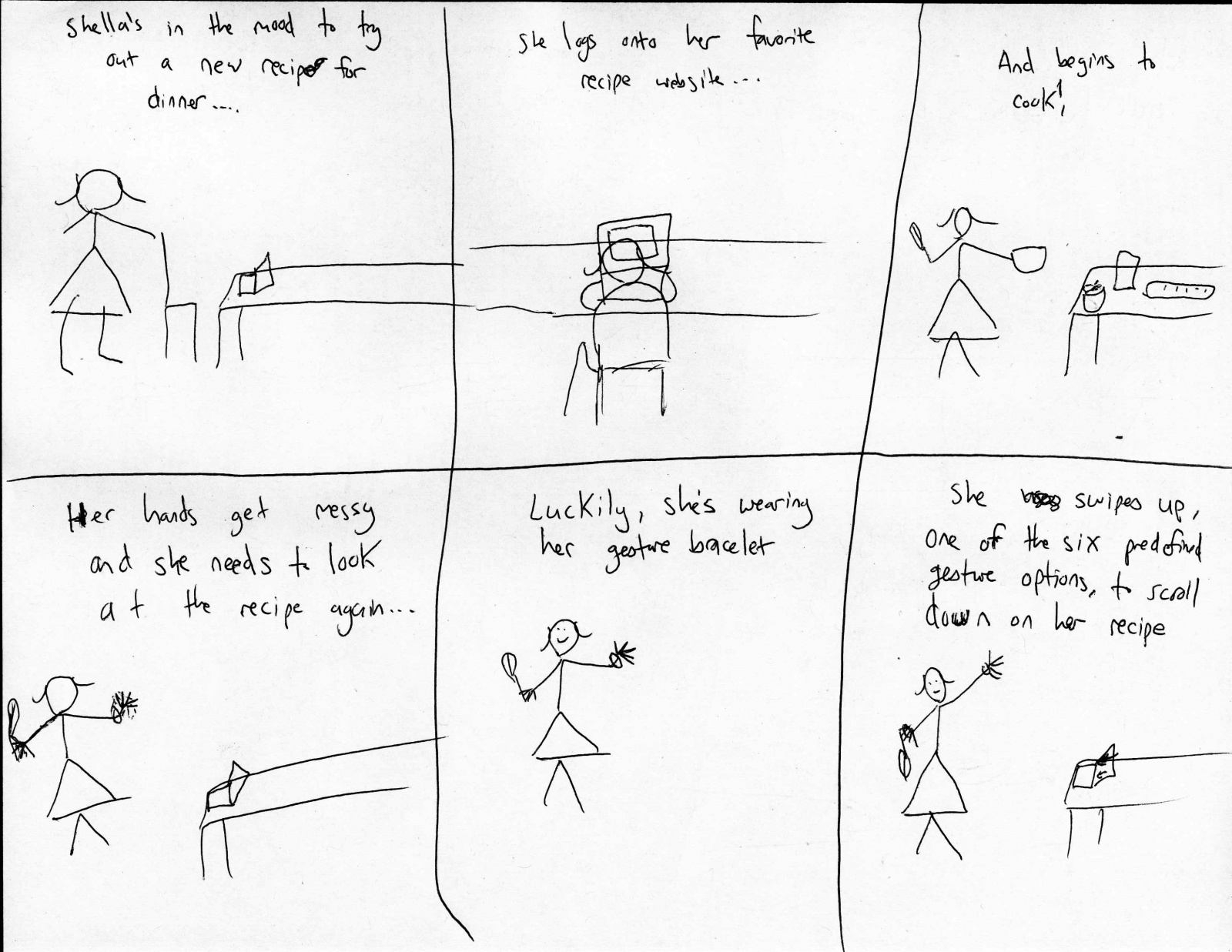

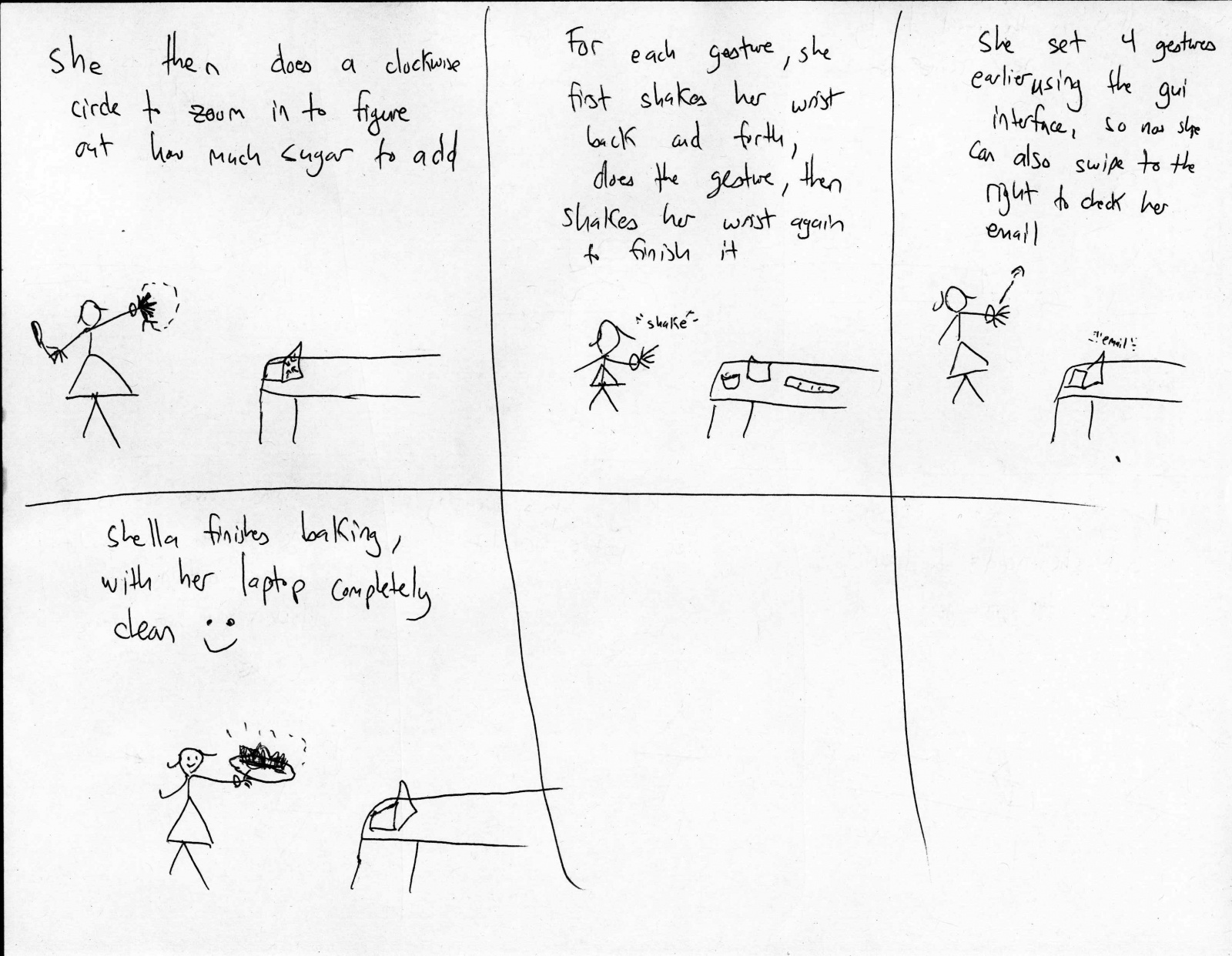

2. Using an online recipe while cooking

This is our medium task. The user follows an online recipe and needs to zoom and scroll up and down while cooking, when their hands are occupied and/or dirty. The gesture bracelet keeps the laptop from getting dirty and makes the experience easier for the experimenting cook, who may be cooking for a family or a group of roommates.

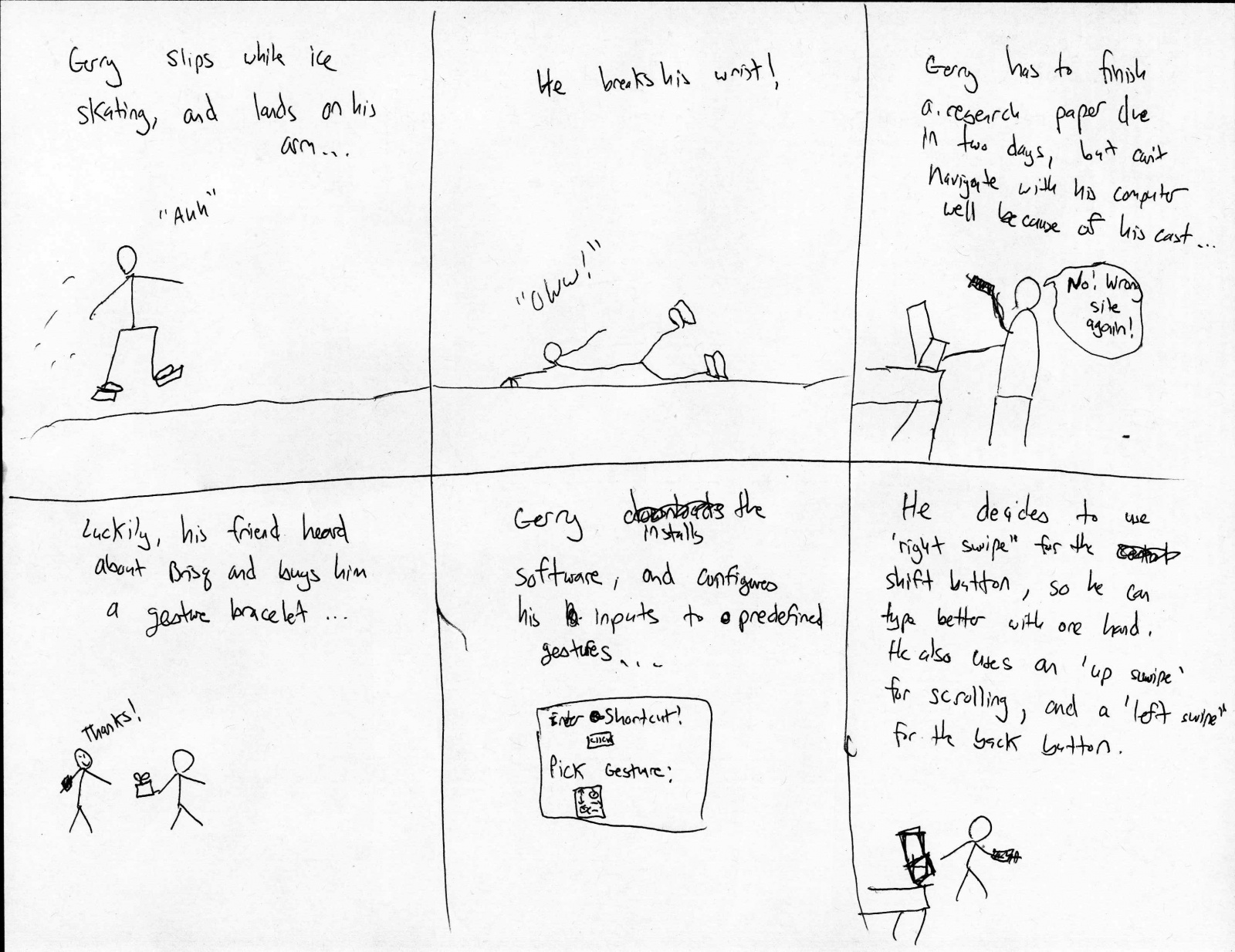

3. Assisting an injured person with their computing experience

This is our hard task, primarily because it is challenging for the user to pick useful shortcuts. The injured user (who can otherwise only use one hand) uses the bracelet to hold the Shift key while typing, scroll through pages, and go back and forward in their web browser.

Our tasks did not change. Instead, aspects of them were adjusted to incorporate revisions to the gesture bracelet. We felt that our tasks did a good job of targeting users, and based on our user tests, users agreed.

Revised Interface:

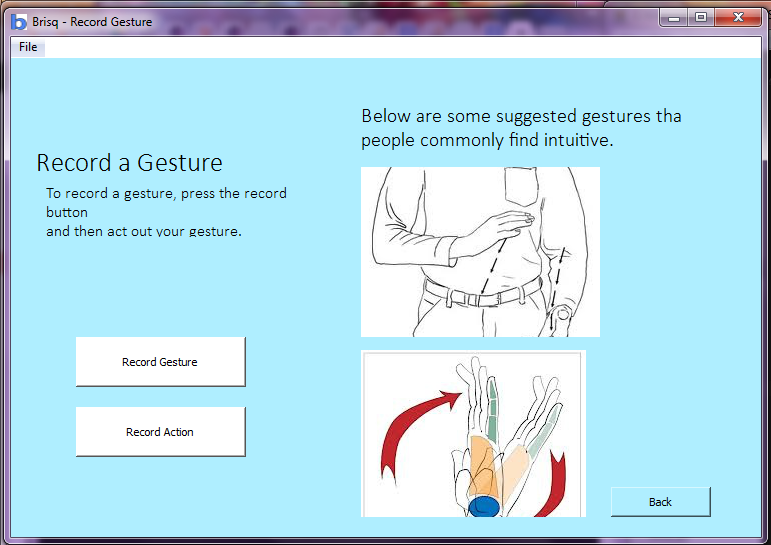

We made two main changes to our interface. First, we realized from user testing that users often had trouble coming up with gestures, so we added predefined gestures that they can choose from. Second, the user now shakes the bracelet quickly from side to side to mark the start and the end of a gesture. This makes recognition easier, since we only have to look for gestures between shakes instead of at all times.

Each task is storyboarded below, updated to include our revisions:
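One way the shake-to-start/stop idea could work is sketched below. This is only an illustration, not our actual recognizer: the function names, the threshold of 1.5, and the minimum of 3 reversals are assumptions, and the input is assumed to be a short window of side-to-side accelerometer readings from the bracelet.

```python
def count_reversals(samples, threshold):
    """Count direction changes among readings strong enough to matter."""
    reversals = 0
    last_sign = 0
    for a in samples:
        if abs(a) < threshold:
            continue  # ignore small movements and sensor noise
        sign = 1 if a > 0 else -1
        if last_sign != 0 and sign != last_sign:
            reversals += 1
        last_sign = sign
    return reversals


def is_shake(samples, threshold=1.5, min_reversals=3):
    """Treat a window as a shake if it swings back and forth enough times.

    threshold and min_reversals are made-up values that would need tuning.
    """
    return count_reversals(samples, threshold) >= min_reversals


class GestureGate:
    """Toggles between 'listening for a gesture' and 'idle' on each shake."""

    def __init__(self):
        self.listening = False

    def feed(self, window):
        # A shake flips the state: the first starts a gesture, the next ends it.
        if is_shake(window):
            self.listening = not self.listening
        return self.listening
```

With a gate like this, the recognizer would only need to examine the readings that arrive while `listening` is true.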

As can be seen, we added a list of prerecorded gestures that the user can choose from. This will be added for our final version.

New Prototype:

We implemented keyboard and mouse recording and playback. A user can input a keyboard sequence or a mouse click sequence and map it to a gesture. Videos are added below. Right now, the sequences work on Windows only, but we hope to support all operating systems eventually.
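The record-and-map idea can be sketched roughly as follows. This is a simplified, platform-independent illustration rather than our prototype code: `ShortcutStore`, its methods, and the `(kind, value)` event format are hypothetical, and the actual capture and playback of input (done through the Windows libraries in our prototype) is replaced here by plain callbacks.

```python
class ShortcutStore:
    """Maps gesture names to recorded input sequences (hypothetical structure)."""

    def __init__(self):
        self._sequences = {}    # gesture name -> list of (kind, value) events
        self._recording = None  # None while not recording

    def start_recording(self):
        self._recording = []

    def on_event(self, kind, value):
        """Called once per captured event, e.g. ("key", "ctrl+w")."""
        if self._recording is not None:
            self._recording.append((kind, value))

    def stop_and_map(self, gesture):
        """Finish recording and attach the sequence to a gesture name."""
        self._sequences[gesture] = self._recording or []
        self._recording = None

    def play(self, gesture, send):
        """Replay a gesture's events through send(kind, value), in order."""
        for kind, value in self._sequences.get(gesture, []):
            send(kind, value)
```

In use, an input hook would call `on_event` for every key press or click between `start_recording()` and `stop_and_map("some gesture")`, and `send` would be whatever function emits the key or click to the operating system.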

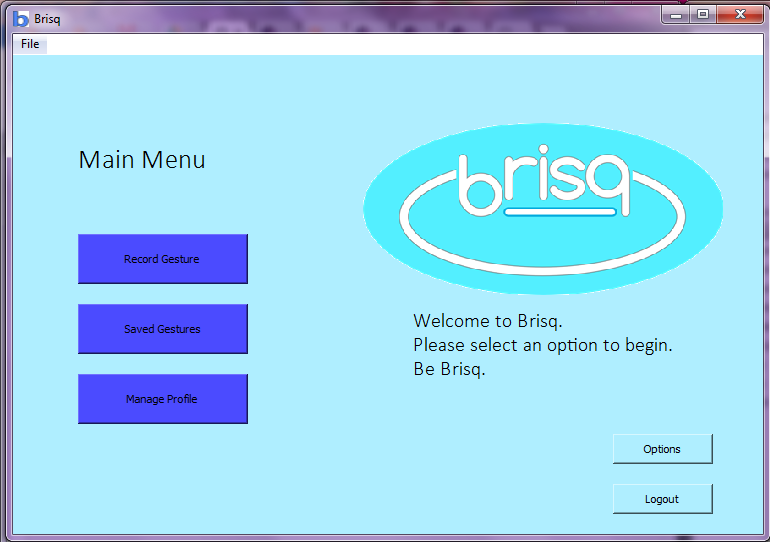

We also made a GUI for the program. It is a template for now, and we will run our code through it for the final project. The GUI is pictured below.

We left gesture recognition out of this prototype, since wizard-of-oz recognition lets us get good feedback from users without it: a group member will type a number into the terminal when the user performs a gesture, triggering the matching computer shortcut. Our GUI is also not finished, so we will use a command-line interface for user testing. This lets us simulate all of the system's functionality in a way that is believable and close to how it would actually perform. Because we were time constrained, we focused our efforts on recording keyboard and mouse input, playing it back, and making a believable system for user testing. We are able to get data from the bracelet into Python, where we will do the gesture recognition, and we will work on having this and our full GUI ready for our final demonstration.
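A wizard-of-oz loop like the one described might look roughly like this. It is a minimal sketch under assumptions: the function name, the `q` quit command, and the numbered shortcut table are illustrative, and `trigger` stands in for whatever actually plays back the recorded shortcut.

```python
def wizard_loop(shortcuts, read_line=input, trigger=print):
    """Fire the shortcut whose number the hidden operator types.

    shortcuts: dict mapping a number to a shortcut name (hypothetical format).
    read_line: returns the next typed line; defaults to the terminal.
    trigger:   called with the shortcut name; defaults to printing it.
    """
    fired = []
    while True:
        entry = read_line().strip()
        if entry == "q":  # assumed quit command
            break
        try:
            number = int(entry)
        except ValueError:
            continue  # ignore anything that is not a number
        if number in shortcuts:
            trigger(shortcuts[number])
            fired.append(shortcuts[number])
    return fired
```

For example, `wizard_loop({1: "pause", 2: "volume up"})` would wait at the terminal, and typing `1` as the user performs the pause gesture would fire the pause shortcut. The returned list doubles as a simple log of the test session.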

The external libraries we used were pyHook, pywin32, and autopy.

Video/Images:

Logging computer input:

Emulating user output: