Basic Information (a,b,c)

Group Number: 4

Group Name: TFCS

Group Members: Farhan, Raymond, Dale, Collin

Project Description: We are building a hardware platform that receives and tracks data from sensors that users attach to objects around them, and sends users notifications, for example to help them build and reinforce habits.

Tasks (d,e)

For our easy task, we plan to track trips to the gym using the accelerometer built into our sensor tags. This task should be relatively easy, as the tracker only needs to detect accelerometer motion to trigger an event. For our medium task, we will track a user’s medication usage by tagging a pill box. This task is of medium difficulty because only certain changes in measured data actually correspond to task-related events; we have to filter our data to find out when events have really occurred. Finally, for our hard task, we hope to track the user’s reading habits by tagging textbooks. This will be the hardest task for us to implement because of the complexity of pairing a magnet and magnetometer on an arbitrary book in a way that is intuitive to the user. We also have to filter our data to trigger events only in the appropriate cases, just as in our medium task.

Our team has left these tasks fundamentally unchanged since P3. We found in P4 that user complaints focused almost exclusively on interface design and aesthetics; the overall use case received promising feedback from users. Since our initial idea of tracking user habits remained the same, we kept the well-specified user interactions we had been using.

Revised Interface (f)

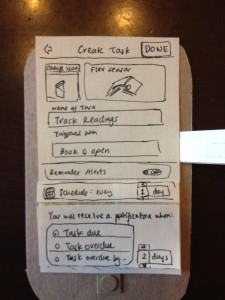

In our P4 user test, we found that users had difficulty grasping the complexity of our notifications system. We proposed a simpler model: a countdown timer that is reset whenever the user interacts with an object. For P5, we prototyped such a system, both because we anticipated that it would be interesting to test with users in P6 and because it is technically simpler while still getting us to a state where we can start tracking tasks. As a result, we removed most adjustable controls, so that a user chooses only a task name and a task interval for each sensor to monitor. This has also allowed us to dedicate more screens to walking the user through configuring the system.
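The countdown model can be sketched in a few lines of Python. This is only an illustration of the idea, not our app code: the class name `TaskTimer` and its fields are hypothetical, chosen to mirror the two settings a user picks (task name and task interval).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class TaskTimer:
    """Countdown for one tagged object (all names are illustrative)."""
    task_name: str
    interval: timedelta
    last_reset: datetime

    def reset(self, now: datetime) -> None:
        # Called whenever the sensor tag reports an interaction.
        self.last_reset = now

    def remaining(self, now: datetime) -> timedelta:
        # Time left before the task counts as overdue (never negative).
        return max(self.last_reset + self.interval - now, timedelta(0))

    def is_overdue(self, now: datetime) -> bool:
        # The countdown has run out if a full interval has elapsed.
        return now - self.last_reset >= self.interval
```

A timer created with a 24-hour interval reports overdue once a day passes without an interaction, and calling `reset` starts the countdown again.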

The new storyboard for the application is as follows:

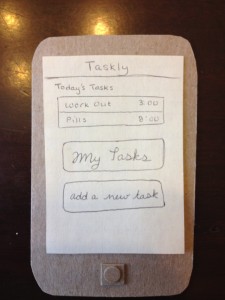

The user begins on the home screen, which shows updates on their tasks. From here, the user can go to “My Tasks” or add a new task.

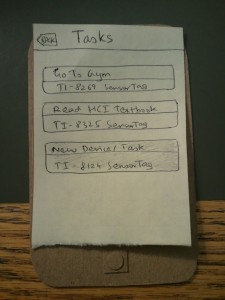

The “My Tasks” screen shows all the tasks that the user has previously configured, as well as any new devices discovered in the vicinity.

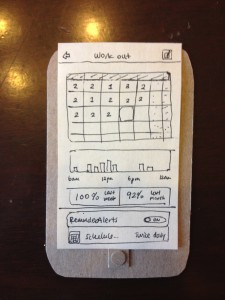

If the user clicks on a previously set-up task, the page shows the user statistics on the activity of that task.

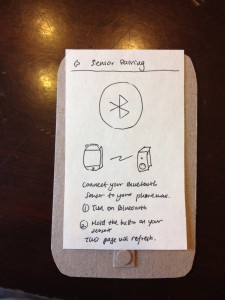

If the user clicks on a previously unseen tag, the sensor pairing screen guides the user through setting up the tag.

The three tasks diverge at only one screen, where the user chooses the preset task that they wish to accomplish. This gives us a unified interface across all tasks, which improves usability.

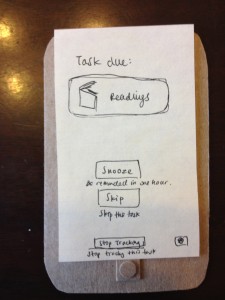

The notification part of the application will be based on APNs (Apple Push Notification service), the system Apple provides for application developers to send notifications to users. This relies on our web server, which will send Apple's web service a request containing the text to be shown to the user.
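As a rough illustration, the request body our server sends could be built as follows. APNs expects a JSON payload with an `aps` dictionary; the function name, the message wording, and the `hours_overdue` parameter below are assumptions made for this sketch, and the code only builds the payload rather than contacting Apple.

```python
import json


def build_apns_payload(task_name: str, hours_overdue: int) -> str:
    """Return a JSON body for a simple alert notification.

    The message text is hypothetical; only the "aps" / "alert" / "sound"
    keys follow the APNs payload format.
    """
    message = f"Reminder: '{task_name}' is {hours_overdue}h overdue."
    payload = {"aps": {"alert": message, "sound": "default"}}
    return json.dumps(payload)
```

The server would send this body to APNs together with the device token stored for the user's phone.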

As the screens demonstrate, we have simplified the interface of the app and made it very easy to set up tasks and connect new sensor tags.

Overview and Discussion (g)

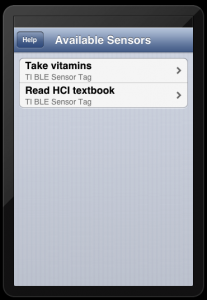

For our first working prototype, we created an iOS application that connects to our sensor tags, logs when a task is performed, and stores data from the sensors in a database we have set up, using a JSON API we designed. (For simplicity, we trigger task-performed events whenever the tag’s accelerometer/gyroscope registers values above a certain threshold, without doing any complex filtering.) These events encompass the core functionality of our app and allow us to test each of the specified tasks. The GUI is as follows:

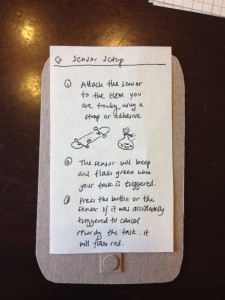

- The main page displays all the sensors in the system. If the user previously set up a task to track and associated it with a specific sensor, then when the user reconnects that sensor, our application will recognize the tag and display the previously tracked task (e.g. a gym tracking tag). (Image 1)

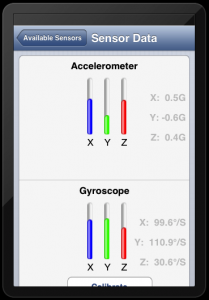

- Clicking on the task brings the user to a statistics page which displays data being read from the sensor tag. At the same time, whenever a task is recorded, the app sends our server a request specifying the task that was logged. (Image 2)

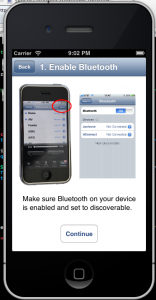

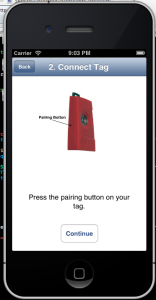

- If the user is connecting a new sensor tag (Image 1), the application will display a setup screen which explains how to connect the tag. This is a four-screen walkthrough on how to set up the tag to pair with the phone (Images 4, 5, 6). Currently the tasks are hard-coded in the app; in the final version, users will be able to add the specific task they want to accomplish with their tag.

When a user triggers a sensor tag attached to an object, our iPhone app (which may run in the foreground or background) forwards the data to our server, which parses the incoming data and stores the date/time and the sensor that triggered the event in a database. By handling reminders on the server rather than in the app, we allow ourselves to take care of them behind the scenes by manually texting the user (almost but not quite a “Wizard of Oz” tactic, since we never need to ask the user to suspend disbelief). This is shown at the end of our demo video. The data is stored in a highly normalized format, so that a simple database query `SELECT sensors.taskname, sensors.apns_token FROM sensors JOIN events WHERE events.datetime < now() - sensors.interval...` will retrieve all sensors which have not been triggered in due time. Thus, the server can easily send the user’s phone a push notification to alert them of overdue tasks. Keeping this logic on the server makes our prototype easier to change, allows us to deploy less code to the user, and reduces the need for us to learn iOS (none of us had done Objective-C or iPhone programming at the beginning of this class).
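To make the overdue-task lookup concrete, here is a small self-contained sketch using Python's built-in `sqlite3` module. The schema is an assumption: only the column names `taskname`, `apns_token`, and `datetime` come from the query above, while `interval_s`, `sensor_id`, the use of integer timestamps in seconds, and the grouping on each sensor's most recent event are illustrative choices rather than a description of our actual database.

```python
import sqlite3

# Hypothetical schema: timestamps and intervals are plain seconds.
SCHEMA = """
CREATE TABLE sensors (id INTEGER PRIMARY KEY, taskname TEXT,
                      apns_token TEXT, interval_s INTEGER);
CREATE TABLE events (sensor_id INTEGER REFERENCES sensors(id),
                     datetime INTEGER);
"""

# A sensor is overdue when its most recent event is older than
# (now - interval). Sensors with no events at all are not returned.
OVERDUE_QUERY = """
SELECT s.taskname, s.apns_token
FROM sensors AS s
JOIN events AS e ON e.sensor_id = s.id
GROUP BY s.id, s.taskname, s.apns_token, s.interval_s
HAVING MAX(e.datetime) < ? - s.interval_s
ORDER BY s.id
"""


def overdue_tasks(conn, now):
    """Return (taskname, apns_token) rows for overdue sensors."""
    return conn.execute(OVERDUE_QUERY, (now,)).fetchall()


def demo():
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany(
        "INSERT INTO sensors VALUES (?, ?, ?, ?)",
        [(1, "Gym", "token-a", 86400), (2, "Pills", "token-b", 3600)],
    )
    conn.executemany(
        "INSERT INTO events VALUES (?, ?)",
        [(1, 1000), (2, 1000), (2, 90000)],
    )
    # At now=91000 only the gym sensor has gone a full interval untouched.
    return overdue_tasks(conn, now=91000)
```

Each returned row carries the token needed to address the push notification, so the reminder job needs no further lookups.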

For this prototype, we do not allow users to add their own custom tasks or to edit tasks. We found this would not help us test how a user would typically interact with the application (for P6); it would also have hindered progress on getting core utilities working. We also do not display notifications or histograms of all of the data points recorded by the sensors. We anticipate using standard data visualization libraries to graph and display that data for the user. These omissions involve little in the way of interface or interaction design, and clearly have ready-made solutions which we can draw upon; by not building those into the current prototype, we give ourselves additional flexibility as we continue to build the app’s user interface. Eventually, we would want to make data transmission as asynchronous as possible: the iPhone would store triggered events while it has no Internet connection and push them to the server later, and possibly the sensor tag itself would store when it has been triggered and push those events to the phone asynchronously.
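The store-and-forward behavior described above is not part of the current prototype, but the idea can be sketched as a simple queue. The class `EventQueue` and the `send` callback are hypothetical; `send` stands in for whatever call uploads one event and reports whether it succeeded.

```python
from collections import deque


class EventQueue:
    """Buffers triggered events until they can be delivered (illustrative)."""

    def __init__(self, send):
        # send(event) -> bool: True if the server accepted the event.
        self._send = send
        self._pending = deque()

    def record(self, event):
        # Always store first, then try to deliver everything in order.
        self._pending.append(event)
        self.flush()

    def flush(self):
        """Try to deliver queued events oldest-first; return how many were sent."""
        sent = 0
        while self._pending:
            if not self._send(self._pending[0]):
                break  # Still offline: keep the event and stop trying.
            self._pending.popleft()
            sent += 1
        return sent

    def pending(self):
        return len(self._pending)
```

Calling `flush` again when connectivity returns delivers the backlog in the order the events were triggered.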

Citations: We used the reference iOS app provided by Texas Instruments, which implements connecting to a Bluetooth Low Energy (BLE) device, collecting data, and displaying it. We replaced that application’s GUI with our own and wrote code to filter and send relevant sensor data to a remote HTTP server.
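For readers who want a feel for the filtering step, the following Python sketch shows threshold-based triggering and the kind of JSON body that could be sent to the server. It is not a port of our Objective-C code: the threshold value, the rising-edge check (so that one sustained motion yields one event), and the field names `sensor` and `datetime` are all assumptions for illustration.

```python
import json
import math

THRESHOLD_G = 1.5  # hypothetical trigger level, in units of g


def magnitude(sample):
    """Euclidean norm of one (x, y, z) accelerometer reading."""
    x, y, z = sample
    return math.sqrt(x * x + y * y + z * z)


def detect_events(samples, threshold=THRESHOLD_G):
    """Return the indices where the reading first rises above the threshold."""
    events = []
    above = False
    for i, sample in enumerate(samples):
        now_above = magnitude(sample) > threshold
        if now_above and not above:
            events.append(i)  # Rising edge: a new task-performed event.
        above = now_above
    return events


def event_request_body(sensor_id, timestamp):
    """Serialize one event as JSON (field names are illustrative)."""
    return json.dumps({"sensor": sensor_id, "datetime": timestamp})
```

Each index returned by `detect_events` would correspond to one request built with `event_request_body`.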

Supporting Content (h)

The statistics page shows the data being logged from the sensor for the task. At the same time, logged tasks are sent to the server.

Finally, the user is prompted to press the connect button on the tag, which sets up the connection. This page then redirects back to the sensors page.