a. Your group number and name

Group 16 – Epple

b. First names of everyone in your group

Andrew, Brian, Kevin, Saswathi

c. A one-sentence project summary

Our project is an interface through which controlling web cameras can be as intuitive as turning one’s head.

d. A description of the test method you used. (This entire section should take up no more than roughly 1 page of text, if you were to print the blog with a reasonable font size.) This includes the following subsections:

i. A few sentences describing your procedure for obtaining informed consent, and explaining why you feel this procedure is appropriate. Provide a link to your consent script text or document.

To obtain consent, we gave prospective participants a consent form describing our procedure and the possible privacy concerns. We felt this was appropriate because we intended to record participants’ verbal responses and wanted to ease any concerns that might keep them from interacting honestly.

[LINK]

ii. A few sentences describing the participants in the experiment and how they were selected. Do not include names.

Participants were selected based on how frequently they use video chat to talk to family or friends. From among our friends, we chose people who web chat at least once a week and were comfortable participating in our experiment. All of the selected participants are Princeton undergraduates who met these criteria. All three have family in distant states or countries and web chat with them frequently.

iii. A few sentences describing the testing environment, how the prototype was set up, and any other equipment used.

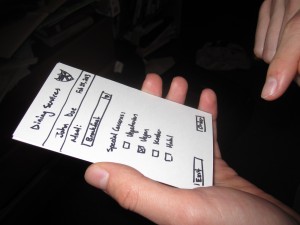

Our prototype uses a piece of cardboard with a square cut out of it as the mobile viewing screen. The user looks through the cut-out square as if viewing the feed from a remote video camera. Through it, the user sees our prototype environment: a room containing the people the user is web chatting with. These are either real people or, in some tasks, printed images of people taped to the walls around the room. The room also contains a mock Kinect, which is simply a decorated cardboard box.

iv. Describe your testing procedure, including the roles of each member of your team, the order and choice of tasks, etc. Include at least one photo showing your test in progress (see above). Provide links to your demo and task scripts.

We divided our work very evenly, and everyone was involved in each part.

Andrew: Worked on script, post, and demo

Brian: Worked on script, post, and demo

Kevin: Worked on script, post, and demo

Saswathi: Worked on script, post, and demo

Demo script:

Hello, you have been invited to test our prototype of an interface for web camera control. The purpose of our interface is to let a user intuitively control a web camera through simple body movements that are tracked by a Kinect. You will be given a mobile screen through which you can view the feed of a web camera. Just imagine that this is an iPad and you are using FaceTime on it. You can move this screen naturally, and the camera view will change accordingly. We will now demo one task so that you can better understand our system. This is Brian. He is my friend, and I am trying to web chat with him. We share many fond memories, but he has a habit of leaving in the middle of our conversations. He is a bit strange like that, but since he is my friend, I bear with him. Sometimes he’ll leave for up to ten minutes to make a PB&J sandwich, but he expects me to keep the conversation going while he makes it. When he does this, I intuitively move the mobile viewing screen to follow Brian around so that he doesn’t become an off-screen speaker. I can then continue the conversation while he stays within the camera’s view.

Task Script 1: Brian gets a PB&J sandwich

The first task is to web chat without requiring your chat partner to sit in front of the computer. With a typical interface, your partner would simply go off screen as soon as he moved, but with our interface you can move the screen to keep looking at and talking to him as he moves. During the task, your partner will move around the room, and you must move the screen to keep him in view while continuing the conversation.

Task Script 2: Brian asks you to find Waldo

The second task is to be able to search a distant location for a person through a web camera.

In person, you might seek out a friend in Frist to start a conversation, but in web chat the best you can do is wait for that friend to come online. We intend to fix this by letting users seek out friends in public spaces by searching with the camera, just as they would in person.

You will play the “Where’s Waldo” game. Various sketches of people are taped to the wall, and you need to look through the screen and move it around until you find Waldo.

Task Script 3: Brian and family, including Bolt the dog!

The third task is to web chat with more than one person on the other side of the web camera.

A common problem with web chats is that even when several people are on the other end, the chat is often limited to a one-on-one experience in which chat partners take turns in front of the web camera. In this task, several people will carry on a conversation with you, and you will be able to see them only through the screen. You can turn the screen to address a particular conversation partner. When you hear an off-screen speaker, you may turn the screen to focus on that person.

e. 1–2 paragraphs summarizing your results, as described above. Do not submit your full logs of critical incidents! Just submit a nicely readable summary.

Our prototype was simple enough that users quickly learned to use it with only minimal verbal instruction and demonstration. Overall, users responded positively when asked whether Portal would be a useful technology for them.

Users also raised some issues specific to individual tasks. In the first task, we asked users to keep the person on the camera side in view as he ran around. One user commented that this was a bit strange and tedious, and that it might be better to have the camera track the moving person automatically. In the second task, we asked users to find a picture of “Waldo” hidden somewhere in the room among other pictures of people. Two users noted that our prototype environment did not accurately represent a crowd of people in a room, since pictures taped to a wall cannot easily capture factors such as depth perception, crowd density, and people hidden behind other people. In the third task, we asked users to move the screen to bring an off-screen speaker into view. Two users noted that this was easy with our prototype because they could use their peripheral vision and binaural hearing to “cheat” and determine which way to turn to face an off-screen speaker. However, these peripheral vision and audio cues will not be available in the working implementation of the product, so this is another inaccuracy of our prototype. These users did note that they could still pick up on the movements of the person they were watching to decide which direction to turn.

f. 1–2 paragraphs discussing your results, as described above. What did you learn from the experiment? How will the results change the design of your interface? Was there anything the experiment could not reveal?

We obtained a great deal of useful input about our proposed design. For example, we found that a device like an iPad would work well as the mobile screen, because users could rotate it to fit more horizontal or vertical space into view. We may not implement this in our prototype, but it would be worthwhile if we chose to mass-produce the product. Another possible change (depending on time constraints and difficulty) is adding support for 3D sound input. We see a possible need for this because users mentioned that audio cues helped them decide which way to turn to face off-screen speakers. 3D sound would let users rely on their binaural hearing to locate an off-screen speaker easily and precisely. We could also give users suggestions on which way to turn the screen, based on sound detected on the camera side. These possible changes, however, are nonessential.

Because of its limited fidelity, the experiment sometimes let users “cheat” on tasks (for example, by using peripheral vision when finding Waldo), which limited the accuracy of our feedback. As a result, the experiment did not reveal how users would perform without sound and peripheral vision cues to help them turn the camera in the correct direction. It also did not accurately represent how users would search for friends in a crowd, because of the inherent limitations of using paper printouts in place of people. Finally, we could not simulate a rotating camera in front of users, so we did not see how users would react to a camera in their room being controlled remotely. Overall, however, the experiment revealed no fundamental flaws in our system design that would stop us from building a higher-fidelity prototype.

g. A 1–2 paragraph test plan for subsequent testing, or a statement that you are ready to build a higher-fidelity prototype without further testing.

We are ready to build a higher-fidelity prototype without further testing. We feel we have received sufficient input from users, and further testing of our low-fidelity prototype is unlikely to reveal anything that would require major usability changes. Many of the main points users raised concerned the inaccuracy of the prototype rather than any major, fundamental flaw in our system design that would prevent us from moving on to a higher-fidelity prototype. The flaws users pointed out were mainly either cosmetic and nonessential, or could only be evaluated accurately with a higher-fidelity prototype.