Group 6 (GARP): The GaitKeeper

Group members:

Phil, Gene, Alice, Rodrigo

One sentence description:

Our project uses a shoe insole with pressure sensors to measure and track a runner’s gait, offering opportunities for live feedback and detailed post-run analysis.

Links to previous blog posts:

P1 – http://blogs.princeton.edu/humancomputerinterface/2013/02/22/team-garp-project-1/

P2 – http://blogs.princeton.edu/humancomputerinterface/2013/03/11/group-6-team-garp/

P3 – http://blogs.princeton.edu/humancomputerinterface/2013/03/27/gaitkeeper/

P4 – http://blogs.princeton.edu/humancomputerinterface/2013/04/08/gaitkeeper-p4/

P5 – http://blogs.princeton.edu/humancomputerinterface/2013/04/21/p5-garp/

P6 – http://blogs.princeton.edu/humancomputerinterface/2013/05/06/p6-the-gaitkeeper/

Pictures and Videos with Captions:

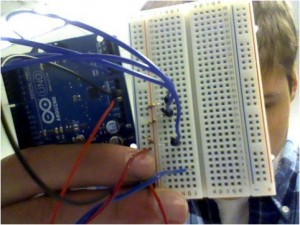

Pictures of the prototype – https://drive.google.com/folderview?id=0B4_S-8qAp4jyYk9NSjJweXBkN0E&usp=sharing . These photos illustrate the basic use of the prototype, as well as its physical form factor. You can see from them how the insole and wires fit in the shoe, and how they fit to the user’s body. This was designed to make the product have a minimal effect on the user’s running patterns, so these aspects of the user interaction are especially important.

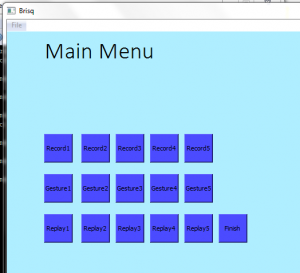

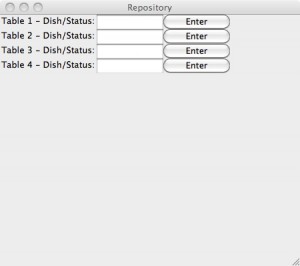

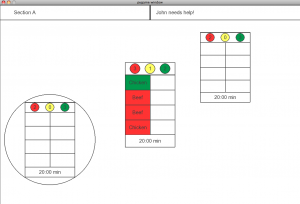

Video of computer-based user interface – Computer-Based UI. This video (with voiceover) demonstrates the use of our user interface for saving and viewing past runs.

Video of live feedback based on machine learning – Live Feedback from Machine Learning. This video (also with voiceover) demonstrates the live feedback element of the GaitKeeper, which tells the user if their gait is good or not.

Changes since P6:

- Slightly thicker insole with a stronger internal structure – Thickness did not appear to be an issue for the testers, since the insole was made of a single sheet of paper. We observed some difficulty in getting the insole into the shoe, however, and felt that making it slightly thicker would be useful in solving this issue.

- Laminated insole – One of our testers had previously run that day, and his shoes were still slightly sweaty. When he took off the shoe, the sweat from his shoe and sock made the insole stick to him, and the insole tore slightly as he removed it from his foot. We noticed that the taped portion did not stick, and felt that making the entire insole from a similar material would solve this issue.

- Color changes in the UI heatmap – One of our testers noted that he found the colors in the heatmap to be visually distracting and different from traditional heatmaps. This issue was corrected by choosing a new color palette.

-

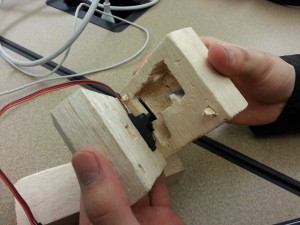

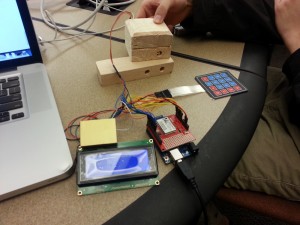

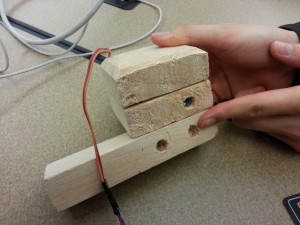

Enhanced structural support for the Arduino on the waist – After user testing, we found significant wear and tear on the arduino box which is attached to the user with a waistband. This was reinforced to make it more durable. It was made slightly larger, which we felt was not an issue since users indicated that they found the previous implementation acceptably small and this change did not significantly affect the form factor.

- Ability to run without USB connection – This was an element which we had originally planned for the product, but were not able to fully execute for P6. We used Wizard of Oz techniques at the time, and this feature replaces them. Data can now be imported from the Arduino into the computer for analysis after a run. Unfortunately, live feedback still requires a computer connection, but with further iteration it could possibly be made mobile as well.

- Wekinator training of live feedback during running – During testing, this was a Wizard of Oz element, where the lights went on and off for predetermined amounts of time to simulate feedback from the system. This was replaced with true live feedback, which is informed by the Wekinator’s machine learning abilities.

- Ability to save and view saved data in the UI – User testing was done with a simulated run from our own testing data, rather than from actual saved runs. We have added the ability for users to save and view their own data imported from the Arduino.

- Ability to import Arduino data – User testing relied upon user simulation of the data upload process. This is now fully implemented, and allows users to see the results of their running.
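As an illustration of the heatmap color change listed above, the following is a minimal sketch of mapping a pressure reading onto a conventional blue-to-red ramp. It is written in Python for brevity and is not our Processing code; the function name `pressure_to_rgb`, the color stops, and the assumption that readings are 10-bit analog values (0 to 1023) are all hypothetical choices made for this example.

```python
# Hypothetical color stops for a conventional heatmap ramp:
# blue (low pressure) -> green -> yellow -> red (high pressure).
STOPS = [
    (0.0, (0, 0, 255)),
    (1 / 3, (0, 255, 0)),
    (2 / 3, (255, 255, 0)),
    (1.0, (255, 0, 0)),
]


def pressure_to_rgb(reading, max_reading=1023):
    """Map a raw sensor reading to an (r, g, b) tuple.

    max_reading=1023 assumes a 10-bit analog input; readings outside
    the range 0..max_reading are clamped.
    """
    t = min(max(reading / max_reading, 0.0), 1.0)
    # Find the pair of stops that brackets t and interpolate linearly.
    for (t0, c0), (t1, c1) in zip(STOPS, STOPS[1:]):
        if t <= t1:
            f = (t - t0) / (t1 - t0)
            return tuple(round(a + f * (b - a)) for a, b in zip(c0, c1))
    return STOPS[-1][1]
```

Each sensor position on the insole outline would then be drawn in the returned color, so that low-pressure regions read as cool colors and high-pressure regions as warm ones.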

Explanation of goal and design evolution:

We began the semester with very little information about how a runner’s gait is actually assessed, but with the understanding that it was generally based on direct observation by a trained professional. We originally planned to build a device which bridged the gait analysis demands of store employees, medical professionals, and runners themselves. Over time, we realized that medical professionals had a very different set of needs, so we decided to focus on just store employees and frequent runners. We considered both user groups to be well informed about running, and expected them to use the product to observe gait over the course of a run for technique modification and product selection. We then modified our goals to better serve those user groups by focusing on the post-run analysis features, such as the ability to save and access old data.

Also, at the beginning of the semester, we had wanted to design the device to provide live feedback. Over time, we came to realize that meaningful live feedback required a machine learning tool like Wekinator. As a result, we were forced to maintain a computer connection for live feedback, which was a change from the fully mobile vision we had at the beginning. This has slightly changed our vision for how the live feedback element of the product would be used; given the tethering requirement, live feedback would probably be most useful in a situation where the runner is on a treadmill and is trying to actively change their gait. Other changes in design included a remake of the pressure-sensing insole, which our testers originally found to be sticky, difficult to place in a shoe, and overly fragile. We moved from a paper-based structure to a design of mostly electrical tape, which increased durability without a significant cost in thickness.
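To illustrate why a learned model is attractive here compared with hand-written rules, the sketch below classifies a pressure sample by comparing it with labeled examples and picks which of two lights to show. This is not how the Wekinator works internally: it swaps in a plain 1-nearest-neighbor rule purely as an illustration. The four-sensor layout, the example readings, the labels, and the light colors are all made up for this sketch and are not data from our testing.

```python
import math

# Hypothetical, made-up training examples: normalized readings (0..1)
# from four assumed sensor positions (heel, arch, ball, toe), each
# labeled by hand as a "good" or "bad" step.
EXAMPLES = [
    ((0.2, 0.3, 0.8, 0.5), "good"),
    ((0.3, 0.2, 0.7, 0.6), "good"),
    ((0.9, 0.4, 0.2, 0.1), "bad"),
    ((0.8, 0.5, 0.3, 0.1), "bad"),
]


def nearest_label(sample, examples=EXAMPLES):
    """Return the label of the example closest to `sample` (1-nearest-neighbor)."""
    closest = min(examples, key=lambda ex: math.dist(sample, ex[0]))
    return closest[1]


def light_for(sample, examples=EXAMPLES):
    """Choose which of the two feedback lights to turn on (colors assumed)."""
    return "green" if nearest_label(sample, examples) == "good" else "red"
```

The appeal of this style of approach is that changing what counts as a good gait only requires recording new labeled examples, rather than rewriting threshold rules by hand.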

Critical evaluation of project:

It is difficult to say whether this product could become a useful real-world system. In testing, our users often found the product to be interesting, but many of the frequent runners had difficulty in really making use of the data. They were able to accurately identify the striking features of their gait, which was the main information goal of the project. One thing we observed, however, was that there were not many changes in gait between runs, with most changes occurring due to fatigue or natural compensation for small injuries. That led us to conclude that the product might be better suited for the running store environment, where new users are seen frequently. Given the relatively small number of running stores, we believe the most promising market for this product would be small, and focused on the post-run analysis features. Live feedback was much less important to the running store employees, who were willing to tolerate a slight delay to get more detailed results. We found that this space enjoys using technology already (such as slow motion video from multiple angles), and was quite enthusiastic about being able to show customers a new way to scientifically gather information about their gait and properly fit them for shoes. Their main areas of focus on the product were reusability, the ability to fit multiple shoe sizes, accuracy of information, and small form factor.

We feel confident that further iteration would make the product easier to use and more focused on the running store employee user group, since they appear to be the ones most likely to purchase the product. That being said, we are unsure that this device could progress beyond being a replacement for existing video systems. Despite several conversations with running store employees, including contextual interviews while they met with actual customers, we were unable to identify any real information uses outside of the ones currently performed by the visual video analysis. While our product is more accurate and takes a more scientific approach, achieving adoption would likely be a major hurdle due to the money such stores have already invested in video systems.

While the live feedback functionality is quite an interesting element of the project, it seems to have a less clear marketable use. The runners we spoke to seemed to feel that live feedback was an interesting and cool feature, but not one that they would be willing to pay for. Most (before testing) felt that their gait did not change significantly while running, and in surveys indicated that they already use a variety of electronics to track themselves while running. These products include GPS, pedometers, and Nike+. The runners consistently rated information feedback such as distance, location, pace, and comparison to past runs as more important than gait, running style, and foot pressure. They also indicated an unwillingness to add more electronic devices to their running, which already often involves carrying a large phone or MP3 player. As a result, one avenue which has some potential would be integration into an existing system. The most likely option in this field would probably be Nike+, which is already built around a shoe. Designing a special insole which communicates with the shoe (and through it, the iPod or iPhone) would be a potential way to implement the gait feedback device as a viable product for sale. Clearly, this would raise significant issues with licensing and product integration (with both Nike and Apple), but otherwise there does not appear to be a real opportunity for live feedback. As a result, we concluded that the product’s future would almost certainly require a stronger focus on the running store employee demographic.

Future steps if we had more time:

With more time, we would devote much of it to training the Arduino for live feedback. Our users told us several times that the two-light system was not enough to really guide changes in gait, especially given that many changes in running style happen subconsciously over time as the runner gets tired. The system only indicated that a problem existed, not how to fix it. This could be solved through integration into a system like Nike+ or other phone apps, which would allow a heatmap GUI to give directions to the runner. Before implementing such a system, we would like to speak more with runners about how they would interact with this format of live feedback, and whether they would want it at all. Following that, we would do more testing on the most effective ways to convey problems and solutions in gait through a mobile system.

Although live feedback is likely the area which has the most opportunity for improvement in our prototype, our understanding of the targeted users indicates a stronger demand for the analysis portion for use in running stores. Therefore, we would likely focus more on areas such as reusability and durability, to ensure that multiple users of different characteristics could use the product. Furthermore, we would revisit the idea of resizing, which is currently done by folding the insole. It is possible that multiple sizes could be made, but resizing is a more attractive option (if it is feasible) because it allows running stores to purchase only one insole. This would likely involve more testing along the lines of what we already completed: having users of different shoe sizes attempt to use the product, either with or without instructions on resizing. Additionally, for the running store application, we would seriously consider limiting the number of wires running along the user’s leg. This could be done using a Bluetooth transmitter strapped to the ankle, or through a wired connection to a treadmill. While this is a significant implementation challenge, a feasible solution likely exists. Lastly, we found the machine learning tools to be quite interesting, and would also consider using a veteran employee’s shoe recommendations to train our device to select shoes for the runner. This would allow the store to hire less experienced employees and save money. Such a system would also likely require testing, in which we would gain a better understanding of how this would affect the interaction between the store employee and customer. It would be very interesting to see if such a design undermined the authority of the employee, or if it made the customer more likely to buy the recommended shoe.

Source code and README zip file:

https://docs.google.com/file/d/0B4_S-8qAp4jyZFdNTEFkWnI2eG8/edit?usp=sharing

Third-party code list:

- Wekinator (http://wekinator.cs.princeton.edu/) was used for machine learning in the live feedback on the device. We used it to help train the Arduino to give accurate feedback about running style, rather than creating our own heuristic rules.

- ControlP5 (http://www.sojamo.de/libraries/controlP5/) was a Processing library we used for creating the GUI. It included simple commands for buttons, colors, etc. which were used to make the GUI look more polished and professional.

- File chooser (http://wiki.processing.org/w/Open_Files_through_a_GUI) was used for opening files from EEPROM.

- Processing Arduino to OSC example sketch (http://liamtmlacey.blogspot.com/2011/03/arduino-to-maxmsp-via-osc-guide-and.html) was used for the Wekinator integration.
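For readers unfamiliar with OSC, the sketch below shows roughly what a bridge like the one listed above has to do: pack a list of sensor readings into an OSC message and send it over UDP. It is written in Python rather than Processing and is not taken from our source. The message layout follows the OSC 1.0 format; the default port `6448` and address `/wek/inputs` are assumptions based on recent Wekinator releases and may not match the version we used, and the function names are hypothetical.

```python
import socket
import struct


def osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc_floats(address: str, values) -> bytes:
    """Build an OSC message carrying `values` as big-endian 32-bit floats."""
    type_tags = "," + "f" * len(values)
    payload = struct.pack(f">{len(values)}f", *values)
    return osc_pad(address.encode("ascii")) + osc_pad(type_tags.encode("ascii")) + payload


def send_readings(readings, host="127.0.0.1", port=6448, address="/wek/inputs"):
    """Send one set of readings over UDP (host, port, and address are assumed defaults)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(encode_osc_floats(address, readings), (host, port))
```

Calling `send_readings([0.2, 0.3, 0.8, 0.5])` once per sample would stream the insole readings to whatever is listening on that port.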

PDF Demo Materials:

https://docs.google.com/file/d/0ByIgykOGv7CCbGM3RXFPNTNVZjA/edit?usp=sharing