Group 17 – BlueCane

Members: Joseph Bolling, Jacob Simon, Evan Strasnick, Xin Yang Yak

Project Goal

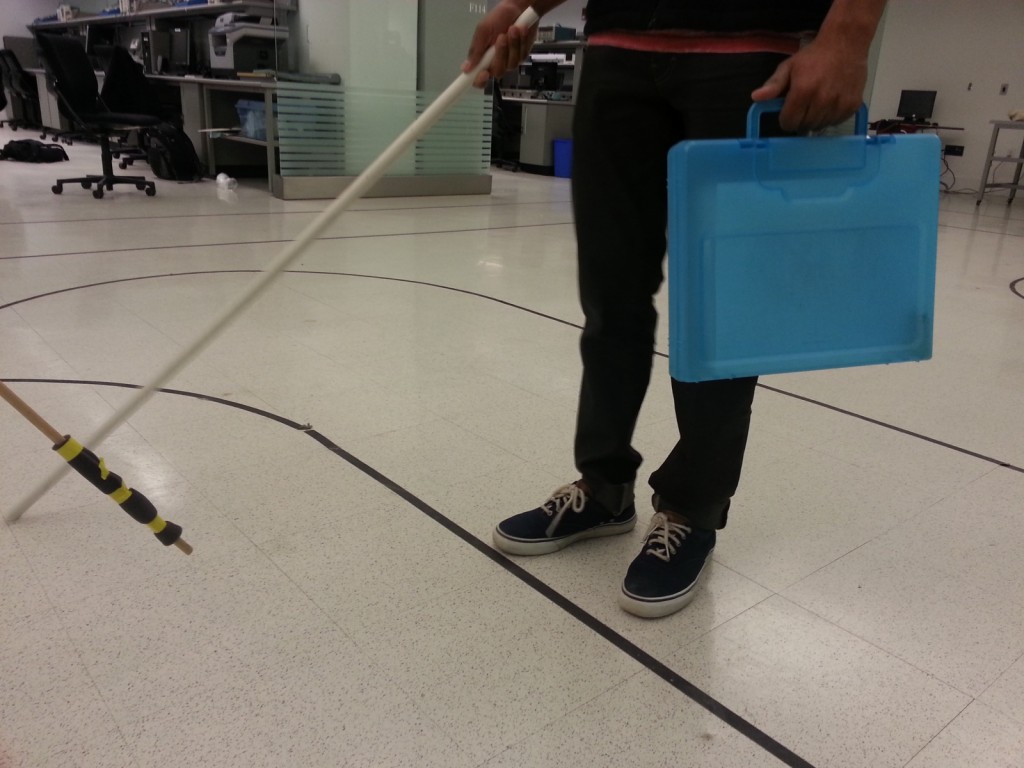

Our project goal is to improve the autonomy, safety, and overall level of comfort of blind users as they attempt to navigate their world using cane travel by augmenting the traditional cane with haptic and touch-based feedback.

Test Methods

When conducting a test with a volunteer user, we first had them read and sign our consent form. We also verbally reiterated the information contained on the form, including the fact that the user would be asked to move while blindfolded and wearing headphones. We explained that we would be taking pictures during the testing process, but that the user would not be identifiable from the pictures. We assured the user that if they appeared to be about to run into an object, we would warn them verbally and, if necessary, physically. Before continuing, we ensured that the user was comfortable with the test and that they had no unanswered questions. Participants were selected from among students at Princeton according to availability and were all equally unfamiliar with blind navigation. None had extensive prior knowledge about the project.

We used the electrical engineering laboratory as our experimental environment. It provided an expansive area for the participant to walk around freely, and the existing floor markings adapted quite well to our use. We first had participants watch an instructional video on cane navigation and practice proper technique. We then had them perform the three tasks that we described earlier: navigation while carrying an item, navigation in a noisy environment, and indoor navigation with a static reference direction. We randomized the order of the tasks to reduce the chance that user feedback was skewed by learning effects. (scripts here)
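The task-order randomization mentioned above could be done in many ways. The following is a minimal sketch of one, not a record of how we actually assigned orders. The function name `task_order`, the `seed` parameter, and the participant identifiers are hypothetical.

```python
import random

# The three tasks described in the test methods.
TASKS = [
    "navigation while carrying an item",
    "navigation in a noisy environment",
    "indoor navigation with a static reference direction",
]

def task_order(participant_id, seed=0):
    """Return a shuffled copy of TASKS for one participant.

    Seeding with the participant id (an assumption, for reproducibility)
    means the same participant always gets the same order.
    """
    rng = random.Random(f"{seed}-{participant_id}")
    order = TASKS[:]      # copy so the master list is never mutated
    rng.shuffle(order)
    return order

if __name__ == "__main__":
    for pid in ("P1", "P2", "P3"):
        print(pid, task_order(pid))
```

With only three participants, a fully counterbalanced design is not possible, so a shuffle like this only reduces, rather than eliminates, ordering effects.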

Results

In general, all users found cane travel unfamiliar and tended to seek tactile feedback before taking a larger step. There was a noticeable learning effect as users became more accustomed to being blindfolded and traveling with a cane. One user walked very slowly, which allowed him to follow the directions closely, while the other two users took bigger steps, leading them to occasionally veer off the path. All three users found tasks 1 and 2 (following navigation directions) easier than task 3 (walking in a cardinal direction). Users did not report any additional difficulty from carrying an object in one hand or from the lack of auditory cues from the ambient environment, which suggests that our general approach works well when users must carry extra items in one hand and in noisy environments.

Tasks 1 and 2: At sharp turns, the cane swing was often not wide enough to ‘hit’ the direction in which the tactile feedback would be given, leaving the user wondering where he or she was supposed to go (this happened more than 5 times). One user compensated by walking with very wide cane swings (more than 180 degrees), but we think this is unrealistic, as blind users are unlikely to make such wide swings. It also takes some time for the user to swing the cane wide enough to receive any tactile feedback, and if there are obstacles in the direction where the feedback is given, this can be annoying. We therefore also tapped the user on the shoulder to simulate the raising of the ridges when a sharp turn was ahead, but this was confusing, since the indication happened while the user was still walking in the direction where the tactile feedback was given (leading the user to think that she was already walking in the correct direction). Users also tended to become quite reliant on the tactile feedback after a while and became disoriented once it was no longer given.

Task 3: One of the users mentioned that the prototype would need a way for the user to know which mode the cane is in (whether it is navigating or just acting as a compass). Two of the users often veered significantly from the desired direction, sometimes by more than 30 degrees, even when a reference cardinal direction was given. This happened about 4 times. One user also noted that indications of cardinal directions other than north would be useful, since a user who wants to head south would otherwise have to swing the cane all the way back to check bearings. In the task where the user is allowed to set a direction, one user mentioned that once the cane is set to an incorrect direction, the error persists and causes the user to veer consistently in the wrong direction.
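To make the veering observation and the request for more cardinal indications concrete, here is a small sketch of how a compass-mode cane might compute how far the user has drifted from a reference direction and which cardinal direction the cane currently points toward. It is an illustration only: the function names, the degrees-clockwise-from-north convention, and the use of 30 degrees as an alert threshold (borrowed from the deviation we observed) are all assumptions, not part of our prototype.

```python
CARDINALS = ["N", "E", "S", "W"]

def heading_error(heading_deg, target_deg):
    """Signed smallest angle from target to heading, in [-180, 180).

    Positive means the heading is clockwise of the target.
    """
    return (heading_deg - target_deg + 180.0) % 360.0 - 180.0

def nearest_cardinal(heading_deg):
    """Cardinal direction closest to a heading (degrees clockwise from north)."""
    index = int((heading_deg % 360.0 + 45.0) // 90.0) % 4
    return CARDINALS[index]

def is_veering(heading_deg, target_deg, threshold_deg=30.0):
    """True when the heading is more than threshold_deg away from the target.

    The 30-degree default is an assumed threshold, not a tested value.
    """
    return abs(heading_error(heading_deg, target_deg)) > threshold_deg

if __name__ == "__main__":
    # A user told to walk north (0 degrees) who has drifted to 325 degrees.
    print(heading_error(325, 0), nearest_cardinal(325), is_veering(325, 0))
```

Wrapping the error into a signed range matters near north, where a naive subtraction of 350 and 10 degrees would report a 340-degree error instead of 20.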

Discussion

In general, our three participants confirmed the basic elements of the design. With no prior experience in sightless navigation, they were still able to navigate the experimental space, albeit at a slower pace than blind users would. The users agreed that navigating in the “noisy environment” task was not more difficult, which supports our belief that haptic feedback is a useful mechanism for navigation.

One of the most important questions left unanswered by our first prototype is whether our observations can be generalized to blind users. Because we used blindfolded students as participants in our experiment, we can only learn so much about the effectiveness of the prototype. Furthermore, these participants lack some of the navigational skills that blind people obtain through experience, and they may rely instead on navigational heuristics that come from visual knowledge. We tried to mitigate these effects by teaching participants how to use a cane for navigation and allowing them to practice, but a longer-term study might have done a better job of ruling out these confidence-related issues altogether.

We would also like to determine whether results from the experimental environment can be extrapolated to the real world. Users completed the three tasks without real buildings, roads, or other common obstacles. In later tests, we would like to simulate these conditions more accurately. For the purposes of this test, however, it was important to verify that the prototype was functional without added difficulties and variables.

Our findings led us to several possible improvements on our original design. First, because subjects did not clearly understand when to execute a turn relative to when the cue to do so was given, we have given additional consideration to how we can clearly convey the distance of an upcoming turn. Most of these solutions are software based; for example, we can warn about an upcoming turn by using a flicker of the raised indicator in that direction, and only when the turn is actually to be made will the indicator stay raised. In addition, we noted that, at least for our sighted test subjects, navigation at some point became reliant on the cues that we provided, raising the question of what might happen when the system fails (e.g. the battery runs out). As one of our original interviewees pointed out, it is more difficult for the blind to recognize, diagnose, and fix unforeseen errors. Thus, we are discussing plans to include a battery indicator that cues the user to change the power source. Finally, we were asked multiple times how to differentiate between the different “modes” of the cane (route guidance vs. cardinal reference), which has led us to consider the most minimal type of cue that can elucidate this distinction for the user.
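The flicker-then-hold idea for turn cues can be sketched as a simple mapping from the distance to the next turn to an indicator state. This is a rough sketch under stated assumptions, not our implementation: the function name `turn_cue`, the state names, and both distance thresholds are hypothetical and would need tuning with real users.

```python
def turn_cue(distance_to_turn_m, warn_distance_m=5.0, turn_distance_m=1.0):
    """Choose the indicator state for the ridge on the side of the next turn.

    Both thresholds are assumed placeholder values:
      - beyond warn_distance_m: no cue yet ("lowered")
      - within warn_distance_m: brief flicker as an advance warning ("flicker")
      - within turn_distance_m: indicator stays up, turn now ("raised")
    """
    if distance_to_turn_m < 0:
        raise ValueError("distance_to_turn_m must be non-negative")
    if distance_to_turn_m <= turn_distance_m:
        return "raised"
    if distance_to_turn_m <= warn_distance_m:
        return "flicker"
    return "lowered"

if __name__ == "__main__":
    # Walking toward a turn: the cue escalates as the distance shrinks.
    for distance in (12.0, 4.0, 0.5):
        print(distance, turn_cue(distance))
```

Keeping the warning and the turn command as distinct states targets the confusion we saw with the shoulder tap, where users could not tell an advance warning from confirmation of their current direction.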

Testing plan

We will continue to search for a blind user to get feedback from, but in the meantime, we will begin to work on a higher-fidelity prototype based on the feedback obtained during this round of experimentation.