a. Group information

Group number: 18

Group name: %eiip

Erica (eportnoy@), Mario (mmcgil@), Bonnie (bmeisenm@), Valya (vbarboy@)

b. Project description

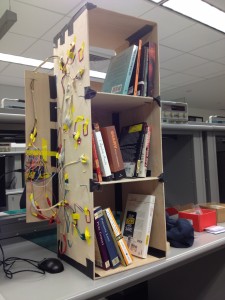

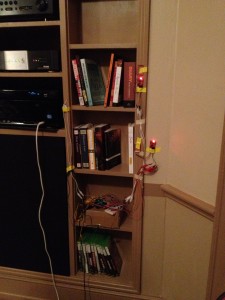

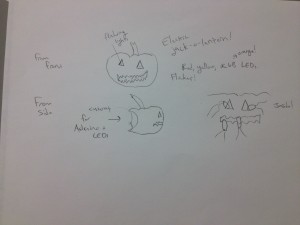

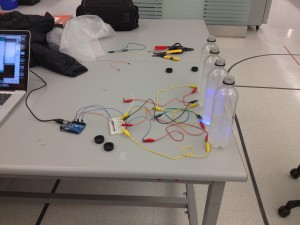

Our project is a smart bookshelf system that keeps track of which books are in it.

c. Previous blog posts

P1: https://blogs.princeton.edu/humancomputerinterface/2013/02/19/biblio-file/

P2: https://blogs.princeton.edu/humancomputerinterface/2013/03/11/eiip-p2-interviews/

P3: https://blogs.princeton.edu/humancomputerinterface/2013/03/27/eiip-p3-low-fidelity-prototype/

P4: https://blogs.princeton.edu/humancomputerinterface/2013/04/07/eiip-p4-usability-testing/

P5: https://blogs.princeton.edu/humancomputerinterface/2013/04/22/biblio-file-p5/

P6: https://blogs.princeton.edu/humancomputerinterface/2013/05/06/biblio-file-p6/

d. Video

Here is a video demonstration of our final project and its capabilities.

e. List of changes since P6

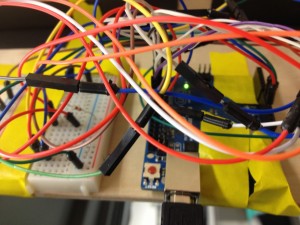

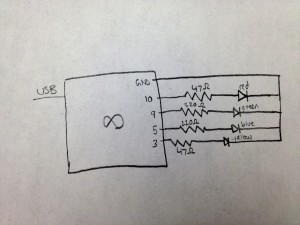

- We made the tag-query code on the Arduino interruptible, so that we can command a shelf LED to light up without waiting for the query to finish. This lets the shelf respond more quickly when a user asks it to light up.

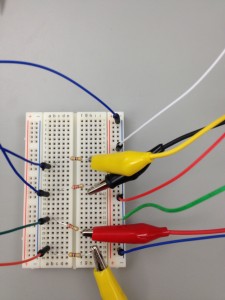

- We got the third RFID scanner working. It wasn’t working at the time of our P6 blog post, but after we replaced some wires it now functions.

- We cached the database to help our web application load faster.
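
As a rough illustration of the caching change, here is a minimal sketch of a read-through cache with a time-to-live. It is not our actual code: the `CachedCatalog` class, the `load_books` callback, and the 30-second lifetime are all hypothetical names and values chosen for the example.

```python
import time
from typing import Callable, Dict, Optional


class CachedCatalog:
    """Read-through cache in front of a slow book-database lookup (illustrative only)."""

    def __init__(self,
                 load_books: Callable[[], Dict[str, dict]],
                 ttl_seconds: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self._load_books = load_books  # hypothetical: fetches every book record from the database
        self._ttl = ttl_seconds        # assumed lifetime of a cached copy, in seconds
        self._clock = clock            # injectable so the cache can be exercised without sleeping
        self._books: Optional[Dict[str, dict]] = None
        self._loaded_at = 0.0

    def books(self) -> Dict[str, dict]:
        """Return the cached records, reloading them if they are missing or stale."""
        now = self._clock()
        if self._books is None or now - self._loaded_at >= self._ttl:
            self._books = self._load_books()
            self._loaded_at = now
        return self._books

    def invalidate(self) -> None:
        """Drop the cached copy, e.g. after a book is tapped in or out."""
        self._books = None
```

With something like this, a page handler would call `books()` on every request and only pay for a database round trip when the cached copy has expired or been invalidated.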

f. How our goals and design evolved

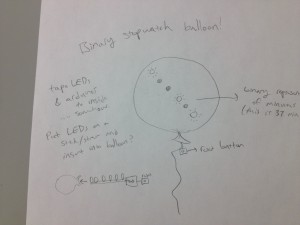

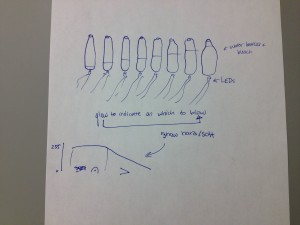

Over the course of the semester, we revised our design to take into account the technical limitations of our hardware. For instance, we had originally envisioned a setup in which each bookshelf’s RFID reader would statically sense the books on the shelf and report any change in the set of books it detected. This would have made the shelf somewhat more convenient to use, but it turned out to be impossible with the RFID readers we had (their range was too short, and they could only detect one ID at a time). We also added features that gave the user more feedback, such as the system beeping when a book was successfully read, making the system more responsive. Many of these usability changes came from feedback in our user tests; our web interface, especially, was refined and simplified greatly over the course of the semester, in response to tests that revealed overly complex or counterintuitive aspects of the interface.

Though our design changed greatly over the semester in response to technical limitations and user feedback, our design goals have remained basically the same: to create an intuitive, usable bookshelf to help users keep track of their books without getting in their way. The user-driven changes we made were all informed by this desire for simplicity and usability, and our final design reflects our constant pursuit of these design goals. Throughout our user tests with different iterations of our project, we found that our design goals resonated with users.

g. Critical evaluation of our project

Our final project is in some respects a success. Our user testing showed that users enjoyed interacting with it, and didn’t find the time constraints induced by the “tap-in, tap-out” system of RFID readers too annoying. We saw some exciting moments of “joy” as our users realized how the bookshelf worked, which was very gratifying. However, we’d be worried about the ability to scale our current system, and about the impacts of long-term use, which we weren’t able to effectively user-test. Because users have to tap in and tap out, it’s likely that over time, the database would become incomplete, and if the user ever decides not to wait to tap out books it’s hard for the database to recover enough to be useful again in the future. Still, with further iterations, more involved user testing, and more exploration of available technologies, we think that our product definitely has the potential to become a useful real-world item.
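
To make the drift concern concrete, here is a small sketch that assumes a pure toggle model, where each scan of a tag flips that book's recorded state. The `tap` function and the `book-A` identifier are hypothetical and only illustrate the failure mode; they are not taken from our code.

```python
def tap(shelf_contents: set, book_id: str) -> str:
    """Toggle a book's recorded presence when its tag is scanned (assumed model)."""
    if book_id in shelf_contents:
        shelf_contents.remove(book_id)
        return "out"
    shelf_contents.add(book_id)
    return "in"


shelf = set()
first = tap(shelf, "book-A")   # user taps in and shelves the book
# The user later takes the book without tapping out: the record still says "in".
second = tap(shelf, "book-A")  # user returns the book and taps: the record flips to "out"
```

After the second tap the book is physically on the shelf but missing from the record, and every later tap for that book stays inverted until someone corrects it by hand.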

From our project, we’ve learned a lot about the constraints of this space. The users we interviewed have a strong emotional attachment to their books, which spurs dislike of systems that force constraints on them. Additionally, we’ve learned a lot about the technical constraints of RFID chips and scanners. Originally we believed that scanners would have a longer range and, more critically, that we would be able to read multiple RFID tags if multiple tags were present in the scanner range. We’ve since found that both of these assumptions are false, at least for consumer-priced RFID tags and scanners. Implementing the scanning software was also an interesting exercise; for example, we learned that we needed to power-cycle the RFID scanners for tag reading to work effectively.

h. Moving forward

Our first step in moving forward with this system would be to invest in higher-quality RFID readers; as mentioned above, readers that could passively sense the presence of multiple books at a longer range (say, about two feet) would make the system easier to use by eliminating the need to tap in and tap out. If we make this critical improvement, it will be necessary to conduct further user-testing to see how this change affects the system’s overall usability, and whether it introduces unexpected usability bugs. We would also invest in a real bookshelf to replace our plywood frame; while the shelf we currently have certainly works, it’s not pretty and is somewhat fragile. Having a nicer-looking and more solid shelf is also necessary for our system to be pleasant and usable.

In conducting further usability tests, we would stick to the same general scheme and set of tasks we used in previous tests, since the key tasks and goals of our system will not have changed. The main difference would be that the tasks would involve just putting the book on the shelf or taking it off (rather than tapping in and tapping out); this is a relatively minor change.

i. Source code

Our code is stored in a git repository which can be found here. You can use this to download and view all of our source code. There is also a README, which we linked to again for your convenience.

j. Third party code

- pic2shop: A free smartphone app and API that provided us with ISBN barcode reading.

- Google Books API: We used this API to obtain information such as book title, author, and cover image given the ISBN extracted with pic2shop.

- Heroku: We did not use Heroku code per se, but this is where our web app is hosted.

- flask: flask is a Python-based web micro-framework. We wrote our web app using flask.

- flaskr: An example flask app (a simple blog) which served as the foundation for our web app code.

- Twitter Bootstrap (CSS library) and jQuery (JavaScript library): We use these in the frontend of our web app.

- redis: We use redis, a simple key-value store, for storing book information.

- RFID Seeedstudio Library: This library provides the basis for our RFID reading. However, it only supports a single scanner at once, so we had to make significant edits to this code.
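
As an illustration of the ISBN lookup step mentioned in the list above, the sketch below builds a Google Books volumes query and pulls the title, authors, and cover thumbnail out of a response. The helper names `volumes_url` and `book_record` are hypothetical, the response fields (`items`, `volumeInfo`, `imageLinks.thumbnail`) reflect the public volumes endpoint as we understand it, and the HTTP request itself is left out so the example runs offline.

```python
from typing import Optional
from urllib.parse import urlencode

GOOGLE_BOOKS_VOLUMES = "https://www.googleapis.com/books/v1/volumes"


def volumes_url(isbn: str) -> str:
    """Build the search URL for a single ISBN (hypothetical helper)."""
    return GOOGLE_BOOKS_VOLUMES + "?" + urlencode({"q": "isbn:" + isbn})


def book_record(response: dict) -> Optional[dict]:
    """Extract the fields the shelf needs from a decoded JSON response.

    Returns None when the search matched no volumes.
    """
    items = response.get("items") or []
    if not items:
        return None
    info = items[0].get("volumeInfo", {})
    return {
        "title": info.get("title"),
        "authors": info.get("authors", []),
        "cover": info.get("imageLinks", {}).get("thumbnail"),
    }
```

In a real app, the decoded JSON from a GET request to `volumes_url(isbn)` would be passed to `book_record`, and the result stored under the book's RFID tag.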

k. Materials for demo session

Everything that we will be presenting is saved here!