Group Number and Name

Group 10 – Team X

Group Members

- Osman Khwaja (okhwaja)

- JunJun Chen (junjunc)

- Igor Zabukovec (iz)

- (av)

Mission Statement

Our project aims to provide a way for dancers to interact with recorded music through gesture recognition. By using a Kinect, we can eliminate any need for the dancers to press buttons, speak commands, or otherwise interrupt their movement when they want to modify the music’s playback. Our motivation for developing this system is twofold: first, it can make practice routines for dancers more efficient; second, it has the potential to be integrated into improvisatory dance performances, since the gestural control can be included seamlessly as part of the dancer’s movement.

Prototype Description

Our prototype includes a few screens of a computer interface that would allow the user to set up and customize the software, as well as view the current settings (and the initial instructions, gestures, and commands). The rest of the prototype depends heavily on Wizard of Oz components: one member of our team acts as the Kinect, recognizes gestures, and responds to them by playing music on a laptop (using a standard music player, such as iTunes).

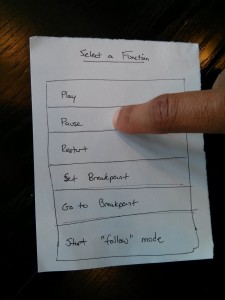

Our prototype will have three different screens for setting up the gestures. Screen 1 will be a list of preprogrammed actions that the user can assign a gesture to. These include stop, start, move to last breakpoint, set breakpoint, go to breakpoint, start “follow mode”, etc.

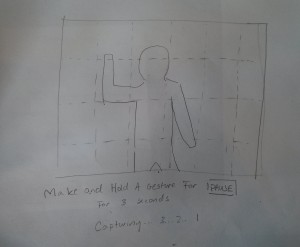

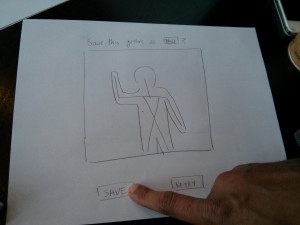

Once the user selects a function, another screen pops up that instructs the user to make a gesture in front of the Kinect and hold it for about 3 seconds.

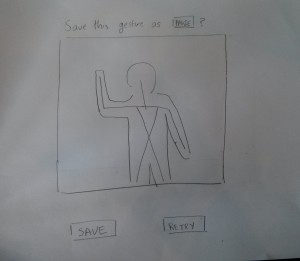

Once the user creates a gesture, a verification screen reviews the function and gesture being paired, and prompts the user either to confirm that the pairing is correct or to retry recording the gesture.
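The three-second hold in the setup flow above could eventually be detected in software rather than by the wizard. The following is only a minimal sketch of one way to do that, not something we have built: it assumes the sensor delivers timestamped poses as lists of (x, y, z) joint positions, and the names `detect_held_pose`, `pose_distance`, and the 5 cm `TOLERANCE` are hypothetical choices made for illustration.

```python
import math

HOLD_SECONDS = 3.0  # from the setup screen: hold the gesture for about 3 seconds
TOLERANCE = 0.05    # assumed: largest joint drift (in metres) still counted as "still"


def pose_distance(a, b):
    """Largest per-joint Euclidean distance between two poses."""
    return max(math.dist(p, q) for p, q in zip(a, b))


def detect_held_pose(samples, hold_seconds=HOLD_SECONDS, tolerance=TOLERANCE):
    """Return the first pose held still for hold_seconds, or None.

    samples is an iterable of (timestamp_seconds, pose) pairs, where pose
    is a list of (x, y, z) joint positions.
    """
    anchor_time = None
    anchor_pose = None
    for timestamp, pose in samples:
        if anchor_pose is None or pose_distance(anchor_pose, pose) > tolerance:
            # The user moved (or this is the first sample): restart the timer.
            anchor_time, anchor_pose = timestamp, pose
        elif timestamp - anchor_time >= hold_seconds:
            return anchor_pose
    return None
```

The pose returned here is what the verification screen would show back to the user before saving.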

Tasks

(So that we can also test our setup interface, we will have the user test/customize the gestures of each task beforehand, as a “task 0”. In real use, the user would only have to do this as an initial setup.)

The user selects the function that they want to set the gesture for:

The user holds the position for 3 seconds:

The user confirms that the desired gesture has been recorded, and saves:

An illustration of how our prototype will be tested is shown in the video below. For task 1, we will have the users set gestures for “play” and “pause”, using the simple menus shown. Then we will have them dance to a recorded piece of music and pause or play it as desired. For task 2, we will have them set gestures for “set breakpoint” and “go to breakpoint”. Then they will dance to the piece of music, set a breakpoint (which will not interrupt the playback), and then, whenever desired, have the playback go to that breakpoint. For task 3, we will have the users set a gesture for “start following” and record a gesture at normal speed. We will then have the users dance to the piece of music and start follow mode when desired; the tempo of the music will then adapt to the speed at which they repeat the recorded gesture.
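To make the intended behavior of the three tasks concrete, here is a rough sketch of the playback state that our wizard is simulating by hand. It is an illustration under our own assumptions rather than a planned implementation: the class `PlaybackController` and its method names are hypothetical, and follow mode is modeled simply as the ratio between the duration of the gesture as recorded and the duration of its latest repetition.

```python
class PlaybackController:
    """Toy model of the playback state driven by the dancer's gestures."""

    def __init__(self):
        self.playing = False
        self.position = 0.0     # seconds into the track
        self.rate = 1.0         # 1.0 means normal tempo
        self.breakpoint = None  # no breakpoint set yet

    # Task 1: play and pause
    def play(self):
        self.playing = True

    def pause(self):
        self.playing = False

    def advance(self, wall_seconds):
        """Move the track forward as real time passes."""
        if self.playing:
            self.position += wall_seconds * self.rate

    # Task 2: breakpoints
    def set_breakpoint(self):
        # Only remembers the position; playback is not interrupted.
        self.breakpoint = self.position

    def go_to_breakpoint(self):
        if self.breakpoint is not None:
            self.position = self.breakpoint

    # Task 3: follow mode (assumed model: tempo scales with gesture speed)
    def follow(self, reference_duration, observed_duration):
        if reference_duration <= 0 or observed_duration <= 0:
            raise ValueError("gesture durations must be positive")
        # A gesture repeated twice as slowly halves the playback rate.
        self.rate = reference_duration / observed_duration
```

For example, a dancer who recorded a gesture lasting 2 seconds and then repeats it over 4 seconds would slow the music to half tempo under this model.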

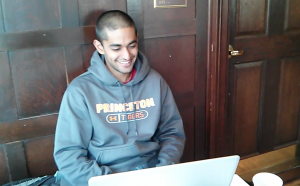

Our “Wizard in the Box”, controlling the audio playback:

Discussion

i. We made our setup screens on paper, but the bulk of the prototype was “Wizard of Oz”, with a team member simply responding to the user’s gestures.

ii. Since our project doesn’t really have a graphical user interface, except for setup and initial instructions, we relied heavily on the Wizard of Oz technique to recognize and respond to gestures and voice commands. Since the user would mostly be interacting with music and sound, which can’t be represented well on paper, we felt it was appropriate to have our prototype play music (the “wizard” would just push play, pause, etc. on a laptop).

iii. It was a little awkward to prototype without having the Kinect, or even having had a chance to start creating an interface. Since users would interact with our system almost entirely through the Kinect, paper prototypes didn’t work well for us. We had to figure out how to show interactions with the Kinect and the music.

iv. The Wizard of Oz technique worked well, as we could recognize and respond to gestures. It helped us get an idea of how tasks 1 and 2 would work, and we feel that those can definitely be implemented. However, task 3 might be too complicated to implement, and it might be better to replace it with a simpler “fast-forward / rewind” function.