Group Number: 9

Group Name: VARPEX

Group Members: Abbi, Dillon, Prerna, Sam

In this assignment, Sam was responsible for writing up the mission statement and brainstorming prototypes. Dillon was responsible for writing up the discussion of the prototype and brainstorming prototypes. Abbi was responsible for building the prototypes. Prerna was responsible for describing the prototype and how it applies to our tasks.

Mission Statement:

The purpose of this project is to create a prototype piece of clothing that takes input from an MP3 player and uses vibrating motors to create sensations on the user's body, so that the user can feel low bass tones. The device should be comfortable and portable. It will let users who cannot produce loud, feelable bass tones, whether because of cost, noise pollution or portability, overcome these obstacles and still feel low bass. Our current design uses a microcontroller to analyse music tones and actuate motors spaced on the user’s chest. The motors will be built into clothing for ease of use, and the microcontroller will be battery-powered and portable. At this stage, the prototype aims to discover how users react to primitive actuation from the motors, which will help us determine motor placement and power. It will also inform the design of the clothing (fit, weight, etc.). Our team's goal is to produce the final product without going over budget, with our primary focus on user experience.
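The mission statement has the microcontroller analyse the music and drive the motors. One way that analysis could work is sketched below in Python rather than as Arduino code, assuming a simple approach: a one-pole low-pass filter isolates the bass, and the RMS level of the filtered signal is mapped to an 8-bit PWM duty cycle (the 0 to 255 range that Arduino's `analogWrite()` accepts). The 150 Hz cutoff, the 8 kHz sample rate, and the names `lowpass` and `bass_to_duty` are hypothetical choices for illustration, not values we have measured or settled on.

```python
import math

# Hypothetical parameters: illustrative assumptions, not measured values.
BASS_CUTOFF_HZ = 150.0   # assumed upper edge of the "feelable" bass band
MAX_DUTY = 255           # 8-bit PWM range, as used by Arduino's analogWrite()


def lowpass(samples, sample_rate, cutoff_hz):
    """One-pole IIR low-pass filter: passes bass, attenuates higher frequencies."""
    dt = 1.0 / sample_rate
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    alpha = dt / (rc + dt)
    y = 0.0
    out = []
    for x in samples:
        y += alpha * (x - y)  # move the output a fraction of the way toward the input
        out.append(y)
    return out


def bass_to_duty(samples, sample_rate, cutoff_hz=BASS_CUTOFF_HZ):
    """Map the bass energy in a block of samples (normalized to [-1, 1]) to a PWM duty."""
    if not samples:
        return 0
    bass = lowpass(samples, sample_rate, cutoff_hz)
    rms = math.sqrt(sum(v * v for v in bass) / len(bass))
    # A full-scale sine has RMS 1/sqrt(2); scaling by sqrt(2) maps full scale to 1.0.
    level = min(rms * math.sqrt(2.0), 1.0)
    return round(level * MAX_DUTY)


if __name__ == "__main__":
    rate = 8000  # assumed sample rate for this example
    t = [n / rate for n in range(rate)]  # one second of sample times
    bass_tone = [math.sin(2 * math.pi * 60 * s) for s in t]
    treble_tone = [math.sin(2 * math.pi * 2000 * s) for s in t]
    print(bass_to_duty(bass_tone, rate))    # high duty: 60 Hz passes the filter
    print(bass_to_duty(treble_tone, rate))  # low duty: 2 kHz is mostly filtered out
```

A 60 Hz tone produces a strong motor signal while a 2 kHz tone barely registers, which is the behaviour we want from a device meant to convey only bass. A real implementation would process short blocks of audio continuously and update each motor's duty cycle per block.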

Prototype Description

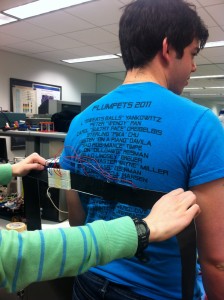

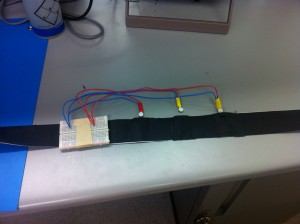

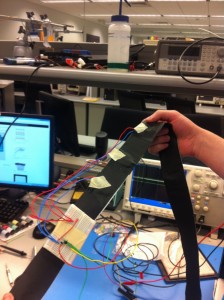

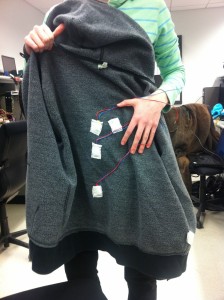

Since our device has no visual user interface, we decided to use lo-fi prototypes to further test its usability and functionality. To that end, our lo-fi prototype will go through two iterations. In the first iteration, the motors will be placed in a band of tape that can be attached tightly to the user's body, with the motors contacting the user around the spine. This will let us test whether the motors (and modulation of their intensity) properly replicate the sensation we found users feel in P2. In the second iteration, the motors will be implanted in a loose-fitting sweater. This will let us test our form factor: ideally the sweater gives the user the appropriate level of sensation, but a tighter fit may be needed to achieve the desired intensity.

Use of Prototype in Testing

In P2, we identified the following key tasks of our users:

- Experience/listen to music without disturbing the quiet environment of the people around you (in the library while studying, in lab, etc.)
- Experience/listen to music while being mobile (walking to class, in the gym, etc.)
- Experience/listen to music without disturbing residential neighbors (roommates, suitemates, etc.)

These tasks tell us what our system needs: faithful replication of the physical sensations felt from loud speakers, and portability. From these requirements, we’ve determined two fundamental questions we will answer in P4:

- Can the vibrating motors replicate the feeling of powerful bass tones from speakers?
- Does the form factor of wearing the motors in the jacket produce the proper sensations?

To answer these two questions, we’ll explore users’ responses to the intensity of the motors and to the comfort and wearability of motors worn in a loose-fitting jacket. Because our three tasks differ only in the user’s environment, not in what they require of the device’s design, we decided to use P3 to build a prototype that lets us test the comfort and wearability of the device, as well as how users feel about the physical locations of the motors in the jacket. This will help us better understand how and where users want to feel the sensations.

Because our mission depends so heavily on these sensations, a paper prototype or other non-functioning system would tell us nothing about whether our proposed system accomplishes that mission. At its core, our prototype will therefore have three working motors.

In the first iteration, we will see how strongly the motors’ vibrations are conducted when a tight band holds them closely against the user’s spine. This will show us how comfortable users are with these vibrations and whether they feel the vibrations accurately replicate the sensation of live music. In the second iteration, we will attach the motors to a jacket, which will let us test fit, comfort and wearability, all of which are key to every task listed above.

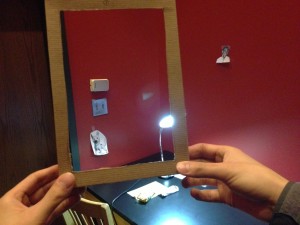

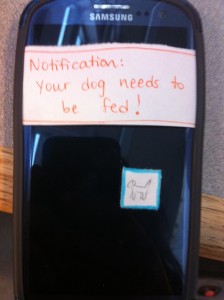

Basic Prototype Demo

A Closer Look at the Prototype

Prototype Discussion

Our prototypes required us to implement three working motors; there is no lower-fidelity way to test our system. We want to test whether vibrating motors can replicate the feeling users get at concerts at all. Our desired system should also be as seamless as possible: an ideal final product would have the user simply plug their “music-feeling jacket” (or whatever form the product takes) into their iPod, with no interface required at all. We therefore concluded that a paper prototype would not let us properly evaluate our system, which is why we implemented several of our motors.

This made our prototyping process somewhat more difficult than we had originally anticipated, since it required us to concentrate on technical questions that might not otherwise be appropriate at this stage of prototyping (but that we have deemed necessary). For one, powering the motors became an issue, since it might not be possible to power them directly from the Arduino board because of its current limits. These are the sorts of questions we were forced to wrestle with early in prototyping. On the bright side, this has pushed us to think more practically about what we hope to build toward with our prototype.
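The arithmetic behind the power concern can be sketched as follows, assuming an Arduino Uno: its ATmega328P chip allows at most 40 mA per I/O pin, and about 20 mA is the commonly recommended continuous level. The 80 mA motor current is a hypothetical placeholder, since small vibration motors vary; the real figure should come from the motor's datasheet or a multimeter reading. The function names are illustrative only.

```python
# Assumed board: Arduino Uno (ATmega328P).
PIN_ABS_MAX_MA = 40.0      # absolute maximum DC current per I/O pin
PIN_RECOMMENDED_MA = 20.0  # commonly recommended continuous current per pin

# Hypothetical placeholder: measure or look up the real current for your motors.
MOTOR_CURRENT_MA = 80.0
NUM_MOTORS = 3             # the prototype uses three motors


def pin_can_drive(motor_current_ma, limit_ma=PIN_RECOMMENDED_MA):
    """True if a single motor stays within the given per-pin current limit."""
    return motor_current_ma <= limit_ma


def worst_case_total_ma(motor_current_ma, num_motors):
    """Total draw if every motor runs at full current at the same time."""
    return motor_current_ma * num_motors


if __name__ == "__main__":
    print(pin_can_drive(MOTOR_CURRENT_MA))                    # False: 80 mA > 20 mA
    print(pin_can_drive(MOTOR_CURRENT_MA, PIN_ABS_MAX_MA))    # False even at the absolute max
    print(worst_case_total_ma(MOTOR_CURRENT_MA, NUM_MOTORS))  # 240.0 (mA) to budget for
```

Under these assumptions a motor cannot be driven straight from a pin. A common workaround is to switch each motor through a transistor powered from a separate battery, so the pin supplies only a small control current, and to size that battery for the worst-case total.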

![solo bracelet](https://blogs.princeton.edu/humancomputerinterface/wp-content/uploads/sites/127/2013/03/solo_bracelet1-300x225.jpg)

![bracelet on hand](https://blogs.princeton.edu/humancomputerinterface/wp-content/uploads/sites/127/2013/03/on_hand_bracelet1-300x225.jpg)