Group 16 – Epple

Member Names

Saswathi: Made the prototype & wrote part of the final document

Kevin: Came up with the design idea & wrote part of the final document

Brian: Wrote a large part of the final document

Andrew: Created the prototype environment & wrote part of the final document

Mission Statement

The system being evaluated is called the Portal. The Portal is an attempt at intuitive remote interaction, helping users separated by any distance interact in as natural a manner as possible. Current interaction models like Skype, Google Hangouts, and FaceTime rely entirely on users to maintain a useful camera orientation and give each side no control over what it sees. We intend to make camera control natural by implementing a video chat feature that uses a Kinect to detect the orientation of the user and moves a remote webcam accordingly. Meanwhile, the user looks at the camera feed through a mobile viewing screen, simulating the experience of looking through a movable window into a remote location. In this first evaluation of our prototype, we hope to learn how to make controlling the webcam as natural as possible. Our team's mission is to build an interface that makes controlling web cameras intuitive.

Description of Prototype

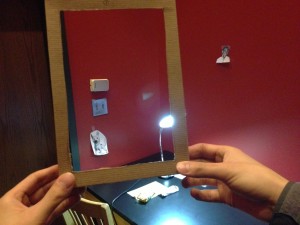

Our prototype uses a piece of cardboard with a square cut out of it as the mobile viewing screen. The user simply looks through the cutout to view the "feed" from a remote video camera. Through this feed, the user sees our prototype environment: a room containing the people the user web chats with. These people are either real human beings or, in some cases, printed images of people taped to the wall. We also placed a prototype Kinect in the room, which is simply a decorated cardboard box.

Three Tasks

Task 1: Web chat without requiring the chat partner to sit in front of the computer

Difficulty: Easy

Backstory:

A persistent problem with web chats is that users must sit in front of the web camera to carry on the conversation; otherwise, they become off-screen speakers. With our system, if a chat partner moves out of the frame, the user can simply and intuitively change the camera view to follow that person around, eliminating the off-screen speaker problem. The conversation can then continue naturally.

How user interacts with prototype to test:

The user looks through the screen at a target person and talks to them while that person moves around the room. The user must move the screen to keep the target in view while maintaining the conversation.

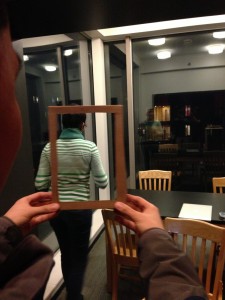

Saswathi is walking and talking. Normally this would be a problem for standard webcam setups. Not so for the Portal! Brian is able to keep Saswathi in the viewing frame at all times as if he were actually in the room with her, providing a more natural and personal conversation experience.

Task 2: Search a distant location for a person through a web camera

Difficulty: Medium

Backstory:

Web chat also differs from physical interaction in how hard it is to start a conversation. While you might seek out a friend in Frist to initiate a conversation, in web chat the best you can do is wait for that friend to come online. We intend to fix this by letting users seek out friends in public spaces by searching with the camera, just as they would in person.

How user interacts with prototype to test:

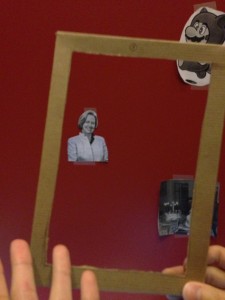

The user plays a “Where’s Waldo?” game: various sketches of people are taped to the wall, and the user looks through the screen and moves it around until he finds the Waldo target.

After looking over a wall filled with various people and characters, the user has finally found Waldo above a door frame.

Task 3: Web chat with more than one person on the other side of the web camera

Difficulty: Hard

Backstory:

A commonly observed problem with web chats is that even when multiple people are on the other end, the chat is often limited to a one-on-one experience: chat partners either wait their turn to be in front of the web camera or crowd together to fit in the frame. With our system, users can web chat seamlessly with all of their partners at once. When the user wants to address a different partner, he simply turns the camera view to face that person. This allows dynamic, multi-way conversations that are not possible with a standard fixed webcam.

How user interacts with prototype to test:

Multiple people carry on a conversation with the user, who can see the speakers only through the screen. He must turn the screen to address a particular conversation partner.

The webcam initially faces Drew, but Brian wants to speak with Kevin. After turning a bit, he rotates the webcam far enough that Kevin is in the frame.

Discussion

The prototype is mainly meant to help us understand the user’s experience, so our portable display screen, which resembles an iPad, is made from a cardboard box with a hole cut out for the screen. One can walk around with the mobile display and look through it at the environment. The Kinect is likewise modeled as a cardboard box with markings on it, placed where a real Kinect detecting user movement would sit. The prototype environment is made from printouts of various characters so that one can search for “Waldo”.

In creating our prototype, we found that the standard prototyping materials of paper and cardboard were versatile enough for our needs. It was difficult to replicate the camera following a scene until we hit upon the idea of creating an iPad “frame” through which we could pretend to be viewing a remote place. Otherwise, the power of imagination made our prototype rather easy to make. We felt that our prototype worked well because it was natural, mobile, and easy to carry, and it enhanced our interactions (since there was literally nothing obstructing them). Even with our vision restricted to a frame, we found that our interactions were not impaired in any way.