Group Number: 17

Names: Evan, Jacob, Joseph, Xin Yang

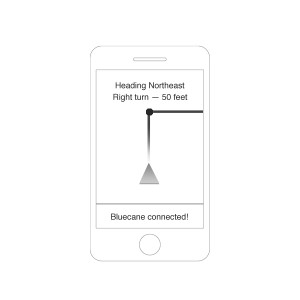

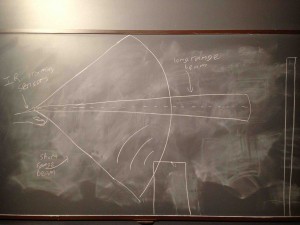

Project Summary: We are testing an add-on device for a blind user's cane that integrates GPS functionality via Bluetooth and gives cardinal and route-guided directions through haptic feedback.

Introduction: Over the course of our project, we have prototyped an attachment for the long white cane used by the blind. Intended to work as a Bluetooth extension to a GPS device, the BlueCane provides haptic and touch-based navigation guidance and features a passive mode in which it haptically gives the user an intuitive compass orientation. We are now testing this prototype with blind users. Our purpose is to determine the usability of our current prototype, in terms of how much of an improvement (if any) it would provide over current systems, and to determine which features promote usability and which should be improved or removed. We hope to understand the usefulness of haptic and touch-based guidance in a navigation interface for the blind.

Implementation:

Link to our P5 prototype:

https://blogs.princeton.edu/humancomputerinterface/2013/04/22/p5-team-bluecane-group-17/

Since our P5 submission, we have made the following changes:

- Cut down on the size of the PVC apparatus to facilitate easier attachment to individual canes

- Consolidated and organized wiring to prevent shorts, breaks, and entanglements

- Added an accelerometer and experimented with gravitational tilt compensation for the compass unit
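To illustrate what tilt compensation involves, here is a minimal sketch and not the prototype's actual firmware. It uses the common approach of estimating roll and pitch from the accelerometer and rotating the magnetometer reading back into the horizontal plane before taking the heading. The function name and the axis convention (x forward, y right, z down, accelerometer reading +g on z when flat) are assumptions made for this example.

```python
import math

def tilt_compensated_heading(accel, mag):
    """Heading in degrees [0, 360) from accelerometer and magnetometer vectors.

    Assumed (hypothetical) convention: x forward, y right, z down, and the
    accelerometer reads (0, 0, +g) when the sensor lies flat.
    """
    ax, ay, az = accel
    mx, my, mz = mag
    # Roll and pitch estimated from the direction of gravity.
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, ay * math.sin(roll) + az * math.cos(roll))
    # Rotate the magnetometer reading back into the horizontal plane.
    x_h = (mx * math.cos(pitch)
           + my * math.sin(pitch) * math.sin(roll)
           + mz * math.sin(pitch) * math.cos(roll))
    y_h = mz * math.sin(roll) - my * math.cos(roll)
    return math.degrees(math.atan2(y_h, x_h)) % 360.0

# Flat sensor with its x axis pointing east: the field appears on -y.
print(tilt_compensated_heading((0.0, 0.0, 1.0), (0.0, -0.2, 0.45)))
```

Without this correction, tilting the cane lets the vertical component of the magnetic field leak into the heading estimate, which matters for a device that is held at an angle during normal use.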

Method:

Participants: All three participants were blind or visually impaired individuals, with varying levels of mobility and experience with cane travel, living in the Mercer County area. They were recruited via a notice sent over the listserv for the New Jersey Foundation for the Blind, which advertised an opportunity to help test a prototype for new technologies in the area of navigational tools for the blind. Participant #1 was a completely blind, retired female who, despite having above-average mobility and confidence, was primarily a seeing-eye dog user and thus had limited experience with cane travel. She used a GPS device regularly. Participant #2 was a blind woman who held a full-time job but primarily used transit services to get around. Though she had far more experience with cane travel, she had limited experience with GPS technology. Participant #3 was a legally blind, working male who had moderate experience with cane travel. He worked in the field of technology and had experience with GPS. No participant had any physical issues with mobility, and all seemed to understand the nature of the task well and were excited about the advancements that we were proposing.

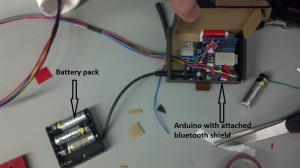

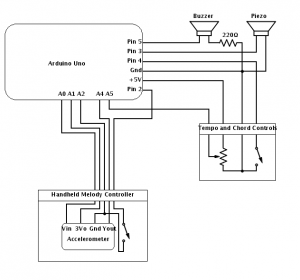

Apparatus: Our prototype was essentially the same as demoed in our P5 video, with the small modification that we carved out a portion of the cane handle to give the attachment a better form factor. An accelerometer had been added to allow for more accurate directional calculations in the final version, but it had not yet been implemented at the time of testing. We also used a small blue briefcase in the third task, as well as audio tracks of city background noise. To minimize the demand on our participants, who had difficulty traveling, all testing was performed at each participant's home, typically outdoors in their yard or neighborhood because of space requirements. As a result, the participants all had a fair degree of familiarity with their environment, which, while perhaps allowing them to rely less on purely external directional instructions, lessened the already considerable stress associated with their participation.

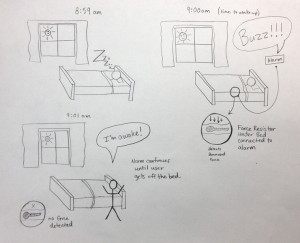

Tasks: Our first, easiest task arises whenever the user is in an unfamiliar space, such as a shopping mall or store, but does not have a well-defined destination, as is often the case when browsing or shopping. As they mentally map their surroundings, it’s imperative that the user maintain a sense of direction and orientation. Failure to do so can reduce the user’s ability to do the things they want, and can even be a safety concern if the user becomes severely lost. Our cane will allow users to find and maintain an accurate sense of north when disoriented by providing intuitive haptic cues, increasing the reliability of their mental maps.

Our second and third tasks both confront the problems that arise when a user must rely on maps constructed by someone else in order to navigate an unfamiliar space with the intent of reaching a specific destination, as is the case with navigation software and GPS walking guidance. In the second task (medium difficulty), our cane would assist users on their afternoon walk by providing haptic and tactile GPS directions, allowing users to explore new areas and discover new places, in much the way that a sighted person might want to visit a new street, building, or park on a leisurely stroll.

In our third and most difficult task, our cane alleviates the stress of navigation under difficult circumstances, such as frequently occur when running errands in an urban environment. In noisy, unfamiliar territory, the BlueCane would allow users to travel unimpaired by environmental noise or hand baggage, which can make it very difficult to use traditional GPS systems.

Procedure: Upon arriving at the participants’ houses, we began by explaining to them who we were and what our system hoped to accomplish. After obtaining their informed consent, we introduced them to the prototype and its features, and allowed them to get familiar with it before explaining each task. We performed the tasks sequentially, gathering data during the trial itself (success in responding to cues and in reaching destinations), and then obtained intermediate feedback after each task. After all tasks were completed, we reminded each participant to be as honest as possible and read out the survey questions, allowing them to qualify their answers as freely as they wished (after stating a value on the Likert-style questions). Finally, when all of our predetermined questions had been answered, we opened the conversation to a full discussion of any questions or other feedback they had.

Test Measures:

Task 1:

- Whether the user was able to turn himself/herself towards a given cardinal direction using the tactile feedback the cane gives when it points north.

- If unsuccessful, approximate angle at which user deviated from the correct direction.

- Qualitative Feedback
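The deviation measure for Task 1 can be made concrete with a small helper. This is a sketch under stated assumptions: the function names and the 15-degree tolerance are hypothetical, since the actual threshold used in testing is not specified here.

```python
# Compass bearings in degrees for the four cardinal directions.
CARDINALS = {"N": 0.0, "E": 90.0, "S": 180.0, "W": 270.0}

def signed_deviation(heading_deg, target_deg):
    """Smallest signed angle from target to heading, in degrees in (-180, 180]."""
    diff = (heading_deg - target_deg) % 360.0
    return diff - 360.0 if diff > 180.0 else diff

def facing_within(heading_deg, cardinal, tolerance_deg=15.0):
    """True if the heading is within tolerance of the named cardinal direction.

    The 15-degree default is an illustrative assumption, not a measured value.
    """
    return abs(signed_deviation(heading_deg, CARDINALS[cardinal])) <= tolerance_deg

print(signed_deviation(350.0, CARDINALS["N"]))  # user stopped slightly west of north
print(facing_within(100.0, "E"))
```

Taking the difference modulo 360 avoids reporting a 350-degree error when the user is only 10 degrees off on the other side of north.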

Task 2:

- Without any additional cues, we gave the user a few turns to follow using the raised ridges in our navigational hardware. Out of these, we counted how many they were able to follow.

- Qualitative Feedback

Task 3:

- Same as task 2.

Results:

Participants who succeeded in task 1: 3/3

Fraction of turns followed in task 2 (one value per participant): 1/3, 0/5, 2/5

Fraction of turns followed in task 3 (one value per participant): 3/3, 3/5, 2/2
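Because the number of turns differed between trials, one way to compare the two tasks is to pool the counts. The sketch below assumes each fraction above is turns followed over turns given for one participant; the variable and function names are chosen for this example.

```python
from fractions import Fraction

# (turns followed, turns given) for each participant, from the results above.
task2 = [(1, 3), (0, 5), (2, 5)]
task3 = [(3, 3), (3, 5), (2, 2)]

def pooled_rate(results):
    """Total turns followed divided by total turns given across participants."""
    followed = sum(f for f, _ in results)
    given = sum(g for _, g in results)
    return Fraction(followed, given)

for name, results in (("task 2", task2), ("task 3", task3)):
    rate = pooled_rate(results)
    print(f"{name}: {rate} ({float(rate):.0%})")
```

With only three participants and a handful of turns each, the pooled rates are a rough summary rather than a statistically meaningful comparison.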

Likert ratings: (1 for “Strongly Disagree”, 5 for “Strongly Agree”)

I found the vibration in the direction of North useful in maintaining a sense of my orientation. 4 + 5 + 4 (avg: 4.33)

I found the vibration in the direction of North intuitive and easy to use. 5 + 5 + 4 (avg: 4.67)

I found the turn-by-turn commands useful in navigating to a destination. 4 + 4 + 5 (avg: 4.33)

I found the turn-by-turn commands intuitive and easy to use. 3 + 4 + 5 (avg: 4)

I would prefer to have directions read to me aloud instead of or in addition to haptically (as in the current system). 3 + 2 + 4 (avg: 3)

I would prefer to use (a refined version of) this system over a standard cane. 5 + 5 + 4 (avg: 4.67)

I feel that having such a system available to me would increase my confidence or feeling of autonomy. 2 + 5 + 4 (avg: 3.67)

I feel that (a refined version of) such a system would help me navigate indoor spaces. 5 + 4 + 4 (avg: 4.33)

I feel that (a refined version of) such a system would help me navigate outdoors (with or without GPS navigation). 5 + 4 + 4 (avg: 4.33)
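The averages can be recomputed directly from the three ratings per statement. In this short sketch the dictionary keys are abbreviated labels invented for the example, not the questionnaire wording.

```python
from statistics import mean

# Ratings per statement (1 = Strongly Disagree, 5 = Strongly Agree).
ratings = {
    "north cue useful": (4, 5, 4),
    "north cue intuitive": (5, 5, 4),
    "turn commands useful": (4, 4, 5),
    "turn commands intuitive": (3, 4, 5),
    "prefer spoken directions": (3, 2, 4),
    "prefer over standard cane": (5, 5, 4),
    "increases confidence/autonomy": (2, 5, 4),
    "helps indoors": (5, 4, 4),
    "helps outdoors": (5, 4, 4),
}

# Mean rating per statement, rounded to two decimals.
averages = {label: round(mean(scores), 2) for label, scores in ratings.items()}

for label, avg in averages.items():
    print(f"{label}: {avg:.2f}")
```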

Given that the profiles of our 3 users varied considerably, it is likely that there are other profiles of blind users we have not considered or encountered. This makes us hesitant to make any assumptions about the external validity of our results.

Variations between blind users:

– Amount of experience in cane travel

– Cane travel technique (how they like to hold the cane)

– How good their sense of direction is (none of them normally think in terms of cardinal directions, but they generally know how much a 90 degree turn is)

– Experience with assistive technologies

– Sense of autonomy.

Our system was generally well received (as indicated by the Likert feedback). All users were enthusiastic about our developments and asked to be informed of future opportunities to test the system.

Salient points from post-task discussions:

– Our method for indicating the turn-by-turn instructions needs to be more ergonomic – the current placement makes it difficult to detect both left and right signals with a single finger.

– Because of the many variations in hand placement, users are not always aware of when turn signals are passed.

– When a user missed a turn, it was hard to recover using the current system.

– We will need a way to adapt the layout of the ridges to many different hand placements and holding styles.

Discussion: For this round of testing, we were fortunate enough to work with visually impaired individuals and receive their feedback. We found that demonstrating, testing, and discussing our prototype with them was highly informative, affirming some features of our prototype and challenging others. The three individuals we visited had varying amounts of experience with cane travel, degrees of autonomy, navigational techniques, experience with technology, and senses of direction. Each participant acknowledged his or her degree of autonomy or “mobility” as well as how age has affected their ability to navigate independently. Furthermore, they lived in different environments and performed different tasks on a day-to-day basis. Together they provided a variety of responses to our questions and offered alternatives to some of the presumptions we made in the design process.

Naturally, it was more difficult to control the testing process, and our results were almost certainly influenced by testing location, individual preferences, and level of visual impairment. Whereas previously we performed the tasks in the confines of the electrical engineering lab with blindfolded students, this round of testing required traveling to participants’ neighborhoods. Even so, this revealed a range of use cases for our device and was ultimately helpful.

Participants’ performance on the three tasks helped reveal differences between individuals, owing in part to their particular impairment. All three fared well on the first cardinal direction task. They understood the task and were able to identify the direction of north using haptic feedback from the cane; they also identified other cardinal directions using north as a point of reference within an acceptable degree of error. When asked if this feature was useful and intuitive, all three participants (as well as one participant’s husband) responded either “Agree” or “Strongly Agree”. One participant expressed an interest in being able to set the direction indicated by the cane, which affirmed our original intention. Interestingly, few if any of the participants said that they currently navigate with respect to cardinal directions; they prefer to think of their environment as a series of relative turns and paths. This challenged one of our presumptions about users’ perception of their environment. We originally suspected that blind people discarded relative direction in favor of absolute direction, but this turned out to be incorrect. Nevertheless, all participants indicated that they were open to the idea of using the device to learn cardinal directions, and they acknowledged that the feature would be helpful in unfamiliar environments.

The turn-by-turn navigation task was more challenging and ultimately more informative. The task relied on the user’s ability to perceive and respond to instructions sent from our laptop. Variation in grip technique and hand size led to some difficulty performing the task or completing a turn in an adequate time frame. We found that users were better at the task when they were walking on well-defined paths (e.g., a sidewalk) where the location of the turn is already demarcated along the path itself. Navigation in the user’s backyard was more difficult because it lacked these cues, and so the user had to infer the timing and magnitude of turns.

The first two users gripped the cane the way that we had anticipated in design, but the third user preferred the less-frequent “pencil grip,” perhaps owing to height or cane length. As a result, we learned that the design of the cane handle should be more ergonomic—not only more comfortable but flexible to different preferences, or at least designed to suggest the intended grip more clearly. We were also told that the distance between the turn indicators was too long and made it difficult to receive instructions exogenously (i.e. without attending to the device directly). Perhaps for this reason, most users agreed that they would prefer to use the cane in conjunction with an optional auditory GPS program. Despite these difficulties and qualifications, users still reported the turn-by-turn navigation feature as intuitive and easy to use in our survey questions. Two of the participants were especially optimistic about the potential for the device in indoor environments, and the third said that he would prefer to use a normal cane indoors.

In the third task—as in our previous round of testing—users were not hindered by the addition of background noise and even demonstrated a notable improvement over the second task. We were also informed by one user about the concept of “parallel traffic” noise, which is used for inferring traffic patterns and deciding when to cross roads. With this in mind, the ability to navigate without aural distractions seems more important than ever.

We also asked about the desired form factor for the final product, and participants gave varying responses. Some preferred the idea of a built-in, integrated navigational cane, but others decided that a device that attaches to their existing cane would be preferable (in case the cane breaks, for example). Most of the users expressed a desire simply to see more affordable technology, since existing screen-readers and navigational devices cost thousands of dollars and aren’t covered by health insurance. Overall, the participants were gracious with their feedback and asked to stay informed about the future of the project.

Appendices:

Document 1: Demo script https://docs.google.com/file/d/0B8SotZYUIJw4V3pPTWhNRDJRaVU/edit?usp=sharing

Document 2: Consent form https://docs.google.com/file/d/0B8SotZYUIJw4bEhyMzdtbXR0LVE/edit?usp=sharing

Document 3: Post-task questionnaire https://docs.google.com/file/d/0B75b-7tqGKTkbUpwdU9SeHFQY3M/edit?usp=sharing

Figure 1: Participants were introduced to the system and shown its relevant features.

Figure 2: Participants were tested on their ability to use the cardinal features of the BlueCane in task #1.

Figure 3: Participants followed directional cues in task #2.

Figure 4: Participants completed the same navigational task, but with the added distraction of background noise and luggage to carry.

Figure 5: A video of a participant undergoing testing is hosted at the link above.