a) Group Information: Group #14, Team Chewbacca

b) Group Members

Karena Cai (kcai@) – in charge of designing the paper prototype

Jean Choi (jeanchoi@) – in charge of designing the paper prototype

Stephen Cognetta (cognetta@) – in charge of writing about the paper prototype

Eugene Lee (eugenel@) – in charge of writing the mission statement and brainstorming ideas for the paper prototype

c) Mission Statement

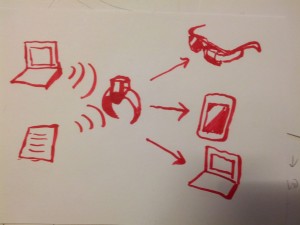

The mission of this project is to create an integrated system that helps our target users take care of their dogs in a non-intrusive and intuitive way. The final system should be almost ready for consumer use, apart from crucial physical limitations such as size and durability. The system is meant to aid busy dog owners who are concerned about their dogs' health but must often spend time away from home for business, vacation, etc. It does so by giving the user helpful information about their dog, even when they are away from home. It would help busy pet owners keep their dogs healthy and happy and give them greater peace of mind. By helping owners care for their pets effectively, it might even reduce the number of pets sent to the pound, where they are often euthanized. In our first evaluation of the prototype system, we hope to learn which part of the system users consider most crucial. We will also learn to what extent information about the dog should be passively recorded versus actively pushed to the user as notifications. Finally, we will try to uncover as many flaws in our current design as possible at this early stage, so that we do not spend our limited time on a feature that provides no real benefit to the user.

d) Description of prototype

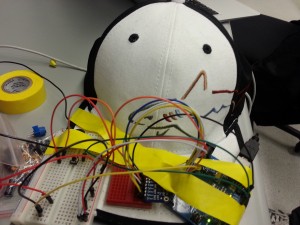

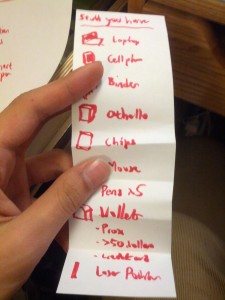

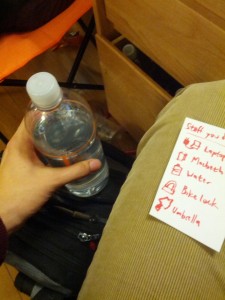

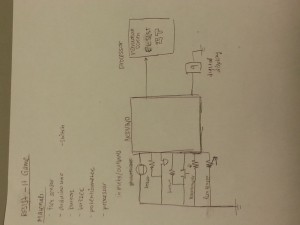

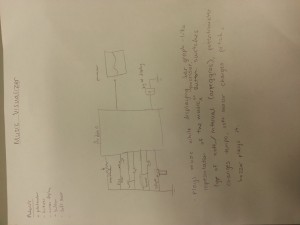

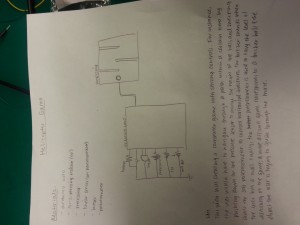

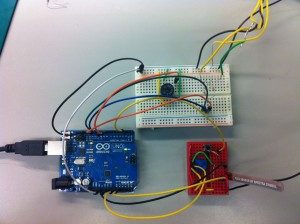

Our prototype includes three components: a paper prototype of the mobile application, a prototype of the dog bowl, and a prototype of the dog collar. The mobile application includes the home screen and screens for tracking the dog's food intake, tracking its activity level, and generating a document with all pertinent data that can be sent to a vet. The dog bowl includes an "LED" and a "screen" that shows the time the bowl was last filled. The collar includes an "LED".

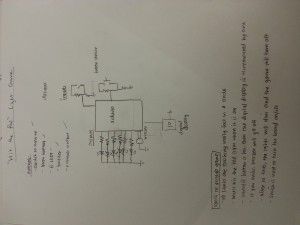

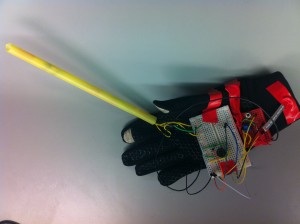

Prototype for the dog bowl (Task 1)

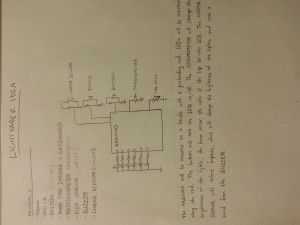

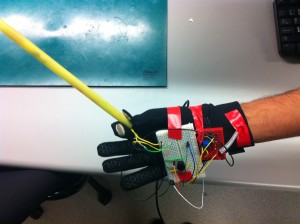

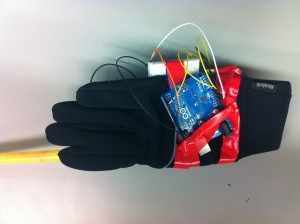

Prototype for the dog collar (Task 2)

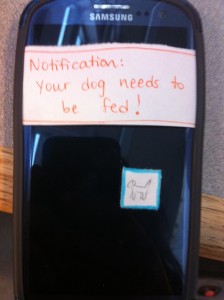

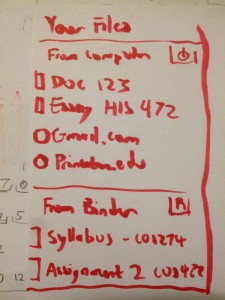

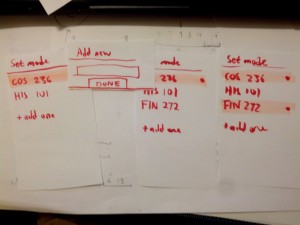

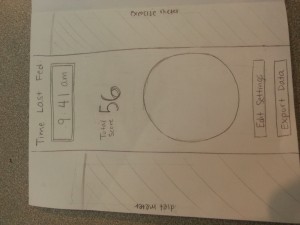

Notification setup for the application

Main page of the app, showing exercise, diet, time since last fed, a picture of the dog (which would go in the circle), and options to edit settings and export the data.

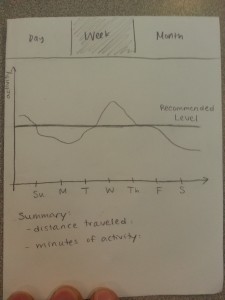

Exercise information for the dog, shown over a day, a week, or a month. (Task 2)

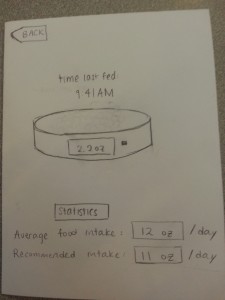

Diet information for the dog, showing how full the bowl currently is and the dog's average food intake. (Task 1)

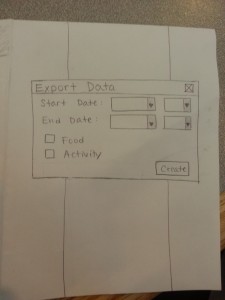

The feature for exporting data to the dog's veterinarian or other caretakers. (Task 3)

e) Task Testing Descriptions

Task 1: Checking when the dog was last fed, and deciding whether and when to feed it.

The user has two ways to perform this task. First, they can check the bowl itself: the color of an LED on the bowl indicates how long it has been since the bowl was filled, and the bowl also displays the exact time of the last feeding. Second, they can open the app, which displays the time the bowl was last filled.

If no feeding has been detected for a long time, the user will receive a direct alert warning them that they have not fed their dog. We intend to test the prototype in two configurations: the bowl alone, and the mobile application together with the bowl. The "backstory" for the bowl alone is that the owner is at home and wants to see whether they should feed their dog and/or whether someone else in their family has already fed it recently. The "backstory" for the mobile application + bowl test is that the owner receives a notification that they have forgotten to feed their dog, checks the mobile application for more information, and then goes to fill the dog's bowl.
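The bowl behavior described above (an LED whose color reflects time since the last fill, plus an alert after "a long time" without a fill) can be sketched as a small rule. This is a hypothetical illustration only: the color names, the function names `bowl_led_color` and `should_alert_owner`, and every threshold are assumptions, since the prototype does not specify them.

```python
from datetime import datetime, timedelta

# Hypothetical thresholds; the paper prototype does not fix exact values.
GREEN_LIMIT = timedelta(hours=6)    # bowl filled recently
YELLOW_LIMIT = timedelta(hours=12)  # a feeding may be due soon
ALERT_AFTER = timedelta(hours=14)   # assumed meaning of "a long time" with no fill


def bowl_led_color(last_filled: datetime, now: datetime) -> str:
    """Map the time since the bowl was last filled to a (hypothetical) LED color."""
    elapsed = now - last_filled
    if elapsed < GREEN_LIMIT:
        return "green"
    if elapsed < YELLOW_LIMIT:
        return "yellow"
    return "red"


def should_alert_owner(last_filled: datetime, now: datetime) -> bool:
    """Return True when the app should push a 'forgot to feed' alert."""
    return now - last_filled >= ALERT_AFTER


if __name__ == "__main__":
    now = datetime(2024, 1, 1, 18, 0)
    last = datetime(2024, 1, 1, 8, 0)  # filled 10 hours earlier
    print(bowl_led_color(last, now))      # yellow
    print(should_alert_owner(last, now))  # False
```

Keeping the alert threshold separate from the LED thresholds lets the bowl warn an owner who is at home before the app interrupts an owner who is away.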

Task 2: Checking and regulating your dog's activity and health

The user can check their dog's activity level by looking at its collar: a single LED lights up only if the dog's activity over the past 24 hours has been lower than usual. The user can find more detailed information about their dog's activity in the app, which shows the dog's activity level throughout the day, week, or month, and assigns a general "wellness" level based on that activity, displayed as a meter on the home screen.

This prototype should be tested in two ways: using the collar alone, or using the mobile application alone. The backstory for testing only the collar prototype is that the owner has just arrived home from work and wants to know whether the dog needs to be taken for a walk (or whether it has already received enough physical activity from being outside during the day while the owner was away); the LED on the collar lets the owner decide. The backstory for testing only the mobile prototype is that the owner has recently changed their work schedule and wants to see whether this has reduced their ability to give their dog enough physical activity; they can check this using the week and month views of the mobile app.
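The collar rule ("light the LED only if activity over the past 24 hours is lower than usual") can be sketched as a comparison against a baseline. This is a hypothetical sketch: the function name `collar_led_on`, the activity units (e.g. step counts), the choice of the mean of previous daily totals as "usual", and the 0.8 tolerance are all assumptions not stated in the prototype.

```python
from statistics import mean

# Hypothetical: "lower than usual" is taken to mean below 80% of the
# average daily activity total from previous days.
LOW_ACTIVITY_FRACTION = 0.8


def collar_led_on(past_24h_activity: float, previous_daily_totals: list[float]) -> bool:
    """Return True if the collar's single LED should light up."""
    if not previous_daily_totals:
        return False  # no baseline yet, so there is nothing to compare against
    usual = mean(previous_daily_totals)
    return past_24h_activity < LOW_ACTIVITY_FRACTION * usual


if __name__ == "__main__":
    history = [9000, 10000, 11000]      # hypothetical daily step counts
    print(collar_led_on(5000, history))  # True: well below the usual 10000
    print(collar_led_on(9500, history))  # False: close to the usual level
```

A tolerance below 1.0 keeps the LED from lighting on days that are only slightly quieter than average, which fits the goal of showing the owner information only when it calls for a decision.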

Task 3: Generate, view, and share a summary of your dog’s health over a long period of time.

The user can generate, view, and share a summary of their dog's health over a long period of time by using the "Export data" button in the app, which also offers the option of sending the summary to someone else (most likely a veterinarian). This mobile application prototype will be tested by having users interact with the relevant prototype screens. The backstory for testing is that the user has a veterinarian appointment the next day but does not remember exactly how much they have been feeding their dog or how much activity it has gotten, so they could not tell the vet much from memory. Using the prototype, they can automatically send detailed information straight to the vet.

Video of the tasks here: https://www.youtube.com/watch?v=KIixVJ21zQ0

f) Discussion of Prototype

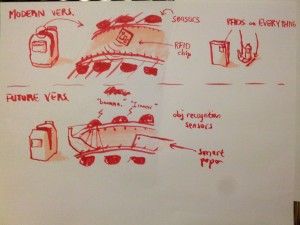

We began making our prototype by brainstorming the most convenient ways a user could perform these tasks. We revised these ideas repeatedly until we believed we had streamlined the tasks as much as our technological limitations allowed. We then created an initial design for the application and quickly built prototypes of the mobile application, collar, and bowl. Our approach to the bowl was not particularly revolutionary: we used a physical bowl made out of paper to simulate its use. We considered including some surrogate for the dog but decided against it, since all of our ideas (hand puppets, images, video, etc.) seemed too distracting for the tester. Because the user ideally never needs to handle the collar, we decided simply to show them a prototype of what they would see on the collar, along with their data updating in the application.

Perhaps the most difficult aspect of making the prototype was figuring out how the user could "interact" with their dog without our actually bringing in a dog. It was also difficult to keep the prototypes minimal, that is, to cover all of the relevant tasks without distracting the user with "flashy" icons or features. We found that the paper prototypes worked well to help us envision how the app would look and how it could be improved. The prototypes for the bowl and collar also helped us identify exactly what information the user would need and what was superfluous. Using very simple materials and designs for the bowl and collar prototypes was helpful to our thinking and design process. Although the paper prototypes submitted in this assignment went through multiple revisions, they will probably continue to be revised for P4.