Group Number: 4

Group Name: Team TFCS

Group Members: Collin, Dale, Farhan, Raymond

Summary: We are making a hardware platform that receives and tracks data from sensors users attach to objects around them, and sends those users notifications, e.g. to build and reinforce habits and track activity.

Previous Posts:

P6: https://blogs.princeton.edu/humancomputerinterface/2013/05/06/p6-team-tfcs/

P5: https://blogs.princeton.edu/humancomputerinterface/2013/04/22/5642/

P4: https://blogs.princeton.edu/humancomputerinterface/2013/04/08/p4-team-tfcs/

P3: https://blogs.princeton.edu/humancomputerinterface/2013/03/29/p3-2/

P2: https://blogs.princeton.edu/humancomputerinterface/2013/03/11/p2-tfcs/

P1: http://blogs.princeton.edu/humancomputerinterface/2013/02/22/964/

Final Video

http://www.youtube.com/watch?v=1j8ZQd-cJJw&feature=em-upload_owner

Changes from P6

– Added a “delete” function and prompt to remove tracked tasks

This was a usability issue that we discovered while testing the app.

– Improved the algorithm that decided when a user performed a task

The previous version used an overly sensitive threshold for detecting tasks. We adjusted the threshold and now base the cutoff on a vector of multiple sensor values instead of a single sensor's reading.

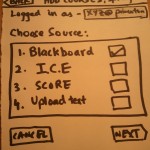
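To make the idea of a multi-sensor cutoff concrete, here is a minimal sketch in Python. It is not the code from our app: the function names, the baseline vector, the threshold value and the "several consecutive samples" rule are all assumptions chosen for illustration. It treats each reading as a vector of sensor values and reports a task only when the reading stays far from a resting baseline for a few samples in a row, which is one simple way to make detection less jumpy.

```python
import math


def magnitude(vector):
    """Euclidean length of a vector of sensor values."""
    return math.sqrt(sum(x * x for x in vector))


def detect_task(samples, baseline, threshold, min_consecutive=3):
    """Return True if the readings suggest the task was performed.

    samples         -- list of sensor vectors, e.g. (ax, ay, az) or a longer
                       vector that also includes magnetometer axes
    baseline        -- resting reading of the same length (assumed known)
    threshold       -- hypothetical cutoff on the distance from the baseline
    min_consecutive -- how many samples in a row must exceed the cutoff
    """
    run = 0
    for sample in samples:
        delta = [s - b for s, b in zip(sample, baseline)]
        if magnitude(delta) > threshold:
            run += 1
            if run >= min_consecutive:
                return True
        else:
            run = 0  # a single spike does not count as a task
    return False


# Example readings (made-up numbers, in units of g).
resting = (0.0, 0.0, 1.0)
still = [resting] * 10
single_bump = still + [(1.0, 1.0, 2.0)] + still
sustained_motion = still + [(1.0, 1.0, 2.0)] * 3
```

With these made-up readings, `detect_task(sustained_motion, resting, 0.5)` reports a task, while the motionless and single-bump sequences do not.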

– Simplified choice of sensors to include only accelerometer and magnetometer

This was a result of user testing, which indicated that having many sensor choices confused people. We simplified the selection to two straightforward choices.

– Updated text, descriptions and tutorial within the app to be clearer, based on user input from P6

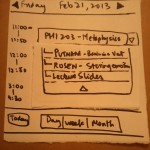

– Updated each individual sensortag page to display an icon representative of the sensor type, simplified the information received from the sensortag in realtime, and added a view of the user’s progress in completing tasks

Goal/Design Evolution

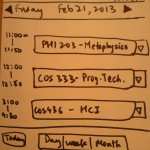

At the beginning of the semester, our goal was to make an iPhone application that allowed users to track tasks with TI sensortags, but in a much more general way than we actually implemented. For example, we wanted users to decide which sensors on our sensortag–gyroscope, magnetometer, barometer, thermometer, etc–they would use and how, and we would simply assume that users would be able to figure out how best to use these readings to fit their needs. This proved to be a poor assumption, because it was not obvious to nontechnical users how these sensors would be used to track tasks they cared about.

We quickly reoriented ourselves to provide not a sensortag tracking app but a *task* tracking app, where the focus of the app was on registering when users took certain actions–opening a book, taking a pill from a pillbox, going to the gym with a gym bag–rather than on when they activated the sensors on certain sensortags. Within this framework, however, we made the model for our application more general, exposing more of how the system functions by allowing users to set up sensors for each task, rather than choose from a fixed menu of tasks within the application. This made our system’s function easier to understand for the end user, which was reflected in our second set of interviews.

Critical Evaluation

Our work over the semester provided strong evidence that this type of HCI device is quite feasible and useful. Most of our tested users expressed an interest in an automated task-tracking application and said that they would use Taskly personally. Still, one of the biggest problems of our implementation of a sensor-based habit tracking system was the size and shape of the sensors themselves. We used a sensortag designed by TI which was large and clunky, and although we built custom enclosures to make the devices less intrusive and easier to use, they were still not “ready for production.” However, this is something that could be fixed in more mature iterations of Taskly. One reason to believe that our system might function well in the real world is that the biggest problems we encountered–the sensortag enclosures and the lack of a fully-featured iPhone app–are things we would naturally fix if we were to continue to develop Taskly. We learned a significant amount about the Bluetooth APIs through implementing this project, as well as about the specific microcontroller we used; we expect BLE devices, now supported only by the iPhone 4S and later phones, will gain significant adoption.

The project ended up being short on time; our lack of iOS experience (initially) made it difficult to build a substantively complex system. The iPhone application, for example, does not have all of the features we showed in our early paper-prototypes. This was partly because those interfaces revealed themselves to be excessively complicated for a system that was simple on the hardware side; however, we lost configurability and certain features in the process. On the other hand, we found learning new hardware platforms (for both iOS and the SensorTag) to be something that could definitely be accomplished over the course of weeks, especially making use of previous computer science knowledge.

One final observation that was reinforced as a result of our user testing was that the market for habit-forming apps is very narrow. People were very satisfied with the use cases that we presented to them, and their recommendations for future applications of the system aligned very closely with the tasks we believed to be applicable for Taskly. Working on this project helped us recognize the diversity of people and needs that exist for assistive HCI-type technologies like this one, and helped us gain a better idea of what kind of people would be most receptive towards systems where they interact with embedded computers.

Moving Forward

One of the things we’d most like to improve upon in later iterations of Taskly is the sensortags themselves, by building custom ones. The current sensortags we use are made by Texas Instruments as a prototyping platform, but they’re rather clunky. Even though we’ve made custom enclosures for attaching these sensors to textbooks, bags, and pillboxes, they are likely still too intrusive to be useful. In a later iteration, we could create a custom sensor that uses just the Bluetooth microcontroller core of the current sensortag we’re using (called the CC2541) and the relevant onboard sensors like the gyroscope, accelerometer, and magnetometer. We could fabricate our own PCB and make the entire tag only slightly larger than the coin-cell battery that powers the tag. We could then 3D print a custom case that’s tiny and streamlined, so that it would be truly nonintrusive.

Beyond the sensortags, we can move forward by continuing to build the Taskly iPhone application using the best APIs that Apple provides. For example, we currently notify users of overdue tasks by texting them with Twilio. We would like to eventually send them push notifications using the Apple Push Notification service instead, since text messages are typically used for communication between people rather than for app alerts. We could also expand what information the app makes available, increasing the depth and sophistication of the historical data we expose. Finally, we could make the sensortag more sophisticated in its recognition of movements like the opening of a book or pillbox by using machine learning to interpret these motions (perhaps, for example, using Weka). This would involve a training step where the user performs the task with the sensortag attached to the object and the system learns what sensor information corresponds to the task being performed.
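As a rough illustration of what such a training step could look like, here is a small Python sketch. It does not use Weka and is not something we built; it swaps in a very simple nearest-centroid classifier, and the feature vectors, labels and function names are all hypothetical. The user would record a few examples of the task and a few of the object sitting idle, and later readings are assigned to whichever group they are closest to.

```python
def centroid(vectors):
    """Component-wise mean of a list of equal-length feature vectors."""
    count = len(vectors)
    return [sum(column) / count for column in zip(*vectors)]


def train(task_examples, idle_examples):
    """Build a tiny model from examples recorded during the training step."""
    return {"task": centroid(task_examples), "idle": centroid(idle_examples)}


def classify(model, vector):
    """Return the label whose centroid is nearest to the given vector."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(model, key=lambda label: squared_distance(model[label], vector))


# Made-up two-value features, e.g. (peak acceleration change, peak field change).
model = train(
    task_examples=[[1.0, 0.8], [1.2, 1.0]],
    idle_examples=[[0.0, 0.1], [0.1, 0.0]],
)
```

A real version would need more careful features and far more examples per task, but the overall flow of record, train, then classify would be similar.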

Another thing we need to implement before the system can go public is offline storage. Currently the sensor only logs data when the phone is in range of the sensortag. By accessing the firmware on the sensortag, it is possible to make it store data even when the phone is not in range and then transmit it when a device becomes available. We focused on the iOS application and interfacing to Bluetooth, because the demonstration firmware already supported sending all the data we needed and none of us knew iOS programming at the start of the project. Now that we have developed a basic application, we can start looking into optimizing microcontroller firmware specifically for our system, and implementing as much as we can on the SensorTag rather than sending all data upstream (which is more power-hungry). A final change to make would be to reverse the way Bluetooth connects the phone and sensor: currently, the phone initiates connections to the Bluetooth tag; reversing this relationship (which is possible using the Bluetooth Low Energy host API) would make the platform far more interesting, since tags would now be able to push information to the phone all the time, and not just when a phone initiates a connection.
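The offline-storage idea can be sketched as a small bounded buffer that keeps events while the phone is away and hands them over once a connection is available. The sketch below is in Python purely to show the logic; actual firmware for the tag would be written for the microcontroller, and the class name, capacity and `send` callback here are assumptions made for illustration.

```python
from collections import deque


class TagEventBuffer:
    """Holds (timestamp, event) pairs until a phone is in range."""

    def __init__(self, capacity):
        # A bounded deque silently drops the oldest entry when full,
        # which mimics the limited memory available on a small tag.
        self._events = deque(maxlen=capacity)

    def record(self, timestamp, event):
        """Store an event locally, whether or not a phone is connected."""
        self._events.append((timestamp, event))

    def pending(self):
        """Number of events waiting to be transmitted."""
        return len(self._events)

    def flush(self, send):
        """Send buffered events oldest-first; stop if the link drops.

        send -- hypothetical callback that returns True when the phone
                acknowledged the event and False otherwise
        """
        sent = 0
        while self._events:
            if not send(self._events[0]):
                break  # keep the event and retry on the next connection
            self._events.popleft()
            sent += 1
        return sent


# Example: four events recorded offline on a tag with room for three.
buffer = TagEventBuffer(capacity=3)
for second in (1, 2, 3, 4):
    buffer.record(second, "book_opened")

received = []
delivered = buffer.flush(lambda item: received.append(item) is None)
```

Because an event is only removed after `send` confirms it, a connection that drops partway through a flush leaves the remaining events in place for the next attempt.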

iOS and Server Code

https://www.dropbox.com/s/3xu3q92bekn3hf5/taskly.tar.gz

Third Party Code

1. TI offers a basic iOS application for connecting to SensorTags. We used it as a launching point for our app. http://www.ti.com/tool/sensortag-ios

2. We used a jquery graphing library for visualization. http://www.highcharts.com

Demo Poster