Group Number:

15

Group Members:

Prakhar Agarwal (pagarwal@)

Gabriel Chen (gcthree@)

Colleen Carroll (cecarrol@)

We all worked together and contributed equally to all parts of the assignment. Each member conducted one contextual interview and drew one storyboard, and we worked on the other parts as a group to keep the effort balanced.

Problem and Solution Overview:

The problem we have chosen to address is the difficulty of interacting with one’s phone when it is cold outside. Specifically, using a phone while wearing gloves usually requires taking them off, because gloves block capacitive sensing and are bulky enough to make pressing buttons difficult. Our solution is to develop a glove with a variety of strategically placed motion and flex sensors that recognizes hand movements and gestures and translates them into simple tasks on the phone, such as answering a call or changing the music that is playing.

Description of Users:

Our target user group consisted of people who were walking around campus, wearing gloves and holding or using a smartphone. On campus, we specifically looked for younger users, as they are the most likely to be technologically connected and dependent; in fact, when we tried to interview an older man, he said that he didn’t even own a cell phone. For further observation of possible users off campus, we could also look at urban professionals and commuters.

The first person we interviewed was on her way back from class; she was wearing white knit gloves and held a pink iPhone. From talking to her, we learned that she was from Georgia, preferred warmer weather, and wore gloves quite often when it was chilly. Her priority was functionality, and she seemed most interested in being able to use her phone effectively and conveniently.

Our second interview was with a girl from California. She wore leather gloves and used an older smartphone with a very small screen. Her priority was cost, and because of this, she was skeptical of the need for touch-sensitive gloves. She also mentioned that she didn’t especially dislike the cold.

The third interviewee was chosen as a control. This person was indoors, using their phone as they would without the hassle of cold weather and gloves. They were asked to do many of the same basic tasks, and we took notes on the speed and comfort with which they performed them.

Contextual Inquiry:

For our contextual interviews, we stood outside of Frist on a cold afternoon and looked for users who fit the description above. Once we found someone who fit the description and was willing to answer a few questions, we asked them about their phone usage and asked them to perform several tasks on the phone. We were most interested in what they did to work around the inconvenience of wearing gloves, and we made observations on their strategies. The third interviewee was asked to perform the same tasks indoors, which gave us a baseline for how difficult those tasks are in warm conditions compared with performing them outside.

The tasks performed by the people we observed and interviewed were common ones. All interviewees had similar habits in terms of what they used their phones for while walking; the most common functions were phone calls, texting, music and email. Each of these tasks was often preceded by unlocking the phone, although not all of our contextual inquiry subjects had a lock enabled. In addition, every interviewee admitted that cold weather deters them from interacting with their phone in certain situations. For comparison, the interviewee indoors used their phone so often and so easily that they were almost distracted from the interview. Between completing the tasks, the interviewee would check their email and search for things online. Switching between tasks was extremely simple, and the user seemed almost not to notice it. From this we can conclude that the user needs an even simpler way to switch between tasks while wearing gloves in order to match the ease of use in warm conditions.

The interviewees differed in the strategies they used to cope with phone usage in cold weather. The first girl we interviewed pulled her glove off just far enough to expose her thumb and left the rest of the glove on. In addition, if she could use her phone with one hand, she would leave the other glove completely on. The second girl took off both of her gloves in order to use her small smartphone; perhaps the size of her phone required her to hold it with both hands. The third interviewee often switched between using one finger or hand and using several, with different orientations depending on the task they were performing. Again, it was much easier for the user indoors to use their hands in whatever way they wanted than it was for those outside.

Task Analysis:

Part A

- Who is going to use the system?

Our system is a glove that lets people perform simple tasks on their phone when it is cold outside without actually touching the phone. The target user base is mobile-connected individuals who need to walk outside in colder climates. Those who want to wear gloves to keep their hands warm but still do things on their phones would benefit from this system. We also found that younger people are more likely to use the system. When we tried to do a contextual interview with an older man we saw wearing gloves, we found that he didn’t even own a cell phone. Younger people, on the other hand, are generally more technologically connected.

- What tasks do they now perform?

The users currently perform a number of tasks on their phones. From the contextual interviews, we found that the most common activities performed with smartphones while walking outside are texting and checking email. Some people also said that they enjoy listening to music on their phones while walking to and from class, during which they generally perform only simple tasks such as playing, pausing, or skipping songs. The users we talked to also mentioned that they don’t generally talk to others on the phone, with texting and email being much more common alternatives. However, for communicating with family or for professional purposes, they said that phone calls are the medium of choice. At the moment, users have to take off their gloves or use “Smart Touch” gloves, which work on touch screens, to perform these tasks.

- What tasks are desired?

During cold weather, people usually try to stay bundled up rather than interact with their phone too much. While someone may do more complicated tasks while walking on a nice day, when it is cold people generally want to get from point A to point B as quickly as possible, performing only essential tasks. The simpler, essential tasks that people do want to perform are picking up phone calls, responding to texts, turning on their phone, etc., without having to fumble clumsily with the phone or take off their gloves.

- How are the tasks learned?

Smartphone interactions are usually learned from what is on screen. An effective smartphone UI is either intuitive or has written instructions. Many interactions, particularly the tasks we are interested in implementing, rely heavily on convention for users to learn them. For example, the keyboard is standard across all applications on the phone, so sending a message and typing a search term work the same way everywhere. Most keyboards resemble the QWERTY desktop keyboard, although there is considerable variation in how special characters are typed. Picking up a call usually means pressing or swiping a green button, possibly with a slightly different interaction when the phone is locked. This relates back to the standard answer buttons on landline phones. Music players rely on the play, pause, and skip symbols established in many of the earliest digital playback systems. Unlocking, however, varies greatly, from a keyboard to a number pad to the Android unlocking grid, with either visual or haptic feedback.

- Where are the tasks performed?

The tasks can be performed in transit on a mobile device in the cold (really, anywhere).

- What’s the relationship between user & data?

An intuitive or easy-to-learn UI usually makes for a positive user experience, in the sense that the interactions go mostly unnoticed and require little effort to remember and accomplish. Users are interested in their end goal, such as answering the phone or listening to music, not in the interaction itself. Therefore, these tasks should be easy to perform and remember so that they disappear into the background.

- What other tools does the user have?

As the most obvious solution, the user can take off the gloves. Using bare fingers in the cold is the core problem we are trying to solve, though. Using a headset is one possible solution, but it is inconvenient in noisy rooms and uncomfortable in most public areas (such as outside, where this glove is intended to be used). The other option is to use “Smart Touch” gloves, which work on touch screens but are clunky and make it noticeably harder to press small buttons or links on the screen than it is with bare hands.

- How do users communicate with each other?

Using our system, users experience improved communication, as they can send messages more conveniently and answer calls more easily.

- How often are the tasks performed?

People are frequently in motion, and the tasks are intended to be performed every time they need to use their mobile devices. As people only need to wear gloves during the winter, the device might not be useful outside of the winter season. This means that the system might be used only seasonally.

- What are the time constraints on the tasks?

Unlocking the phone should be performed rapidly, but can vary depending on the length of a password. Picking up a phone call or bringing up the voice recognition command for messages should be instantaneous. Interactions with the music player should be performed using a single gesture and should also be instant.

- What happens when things go wrong?

When something goes wrong with unlocking the phone, such as a misused or unrecognizable gesture, the user should simply unlock the phone as they normally would or try the gesture again. With communication and music, the same logic applies. In any case, the user would not be terribly inconvenienced by these events, beyond being frustrated that the glove didn’t work as advertised.

Part B (Description of Three Tasks):

- Unlocking phone

Current Difficulty: Medium

Proposed Difficulty: Medium

Unlocking a phone currently has considerable limitations. For one, the phone has limited space and users generally type with only one or two fingers, so long, complicated passwords are even more cumbersome than they are on a desktop keyboard. Because users are limited in how fast they can enter a password in an onscreen, character-based system, even a secure password (in terms of the randomness of its characters) can be observed more easily by an onlooker. The Android unlocking grid is perhaps more convenient to use with one finger, but it is even more easily observed than a character-based password. Cold weather and stiff fingers make unlocking even more difficult. The task needs to be quick and simple to learn and perform, as it will be used often and users (based on our CI) seem to put convenience first when choosing passwords. In addition, for security, the system should at least have the potential to be hidden, for example by keeping the gloved hand in your pocket.

- Communication

Current Difficulty: High

Proposed Difficulty: Medium

A task that users commonly face is communicating with other mobile phone users. In transit, communication commonly takes two forms: phone calls and text messages. The interactive tasks associated with phone calls are answering a call, rejecting a call, and hanging up. Each of these can be performed fairly easily as is, but removing the dependency on a touch screen can make things even easier, as gesture accuracy and ease are reduced when the user is limited to pointing at a screen. The second form, sending text messages, can be quite complicated for mobile phone users, who need to type out a message and then send it. As an alternative, users can currently select the voice recognition command, which is a tiny button on the messages screen. With our system, users can easily select the voice recognition command with a gesture away from the screen. As another function, gestures can be mapped to characters that are strung together into a message.

- Music

Current Difficulty: Medium

Proposed Difficulty: Low

Another task users may choose to perform while out in the cold is listening to music, as this is generally a more passive activity. There are a couple of things they might want to do while listening, including changing the volume and controlling playback (play, pause, switch songs). Currently, the iPhone is compatible with special headphones with a “control capsule” that performs similar tasks, but users with other phones do not have this option. We would provide a gesture-based means to do this. Users may pinch their fingers and move their hand up or down to change the volume. Flicking one’s wrist to the left or right may switch to the next or previous song. Other similarly simple gestures may pause and play. This would be a relatively simple interface for users. The simplicity and limited number of tasks involved in interacting with music would also make this relatively easy from the design side. As with the other tasks, we would need to establish a way to get the glove to start recognizing gestures (maybe a switch or a location on the glove to press and hold), and then we could recognize these simple commands.
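The music gestures described above could be recognized with simple threshold checks. The following is only a rough sketch under several assumptions that are not part of our design yet: the `GloveSample` fields, the threshold values, the command names, and the choice that a flick to the right means "next song" are all hypothetical placeholders, and real sensor data would need filtering and calibration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GloveSample:
    """One hypothetical, already-normalized reading from the glove."""
    pinch_force: float      # 0.0 (no contact) to 1.0 (firm pinch)
    vertical_motion: float  # net hand movement: up is positive, down is negative
    wrist_flick: float      # net wrist flick: right is positive, left is negative


# Placeholder thresholds (assumptions, not measured values).
PINCH_THRESHOLD = 0.6
MOTION_THRESHOLD = 0.5
FLICK_THRESHOLD = 0.7


def classify_music_gesture(sample: GloveSample, active: bool) -> Optional[str]:
    """Map a sample to a music command, or None if nothing is recognized.

    `active` stands for the press-and-hold switch that tells the glove
    to start recognizing gestures.
    """
    if not active:
        return None
    # Pinch plus vertical movement controls the volume.
    if sample.pinch_force >= PINCH_THRESHOLD:
        if sample.vertical_motion >= MOTION_THRESHOLD:
            return "volume_up"
        if sample.vertical_motion <= -MOTION_THRESHOLD:
            return "volume_down"
        return None
    # Without a pinch, a wrist flick switches songs (direction is assumed).
    if sample.wrist_flick >= FLICK_THRESHOLD:
        return "next_song"
    if sample.wrist_flick <= -FLICK_THRESHOLD:
        return "previous_song"
    return None
```

Requiring the activation switch before any gesture is classified is one way to keep ordinary hand movement while walking from triggering commands by accident.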

Interface Design

Description

Users can use our product to interact with their smartphone in cold weather. Unlike existing smartphone gloves, which try to let you operate the touch screen through conductive fingertips and do so ineffectively, our gloves are an interface themselves. With small, simple gestures such as bending a finger or squeezing two fingers together, combined with a headset for dictating message text, users can accomplish the essential tasks that one might have to perform while on the go in the winter. We will implement locking and unlocking the phone, answering the phone, sending a message, and playing music. Our design does not have the frustrating problems of fit and surface area that existing smartphone gloves have, nor does it put the user in the awkward situation of using voice commands constantly. By creating a winter-weather interface for smartphones, we can provide a simple, useful experience for smartphone users in the cold.

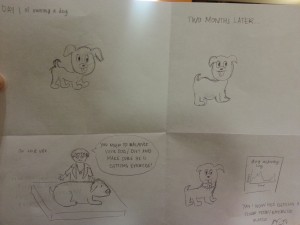

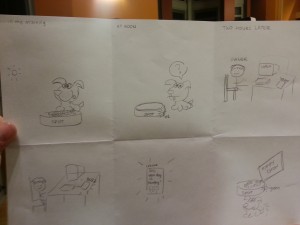

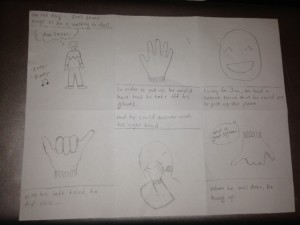

Storyboards

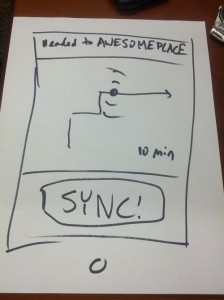

This storyboard shows the ease of picking up and then ending a phone call while wearing the smart gloves.

The final storyboard shows how the system could be used for the last task described, unlocking the phone.

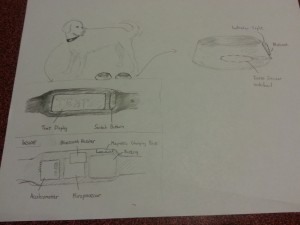

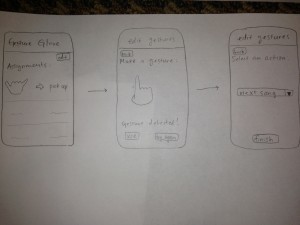

System Sketches:

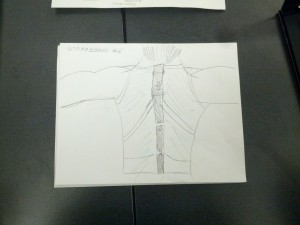

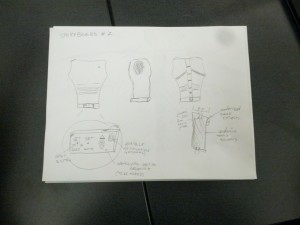

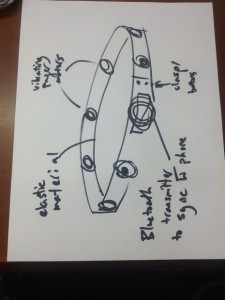

The glove contains flex and force sensors on each of the fingers and an accelerometer at the wrist in order to make it easy to read a variety of actions.

The glove could be controlled by an application on the phone that allows users to map gestures to tasks on the phone. Certain tasks would be premapped. In addition, certain simple gestures would be preloaded as suggestions that users can map to whatever functionality they want, which addresses users’ concern about the difficulty of coming up with usable gestures.
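As a rough illustration of how such an application might store its mappings, here is a minimal sketch. The gesture names, task names, and the `GestureMap` class are hypothetical and chosen only for the example; they do not describe an existing app or API.

```python
from typing import Dict, List, Optional

# Hypothetical premapped gestures that would ship with the app.
PREMAPPED: Dict[str, str] = {
    "bend_index_finger": "answer_call",
    "squeeze_thumb_and_index": "play_pause_music",
}

# Hypothetical preloaded gestures offered to the user as suggestions.
SUGGESTED_GESTURES: List[str] = [
    "bend_index_finger",
    "squeeze_thumb_and_index",
    "bend_middle_finger",
    "flick_wrist_right",
]


class GestureMap:
    """Holds the user's gesture-to-task mapping, starting from the defaults."""

    def __init__(self) -> None:
        # Copy the defaults so each user's changes stay independent.
        self._tasks: Dict[str, str] = dict(PREMAPPED)

    def assign(self, gesture: str, task: str) -> None:
        """Map a gesture to a task, replacing any earlier mapping."""
        self._tasks[gesture] = task

    def task_for(self, gesture: str) -> Optional[str]:
        """Return the task for a recognized gesture, or None if unmapped."""
        return self._tasks.get(gesture)

    def unassigned_suggestions(self) -> List[str]:
        """List suggested gestures the user has not mapped to anything yet."""
        return [g for g in SUGGESTED_GESTURES if g not in self._tasks]
```

A user who wants a gesture for skipping songs could call `assign("flick_wrist_right", "next_song")` after picking that gesture from `unassigned_suggestions()`, rather than inventing one from scratch.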