Group Number: 1

Group Mates: Kuni Nagakura, Avneesh Sarwate, Yaared Al-Maheiri, Joe Turchiano, John Subosits. All group members were involved in the interviews and design. Avneesh and John worked on the task analysis questions, Kuni worked on the sketches and storyboard, and Yaared and Joe wrote up the Contextual Inquiry.

Problem and Solution Overview:

Servers and restaurant staff tend to be overtasked at busy times, which leads to unhappy customers, overworked staff, and decreased revenue. Our solution is a system that passively gathers low-level information (e.g., the status of food and drinks) and displays it in a way that allows restaurant staff to make high-level decisions more quickly and effectively.

Description of Users in Contextual Inquiry:

Because our system affects both patrons and servers, we chose to focus most of our interviews on servers. We made this decision because putting more control in the hands of patrons might exacerbate the problem of overworked waiters. Focusing on servers, we concluded, would not only reduce their workload and inefficiency but also improve the quality of service for patrons. Our target user group is therefore servers and other restaurant staff (waiters, busboys, managers, etc.). We observed and interviewed four users from this group. Our first user was a middle-aged waitress at Zorba’s Restaurant, who served us while we ate lunch. When we interviewed her, she had no major complaints and seemed satisfied with her job. Our second user was a young waiter at Zorba’s who had just gotten off his shift. He emphasized the teamwork an effective waitstaff requires and complained about large parties that demand all available staff. Our third user was a Princeton student who has waitressed for several summers at an upscale country club. Our final user was a young manager at Triumph. As a manager, she focuses on the efficiency and customer satisfaction of the whole restaurant, not just specific sections or tables.

Contextual Inquiry Interview Descriptions:

We prepared a set of questions before each interview. The first set of interviews and observations took place while our group ate lunch at Zorba’s Restaurant on Nassau St. We spent most of the meal observing and taking notes on the movements and activities of the waitstaff. Afterwards, we interviewed two waiters: the one serving us directly and another who had just gotten off his shift. We asked them both about the range of tasks they perform and how they perform them day to day. The interview with the student took place in an informal setting, and we asked her to recount her past summers working as a waitress. We met with a manager at Triumph at around 5:00 p.m., before the bar got too busy. She was still on her shift but was able to answer our questions for a couple of minutes.

Most of the people we spoke to agreed that time management and balance, especially during busy hours, were the key to good service. Teamwork and covering for one another’s tasks also seemed to be a prominent part of making it work. The tasks themselves did not seem especially difficult, but during peak hours the sheer number of simple tasks, and the difficulty of organizing them, led to poor service. Most of our interviewees mentioned that a key part of the job was attending to patrons as quickly as possible without being overbearing. In all three settings there was a clear division of labor between management, servers, and busboys, though usually with some overlap between the positions.

Each interview also turned up a few pieces of information that the others did not. Our first two interviews took place at Zorba’s Restaurant on Nassau Street, a small family restaurant. We interviewed both the waitress who served us and another waiter on staff at the time. These two emphasized the need to keep an eye on every table they were staffing at all times; in retrospect, this tactic is probably only possible at a smaller restaurant like Zorba’s. Otherwise, they seemed to have very little to complain about. Next, we interviewed a student waitress in an informal setting. Having worked at a country club, she had a different experience from the rest. Her customers tended to focus on alcoholic beverages (especially wine), and her major problems arose from trying to coordinate bringing out food for one table while refilling drinks at another. Keeping food from getting cold while refilling drinks was a frequent issue. She also found it difficult to decide when to clear food or drinks when people at the same table ate and drank at different paces. Finally, because the country club was divided into several segments, she was not always able to check on all of her tables as she moved around the floor. The final interview was with a manager at Triumph Bar, also on Nassau Street, so our questions for her were geared toward a management perspective rather than small-scale waiting. Since Triumph is a large establishment with multiple levels and a bar, the manager stressed that coordination and division of labor were especially important to her success. We found that the Triumph staff had been using a service called Digital Dining, which did not work especially well and actually became a bottleneck because waiters had to manually enter orders into it after first writing them down.

11 Task Analysis Questions:

1. Who is going to use the system?

Servers, managers, and kitchen staff at large or busy sit-down restaurants would be the target users of our system. Servers would be the primary users and would benefit most from using it during “rush” periods when many parties come in, or when particularly large parties arrive unexpectedly. The kitchen staff would use the system to provide information to the servers that makes their work more efficient.

2. What tasks do they now perform?

Servers must (roughly in order of increasing difficulty) refill drinks, clear plates, refill condiment/coffee/napkin dispensers, bring food from the kitchen in a timely manner, take orders and send them to the kitchen, bring the bill at the right time, and determine the order in which to perform all of the previous tasks.

3. What tasks are desired?

Waiters want an efficient way to transfer orders to the kitchen and would like to know when food is ready. They would also like to know when tables are “antsy” and impatient, and when customers’ drinks are low. Finally, they would like a way to minimize mistakes in taking down and delivering orders.

4. How are the tasks learned?

Waiters learn the tasks either by serving as a busboy first or by shadowing another waiter for a day. More experienced waiters are given more tables to handle, while less experienced waiters are given fewer.

5. Where are the tasks performed?

The tasks occur mainly on the restaurant floor and in the “waiter’s area,” where the waiters pick up food from the kitchen or congregate for other tasks.

6. What’s the relationship between user & data?

Users must be attentive in collecting data themselves and must decide how to act in real time. There is a lack of quantitative data: the only well-specified data are orders and check amounts, and everything else must be guessed or estimated. Data sharing between servers and the kitchen is fairly structured (notes passed about which orders to cook). There is some sharing of data between servers to help each other out, but it is incidental and always verbal.

7. What other tools does the user have?

Pen and notepad are used to remember orders, which are often written in shorthand; very few servers use mobile devices instead. Computer scheduling systems are occasionally used. At Triumph, “Digital Dining” is used, a system that is supposed to help waiters perform some of the activities mentioned above (though the waiters are dissatisfied with it).

8. How do users communicate with each other?

Servers communicate with each other almost exclusively verbally. Some body language, implicit communication, or inference occurs when a server tries to discern a customer’s mood. Between the servers and the kitchen, communication is generally written, sometimes with verbal emphasis on an unusual aspect of an order.

9. How often are the tasks performed?

Each waiter can be assigned up to 5-6 tables at the same time. Tasks in general are performed constantly, and it is up to each server how they are ordered and scheduled.

10. What are the time constraints on the tasks?

There are no explicit time limits on tasks, but in general every individual task is completed as fast as possible. The “optimization” lies in deciding the order in which to perform tasks so as to minimize “lateness” across the entire set of tasks.

11. What happens when things go wrong?

This depends on how wrong things go. For small delays, customers may simply shrug it off or become slightly grumpy. For larger delays or meal mix-ups, customers may cut back on the tip or complain to a manager, and if they are angry enough, they may refuse payment. In some restaurants, servers are authorized to make concessions to customers (e.g., free desserts) to make up for mistakes.

Description of 3 tasks:

1. Checking customer status

This task involves checking which tables have drinks that need refilling, which tables are waiting on orders, and which tables are requesting attention. Currently this task is of medium difficulty, but it can become quite difficult when things are busy. Some information, such as how long tables have been waiting for food, is not currently available at all. With our system, this task should be very easy, as the server would only have to look at a screen to see the status of all of their tables.

2. Determine task order

This task involves deciding what to do first, for example, whether to do a round of drink refills before checking the kitchen to see if food is out. It is currently the hardest task for servers because they lack the information needed to make the decision: servers do not always know the status of all of their tables and generally do not know whether their orders to the kitchen are finished. Combined with the solution to task 1, incorporating kitchen data into the system makes this task much easier, since with a single glance servers will have both customer data and kitchen data to help them determine the most “urgent” tasks to complete.
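One way the screen could suggest the most “urgent” tasks is to score each pending task by how long it has been waiting, weighted by how time-sensitive its kind is. The sketch below assumes exactly that; the task kinds, the `URGENCY_WEIGHT` values, and the names `PendingTask`, `urgency`, and `task_order` are all hypothetical illustrations, not part of our design.

```python
from dataclasses import dataclass

# Hypothetical urgency weights; our design does not specify any values.
URGENCY_WEIGHT = {
    "food_ready": 3.0,    # food sitting in the waiter's area, getting cold
    "help_request": 2.5,  # another waiter pressed their help button
    "drink_empty": 1.5,
    "drink_half": 0.5,
}


@dataclass
class PendingTask:
    table: int
    kind: str            # one of the keys in URGENCY_WEIGHT
    waiting_min: float   # minutes since the task became pending


def urgency(task: PendingTask) -> float:
    """Score a task: longer waits and more time-sensitive kinds rank higher."""
    return URGENCY_WEIGHT[task.kind] * task.waiting_min


def task_order(tasks: list) -> list:
    """Return pending tasks sorted from most to least urgent."""
    return sorted(tasks, key=urgency, reverse=True)


tasks = [
    PendingTask(table=4, kind="drink_empty", waiting_min=6),
    PendingTask(table=2, kind="food_ready", waiting_min=2),
    PendingTask(table=7, kind="drink_half", waiting_min=10),
]
for t in task_order(tasks):
    print(t.table, t.kind, urgency(t))
```

With these assumed weights, the empty glasses at table 4 (score 9.0) rank ahead of the ready food at table 2 (6.0) and the half-full glasses at table 7 (5.0). In practice the server still makes the final call; the ranking only surfaces candidates.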

3. Signal for help

This task is generally easy, but it can become difficult during busy times. Servers generally ask other servers or busboys nearby for help verbally if they need another hand to bring food or quickly fill a glass. However, during really busy times a server may not be able to find a free hand immediately. In this case, pressing a button or sending some signal to a central area could alert all servers that a free hand is needed in a certain area.
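The help signal amounts to a small piece of shared state: a set of sections currently asking for help, which a button press adds to and a responding waiter clears. A minimal sketch, assuming a hypothetical `HelpBoard` object in the central area and string section names; how the belt button actually communicates with the screens is left open.

```python
from dataclasses import dataclass, field


@dataclass
class HelpBoard:
    """Hypothetical central record of which sections have asked for help."""
    flashing: set = field(default_factory=set)

    def press_help(self, section: str) -> None:
        # A waiter's help button marks their section's screen as flashing.
        self.flashing.add(section)

    def acknowledge(self, section: str) -> None:
        # A free waiter who responds clears the alert for that section.
        self.flashing.discard(section)

    def sections_needing_help(self) -> list:
        return sorted(self.flashing)


board = HelpBoard()
board.press_help("patio")
board.press_help("bar")
board.acknowledge("patio")
print(board.sections_needing_help())  # ['bar']
```

Using a set means repeated presses from the same section produce a single alert rather than stacking up.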

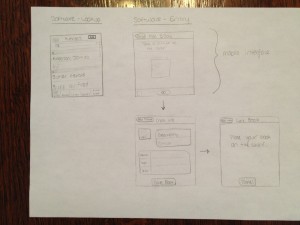

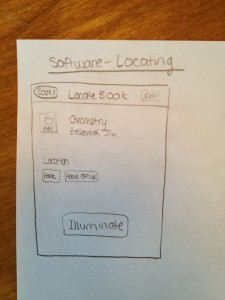

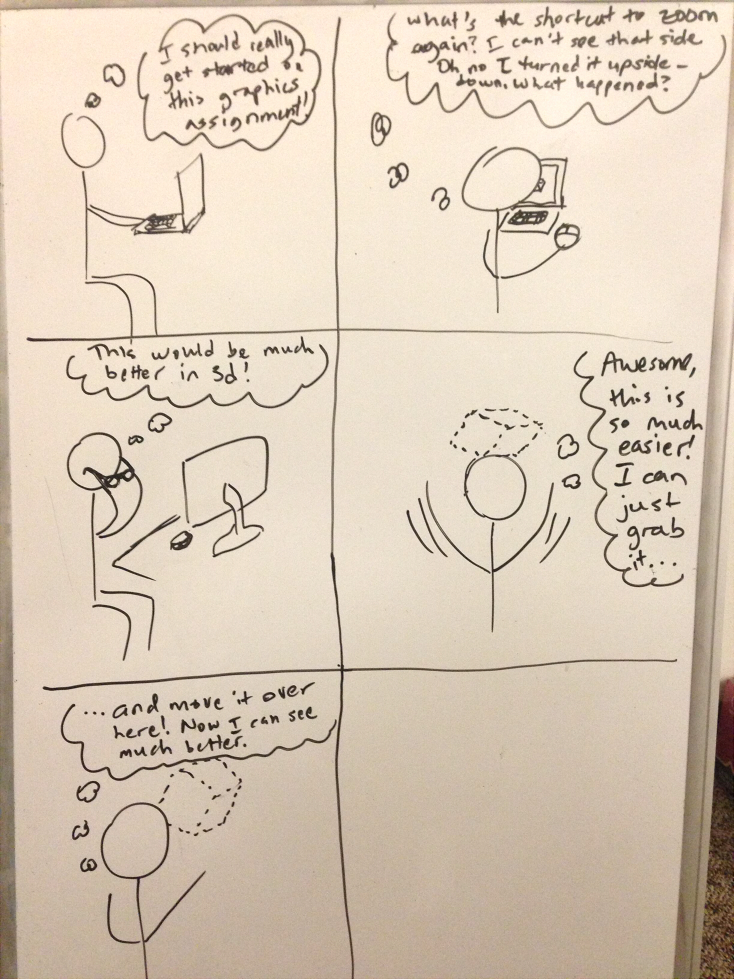

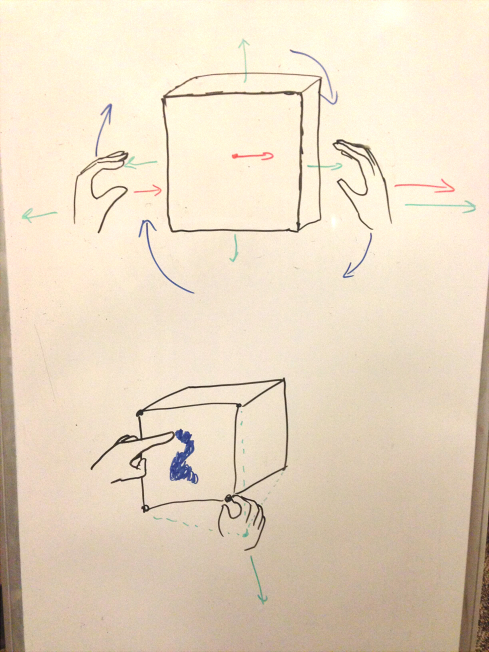

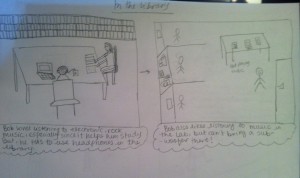

Interface Design:

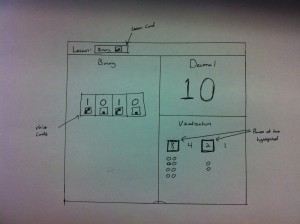

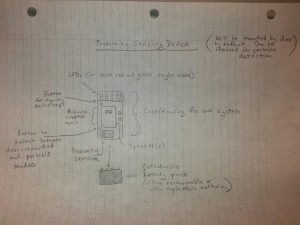

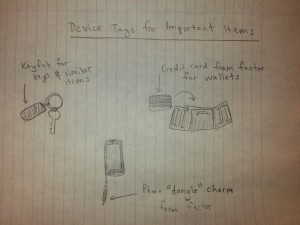

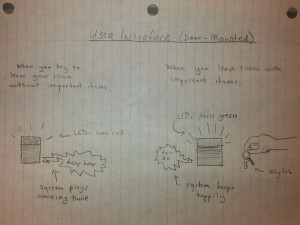

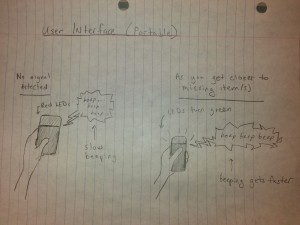

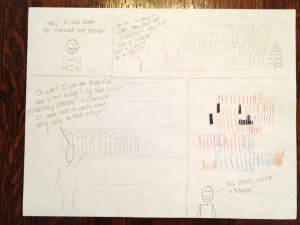

Our system works over two “layers” of interaction: the interaction between the waiters and the customers, and the interaction between the waiters and the kitchen. The central unit of the system is a set of screens in the waiter’s area that display the floorplan of the restaurant and the status of each table. For each table, the screen lists the number of empty, half-empty, and full cups, the dishes ordered by that table, and how long it has been since the table placed its order (once the table’s food has been delivered, the time display shows DELIVERED; if the table is not occupied, it shows EMPTY). Each screen represents a section of the restaurant that a waiter or small group of waiters is responsible for.

On the tables, pressure-sensitive coasters detect how full each glass is and send this information to the main screens. Waiters also have a “help” button on their belts. If a waiter cannot get help from a nearby waiter, they can press their help button, causing their section’s screen in the waiter’s area to flash and alerting any free waiters that assistance is required in that section.

Each item in a table’s list of ordered dishes is displayed in red, and its color changes to green when the kitchen finishes it. If a finished item has been sitting for longer than a specified amount of time (which can be set by the user), the table that ordered it flashes red as a warning that an item for that table may be getting cold and the table should be served.
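The per-table status described above can be modeled with a small data structure. This is a sketch under stated assumptions, not our implementation: the names `Cup`, `cup_state`, `Dish`, and `TableStatus`, the assumption that coasters report a fill fraction between 0.0 and 1.0, the 0.15/0.65 cut-offs, the minutes-based clock, and the `NO ORDER` placeholder for a seated table that has not yet ordered are all hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Cup(Enum):
    EMPTY = "empty"
    HALF = "half"
    FULL = "full"


def cup_state(fill_fraction: float) -> Cup:
    """Map a coaster's estimated fill fraction (0.0-1.0) to a display bucket.
    The 0.15 and 0.65 cut-offs are hypothetical."""
    if fill_fraction < 0.15:
        return Cup.EMPTY
    if fill_fraction < 0.65:
        return Cup.HALF
    return Cup.FULL


@dataclass
class Dish:
    name: str
    ready_at: Optional[float] = None  # clock minute the kitchen marked it done


@dataclass
class TableStatus:
    occupied: bool = False
    delivered: bool = False
    ordered_at: Optional[float] = None          # clock minute the order was placed
    cups: list = field(default_factory=list)    # fill fractions from coasters
    dishes: list = field(default_factory=list)  # Dish objects

    def cup_counts(self) -> dict:
        """Number of empty, half-empty, and full cups at the table."""
        counts = {c: 0 for c in Cup}
        for fraction in self.cups:
            counts[cup_state(fraction)] += 1
        return counts

    def time_display(self, now: float) -> str:
        """Text for the table's time display."""
        if not self.occupied:
            return "EMPTY"
        if self.delivered:
            return "DELIVERED"
        if self.ordered_at is None:
            return "NO ORDER"  # hypothetical: seated but not yet ordered
        return f"{now - self.ordered_at:.0f} min"

    def dish_colors(self) -> dict:
        """Red until the kitchen finishes a dish, then green."""
        return {d.name: ("green" if d.ready_at is not None else "red")
                for d in self.dishes}

    def food_getting_cold(self, now: float, limit_min: float) -> bool:
        """True if any finished dish has waited at least limit_min minutes."""
        return not self.delivered and any(
            d.ready_at is not None and now - d.ready_at >= limit_min
            for d in self.dishes)


table = TableStatus(occupied=True, ordered_at=0.0,
                    cups=[0.9, 0.5, 0.05],
                    dishes=[Dish("gyro", ready_at=10.0), Dish("salad")])
print(table.time_display(now=14.0))                    # 14 min
print(table.dish_colors())                             # {'gyro': 'green', 'salad': 'red'}
print(table.food_getting_cold(now=14.0, limit_min=3.0))  # True
```

Here the gyro was finished at minute 10, so at minute 14 it has waited 4 minutes, past the user-set limit of 3, and the table would flash red. The limit is a parameter rather than a constant to reflect that the threshold is user-configurable.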

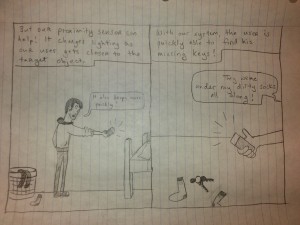

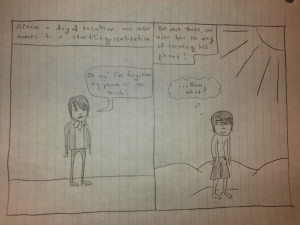

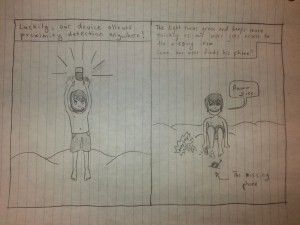

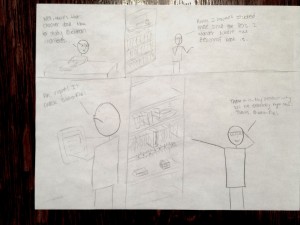

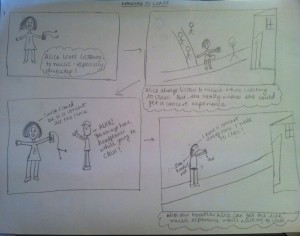

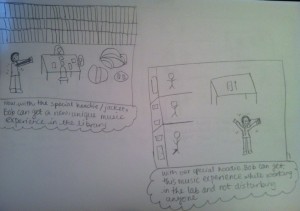

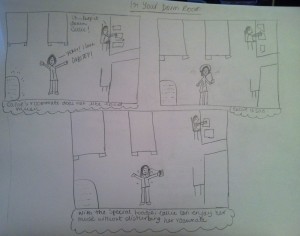

Storyboards

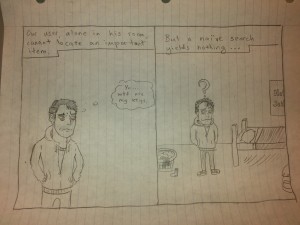

Task 1: Checking customer status

- “I wonder if I need to refill cups right now”

- Waiter looks at the motherboard and notices that only one cup is half empty

- Waiter checks again and notices that five people have now finished their drinks

- The waiter goes to refill water

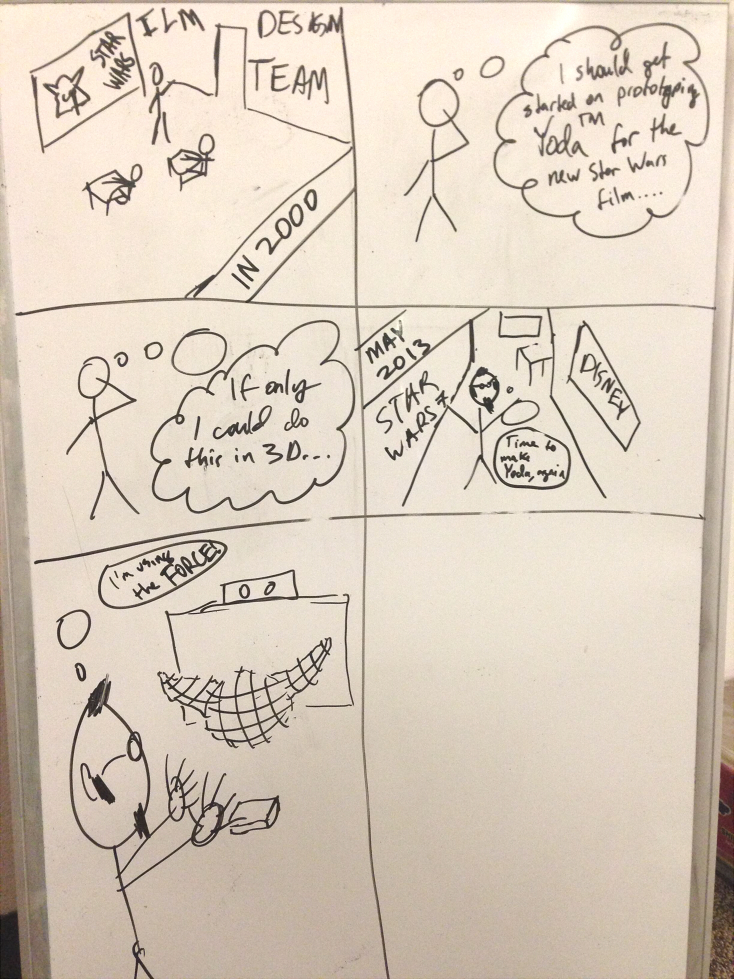

Task 2: Determine task order

- Waiter wonders what he has to do

- Waiter checks the motherboard and prioritizes what he has to do

- Sets out to go refill water

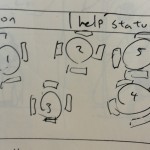

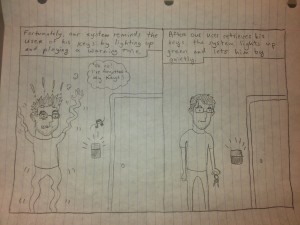

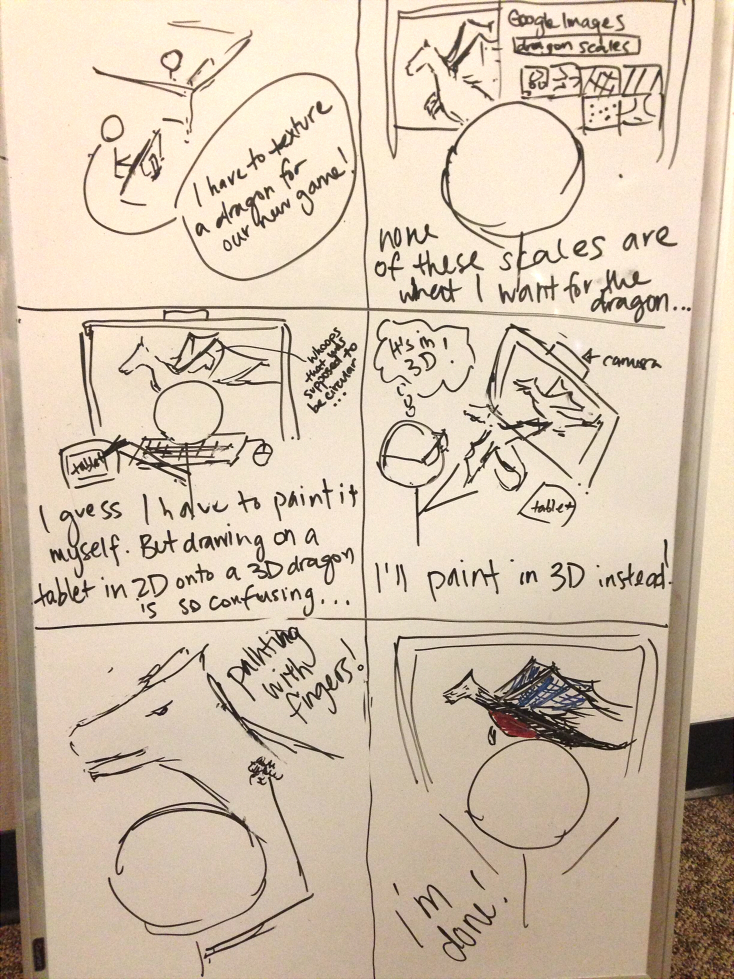

Task 3: Signal for help

- John has a lot of tasks on his plate

- He hits his help button

- Jane, who is currently free, sees that John needs help

- Jane finds John and sees how she can help him out

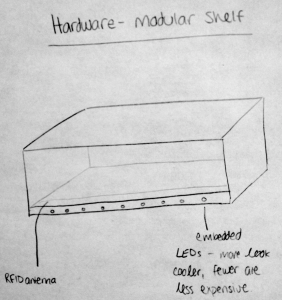

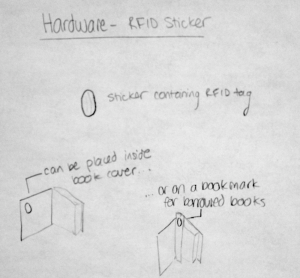

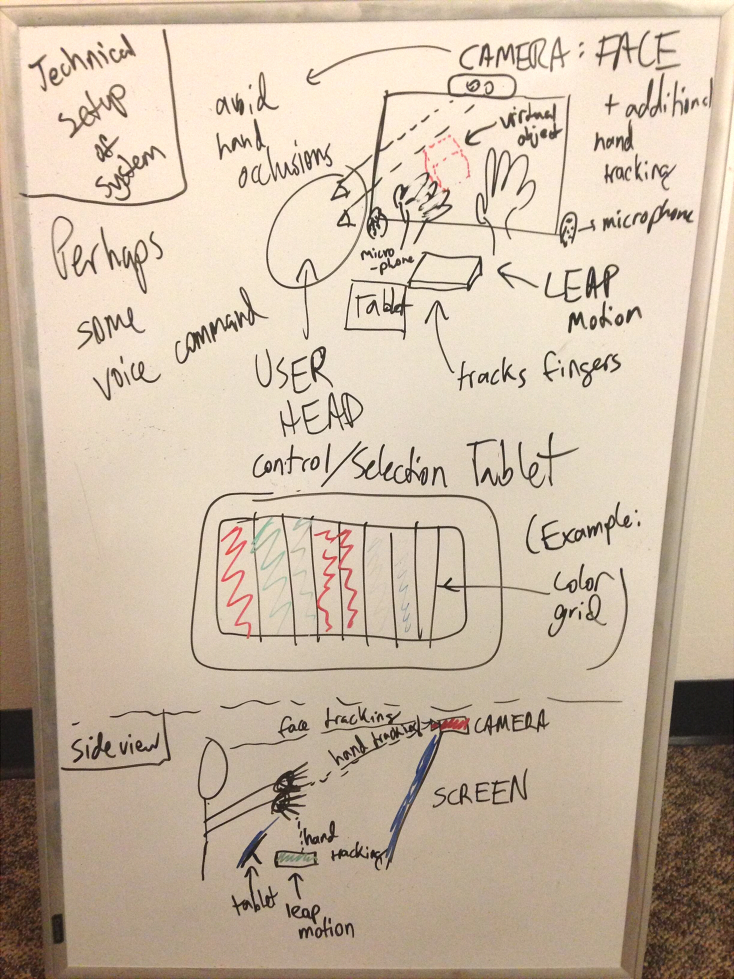

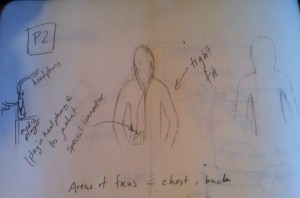

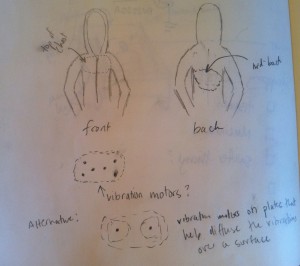

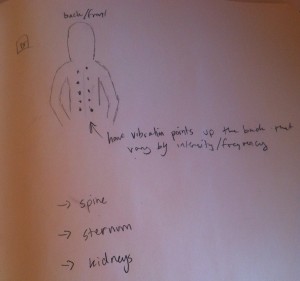

Sketches of System:

- Rough sketch of Motherboard

- More detailed sketch of Motherboard

- Close up on a single table

- Coasters that sense when a glass is running low and send that information back to the Motherboard