Group 16 – Epple

Andrew, Brian, Kevin, Saswathi

Project Summary:

Our project uses a Kinect to create an intuitive interface for controlling web cameras with body orientation.

Blog post links:

P1: https://blogs.princeton.edu/humancomputerinterface/2013/02/22/p1-epple/

P2: https://blogs.princeton.edu/humancomputerinterface/2013/03/11/p2-group-epple-16/

P3: https://blogs.princeton.edu/humancomputerinterface/2013/03/29/p3-epple-group-16/

P4: https://blogs.princeton.edu/humancomputerinterface/2013/04/08/p4-epple-portal/

P5: https://blogs.princeton.edu/humancomputerinterface/2013/04/22/p5-group-16-epple/

P6: https://blogs.princeton.edu/humancomputerinterface/2013/05/06/p6-epple/

Videos and Images:

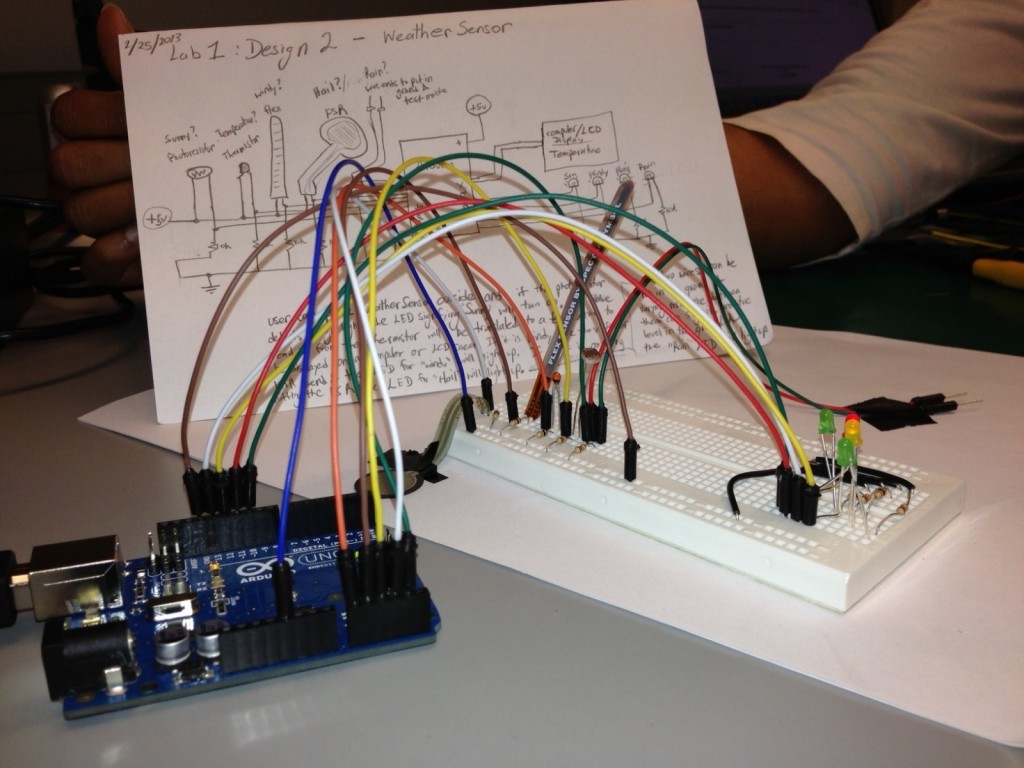

Remote webcam side of interface

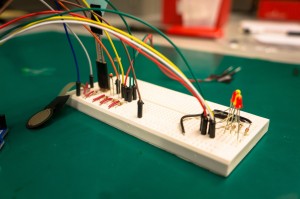

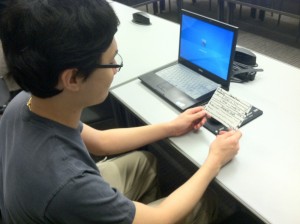

Arduino controlling webcam

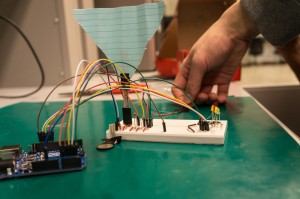

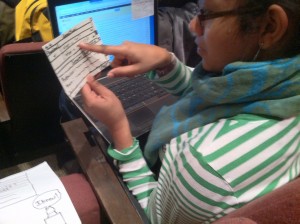

Team Epple with Kinect side of interface

Changes since P6:

- Added networking code to send face tracking data from the computer attached to the Kinect to the computer attached to the Arduino/webcam. This was necessary to allow our system to work with remote webcams.

- Mounted the mobile screen on a swivel chair so that the user would not be required to hold it in front of them while changing their body orientation. This was in response to comments from P6 indicating that it was tiring and confusing to change body orientation while also moving the mobile screen into view.
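The networking change in the first bullet above can be illustrated with a minimal sketch, written here in Python rather than in the project's own Kinect and Arduino code. It assumes the tracked head yaw is sent as a short ASCII string in a UDP datagram, in the spirit of the Arduino UDPSendReceiveString tutorial listed under Third-Party Code. The `YAW:` message format, the function names, and the address and port in the usage note are hypothetical and not taken from our code.

```python
import socket


def encode_yaw(yaw_degrees: float) -> bytes:
    """Format a yaw angle as a short ASCII payload (hypothetical 'YAW:<deg>' format)."""
    return f"YAW:{yaw_degrees:.1f}".encode("ascii")


def decode_yaw(payload: bytes) -> float:
    """Parse a payload produced by encode_yaw back into degrees."""
    prefix, _, value = payload.decode("ascii").partition(":")
    if prefix != "YAW":
        raise ValueError(f"unexpected message prefix: {prefix!r}")
    return float(value)


def send_yaw(sock: socket.socket, yaw_degrees: float, address: tuple) -> None:
    """Send one yaw reading as a single UDP datagram to (host, port)."""
    sock.sendto(encode_yaw(yaw_degrees), address)
```

On the Kinect side, this could be used by opening a datagram socket with `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` and calling `send_yaw(sock, yaw, ("192.168.1.50", 8888))` once per tracked frame, where the address and port are placeholders for the machine attached to the Arduino and webcam. UDP fits this kind of stream because a lost reading is simply superseded by the next one.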

Design Evolution:

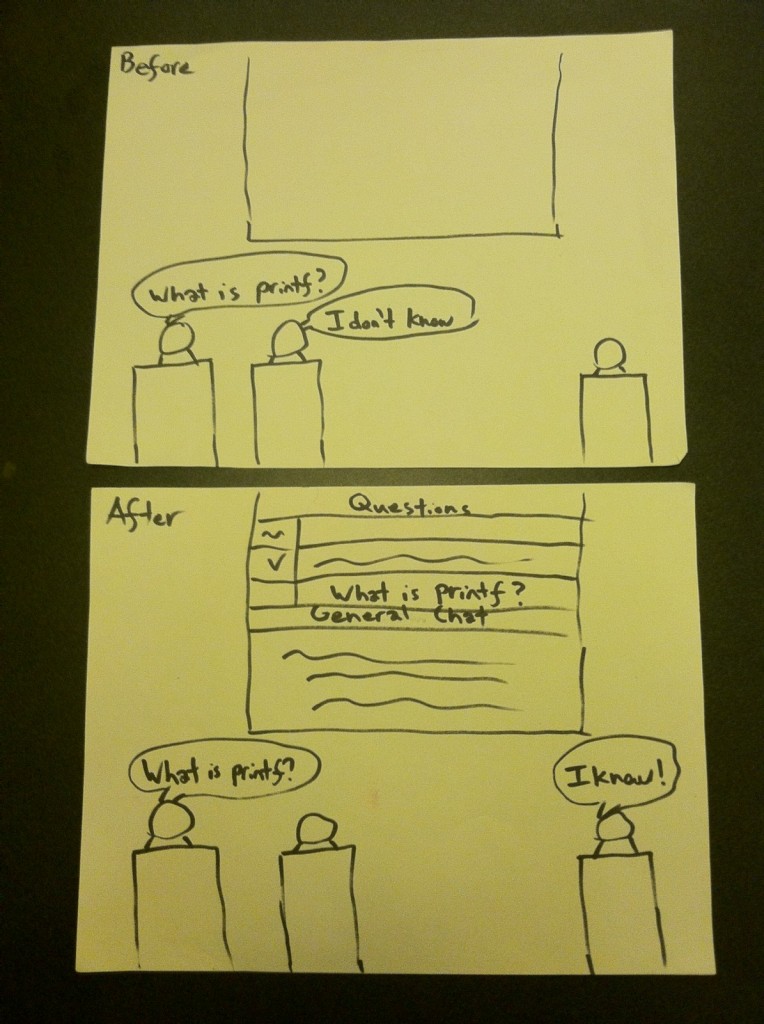

Over the course of the semester, both our design and project goals have been modified. We started with an idea for a type of camera that would help us scan a public place, such as the Frist Student Center. Our initial goal was to create something that would let a person remain in their room and check whether a person of interest was in a certain remote area without having to physically walk there. After identifying other relevant tasks, we broadened the goal of the project to improving the web chat experience, in addition to finding people in a remote room. We changed this goal because we found that the single function of searching for a distant friend was too narrow and that a rotating camera could support many other unique tasks. These tasks include letting a webchat user follow a chat partner who is moving around and talk with multiple people.

On the design side, we originally envisioned that the camera would be moved by turning one’s head instead of clicking buttons, which was intended to make the interface more intuitive. The main function of turning one’s head to rotate the camera has remained the same, but through user testing we learned that users found it confusing and tiring to constantly keep a mobile screen in front of them while changing their head orientation. Most would rather have the mobile screen move into view automatically as they changed their head orientation. For this reason, we decided to mount the mobile screen on a swivel chair so that the user can swivel to change their body orientation, and thereby control the remote camera, while the mobile screen stays mounted in front of them.

We also initially intended to implement both horizontal and vertical motion, but decided that for the prototype, implementing only horizontal motion would be sufficient to show a working product. This simplified our design to a single motor instead of two motors attached to each other, and we did not have to handle vertical head motion in our code. We chose horizontal rather than vertical motion because it gives the user a more realistic experience of how the device will be used. The user can currently swivel left or right to turn a remote camera and scan a room at a single height, which lets them see different people who are spread around the room or moving around at that height. Vertical motion alone would have restricted users to seeing one person or space from top to bottom, which is not as useful or as representative of the product’s intended function.
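As a rough illustration of the horizontal-only control described above, the sketch below maps a tracked yaw angle onto a single servo angle. It is written in Python for readability and is not our Processing or Arduino code. The ±45° usable yaw range, the 0 to 180 degree servo range, the sign convention, and the function name are all assumptions made for the example.

```python
def yaw_to_servo_angle(yaw_degrees: float,
                       max_yaw: float = 45.0,
                       servo_min: int = 0,
                       servo_max: int = 180) -> int:
    """Linearly map a yaw in [-max_yaw, +max_yaw] to a servo angle.

    Yaw values outside the assumed range are clamped so the servo is
    never commanded past its end stops.
    """
    # Clamp the input to the assumed usable range of head/body rotation.
    clamped = max(-max_yaw, min(max_yaw, yaw_degrees))
    # Rescale to 0..1, then to the servo's range.
    fraction = (clamped + max_yaw) / (2 * max_yaw)
    return round(servo_min + fraction * (servo_max - servo_min))
```

With these assumed ranges, facing straight ahead (`yaw_to_servo_angle(0.0)`) centers the servo at 90 degrees, and turning beyond 45 degrees in either direction holds the camera at its end stop. Supporting vertical motion would mean a second mapping of the same shape for pitch, driving a second motor.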

Critical Evaluation:

With further iteration, our product can definitely be turned into a useful real-world system. We believe that our product serves a purpose that is not currently met in the mainstream web camera and video chat space. We know that there are some cameras that are controlled remotely through keys, buttons, or some sort of hand control, but we have never encountered them in the mainstream market. We also believe that our product is more intuitive, as the user simply has to turn their head to control the camera. Based on user testing, we have observed that users are able to easily master use of our product. After a small initial learning curve, users are able to accomplish tasks involving rotating a remote camera almost as easily as if they were in the remote area in person and turning their heads. We thus strongly believe that our product will have a user base if we choose to develop and market it further.

Through our design work, we have learned quite a bit about this application space of detecting the movement of one’s head and moving a camera in response. On the mechanical side, we found that it is difficult to implement both horizontal and vertical motion for the camera. We are still trying to figure out the most effective way to combine two servo motors and a webcam into a single mechanical device. On the other hand, we found that implementing the Kinect, Processing, and Arduino code necessary in this application space was fairly straightforward, as there is an abundance of tutorials and examples for these on the internet. From evaluation, we found that computer users are very used to sitting still in front of their computers. Changing the way they webchat to accommodate our system thus involves a small learning curve, as there is quite simply nothing similar to our system in the application space aside from the still highly experimental Google Glass and Oculus Rift. Users are particularly unaccustomed to rotating their head while keeping a mobile screen in front of it. They instead expect that the mobile screen will move on its own to stay in front of their heads. One user also, for some reason, would occasionally expect the remote camera to turn on its own and track people on the other end without the user turning his head at all. We suspect that this may be due to the way many video game interfaces work with regard to automatic locking in first-person shooters. Based on users’ initial reactions, we realized that if we were to market our product, we would have to work very hard to make sure users understand its intended use and do not see it as a breach of privacy. Users usually don’t initially see that we are trying to make an intuitive web chat experience. Instead, they suspect our system is for controlling spy cameras and invading personal spaces.

A lot of what we have learned comes from user testing and interviews, so we found that the evaluation process is at least as important to the development of a product as its physical design, if not more so.

Future Work:

There are quite a few things that we would still need to do if we were to move forward with this project and make it into a full-fledged final product. One is the implementation challenge of adding support for rotating the camera vertically, as we currently only have one motor moving the camera horizontally. Another would be to create a custom swivel chair tailored for Portal with a movable arm to which you could attach the mobile screen. This would keep the screen in front of the user naturally, unlike our current implementation of taping the screen onto the back of the chair. If Google Glass or Oculus Rift ever become affordable, we intend to explore incorporating such wearable screens into Portal as a replacement for our mobile iPad screen. We could also implement 3D sound so the user actually feels like they are in a space with directional audio cues, rather than just having normal, non-directional sound coming from speakers. This would be a great addition to our custom chair design, something like surround sound that makes the user feel transported to a different place. We might also want to implement a function that suggests the direction for the user to turn based on where a sound is coming from. We should also make the packaging for the product much more robust.

Beyond the design itself, we expect that further user testing would help us evaluate how users react to living with a moving camera in their rooms and spaces. If necessary, we could implement a function that lets people on the remote side prevent camera motion, for example because of privacy concerns. Most of the suggestions on this list for future work, such as the chair, sound system, and screen, were brought to our attention through user evaluations, and our future work would definitely benefit from more of them. These evaluations would focus on how users react to actually living with the system, what they find inconvenient or unintuitive, and how to improve these aspects of the system.

.zip File:

https://dl.dropboxusercontent.com/u/801068/HCI%20final%20code.zip

Third-Party Code:

- Microsoft Kinect SDK

- Microsoft Kinect Developer Toolkit 1.7.0 – Face Tracking Visualization

- Arduino UDPSendReceiveString tutorial http://arduino.cc/en/Tutorial/UDPSendReceiveString

Printed Materials:

https://www.dropbox.com/s/r9337w7ycnwu01r/FinalDocumentationPics.pdf