By Catherine Zandonella, Office of the Dean for Research

Roughly six feet of DNA are packed into every human cell, so it is not surprising that our genetic material occasionally folds into odd shapes such as hairpins, crosses and cloverleaves. But these structures can block the copying of DNA during cell division, leading to gene mutations that could have implications for cancer and aging.

Now researchers based at Princeton University have uncovered evidence that cells contain a built-in system for eliminating one of the worst of these roadblocks, a structure known as a G-quadruplex. In a paper published earlier this month in Nature, a group of researchers led by Princeton’s Virginia Zakian reported that an enzyme known as the Pif1 helicase can unfold these structures both in test tubes and in cells, bringing DNA replication back on track.

Given that Pif1 mutations have been associated with an increased risk of breast cancer, Zakian said, the study of how Pif1 ensures proper DNA replication could be relevant to human health. Zakian is Princeton’s Harry C. Wiess Professor in the Life Sciences.

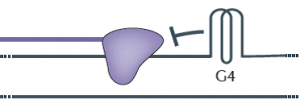

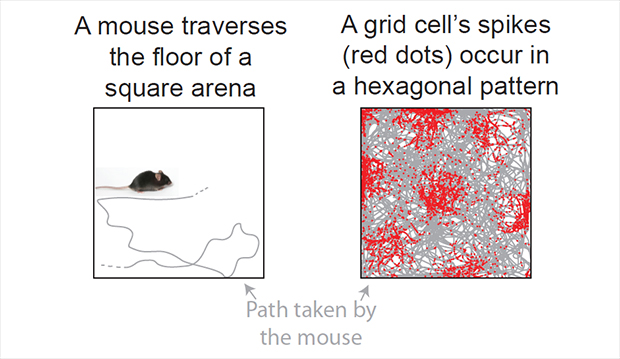

Most DNA is made up of two strands twisted around each other in a way that resembles a spiral staircase. Every time a cell divides, each DNA molecule must be duplicated. This process involves unwinding the staircase so that an enzyme known as DNA polymerase can work its way down each strand, copying each letter of the DNA code. During this exposed period, regions of the unwound single strands can fold into G-quadruplexes (see diagram).

Like a car that runs into a pile-up on an interstate, DNA polymerase halts when it encounters a G-quadruplex, explained Matthew Bochman, a postdoctoral researcher who was co-first author on the study with Katrin Paeschke, now an independent investigator at the University of Würzburg in Germany. Princeton graduate student Daniela Garcia also contributed to the work.

“The DNA that is folded into a G-quadruplex cannot be replicated, so essentially it is skipped,” Bochman said. “Failure to copy specific areas of DNA that you really need is a serious problem, especially in regions that control genes that either suppress or contribute to cancer.”

Last year, the Zakian group in collaboration with human geneticists at the University of Washington reported that a mutation in human Pif1 is associated with an increased risk of breast cancer, suggesting that the ability to unwind G-quadruplexes could be important for protecting against cancer. The finding was published in the journal PLoS One.

G-quadruplexes could also be implicated in the process of aging, according to the researchers. The structures are thought to form at the ends of chromosomes in regions called telomeres, said Zakian, an expert on telomere biology. Damaged or shortened telomeres are associated with premature aging and cancer.

To explore the role of Pif1 helicases in tackling G-quadruplexes, Bochman and Paeschke purified Pif1 helicases from yeast and bacteria. In test tubes, all of the Pif1 helicases unwound G-quadruplex structures extremely quickly and efficiently, far better than other helicases tested in the same way.

Next, the investigators set up an experiment to determine whether Pif1 acts on G-quadruplexes inside cells. Using a system that could precisely evaluate the effects of G-quadruplex structures on chromosome integrity, the researchers found that normal cells tolerated the addition of a G-quadruplex structure without difficulty, but in cells lacking Pif1 helicases, the G-quadruplex induced high levels of genome instability.

“To me, the most remarkable aspect of the study was the demonstration that Pif1-like helicases taken from species ranging from bacteria to humans and placed in yeast cells can suppress G-quadruplex-induced DNA damage,” Zakian said. “This finding suggests that resolving G-quadruplexes is an evolutionarily conserved function of Pif1 helicases.”

The Zakian lab also found that replicating through G-quadruplexes in the absence of Pif1 helicases not only causes mutations in the DNA at the site of the G-quadruplex but also produces intriguing and totally unexpected “epigenetic” effects on the expression of nearby genes. Epigenetic events cause inherited changes in gene expression that do not involve loss or mutation of DNA. Graduate student Daniela Garcia has proposed that the epigenetic silencing of gene expression near G-quadruplexes in the absence of Pif1 helicases results from the addition or removal of molecular tags on histones, proteins that bind DNA and regulate gene expression. This hypothesis is currently being studied.

The study involved contributions from Petr Cejka and Stephen C. Kowalczykowski of the University of California-Davis, and Katherine Friedman of Vanderbilt University.

Paeschke, Katrin, Matthew L. Bochman, P. Daniela Garcia, Petr Cejka, Katherine L. Friedman, Stephen C. Kowalczykowski & Virginia A. Zakian. Pif1 family helicases suppress genome instability at G-quadruplex motifs. Nature. 2013. doi:10.1038/nature12149.

This research was supported by the National Institutes of Health (V.A.Z., GM026938-34; S.C.K., GM041347), the National Science Foundation (K.L.F., MCB-0721595), the German Research Foundation (DFG), the New Jersey Commission on Cancer Research (K.P.) and the American Cancer Society (M.L.B., PF-10-145-02-01).

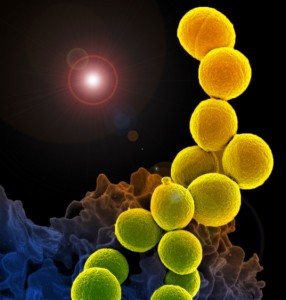

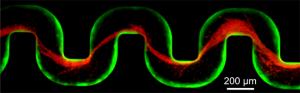
