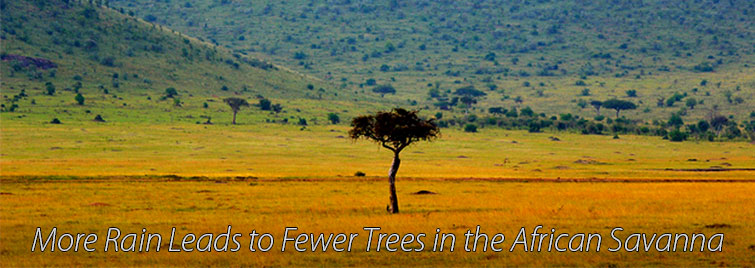

In 2011, an influx of remote sensing data from satellites scanning the African savannas revealed a mystery: these rolling grasslands, with their heavy rainfalls and spells of drought, were home to significantly fewer trees than researchers had previously expected given the biome’s high annual precipitation. In fact, the 2011 study found that the more instances of heavy rainfall a savanna received, the fewer trees it had.

This paradox may finally have a solution, thanks to new work from Princeton University recently published in the Proceedings of the National Academy of Sciences. In the study, researchers use mathematical equations to show that physiological differences between trees and grasses are enough to explain the curious phenomenon.

“A simple way to view this is to think of rainfall as annual income,” said Xiangtao Xu, a doctoral candidate in David Medvigy’s lab and first author on the paper. “Trees and grasses are competing over the amount of money the savanna gets every year and it matters how they use their funds.” Xu explained that when the bank is full and there is a lot of rain, the grasses, which build relatively cheap structures, thrive. When there is a deficit, the trees suffer less than grasses and therefore win out.

To establish these findings, Xu and his Princeton collaborators Medvigy, assistant professor in geosciences, and Ignacio Rodriguez-Iturbe, professor of civil and environmental engineering, created a numerical model that mimics the actual mechanistic functions of the trees and grasses. “We put in equations for how they photosynthesize, how they absorb water, how they steal water from each other—and then we coupled it all with a stochastic rainfall generator,” said Xu.

Whereas earlier analyses considered only total annual or monthly rainfall, understanding how rainfall is distributed across individual days is critical here, Xu said, because it determines whether grasses or trees win the competition for a finite supply of water.

The stochastic rainfall generator draws on rainfall parameters derived from station observations across the savanna. By coupling it with the mechanistic equations describing how the trees and grasses function, the team was able to observe how the plants would respond under different local climate conditions.
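To make the idea of a stochastic rainfall generator concrete, here is a minimal sketch in Python. It is not the model used in the study, and the article does not specify the generator's form. The sketch assumes a common simplification in which each day has a fixed chance of rain and the depth on a rainy day follows an exponential distribution. The function names and parameter values (`storm_prob`, `mean_depth_mm`, the two example regimes) are hypothetical and chosen only for illustration.

```python
import random


def generate_daily_rainfall(n_days, storm_prob, mean_depth_mm, seed=None):
    """Return a list of daily rainfall depths in millimetres.

    Assumption (not from the study): each day is wet with probability
    `storm_prob`, and wet-day depth is exponentially distributed with
    mean `mean_depth_mm`.
    """
    rng = random.Random(seed)
    series = []
    for _ in range(n_days):
        if rng.random() < storm_prob:
            # expovariate takes a rate, i.e. 1 / mean
            series.append(rng.expovariate(1.0 / mean_depth_mm))
        else:
            series.append(0.0)
    return series


def summarize(series):
    """Total depth, number of wet days, and mean depth per wet day."""
    wet_days = [depth for depth in series if depth > 0.0]
    total = sum(series)
    mean_wet = total / len(wet_days) if wet_days else 0.0
    return {"total_mm": total, "wet_days": len(wet_days), "mean_wet_day_mm": mean_wet}


# Two hypothetical regimes with the same expected daily rainfall
# (0.30 * 5 mm = 0.10 * 15 mm = 1.5 mm) but different intensity.
N_DAYS = 365 * 200
frequent_light = generate_daily_rainfall(N_DAYS, storm_prob=0.30, mean_depth_mm=5.0, seed=1)
rare_heavy = generate_daily_rainfall(N_DAYS, storm_prob=0.10, mean_depth_mm=15.0, seed=1)

if __name__ == "__main__":
    print("frequent, light:", summarize(frequent_light))
    print("rare, heavy:    ", summarize(rare_heavy))
```

Both series deliver roughly the same long-run total, yet one arrives as many light showers and the other as fewer, heavier events. That is the kind of difference that annual or monthly totals would hide and that a plant model driven by daily rainfall could respond to.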

The research team found that under very wet conditions, grasses have an advantage because they can quickly absorb water and support high photosynthesis rates. Trees, with their tougher leaves and roots, are able to survive better in dry periods because of their ability to withstand water stress. But this amounts to a disadvantage for trees in periods of intense rainfall, as they are comparatively less effective at utilizing the newly abundant water.

“We put realistic rainfall schemes into the model, then generated corresponding grass or tree abundance, and compared the numerical results with real-world observations,” Xu said. If the model output matched the real-world data, the researchers could say it offered a viable explanation for the unexpected phenomenon, one that traditional models do not support. That is exactly what they found. They tested the model against field measurements from a well-studied savanna in Nylsvley, South Africa, and from nine other sites along the Kalahari Transect, as well as remote sensing data from across the whole continent. At each site, the model accurately predicted the observed tree abundance.

The work rejects the long-held theory of root niche separation, which predicts that trees will outcompete grasses under intense rainfall, when the soil becomes saturated, because their heavy roots penetrate deeper into the ground. “But this ignores the fact that grasses and trees have different abilities for absorbing and utilizing water,” Xu said. “And that’s one of the most important parts of what we found. Grasses are more efficient at absorbing water, so in a big rainfall event, grasses win.”

“Models are developed to understand and predict the past and present state — they offer a perspective on future states given the shift in climatic conditions,” said Gaby Katul, a Professor of Hydrology and Micrometeorology in the Nicholas School of the Environment at Duke University, who was not involved in the research. “This work offers evidence of how shifts in rainfall affect the tree-grass interaction because rainfall variations are large. The approach can be used not only to ‘diagnose’ the present state where rainfall pattern variations dominate but also offers a ‘prognosis’ as to what may happen in the future.”

Several high-profile papers over the last decade predict that periods of intense rainfall like those described in the paper will become more frequent around the globe, especially in tropical areas, Xu said. His work suggests that these changes in global climate will eventually lead to diminished tree abundance on the savannas.

“Because the savanna takes up a large area, which is home to an abundance of both wild animals and livestock, this will influence many people who live in those areas,” Xu said. “It’s important to understand how the biome would change under global climate change.”

Furthermore, the study highlights the importance of understanding the structure and pattern of rainfall, not just the total annual precipitation, which is where most research in this area has traditionally focused. Fifty years from now, a region may still receive the same overall depth of precipitation, but if the intensity has changed, the abundance of grasses and trees will change with it. This, in turn, will influence the herbivores that subsist on them and other animals in the biome, essentially affecting the entire ecosystem.

Xu said it would be difficult to predict whether such changes would have positive or negative impacts. But he did say that more grasses mean more support for cows, horses and other herbivores. On the other hand, fewer trees mean that less CO2 is captured from the atmosphere, and that there is less habitat for birds and other animals that rely on the trees for survival.

What the model does offer is an entry point for better policies and decisions to help communities adapt to future changes. “It’s just like with the weather,” Xu said. “If you don’t read the weather report, you have to take what nature gives you. But if you know in advance that it will rain tomorrow, you know to bring an umbrella.”

This work was supported by the Princeton Environmental Institute and the Andlinger Center for Energy and the Environment at Princeton University.

Xiangtao Xu, David Medvigy, and Ignacio Rodriguez-Iturbe. Relation between rainfall intensity and savanna tree abundance explained by water use strategies. Published online September 29, 2015, doi: 10.1073/pnas.1517382112. PNAS October 5, 2015.
