By Staff

Researchers from Princeton University have identified genes important for age-related declines in memory in adult worm neurons, cells whose gene activity had not previously been profiled. The research, published in the journal Nature, could eventually point the way toward therapies to extend life and enhance health in aging human populations.

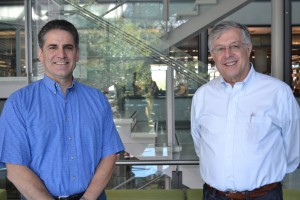

“The newly discovered genes regulate enhanced short-term memory as well as the ability to repair damaged neurons, two factors that play an important role in healthy aging,” said Coleen Murphy, a professor of Molecular Biology and the Lewis-Sigler Institute for Integrative Genomics, director of the Glenn Center for Quantitative Aging Research at Princeton, and senior author on the study. “Identifying the individual factors involved in neuron health in the worm is the first step to understanding human neuronal decline with age.”

The small soil-dwelling roundworm Caenorhabditis elegans contains genes that determine the rate of aging and overall health during aging. Mutations in one of these genetic pathways, the insulin/IGF-1 signaling (IIS) pathway, can double worm lifespan. Similar mutations have been found in long-lived humans.

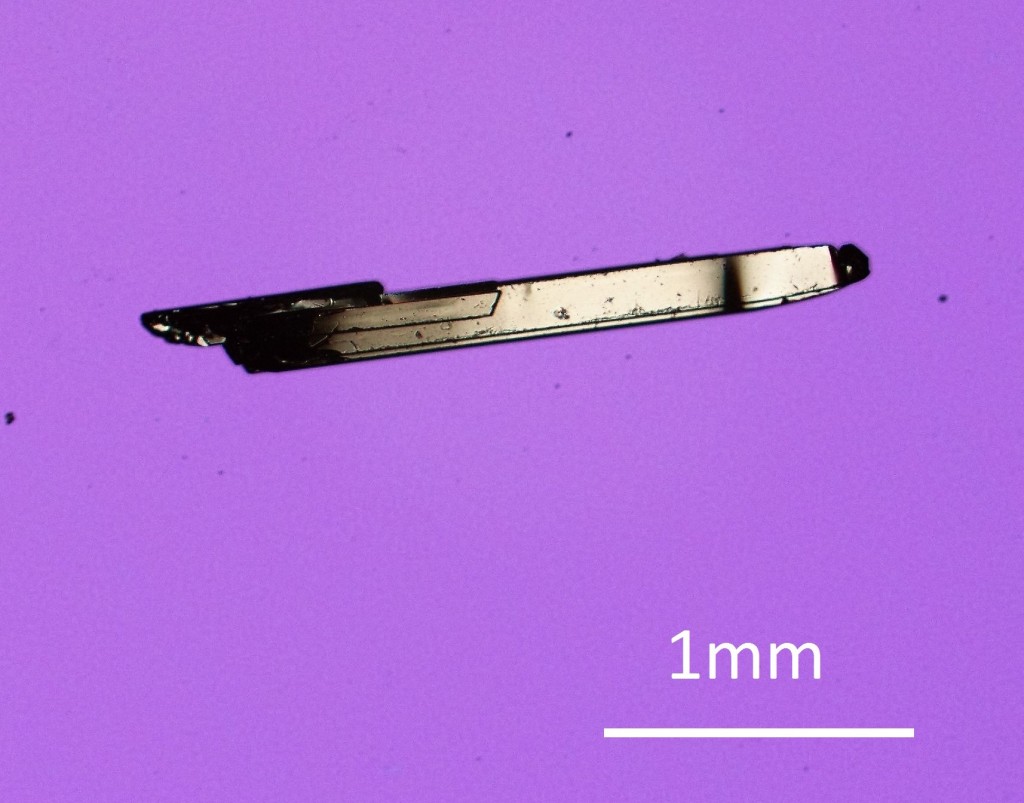

But studying the IIS mutation in adult worm neurons was difficult because the adults have a thick, durable covering that protects the neurons.

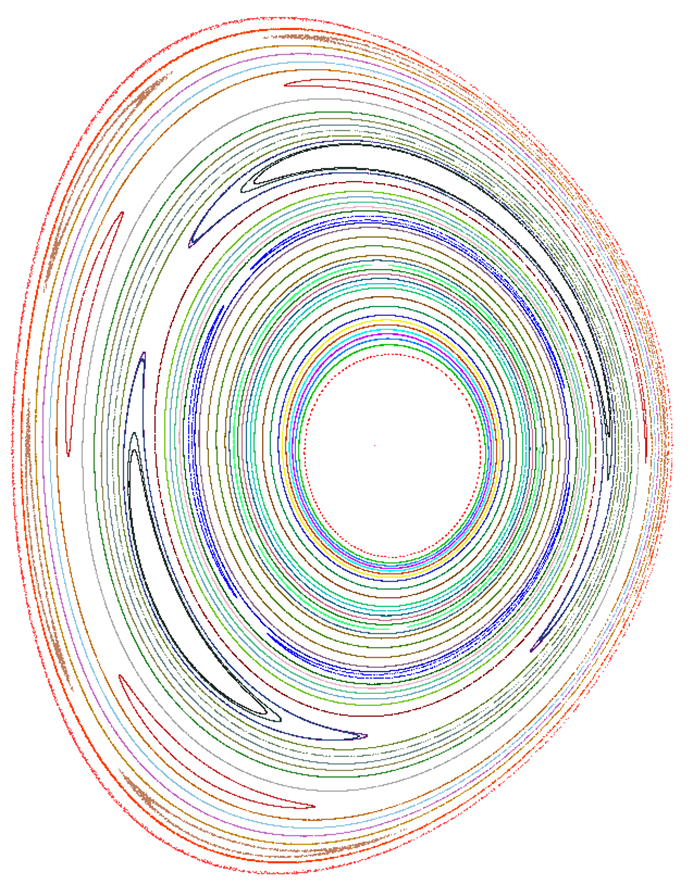

Using a new technique they developed to break up the tough outer covering, researchers at Princeton succeeded in isolating adult neurons, which enabled the detection of the new set of genes regulated by the insulin/IGF-1 signaling pathway.

“Our technique enabled us to study gene expression in adult neurons, which are the cells that govern cognitive aspects such as memory and nerve-regeneration,” said Murphy, whose research on aging is funded in part by the National Institutes of Health. “Prior to this work, researchers were only able to examine gene regulation either using adult worms or individual tissues from young worms.”

The work allowed co-first authors Rachel Kaletsky and Vanisha Lakhina to explore why long-lived IIS mutants maintain memory and neuron-regeneration abilities with age. Until now, the known targets of the insulin longevity pathway were located mostly in the intestine and skin of the worm rather than the neurons. Kaletsky is a postdoctoral research fellow and Lakhina is a postdoctoral research associate in the Lewis-Sigler Institute.

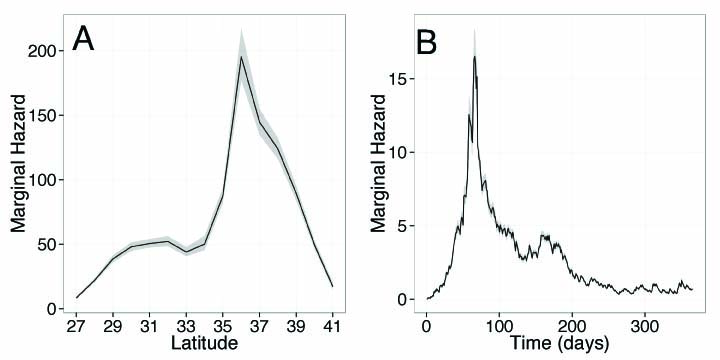

Kaletsky worked out the new way to isolate neurons from adult worms, and with Lakhina, proceeded to profile the gene activity in adult C. elegans neurons for the first time. They discovered that the IIS mutant worms express genes that keep neurons working longer, and that these genes are completely different from the previously known longevity targets. They also discovered a new factor that is responsible for nerve cell (axon) regeneration in adult worms, which could have implications for human traumatic brain injury.

“Kaletsky and Lakhina developed a new technique that is going to be used by the entire worm community, so it really opens up new avenues of research even beyond the discoveries we describe in the paper,” Murphy said.

One of the newly identified genes, fkh-9, regulates both enhanced memory and neuronal regeneration in IIS mutants. Previous studies have detected only one other gene that regulates neuronal regeneration in the mutants, demonstrating the power of the technique to identify new gene regulators. The researchers also found that fkh-9 gene expression is required for long lifespan in many IIS mutants, but it did not play that role in neurons, suggesting the gene governs multiple outcomes in the worm.

Murphy’s lab is now working to understand how fkh-9 works to influence memory, axon regeneration, and lifespan. The gene codes for a protein, FKH-9, that acts as a transcription factor, meaning it controls the expression of other genes and is likely part of a larger regulatory network. FKH-9 also appears to regulate different processes in different tissues: It is required in neurons for memory and axon repair, but not for lifespan. Murphy’s group is working to figure out how FKH-9 acts in distinct tissues to regulate such different processes.

The study provides a more complete picture of how IIS mutants control gene expression in different tissues to promote healthy aging, Murphy said.

“fkh-9 is likely only one of the exciting genes that will emerge from using this technique,” Murphy said. “By identifying the suite of IIS-regulated neuronal genes, there are many candidates for follow-up, only a fraction of which have been characterized in any great detail.”

Other contributors to the study included Rachel Arey, a postdoctoral research fellow; former graduate students April Williams and Jessica Landis; and Jasmine Ashraf, a research specialist in the Lewis-Sigler Institute.

Additional funding for the study was provided by the Keck Foundation, the Ruth L. Kirschstein National Research Service Awards, the National Science Foundation and the New Jersey Commission on Brain Injury Research.

The article, “The C. elegans adult neuronal IIS/FOXO transcriptome reveals adult phenotype regulators,” by Rachel Kaletsky, Vanisha Lakhina, Rachel Arey, April Williams, Jessica Landis, Jasmine Ashraf and Coleen T. Murphy, was published in the journal Nature online ahead of print on December 14, 2015. doi:10.1038/nature16483.
