Group 24:

Bereket Abraham

Andrew Ferg

Lauren Berdick

Ryan Soussan

P2: Contextual Inquiry and Task Analysis

The Cereal Killers

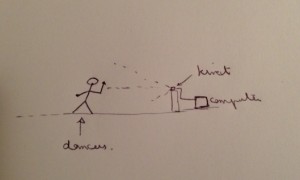

Brisq: A Gesture Control Device

Problem: Current WIMP and touchscreen interfaces force you to constantly use your hands in order to interact with a computer.

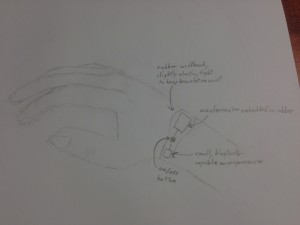

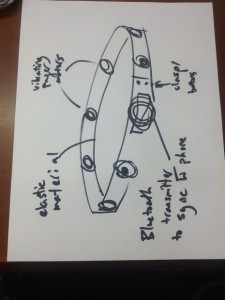

Solution: We want to create a wristband that allows you to map hand and arm motions to computer commands. We also want to create software that allows the user to create the commands/routines and map gestures on their own.

1. Interviews

We have several target groups for the product, each of which can benefit in a different way. The main ones we identified were housewives who need to interact with their computer while their hands are dirty from cooking, amputees who have trouble interacting with computers, heavy computer users who would like to make their computing experience more efficient, and DJs who would like more fluid control of their music. Housewives are relevant because cooking can be messy, and they often enjoy trying new recipes, which nowadays are commonly accessed on digital devices. If they could scroll through their recipes without actually touching the device, it would keep the device clean and out of danger, and would save paper. For amputees, we felt that gestures could enhance their computing experience; for example, someone missing a hand could put on the bracelet and no longer have to rely solely on their one available hand. For heavy computer users, we felt there were many programs, games, and shortcuts that could be mapped to gestures, saving these users time and making them quicker with their computers. Repetitive tasks such as going to the desktop, opening a web browser, and changing a song could be done with a gesture. Finally, we thought DJs could use the device since gestures would give them a new way to control their equipment that could be more fluid and fun. We interviewed four people from three different target groups.

The first person we interviewed was a housewife. We interviewed her over the phone around 8:00 pm. She is 52 years old and lives with her husband and two children, aged 11 and 15. She enjoys being with her family. We asked her some questions about her daily routine before introducing our device to her. She is a stay-at-home mom, and runs errands and does chores until she has to pick up her kids from school. She starts cooking dinner around 5:30 so that they can eat as a family when her husband arrives home at 6:30. When asked about cooking, she said she has a set of meals she switches between for dinner. We asked if she ever tries new meals, and she said she does occasionally, maybe once every week or two. When asked where she gets her new recipes, she said the internet. She normally prints out the recipes and follows the printed copy. She does have a laptop, though, so when introduced to the idea of being able to scroll down with a gesture bracelet while cooking, she immediately thought it would be nice to save paper and eliminate the hassle of running out of or low on paper and ink. She said she would consider using her laptop as a cooking aid if she had the bracelet, but wasn’t sure if she would like wearing a bracelet while cooking. She was also worried it would get damaged while she cooked. Overall, she had mixed feelings towards the idea, and wasn’t sure if she would use it.

The next person we interviewed was a DJ. He works for various clubs, parties, and bars, and we interviewed him while he was setting up to DJ a party at an eating club. He was concerned with getting more gigs and with having a very responsive turntable system. When we asked if he felt his controls could be helped with a gesture option, he was excited by the idea but was worried about the delay. He thought it wouldn’t be that helpful if he had to spend time initiating a gesture, and wasn’t sure what specifically it would be used for, since his DJ equipment was analog so that it would respond quickly to his changes. He thought it might be useful as a novelty item, in slower sections, or when he was talking on the microphone.

The next target group we interviewed was heavy computer users who were more tech-savvy. We will call the first interviewee “Steve”.

We interviewed Steve in the Frist Gallery. Steve is a heavy computer user and multi-tasker. He really enjoys video games. He would definitely consider using our product. Steve once had carpal tunnel syndrome after using his computer far too often, and he had to stop using the mouse. He was left with no alternative, which made it very difficult for him to use his computer, and he had to limit his use of the machine. He feels he would probably have used a gesture controller like ours had it been available, and that it would have made his computer use much more painless and comfortable.

He says gesture control would make simple shortcuts for him – he would love to see anything with multiple steps reduced into one thing. When he first gets up, he has a routine with his computer, opening his favourite tabs and websites like Facebook, mail, YouTube. It would be great for him if with one swipe of his hand from his bed he could start those up. If he could just open the browser and then swipe his hand to get his favourites that would make his life easier. Conversely, it would also work for a shutdown procedure: if with one hand gesture he could save all his documents instead of having to go through all the windows and then turn off the computer.

Steve would like to see in the future the hand gesture extended into finger motion as well, to make more precise movements. If sensitivity could spread to the fingers without making the device bulky, it would definitely make the product even more useful.

Most importantly, he states that you need a way to activate the gesture control device. He would like to see us put some kind of switch or button on the gesture bracelet so that normal actions are not confused with gestures.

Last, we interviewed “Michael”.

We interviewed Michael in Frist at a first floor table. Michael is also a heavy computer user. He considers himself a multi-tasker. He thinks this would be a product that he would use, because he thinks the idea of being able to control the computer even when he is not right there would be useful. His pet peeve with the mouse is clicking, dragging and resizing windows. He really gets annoyed with the little pointer. He feels that gesture control would just make that part of his computer use a lot easier.

Michael has also had to use Smart Boards for some of his many presentations, but he has had to stand right next to these boards. Even though the board is quite advanced, he still feels that being able to step away from it would make presentations more effortless, and that swiping through the slides would add grandeur to them. He says this idea reminds him of the Iron Man movies.

There are also more common tasks for which he would want to use gesture control. For example, skipping songs when you are not on the computer. Perhaps you are playing music with your computer on your desk, but you are all set up in your bed studying. Being able to manipulate the computer from far away is the main point that he likes about this idea.

He thinks it would be interesting if this could be used for art projects, for example if gestures could control a design program and the hand could be used to trace or paint something. He would also find it useful if there could be a gesture for each website that he generally visits.

To conduct the interviews, we first asked interviewees to describe themselves, their daily routines, and their interactions with computers. Based on their answers, or on the reason we had sought them out, we then asked more specifically about a task particular to their target group. We asked them about the task, and whether they felt gestures would improve it. We interviewed people in various environments: over the phone, before an eating club party, and in Frist.

A common theme we noted was that people were excited by the idea of a gesture device and immediately had an idea or two of where it could be useful. There was a lot of talk about using it to aid home media, for computer actions that get tedious, and for video games. People often did not understand the limitations of the device, and sometimes proposed ideas, such as moving workout equipment with a gesture or controlling sports balls, that were not feasible with a simple computer program. We noticed that heavy computer users seemed to have more ideas for its application, while the housewife and DJ were more concerned with how it would serve their main objective. This is probably because computers are a significant part of heavy computer users’ lives, so they had already been annoyed by some of their shortcomings. We really liked some of the ideas Steve had, including an aid for carpal tunnel syndrome and gestures for opening and switching between common programs.

2. Task Analysis

1. The main users for our gesture bracelet fall into specific groups with specific tasks, plus a more general range of people seeking to add gesture functionality to computer programs of their choice. Our target groups are housewives, heavy computer users, DJs, amputees, and computer users who rely on their computers for home media.

2. Housewives currently cook while following online recipes. Heavy computer users are always interacting with their computers. DJs use turntables to play music at parties, and amputees use computers for everyday functions.

3. Housewives would like to flip through their online recipes without getting their computer dirty. For general use, computer users would like to add gestures to programs where they make the action more natural, or enable them to interact with a computer at a distance. These interactions include giving a slideshow presentation or watching a movie with your computer hooked up to the TV. TV controls could include changing the volume, pausing, playing, etc. without getting up from the couch. Heavy computer users would like to reduce repetitive mouse clicks to relieve carpal tunnel syndrome. Finally, amputees would like a better computing experience and the ability to type emails, browse the web, chat, and more, all faster than they can today.

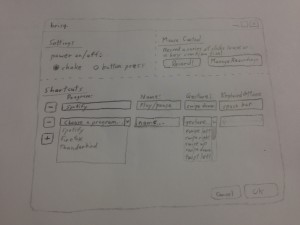

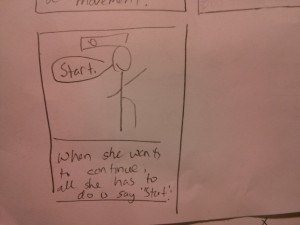

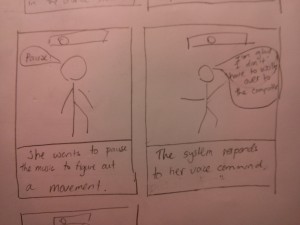

4. Users set the tasks themselves, and use buttons and a computer program to pair them. The user turns the bracelet to a “make gesture” setting and performs the gesture with the bracelet on their wrist. To exit the “make gesture” mode, the user will either perform another gesture or wait a few seconds. The user then tells the computer program to listen to the keyboard inputs that follow and record the input sequence. Both steps can be repeated, and the user can then pair the recorded gestures with the recorded computer shortcuts. For example, the housewife could move her wrist up and down and record the gesture; then, with her web browser and the gesture program open, she would press listen and scroll down in the browser. Last, she would go to a gesture list and drag the up and down movement to the scroll command. Now when she wants to scroll while she is cooking, all she has to do is shake the bracelet, move her hand up and down, and shake the bracelet again. Without her having to move or touch anything, the page will have scrolled down.
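The record-then-pair workflow described above can be sketched in a few lines of Python. This is only an illustration under assumptions: the class names `ShortcutRecorder` and `GestureBindings`, the gesture name `wrist_up_down`, and the use of plain strings such as `"PageDown"` for input events are all hypothetical and not part of our design so far. A real version would capture events from the operating system instead of receiving them through `on_input`.

```python
from dataclasses import dataclass, field


@dataclass
class ShortcutRecorder:
    """Collects input events while 'listen' mode is on (hypothetical helper)."""
    listening: bool = False
    events: list = field(default_factory=list)

    def start(self):
        # The user presses "listen": begin a fresh recording.
        self.listening = True
        self.events = []

    def on_input(self, event):
        # Called for every keyboard/mouse event; ignored unless listening.
        if self.listening:
            self.events.append(event)

    def stop(self):
        # End the recording and return the captured sequence.
        self.listening = False
        return tuple(self.events)


class GestureBindings:
    """Maps a recorded gesture name to a recorded input sequence."""

    def __init__(self):
        self._bindings = {}

    def bind(self, gesture_name, input_sequence):
        # Equivalent to dragging a gesture onto a command in the gesture list.
        self._bindings[gesture_name] = tuple(input_sequence)

    def lookup(self, gesture_name):
        # Unknown gestures map to an empty sequence, so nothing is replayed.
        return self._bindings.get(gesture_name, ())


# The recipe example: record a scroll, then pair it with the wrist gesture.
recorder = ShortcutRecorder()
recorder.on_input("ignored")      # not listening yet, so this is dropped
recorder.start()
recorder.on_input("PageDown")     # assumed name for the scroll input
sequence = recorder.stop()

bindings = GestureBindings()
bindings.bind("wrist_up_down", sequence)
```

Keeping the recorder and the bindings separate mirrors the two steps in the text: gestures and shortcuts are recorded independently and only paired afterwards.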

5. Wherever the person happens to be – in the kitchen, bedroom, living room, etc. The bracelet frees the user from the need to be physically close to the computer. All they have to do is stay within range of Bluetooth / WiFi.

6. Because users set the gestures to fit their specific needs, the only data we need is the shape and form of their hand and arm gestures. An important problem we need to solve is how to recognize these gestures based on sensor data from the bracelet. Thus, we will need to monitor their arm / hand movements and compare them to a history of previous such movements using some sort of algorithm.

The only time users will have to interact directly with their data is when they have to initiate set up and record the hand gestures. After that, the system should operate smoothly, with minimal input from the user.
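We have not chosen a recognition algorithm yet. As one possible sketch of "comparing a movement to a history of previous movements", the Python below uses dynamic time warping (DTW) to find the nearest recorded template. DTW is our stand-in here, not a decision; the function names, the `(x, y, z)` sample format, and the `max_distance` value are assumptions made for illustration.

```python
import math


def dtw_distance(a, b):
    """Dynamic time warping distance between two sequences of (x, y, z) samples."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = cheapest alignment of the first i samples of a
    # with the first j samples of b.
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])  # Euclidean distance per sample
            cost[i][j] = d + min(cost[i - 1][j],      # a advances
                                 cost[i][j - 1],      # b advances
                                 cost[i - 1][j - 1])  # both advance
    return cost[n][m]


def recognize(sample, templates, max_distance):
    """Return the name of the closest template, or None if nothing is close enough."""
    best_name, best_dist = None, float("inf")
    for name, template in templates.items():
        dist = dtw_distance(sample, template)
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name if best_dist <= max_distance else None


# Made-up accelerometer traces, for illustration only.
templates = {
    "up_down": [(0, 0, 1), (0, 0, 2), (0, 0, 1), (0, 0, 0)],
    "swipe_right": [(1, 0, 0), (2, 0, 0), (3, 0, 0), (2, 0, 0)],
}
# The same up-down motion performed slightly slower than the template.
slow_up_down = [(0, 0, 1), (0, 0, 2), (0, 0, 2), (0, 0, 1), (0, 0, 0)]
print(recognize(slow_up_down, templates, max_distance=2.0))
```

DTW tolerates the same gesture being performed faster or slower, which seems useful for hand motions, but its cost grows with the product of the two sequence lengths. The `max_distance` cutoff is one place where a user-facing accuracy setting could plug in: a small value rejects more motions, a large value accepts more.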

7. Within our system, users have the bracelet and the accompanying computer program to work with. Outside of our system, people can use traditional methods to interact with their computers, such as a mouse and keyboard (as PC gamers do). Media center enthusiasts often have a complicated remote or a set of remotes to control their system. Office workers giving presentations can use special clickers or simply the arrow keys on their computer. Amputees, especially those with part or all of their arm, could probably still navigate a computer. However, their experience will be slow and difficult, because almost all input devices (mouse, keyboard, touchpad, joystick) are designed for skilled and continual hand use. Finally, people trying to look at recipes while cooking don’t really have a good alternative tool. The next best thing would probably be voice-activated commands, which are notoriously unreliable.

8. Users could chat in an online forum. There, they could help each other or swap ideas for cool applications.

9. For people within a specific usage group, Brisq will be used almost continuously for a short period of time. For example, while cooking a meal you will be flipping to the next instruction fairly often. Amputees and computer gamers will probably be the highest frequency users, continually clicking on a link or shooting the next alien. Casual users will engage the system a lot less often but over a much wider time scale. While watching a movie, you may change the volume only once or twice. But being able to do that with the wave of your hand will save a lot of effort.

10. For any task, the bracelet will have to respond accurately and with no noticeable delay in order to avoid annoying the customer. While cooking, you usually have some leeway, but there are times when 20 seconds is the difference between browned and burnt. Slideshow presentations are a prime example of the need for accurate, reliable technology. Beforehand, you don’t want to keep your audience waiting while you set up. During the presentation, a few misinterpreted clicks can completely disrupt your flow and distract audience members. For amputees and PC gamers, speed is absolutely essential. A lot of games are reaction based, where you have to outdraw your opponents. In our software’s gesture recognition algorithm, we will have to make tradeoffs between accuracy and speed. Because of the different needs, it might make sense to allow users to choose how much of one they want, at the expense of the other.

11. When things go wrong, either a false positive or a false negative is triggered. A false positive is when the user engages in an arbitrary hand-arm motion that is incorrectly interpreted as a gesture. Thus, things would happen for seemingly no reason. We try to counteract this by creating a “make gesture” mode that you would have to enter by shaking or twisting the bracelet. This is hopefully a unique gesture that would not happen normally. A false negative is when you attempt to perform a gesture but it is not recognized as part of your library of gestures, or when the wrong gesture is recognized and triggers the wrong command. This also needs to be avoided, because it will lead to user frustration and eventually abandonment of our product. We will fight this threat by improving our gesture recognition algorithm and by continuing to train it with every new gesture formed.
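The shake-to-activate idea can be sketched as a small gate in front of the recognizer. The Python below is a sketch under assumptions: the class name `ActivationGate`, the 2.5 g shake threshold, the 3 second window, and the rule that one shake allows one gesture are all invented for illustration and would need tuning on real sensor data.

```python
class ActivationGate:
    """Only lets a gesture through for a short window after a shake."""

    def __init__(self, shake_threshold=2.5, window_seconds=3.0):
        self.shake_threshold = shake_threshold  # in g; assumed value
        self.window_seconds = window_seconds    # assumed value
        self._armed_until = None

    def on_sample(self, magnitude_g, now):
        # A strong enough acceleration spike counts as a shake and arms the gate.
        if magnitude_g >= self.shake_threshold:
            self._armed_until = now + self.window_seconds

    def is_armed(self, now):
        return self._armed_until is not None and now <= self._armed_until

    def accept(self, gesture_name, now):
        # Drop anything recognized while the gate is not armed (false-positive guard).
        if gesture_name is None or not self.is_armed(now):
            return None
        self._armed_until = None  # one gesture per activation
        return gesture_name


gate = ActivationGate()
print(gate.accept("swipe_right", now=0.0))  # not armed yet, so ignored
gate.on_sample(3.0, now=1.0)                # shake detected
print(gate.accept("swipe_right", now=2.0))  # inside the window, so accepted
```

With this structure, everyday arm movement can only trigger a command if it both follows a shake and matches a recorded gesture. A longer window is more forgiving but leaves more room for false positives.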

3. Three Tasks

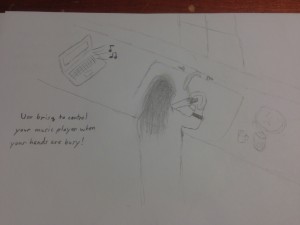

1. People in the kitchen

People listen to music and use online recipes on their computer while cooking. They will wear the bracelet on their wrist, engage it for a gesture and gesture to scroll down or change the song, all without having to clean their hands. This is an easier task since there is only one gesture to recognize, so there will be a practically zero chance of misinterpreting the gesture.

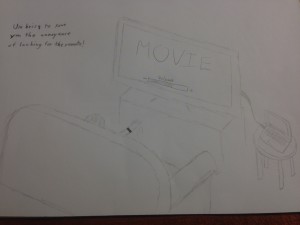

2. Couch Control

The user will play, pause, and change the volume of a movie playing on a computer that is hooked up to a TV, all without leaving the couch. We will need to recognize around 4-6 gestures here, for increasing and decreasing volume, playing and pausing, etc. This makes the task moderately difficult, but 4-6 gestures should have a high success rate (i.e. over 95% based on research papers).

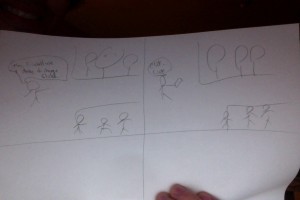

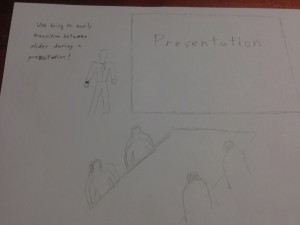

3. Giving Presentations

Users can use the bracelet during a presentation for a variety of tasks: swipe left and right to change slides, twist to switch programs and start playing a short video that you wanted to include, twist the other way to pause it and return to your slideshow, and more. This task could range from a simple 1 or 2 gesture interaction (changing slides) to potentially something much more diverse and complex (maybe even 8 gestures) for a long and interactive presentation.

Interface Design:

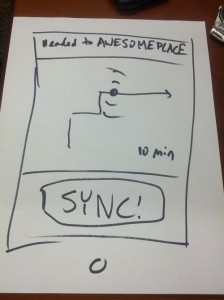

Brisq aims to make common secondary computer tasks simple and streamlined. People listen to music while cleaning the house, look up recipes on an on-screen cookbook while cooking, stream movies from laptops to their TVs (because who still uses DVDs?), and integrate their computers into their lives in all sorts of ways. But no one wants to have to dry off their hands just to change the volume on their computer, and no one wants to wash their hands just to flip the page of their e-cookbook. Our users will be anyone and everyone who regularly uses their computers to complement their day-to-day lives. Enable Bluetooth on your computer, and use our program to easily map a sequence of keyboard presses or mouse clicks to one of 8 pre-programmed gestures that brisq can recognize; then put the brisq bracelet on your wrist and go! The small, stylish bracelet will communicate with your computer whenever it is in Bluetooth range. Shake brisq to turn it on, then swipe right to skip a song while you’re barbecuing; twist to crank up the bass at your party when there’s a dancing crowd around your speakers; turn the volume up on your movie without leaving the couch, or even looking for the remote. Simplify your life. Be brisq.

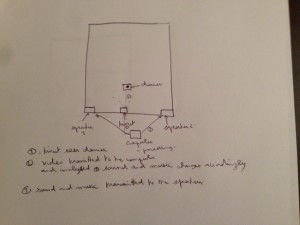

Users can program gestures and map them to computer inputs. They will do a predefined gesture, and brisq will send the signal captured from an accelerometer to a computer via Bluetooth. On the accompanying GUI, users can enter and save keyboard and mouse inputs for specific computer programs, and match them up with gestures. Later, they just shake brisq (or press the small on/off button), then do a gesture to have their computer execute the command saved for the open program. Users can thus substitute simple and intuitive movements for keyboard and mouse inputs that may be long, repetitive, or inconvenient. Existing “smart” bracelets monitor heart rate, act as pedometers, and perform other simple functions, but do not serve as a universal, customizable interface to your computer. Additionally, we are not aware of programs that set shortcuts by “listening” to keyboard and mouse inputs and saving them in a bank; we believe this will make the act of setting shortcuts very simple and intuitive for everyone.
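Because the saved command depends on which program is open, the same gesture can mean different things in different programs. A minimal Python sketch of that lookup follows; the class name `ProgramProfiles`, the program names, and the key names are hypothetical, and detecting which program is currently open is left out because it depends on the operating system.

```python
class ProgramProfiles:
    """Stores one gesture-to-input mapping per program (hypothetical structure)."""

    def __init__(self):
        # program name -> {gesture name -> saved input sequence}
        self._profiles = {}

    def save(self, program, gesture, inputs):
        self._profiles.setdefault(program, {})[gesture] = tuple(inputs)

    def command_for(self, open_program, gesture):
        # Returns None when the open program has nothing saved for this gesture.
        return self._profiles.get(open_program, {}).get(gesture)


profiles = ProgramProfiles()
profiles.save("music_player", "swipe_right", ["MediaNextTrack"])  # skip a song
profiles.save("slideshow", "swipe_right", ["Right"])              # next slide

print(profiles.command_for("slideshow", "swipe_right"))
print(profiles.command_for("browser", "swipe_right"))
```

Returning `None` for an unmapped program means a stray swipe does nothing there, which seems safer than falling back to another program's shortcut.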