Group Number: 20

Members:

Brian conducted one interview on his own and partnered for another interview and helped write the paragraphs on the interviews.

Matt conducted one interview on his own and partnered for another interview and helped write the paragraphs on the interviews.

Josh answered the 11 task analysis questions and helped Ed write the three storyboards and answer the interface design questions.

Ed helped Josh answer the 11 task analysis questions and wrote the three storyboards and answered the interface design questions.

Problem and Solution Overview

Currently, very little of computer science fundamentals is taught in middle schools and high schools. Often, schools are prevented from teaching CS because they have insufficient computer resources to provide each student with a computing environment. Even in college, some students have difficulty learning basic concepts such as binary number representation, memory, and recursion. The barrier to entry for learning CS is simply too high. We hope to create an interactive method for teaching the fundamentals of computer science that does not rely on writing software on a computer. We wish to extend the idea of whiteboard programming: using computer vision and a projector, we want to let users place “blocks” of code on the board, and our tool will respond with visual feedback. Our solution could provide a low-cost, interactive interface that could revolutionize how technology is taught to the masses.

Description of Users Observed

Our target user group is divided into two main groups: teachers using our tool for pedagogy and students learning from our tool. We decided to select three users across both of these groups to get the most balanced perspective.

- The first user that we observed created and teaches an introductory CS course targeted at students with almost no technical background (often students from humanities departments). We think that he was a good choice of user not only because he has years of teaching experience, but also because he is often recognized by his students as an extremely effective and engaging educator. He is extremely interested in CS education, although his background and interest tend to focus on a slightly older age group than the one we are targeting.

- The second user that we interviewed was a student who took the above course. He had no technical background going into the course and came from a heavily humanities-focused background. We thought he was a good user to interview because we were able to talk to him about the frustrations and confusions of learning even the simplest CS concepts. We were also able to readily compare his interview with the one above.

- Our final interviewee was a member of the school board of a large suburban district near Los Angeles. He has been extremely interested and involved in education reform. We thought he would provide interesting insight into the back end of education. He helped us understand how teachers might adopt and use this technology from an institutional standpoint.

CI Interview Description

Our first interview was conducted in the office of the user. We started out by explaining a little about who we were and what the goals of our project were. We then asked him to walk us through a couple of his earliest lessons to help us get a picture of current teaching practices. Our second interview was conducted in a classroom with a student who had taken the aforementioned introductory computer science course. The student is concentrating in a nontechnical field but took the computer science course to fill a distribution requirement and learn some fundamental computer concepts. We asked the student to walk through some of his previous code from the course, explaining what he did in the assignment. Our final interview took place in a common space. This interview was tricky to conduct as a contextual interview. As we will discuss later, professional development for teachers is extremely individual and therefore hard to observe. We therefore made sure to talk about specifics from a more distant perspective.

Our first user, the professor, provided us with an outline of the first few weeks of lectures and discussed how he explains various concepts ranging from binary to memory to logical loops. Our second user, the student, talked briefly about what he liked and disliked about the introductory computer science class he took. In particular, he liked learning about internet security issues as well as computer coding. However, the coding portion of the class was the most difficult for this student to learn. As part of the interview, I asked the student to go through some of his code from the computer science class that he took. When going through the HTML code that he had written, he noted many places where he had to fix syntactical errors. Most of his errors in this particular program came from omitting the ‘/’ character from the end tags. Similarly, his JavaScript errors were almost entirely syntactical, usually because of a missing or extra ‘}’ character. Both the professor and the student agreed that binary was a very hard concept to teach and learn. In particular, the student suggested that most students struggle with the concept of how many decimal numbers can be represented by n bits. Both also agreed that a very difficult concept in computer science is how all computers are actually equivalent, and how all languages can perform the same computation. The professor also mentioned that most students have an incredibly difficult time understanding the abstractions of the computer process. In particular, the student had trouble understanding compiling, assembling, and linking because the concepts were too abstract. However, the professor and student disagreed on some issues. The professor believed that students struggled most with the idea of memory, and more specifically with how memory actually holds data. While the student admitted that memory was difficult, he believed that more students had trouble with binary representations of numbers.
The student also emphasized that he was most frustrated by the syntax problems that he encountered while coding.

After having looked into a teaching lesson itself, we wanted to take a step back and gain more perspective on what it’s like to be a teacher. This is where we turn to our last interview. Our final interviewee, as a member of a school board, has big-picture knowledge of what it is fundamentally like to learn how to teach. The main takeaway we got from the interview is that current pedagogical education is extremely individual. He walked us through all of the professional development activities that a teacher does in a given year (including professional development conferences and school events). There were very few of them (~5 days per year), and they were individually focused. Teachers make their own lesson plans and learn new technological tools on their own. He talked us through the process of integrating iPads into his district and how poorly they are used by many teachers. This individuality contradicts our first interview slightly. Our first user actually published the notes of his class in a book so other teachers could use them to shape their lessons. I would explain that difference as being the difference between K-12 and college education. Ultimately, I think that for the context of our project, we have to understand that K-12 teachers wouldn’t have much support in learning how to use our tool. It must be self-contained, self-teaching, and extremely foolproof if we hope to gain traction with this audience.

Task Analysis Questions

1. Who is going to use the system?

Our target audience is the typical classroom. We envision the product being used with about 20 students and a single teacher. The students don’t need to have any background in CS or experience coding.

2. What tasks do they now perform?

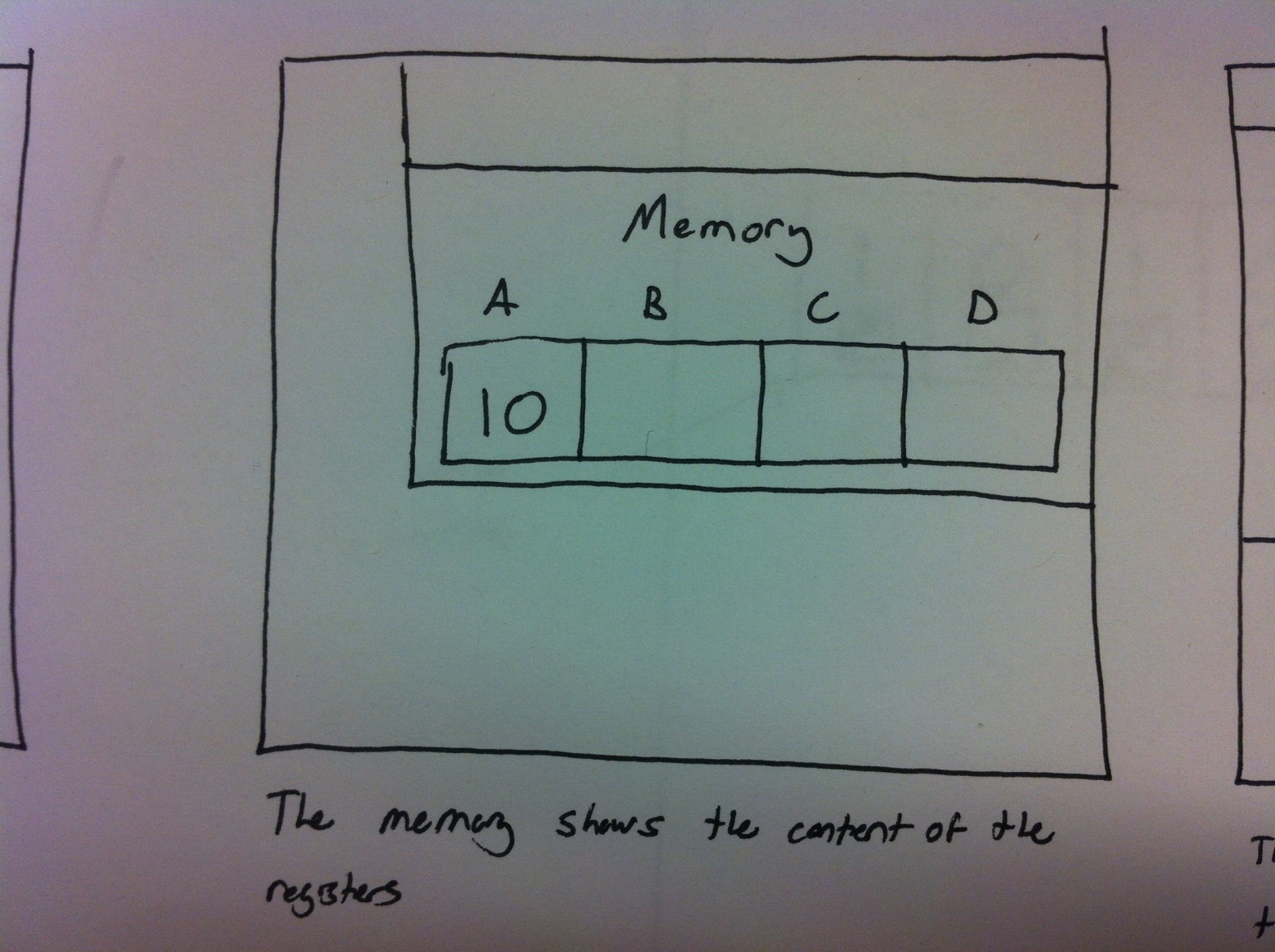

Current CS education tends to be done with “whiteboard coding.” This is the practice of writing code on a whiteboard to demonstrate its features. Other commonly used practices are simple lecture-style teaching and, for certain concepts, abstract drawings. For example, when students first learn about memory, it is often represented as a box to be filled with data. These abstractions of data are very important, as students can quickly get confused when exposed to the more complicated principles that lie beneath them.

3. What tasks are desired?

Typically, educators are looking for a tool to help boost the understanding of their students in a classroom setting. The ability to present basic CS concepts to students in a way that is easy to visualize, understand, and interact with is very desirable. Additionally, a teaching tool that students can use and get feedback from without needing the teacher on hand to give direct instructions is very useful, as it allows students to continue learning on their own and at their own pace.

4. How are the tasks learned?

Currently, teaching is an extremely self-taught process. Teachers individually come up with their own lesson plans. In our system, tasks could be learned through a simple tutorial lesson that would introduce the students to the basic operations and principles behind our system. Concepts would be introduced one at a time, and the interactions between them could be demonstrated as students make progress.

5. Where are the tasks performed?

Tasks are performed in classrooms with a projector and a whiteboard, where there are only a few computers for many students. Our system could potentially operate on any smooth surface to which ID tags could be easily attached and moved around.

6. What’s the relationship between user & data?

Users could potentially have personal profiles that track their progress throughout the lessons. When the system is used in a group setting, it could be controlled by the teacher to set up lessons and track the progress of the class as a whole.

7. What other tools does the user have?

Textbooks, the internet, and toy programming languages (e.g. Scratch)

8. How do users communicate with each other?

Teachers communicate with their students in a classroom setting. Teachers can give their students guidance and instructions as they use the system, and the students can freely ask questions about the current lesson.

9. How often are the tasks performed?

Students would go through a lesson about 2-3 times a week. Multiple lessons could potentially be completed in one class session if they are short.

10. What are the time constraints on the tasks?

Class sessions usually last about an hour. This should be plenty of time to go through most lessons. However, more difficult and complex tasks may need to be broken up into multiple lessons spanning multiple days.

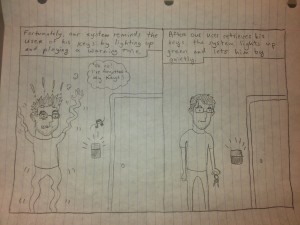

11. What happens when things go wrong?

Ideally, any errors encountered would be a result of improper setup by the user, not flaws in the code itself. If something does go wrong, such as the projector getting out of alignment with the whiteboard or the camera having trouble reading the ID tags, users could simply tell the system to recalibrate. All data and current lesson progress would be saved. Data should also be saved periodically to ensure that if the system fails completely, the users’ current progress is still preserved.

Description of Four Tasks

Tutorial – How to Teach a Lesson (Storyboard not included)

The purpose of this lesson would be to provide groundwork for the teacher and students to build on and to teach them the basic principles of using our system. This lesson could include walkthroughs of specific lessons they should be teaching, highlighting blocks and stepping users through each part of the process. Currently, this is an individual learning task done by teachers. They read textbooks and compile a lesson plan. We would hope to significantly reduce the difficulty of this process.

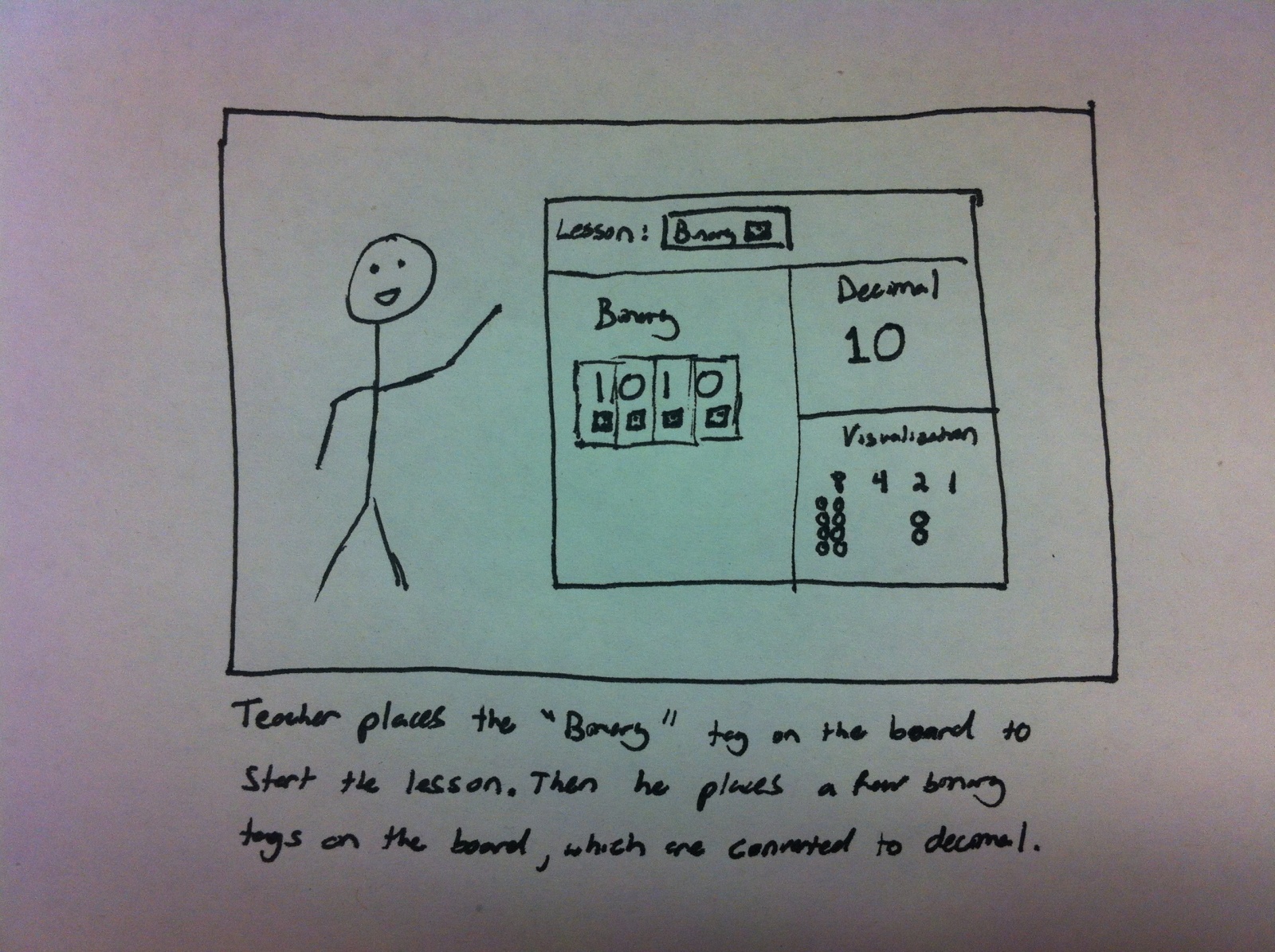

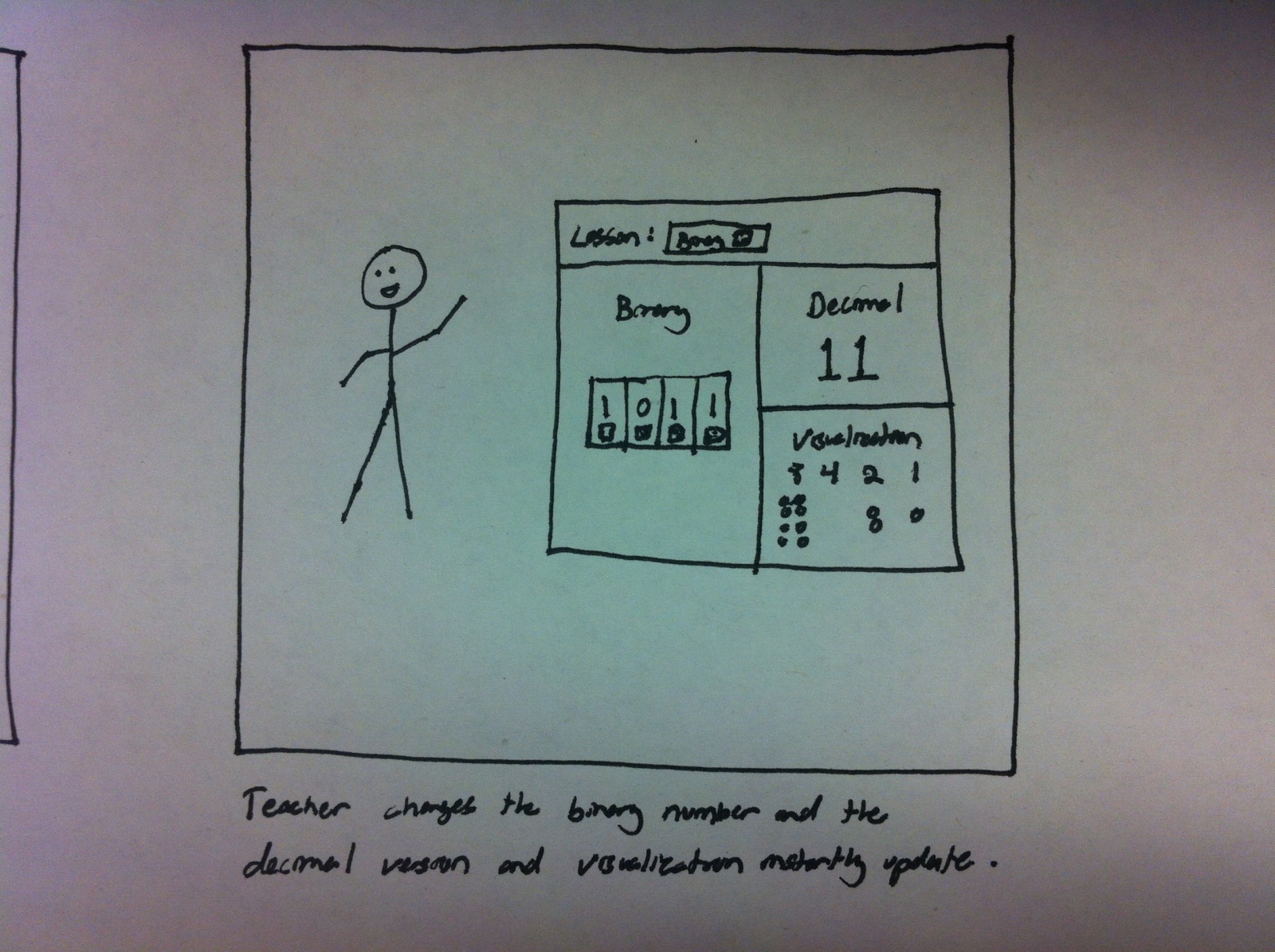

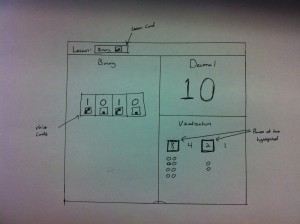

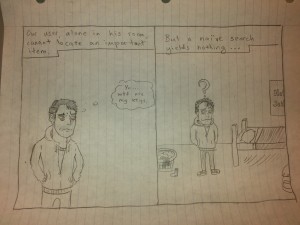

Teaching Number Base (e.g. Decimal, Binary, Octal, Hex)

Both of our first two interviewees said that one of the hardest things for early tech students to learn is binary. This is something that is fundamental to CS but can be conceptually very challenging for students. We hope to help students better understand number bases through visualization. We imagine they would place Binary/Octal/Hex/etc. tiles on the board. They would then see how different number systems represent the same numbers. This would be a moderately difficult task to perform with our system, but it is one that is currently very hard.
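As a rough illustration of what such a lesson might compute behind the scenes, the sketch below converts one number into several bases and counts how many values fit in n bits (the concept the student found hard). The function names `to_base` and `values_representable` are hypothetical and are not part of any existing implementation of our tool.

```python
DIGITS = "0123456789ABCDEF"


def to_base(value: int, base: int) -> str:
    """Return the digits of a non-negative integer in the given base (2 to 16)."""
    if not 2 <= base <= len(DIGITS):
        raise ValueError("base must be between 2 and 16")
    if value < 0:
        raise ValueError("value must be non-negative")
    if value == 0:
        return "0"
    digits = []
    while value > 0:
        # Peel off the lowest digit, then shift the number down by one place.
        value, remainder = divmod(value, base)
        digits.append(DIGITS[remainder])
    return "".join(reversed(digits))


def values_representable(n_bits: int) -> int:
    """Number of distinct values n bits can hold (0 through 2**n - 1)."""
    return 2 ** n_bits


if __name__ == "__main__":
    # The same quantity shown under each tile a student might place.
    for name, base in [("binary", 2), ("octal", 8), ("decimal", 10), ("hex", 16)]:
        print(f"{name:>7}: {to_base(45, base)}")
    print("values in 8 bits:", values_representable(8))
```

Showing one quantity under several tiles at once is meant to reinforce that the base changes only the notation, not the number itself.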

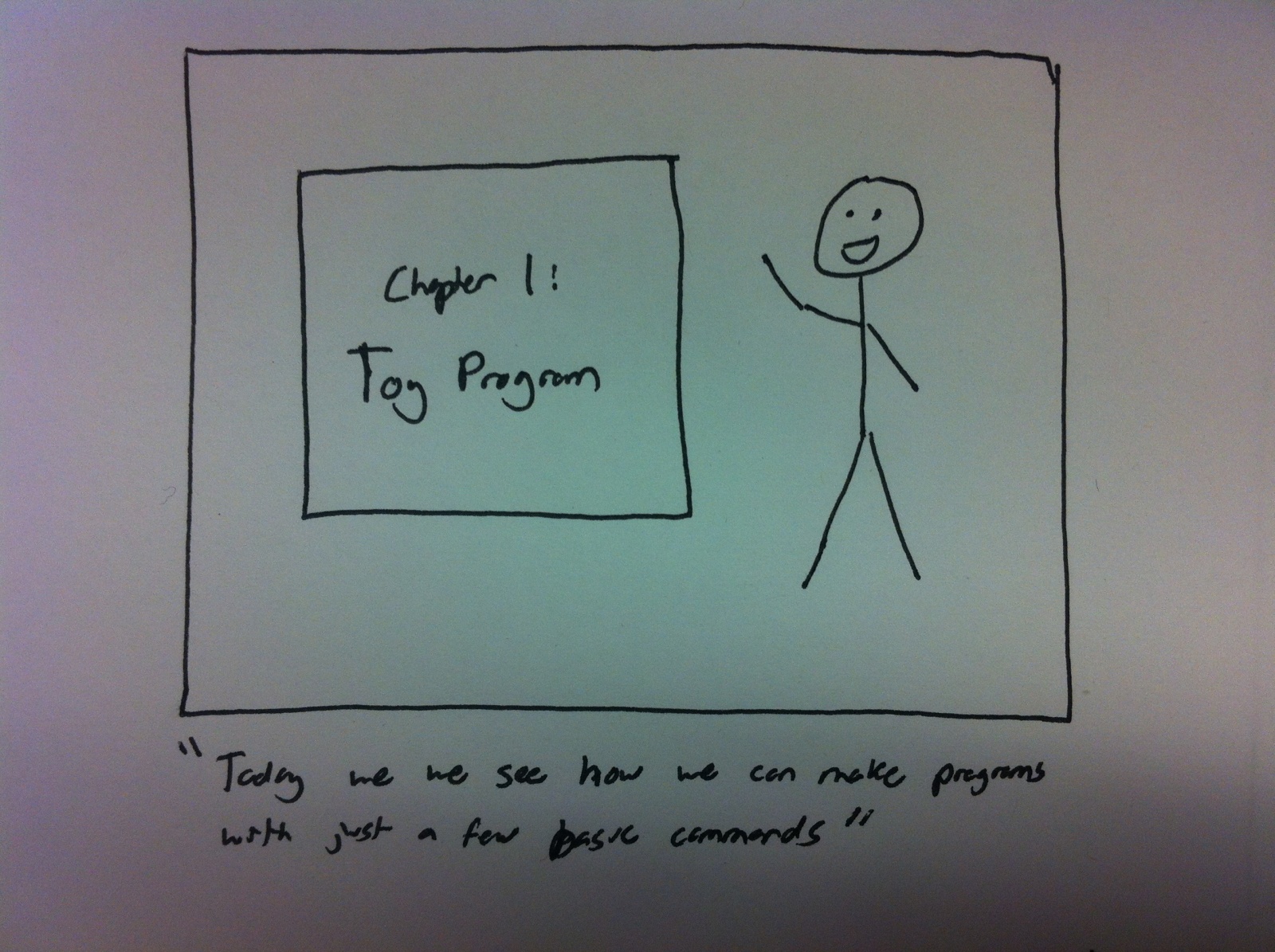

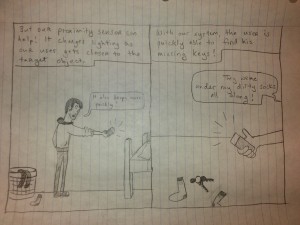

Teach First Toy Program (I/O and intro looping)

Another teaching example suggested in our interviews was an extremely simple toy programming lesson. The lesson essentially works through each of the fundamental parts of a computer and provides a simplified example:

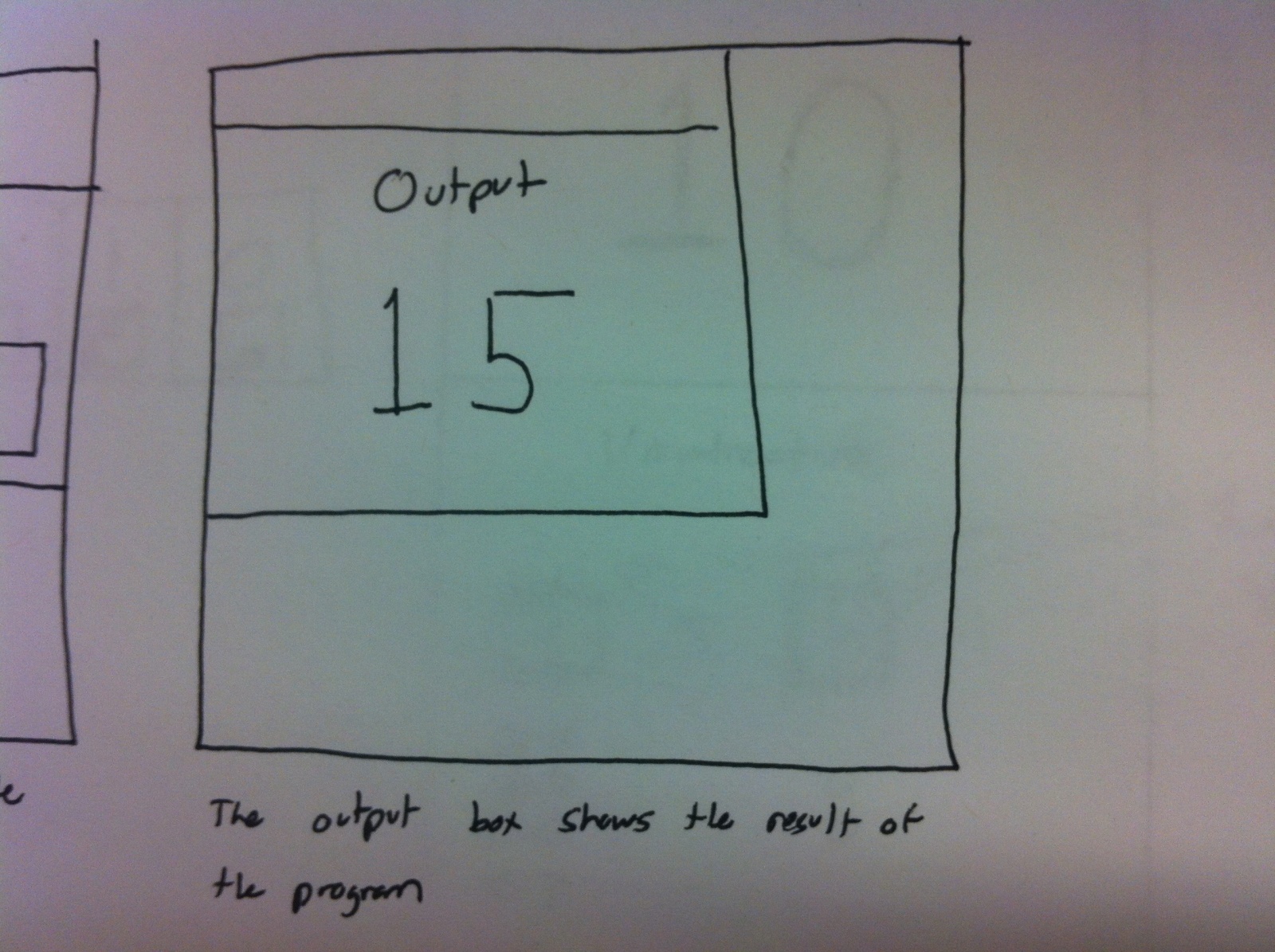

Read in a number from input and print (intro. to I/O)

Read in a number from input, do an operation, and print (intro. to processing)

Read in two numbers from input, print the product (intro. to memory)

Read in numbers from input until a 0 is given. Calculate the sum and print (intro. to loops)

We would create these programs from simple code cards placed on the board, with the inputs placed on the board as well. This lesson (especially the memory portion) is currently difficult to teach and learn, and we hope to reduce that difficulty.
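The four toy programs above can be sketched in ordinary code as follows. This is only an illustration of the logic each lesson step would demonstrate, not the card language itself; the function names are hypothetical, the input cards are modeled as a Python iterator, and doubling is an assumed stand-in for the unspecified "operation" in the second program.

```python
from typing import Iterator


def echo(cards: Iterator[int]) -> int:
    """Intro to I/O: read one number and return it unchanged."""
    return next(cards)


def double(cards: Iterator[int]) -> int:
    """Intro to processing: read one number and apply an operation.

    Doubling is an assumption; the lesson does not name the operation.
    """
    return next(cards) * 2


def product(cards: Iterator[int]) -> int:
    """Intro to memory: the first number must be stored while reading the second."""
    first = next(cards)
    second = next(cards)
    return first * second


def sum_until_zero(cards: Iterator[int]) -> int:
    """Intro to loops: keep reading and adding until a 0 is given."""
    total = 0
    for number in cards:
        if number == 0:
            break
        total += number
    return total


if __name__ == "__main__":
    print(echo(iter([7])))
    print(double(iter([7])))
    print(product(iter([6, 7])))
    print(sum_until_zero(iter([3, 4, 5, 0, 9])))
```

Each step adds exactly one new idea to the previous program, which mirrors the order in which the lesson introduces I/O, processing, memory, and loops.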

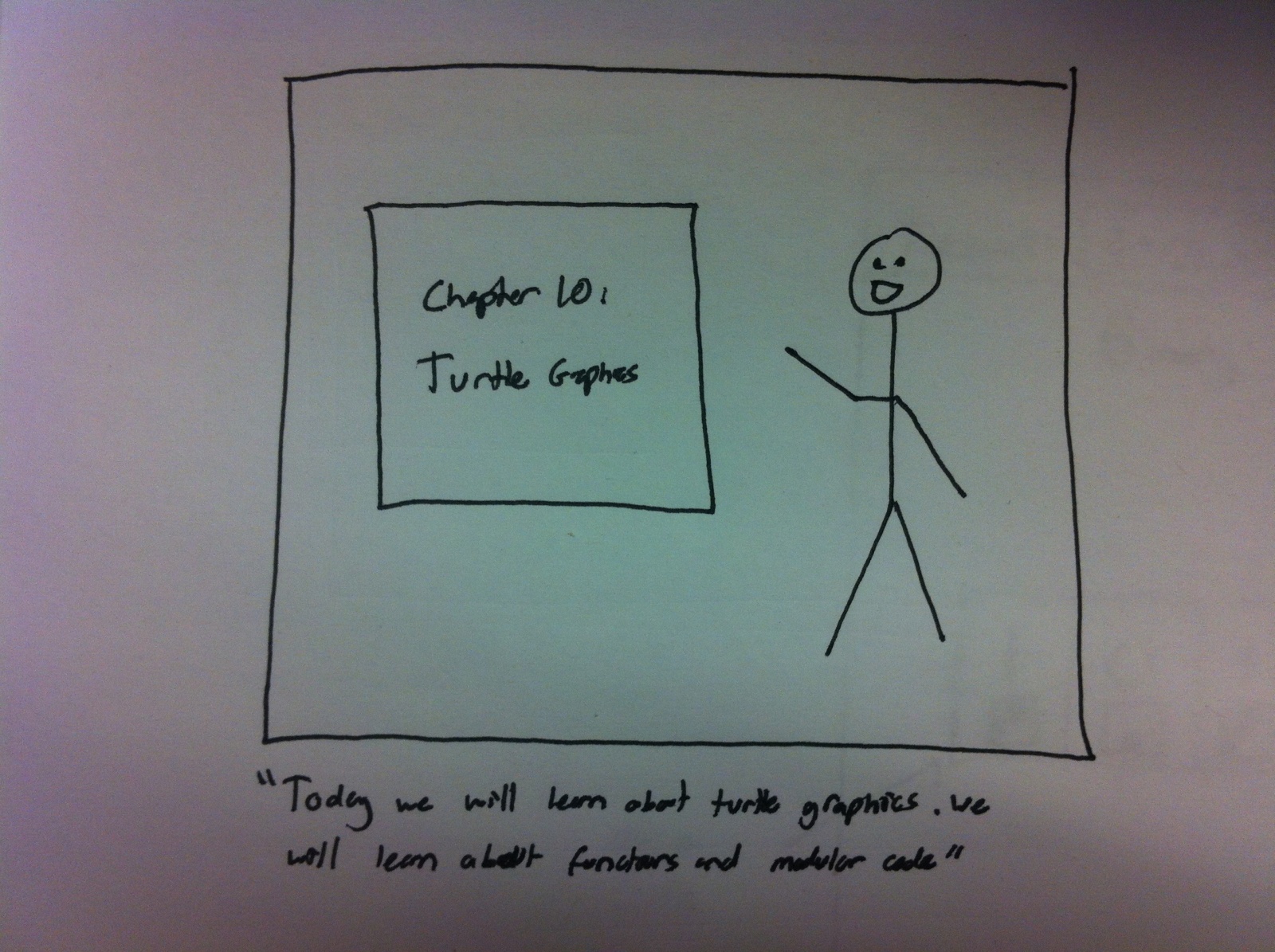

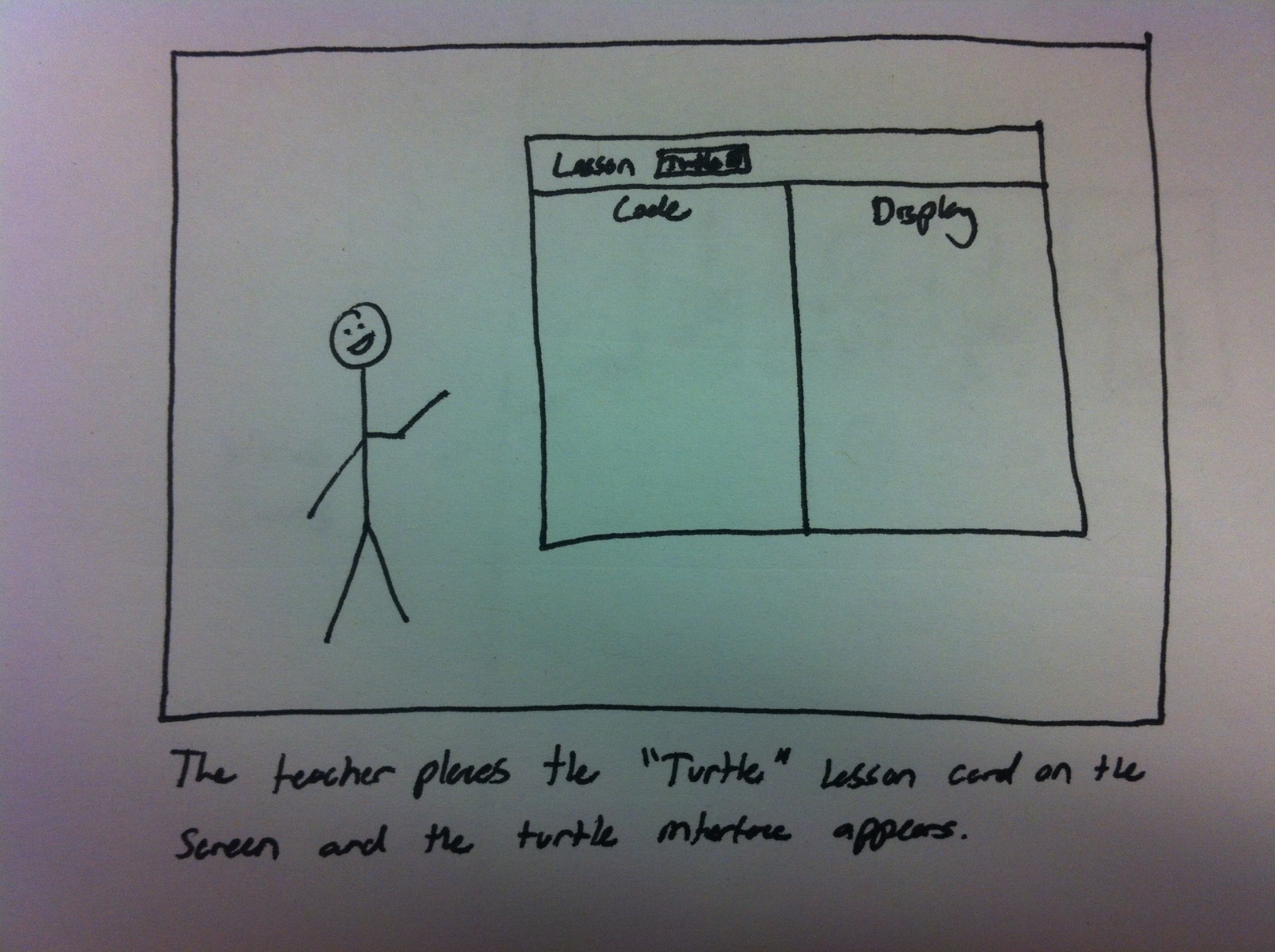

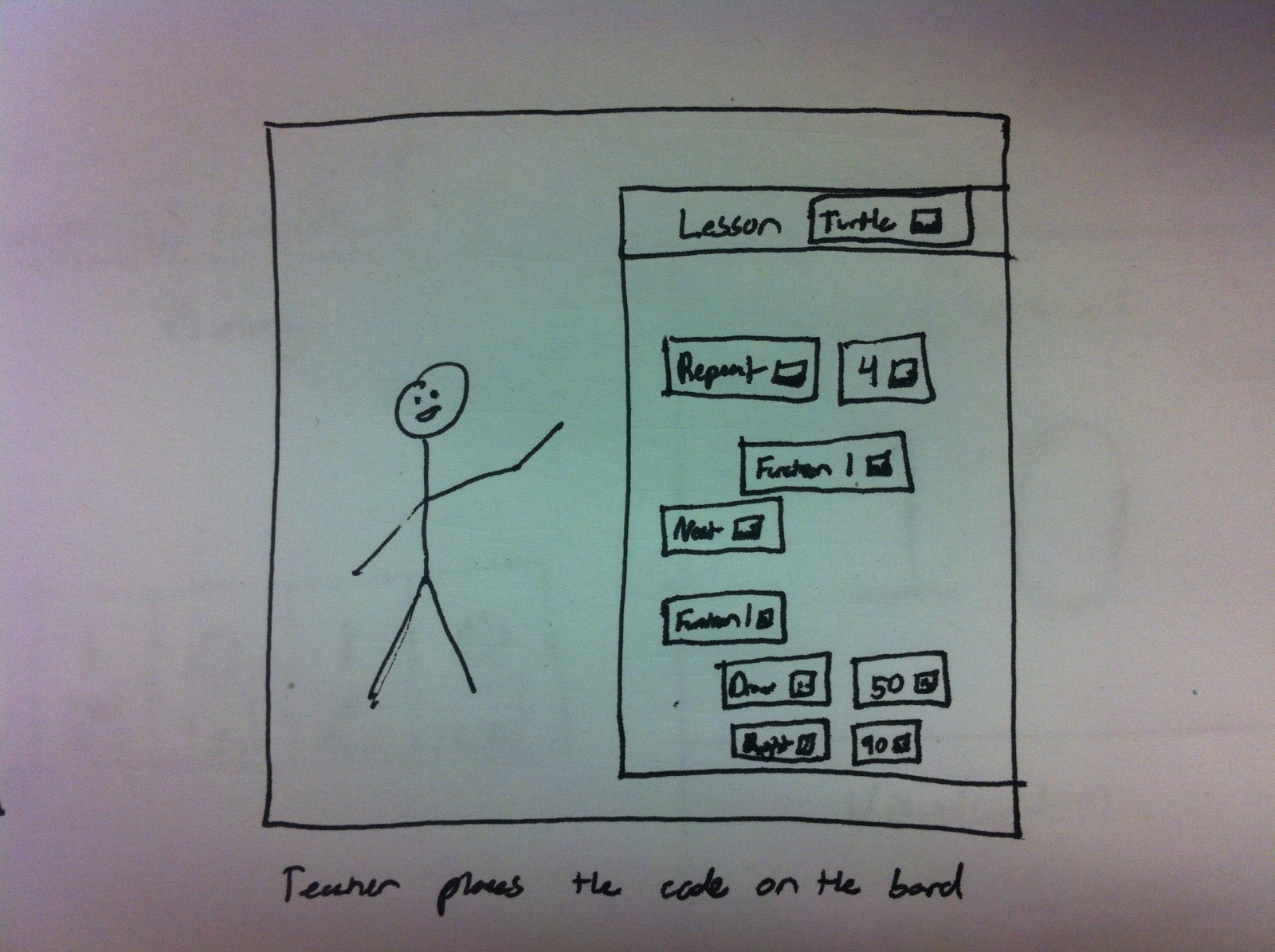

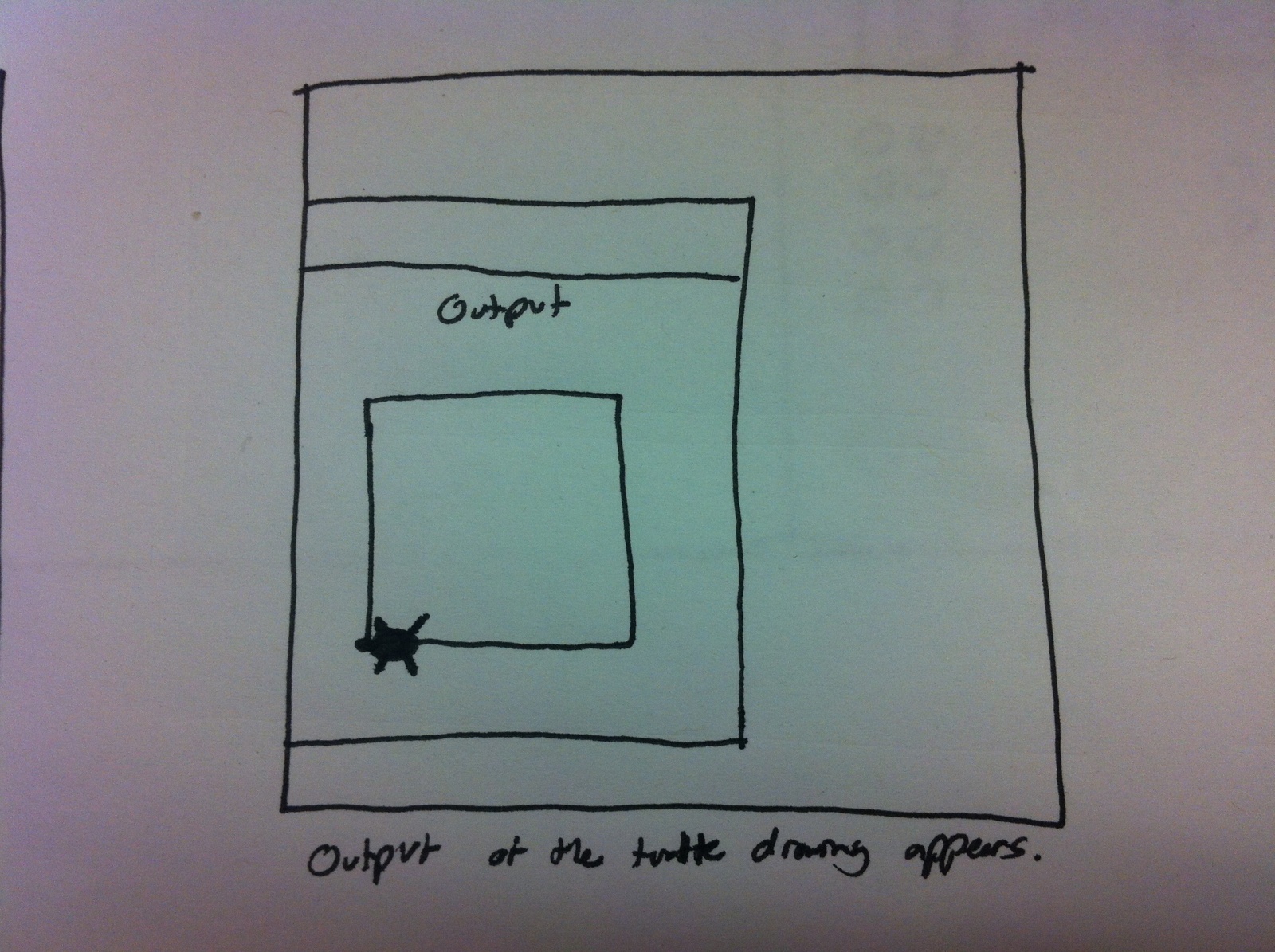

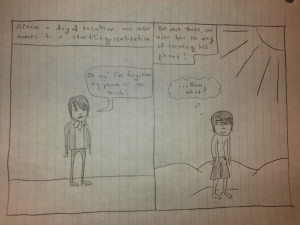

Simplified Turtle Graphics

Our final lesson is the most complex. We want to create a tactile version of the Logo programming language's turtle graphics. Users could control the movement of a virtual turtle and see how its path changes as they change various parts of the code on the board. This would be a great way to introduce functions and show the execution of the program in a visual manner. It would:

Provide “Function” cards that allow users to define custom operations

Use only a basic set of commands, such as forward, turn, and pen color

This language is well established as a good way to teach new students about programming, but the difficulty is that it often requires a computer for each student. We plan to reduce that difficulty by adapting the program to our tool, requiring only one computer per classroom.
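To make the idea concrete, here is a minimal sketch of how a sequence of cards might be interpreted into a turtle path. It is an assumption about one possible design, not our implementation: the command names (`forward`, `turn`, `color`, `call`), the tuple format, and the `run` function are all hypothetical, and the sketch only computes line segments rather than drawing them.

```python
import math


def run(program, functions=None):
    """Interpret a list of (command, argument) cards.

    Returns the line segments drawn, each as (start, end, color).
    "call" looks up a user-defined "Function" card by name.
    """
    functions = functions or {}
    x, y, heading, color = 0.0, 0.0, 0.0, "black"
    segments = []

    def execute(commands):
        nonlocal x, y, heading, color
        for name, arg in commands:
            if name == "forward":
                # Move along the current heading and record the drawn line.
                new_x = x + arg * math.cos(math.radians(heading))
                new_y = y + arg * math.sin(math.radians(heading))
                segments.append(((x, y), (new_x, new_y), color))
                x, y = new_x, new_y
            elif name == "turn":
                heading = (heading + arg) % 360
            elif name == "color":
                color = arg
            elif name == "call":
                execute(functions[arg])
            else:
                raise ValueError(f"unknown command: {name}")

    execute(program)
    return segments


if __name__ == "__main__":
    # One "Function" card defines a side; four calls draw a square.
    functions = {"side": [("forward", 10), ("turn", 90)]}
    program = [("color", "red")] + [("call", "side")] * 4
    for start, end, color in run(program, functions):
        print(color, start, end)
```

Keeping the command set this small means a student can change a single card, such as the turn angle, and immediately see the whole path change. A function card that calls itself would recurse without end in this sketch, so a real system would need a guard against that.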

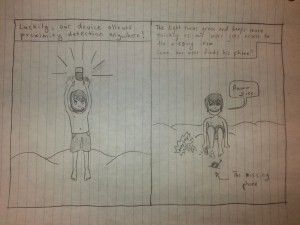

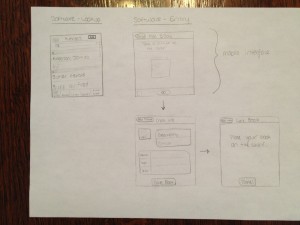

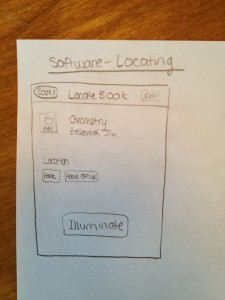

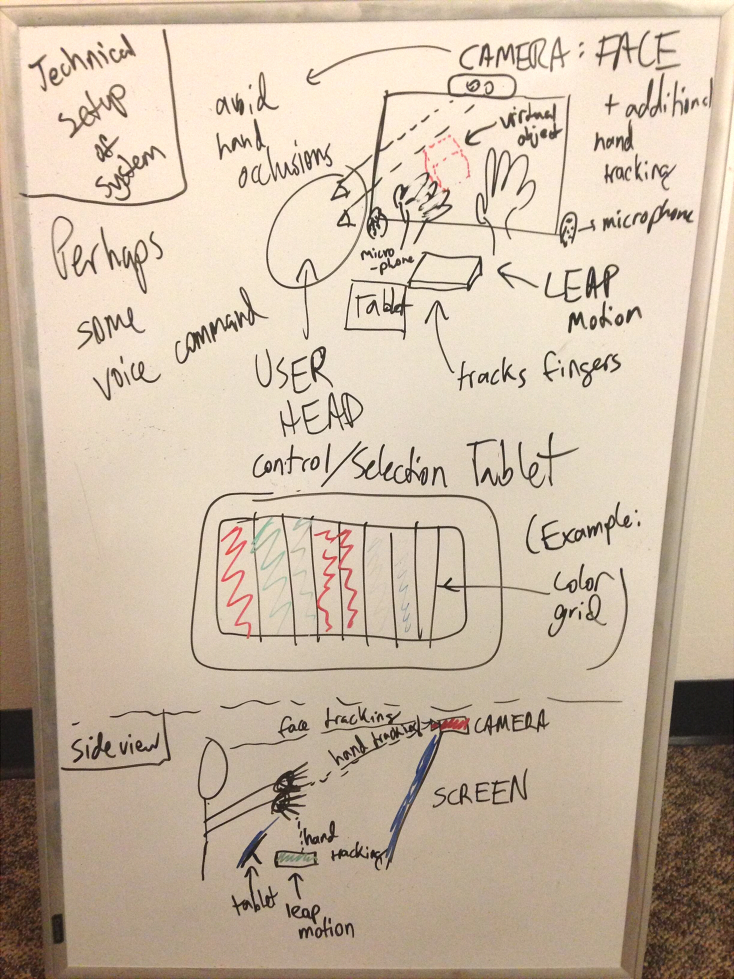

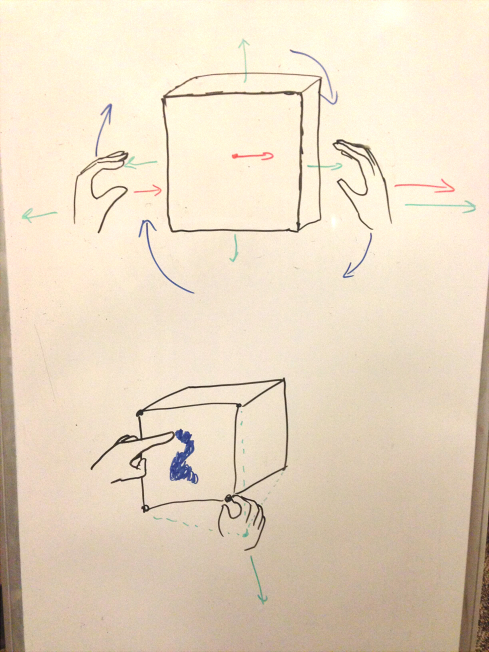

Interface Design

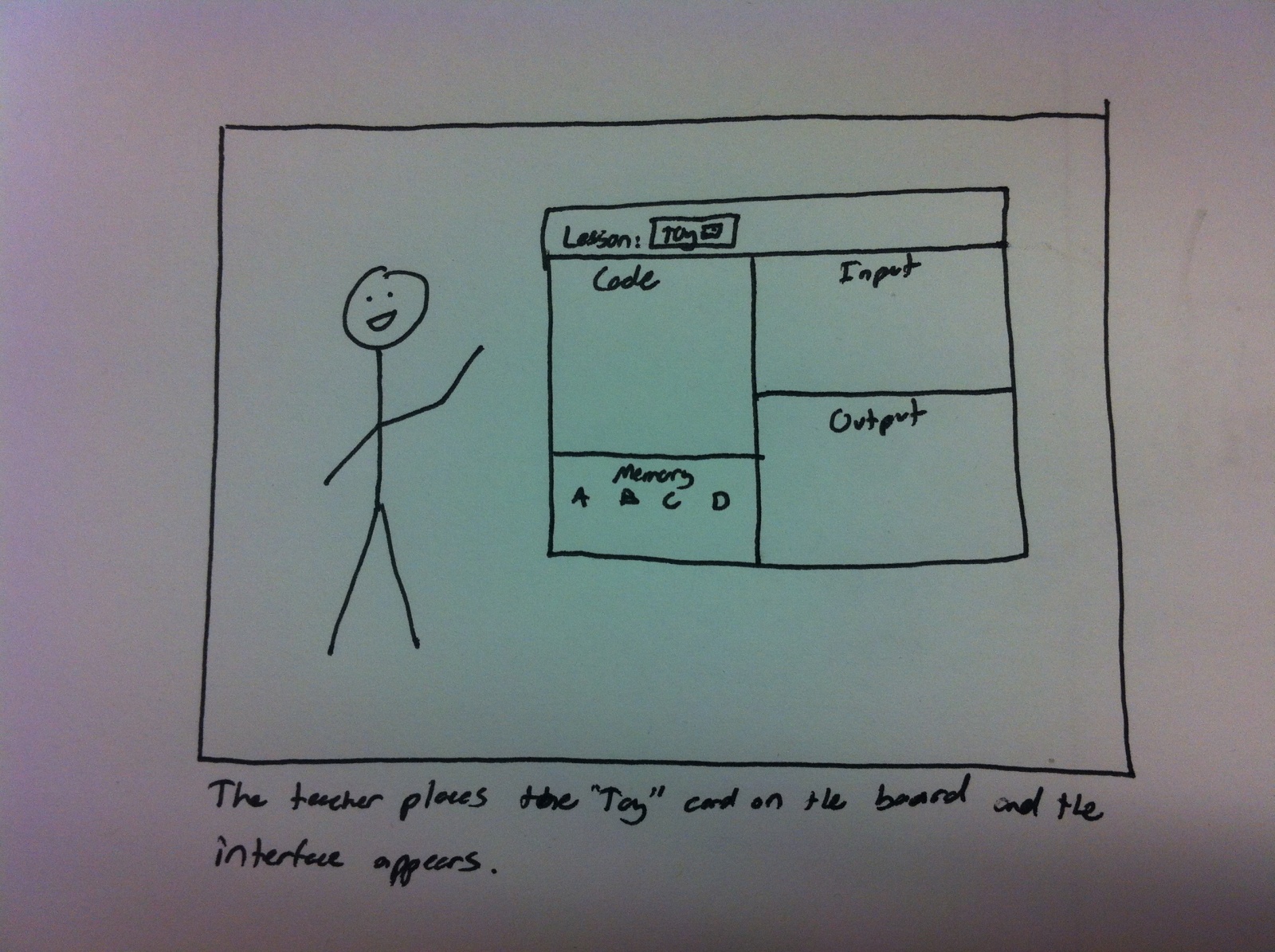

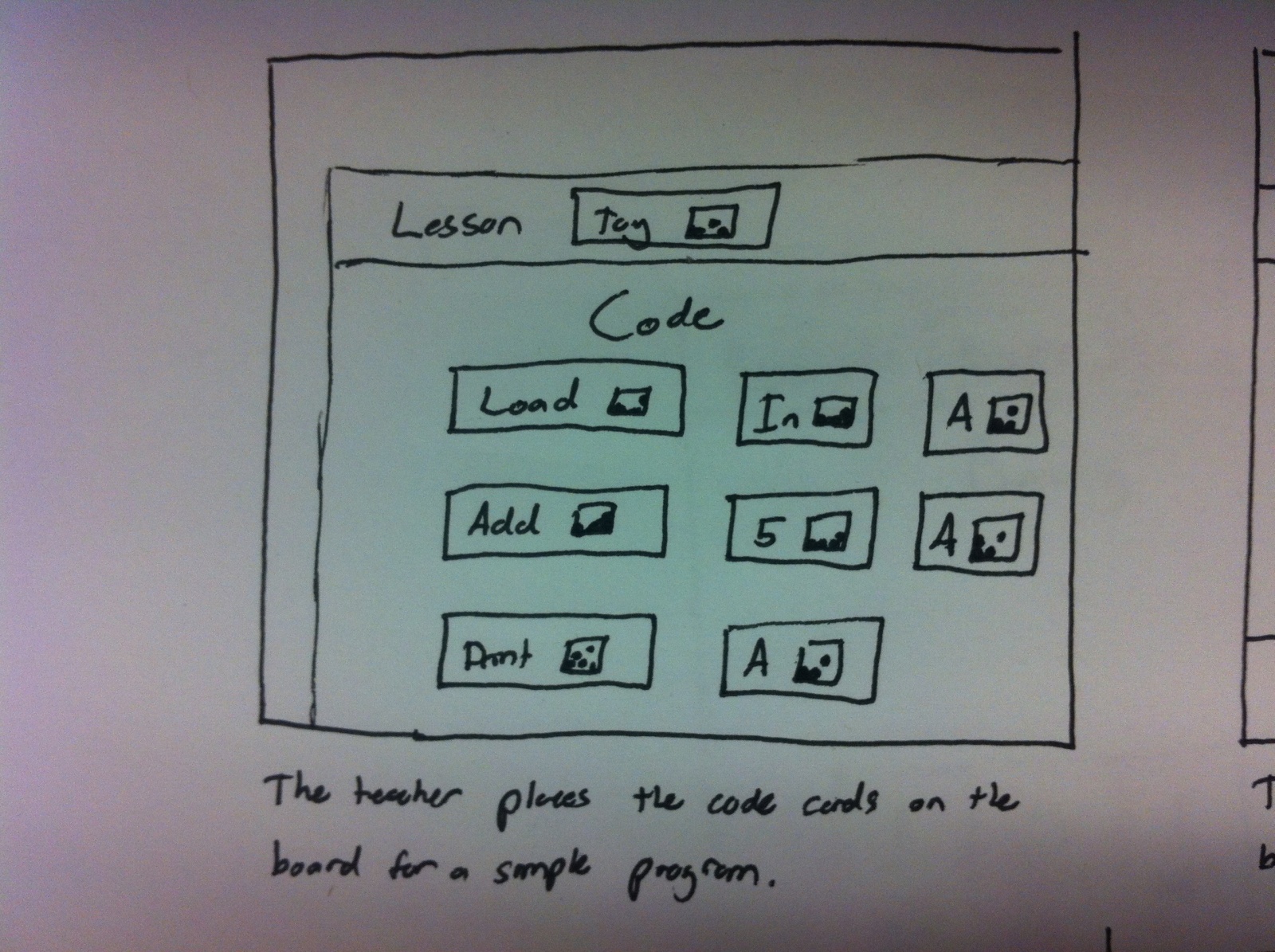

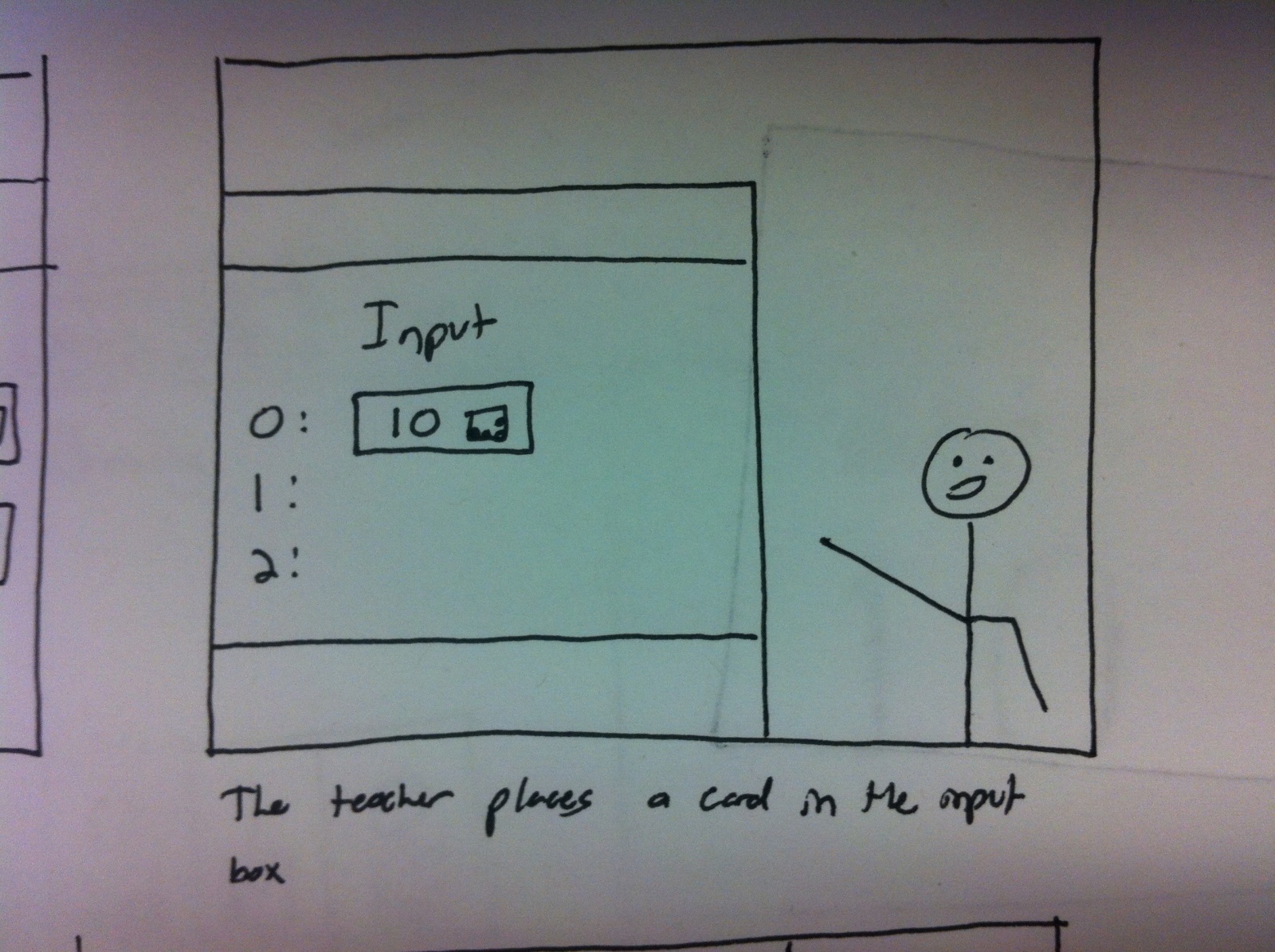

The user can teach and interact with a variety of lessons on computer science topics. When the user places a “lesson” card on the board, the system reacts and projects the interface for that lesson. Then, the user can add physical cards to the board in the relevant areas (e.g., binary values, code, input) and the system will react by showing the execution and output of the program. Unlike any current solution we are aware of, our system allows a teacher or professor to visually demonstrate these computer science topics to an entire class. The interactivity of our system allows the teacher to make quick, visual changes to the program or input to demonstrate how the output will change. We believe that this will be an engaging, fun, and flexible way to teach students important topics in CS.

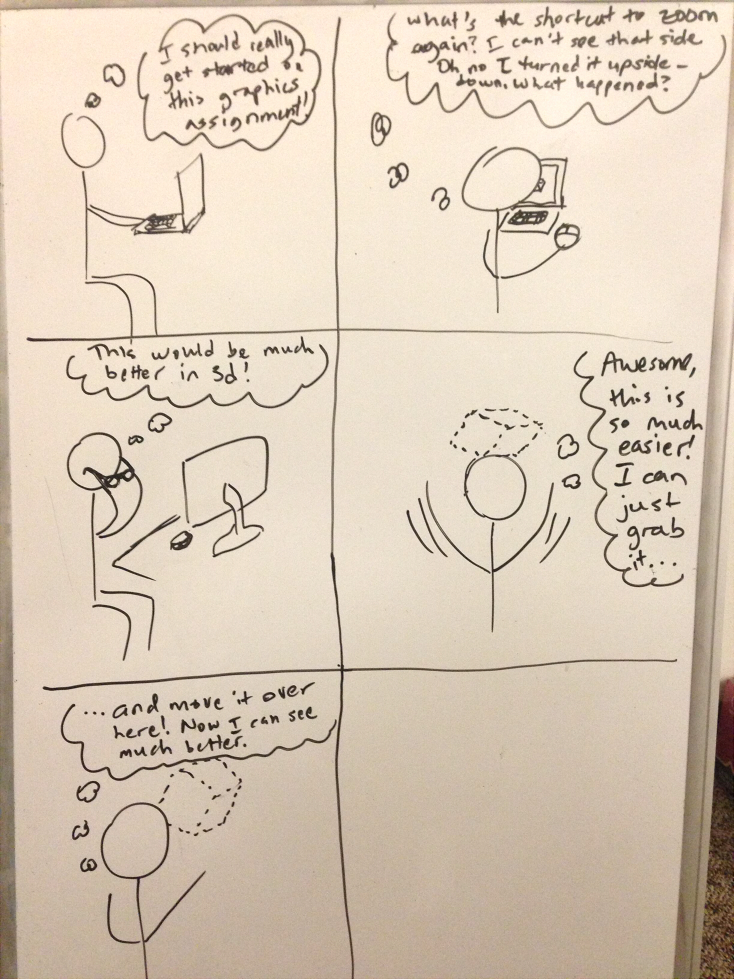

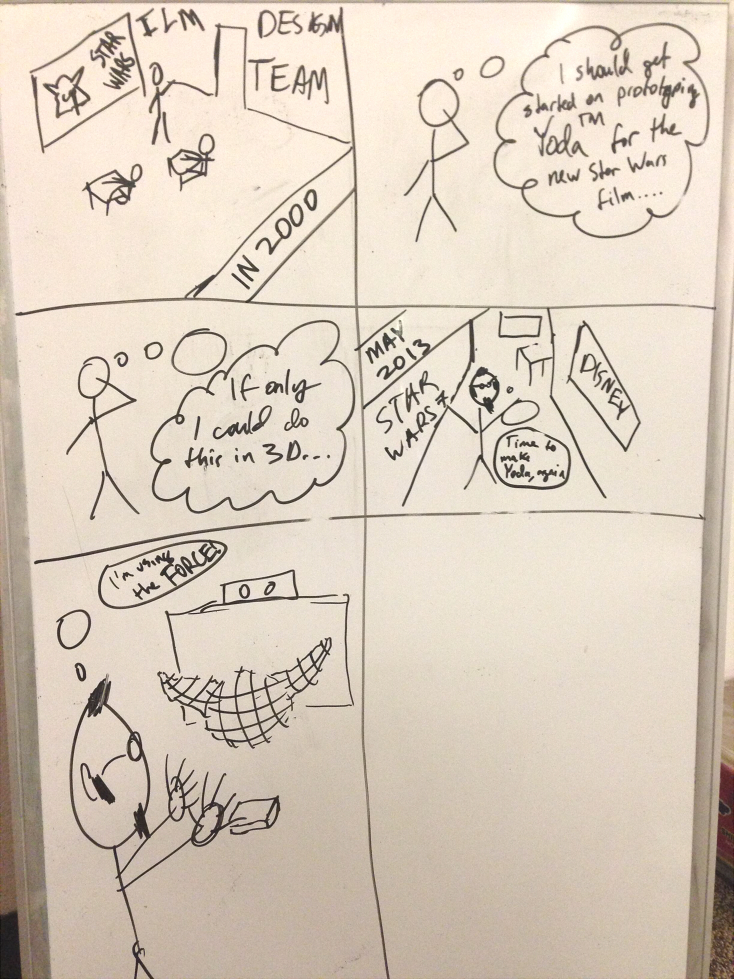

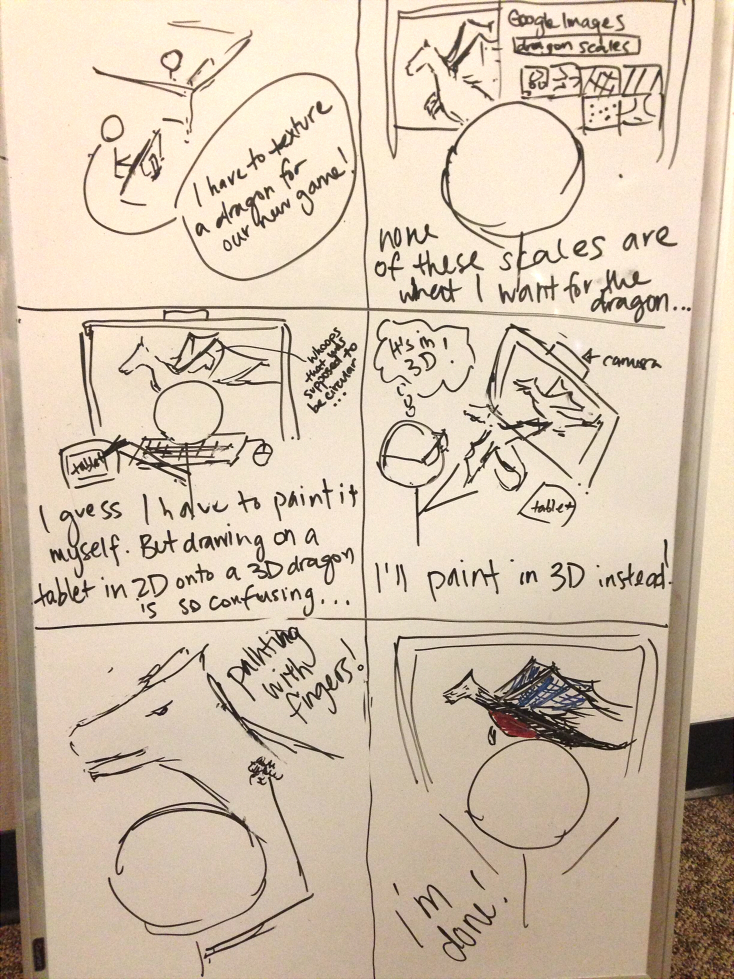

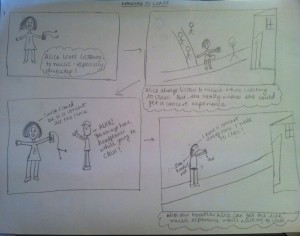

Storyboards

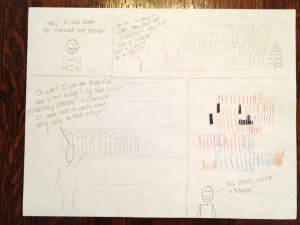

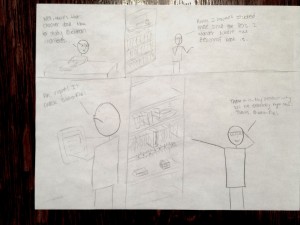

Teaching Number Base

Teach First TOY Program (I/O and intro looping)

Simplified Turtle Graphics

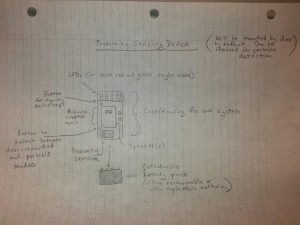

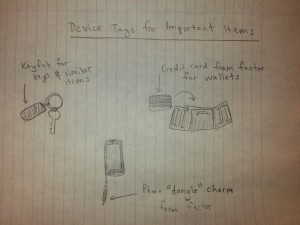

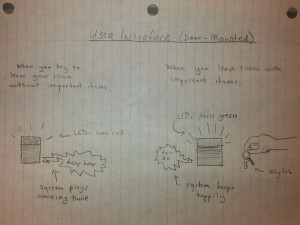

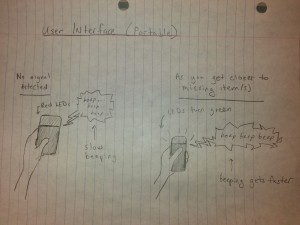

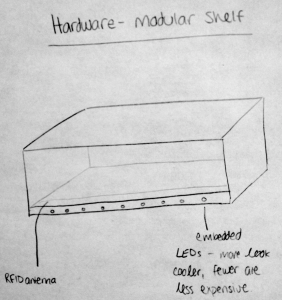

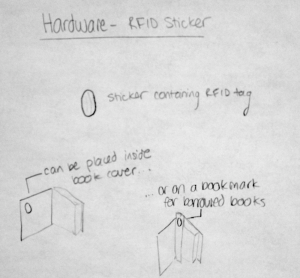

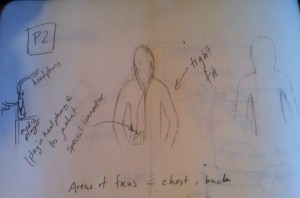

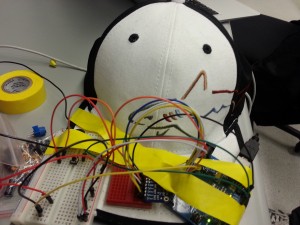

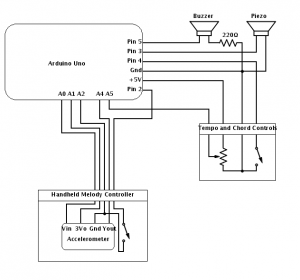

Sketch of the System