Group 17 Members: Evan, Jacob, Joseph, Xin Yang

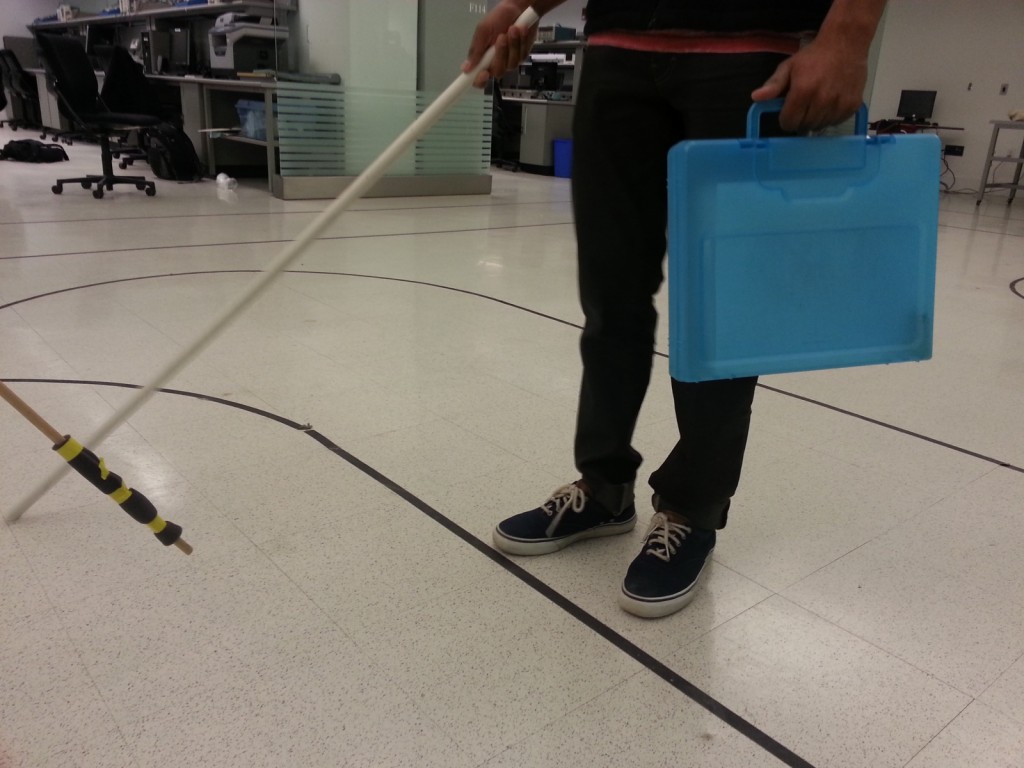

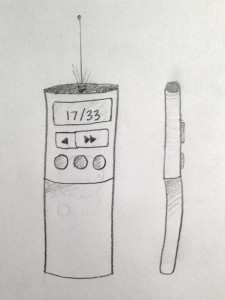

Project Summary: Our prototype is a navigational device for blind users that lets them receive route guidance over Bluetooth and cardinal directions through haptic feedback.

Previous Project Posts

P1 — Brainstorming

P2 — Interviews and Storyboards

P3 — Prototyping!

P4 — User Testing

P5 — Working Prototype!

P6 — Testing with Target Users

Video

Changes to the Prototype

- Added accelerometer: A magnetometer gives an accurate compass heading only when it is held level, and a device mounted on a cane is rarely held level. To get more accurate compass readings, we added an accelerometer that measures the magnetometer's tilt so the heading can be corrected for it. With this change, the compass and navigation modes behave more predictably for the user.
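The tilt correction can be sketched in three steps: estimate roll and pitch from the direction of gravity, rotate the magnetic-field reading back into the horizontal plane, and take the heading from the rotated field. The sketch below is not taken from the prototype's code. It follows the eCompass equations in Freescale (now NXP) application note AN4248 and assumes that both sensors' readings have already been remapped into one frame (x forward along the cane, y to the right, z down). It also ignores hard-iron calibration and magnetic declination. The names `Vec3`, `tiltCompensatedHeading`, `uncompensatedHeading`, and `wrapDegrees` are hypothetical.

```cpp
#include <cmath>

// Minimal sketch of a tilt-compensated compass heading (eCompass equations
// from Freescale/NXP AN4248). Assumption: accelerometer and magnetometer
// readings are already remapped into one right-handed frame:
// x forward along the cane, y to the right, z down.
struct Vec3 {
    double x, y, z;
};

constexpr double kPi = 3.14159265358979323846;

// Wraps an angle in degrees into [0, 360).
double wrapDegrees(double deg) {
    double r = std::fmod(deg, 360.0);
    if (r < 0.0) r += 360.0;
    if (r >= 360.0) r -= 360.0;  // guards against rounding up to exactly 360
    return r;
}

// `down`: direction of gravity in sensor axes. An accelerometer at rest
// reports the opposite vector (+1 g "up"), so pass the negated reading.
// `mag`: magnetometer reading in the same axes (any consistent units).
// Returns the heading in degrees clockwise from magnetic north.
// Assumes the forward (x) axis is tipped less than 90 degrees from level.
double tiltCompensatedHeading(const Vec3& down, const Vec3& mag) {
    // Roll about the forward (x) axis, then pitch about the right (y) axis.
    const double roll = std::atan2(down.y, down.z);
    const double sinR = std::sin(roll), cosR = std::cos(roll);
    const double pitch = std::atan2(-down.x, down.y * sinR + down.z * cosR);
    const double sinP = std::sin(pitch), cosP = std::cos(pitch);

    // Rotate the measured field back into the horizontal plane.
    const double hx = mag.x * cosP + mag.y * sinP * sinR + mag.z * sinP * cosR;
    const double hy = mag.z * sinR - mag.y * cosR;
    return wrapDegrees(std::atan2(hy, hx) * 180.0 / kPi);
}

// Heading that assumes the sensor is level; shown only for comparison.
double uncompensatedHeading(const Vec3& mag) {
    return wrapDegrees(std::atan2(-mag.y, mag.x) * 180.0 / kPi);
}
```

As an illustration, take a field of 20 units toward north and 40 units downward, and a cane that points east but is tipped 30 degrees toward the ground. That cane would read roughly `down = {0.5, 0, 0.866}` and `mag = {20, -20, 34.64}`. Under those assumptions, `uncompensatedHeading(mag)` returns about 45 (northeast), while `tiltCompensatedHeading(down, mag)` returns about 90 (east).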

Evolution

The drastic evolution of our designs taught us a humbling lesson: it is important to truly understand one’s users and their tasks before proposing a solution. Our first design was a “sonar glove” that would use distance detection to help blind people navigate their environments. We guessed that asking blind users to set aside their familiar canes for such a system would require a fair amount of adjustment on their part, but we had hardly begun to understand the actual problems they faced. When we finally got the chance to study cane travel and speak with blind users, we realized that the few advantages sonar could offer paled in comparison to the benefits the standard cane already provided. Once we better understood all the issues that new technologies could address, we pored over our options and eventually decided to focus on enhancing cane travel, both with and without GPS navigation.

Surprisingly, though the form and function of our design changed a great deal in a short time, our overall goal did not. We chose our first design idea because it seemed like a “cool” project, yet once we delved into user interviews and task analysis, we found that we had instead grown attached to the possibility of helping this group of people who so desperately needed better technologies to improve their quality of life. Thus, even when it seemed like we needed to throw out all of our ideas and start over, we remained true to the original intent of the project.

Critical Evaluation

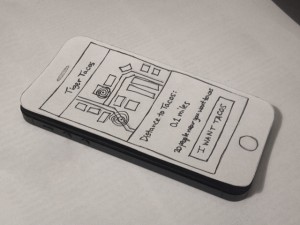

Through the process of prototyping and receiving feedback on our device this semester, we definitely learned a lot about the problem we set out to solve. After talking with blind people in the community, it became clear that there’s room for improvement in blind navigation technology. The people we talked with were especially concerned about affordability, since most existing technology comes in the form of high-priced standalone hardware or software, little to none of which is covered by health insurance. On the other hand, we were surprised to learn that many blind people have taken to using smartphone apps. In its ideal form, our product would interact with the user’s phone and accomplish what neither an app nor a standalone piece of hardware could do by itself. In this sense, we believe our prototype could lead to a real-world, practical product and fill a substantial gap in the current market.

That being said, we have concerns about how accurately the prototype could deliver navigation instructions in its present form, and about whether the current interface between phone and cane could be improved. Because the iPhone and similar devices have built-in accelerometers, magnetometers, and vibration capability, we could feasibly develop a cane that holds the user’s phone instead of building a redundant piece of hardware. This would be more affordable and would provide a more reliable connection than Bluetooth has so far. It would also let the user receive audible feedback in addition to tactile directions, which some users said they would prefer. If we were to continue developing the product, we would explore these alternative designs and form factors to create the most flexible and accessible device for blind users.

Moving Forward

Moving forward, we’d focus on further refining how information is transmitted through the ridges on the cane. Much of our feedback indicates that the cane’s two ridges are inconveniently located, which makes it easy to miss a turn signal. While we feel that ridges or a similar touch-based system can be an effective navigation aid, more work is needed to find an arrangement that is both ergonomic and informative. Reaching this configuration would require further testing with users, since we’d need an accurate picture of how the average blind user holds their cane. We’d need more test subjects to get an accurate cross-section of the blind population, and would likely need to test several different arrangements in parallel.

We’d also work on developing an intuitive iPhone GPS interface that works with our cane, and eventually an API that third-party application and GPS developers could use. These could largely be based on existing GPS apps, but would still require some user testing. Mechanically, we’d need to research further whether the device would work better as an integrated component of a full cane or as an attachment to other cane devices. Business and distribution models would probably figure prominently in this decision, but we’d also need feedback from users to determine what they would prefer. This feedback would likely be gathered much as in our testing so far, but on a larger scale.

Codebase

Libraries Used

• ADXL345 Library, used to communicate with the accelerometer: https://github.com/jenschr/Arduino-libraries/tree/master/ADXL345

• Arduino Servo Library, used to command the servo for the ridges: http://arduino.cc/en/Reference/Servo

• Magnetometer example code, used to communicate with the magnetometer: http://dlnmh9ip6v2uc.cloudfront.net/datasheets/BreakoutBoards/Mag3110_v10.pde

• Arduino Wire Library, used to communicate with the magnetometer: http://arduino.cc/de/Reference/Wire

• Bluetooth Shield example code, used to communicate with the Bluetooth shield: http://www.seeedstudio.com/wiki/Bluetooth_Shield

• Arduino SoftwareSerial Library, used to communicate with the Bluetooth shield: http://arduino.cc/en/Reference/SoftwareSerial

• Tilt compensation example code, used in the tilt-compensation calculations for the compass: https://www.loveelectronics.co.uk/Tutorials/13/tilt-compensated-compass-arduino-tutorial

• Arduino Math Library, used in the tilt-compensation calculations for the compass: http://arduino.cc/en/Math/H?from=Reference.Mathh

Poster Material

Dropbox Link