Group Name: The Elite Four

Members:

Clay Whetung (cwhetung@)

Jae Young Lee (jyltwo@)

Jeff Snyder (jasnyder@)

Michael Newman (menewman@)

Brainstorming Ideas:

1. Create an interface for Magic: The Gathering or a similar game that maintains an internal representation of the game, enforces rules, and provides graphical feedback on game state to users.

2. Use a Kinect to train users in martial arts, dance, yoga, tai chi, etc. at a low cost by tracking the user’s skeleton and comparing their forms to those of expert users. The application can give them feedback on exactly where they’re going wrong.

3. Use pitch detection and score following to help musicians/singers know which notes they play out of tune while practicing and give them graphical feedback including direction and pitch distance.

4. An automated metronome with score following for practicing: when a musician plays a section correctly, the metronome automatically increases the tempo. If they play it incorrectly, the metronome shows them their mistakes and slows down.

5. A transparent device that can be overlaid on computer monitors (or televisions) of various sizes and turns them into a pseudo-tablet, so that artists using programs such as Photoshop get immediate visual feedback.

6. A drum pad for percussion practice in low-noise situations that uses a practice pad with piezo sensors inside to trigger drum sounds through headphones. The device would be similar to a practice mute for brass instruments and could be customized for varying sensitivities and with a range of sounds.

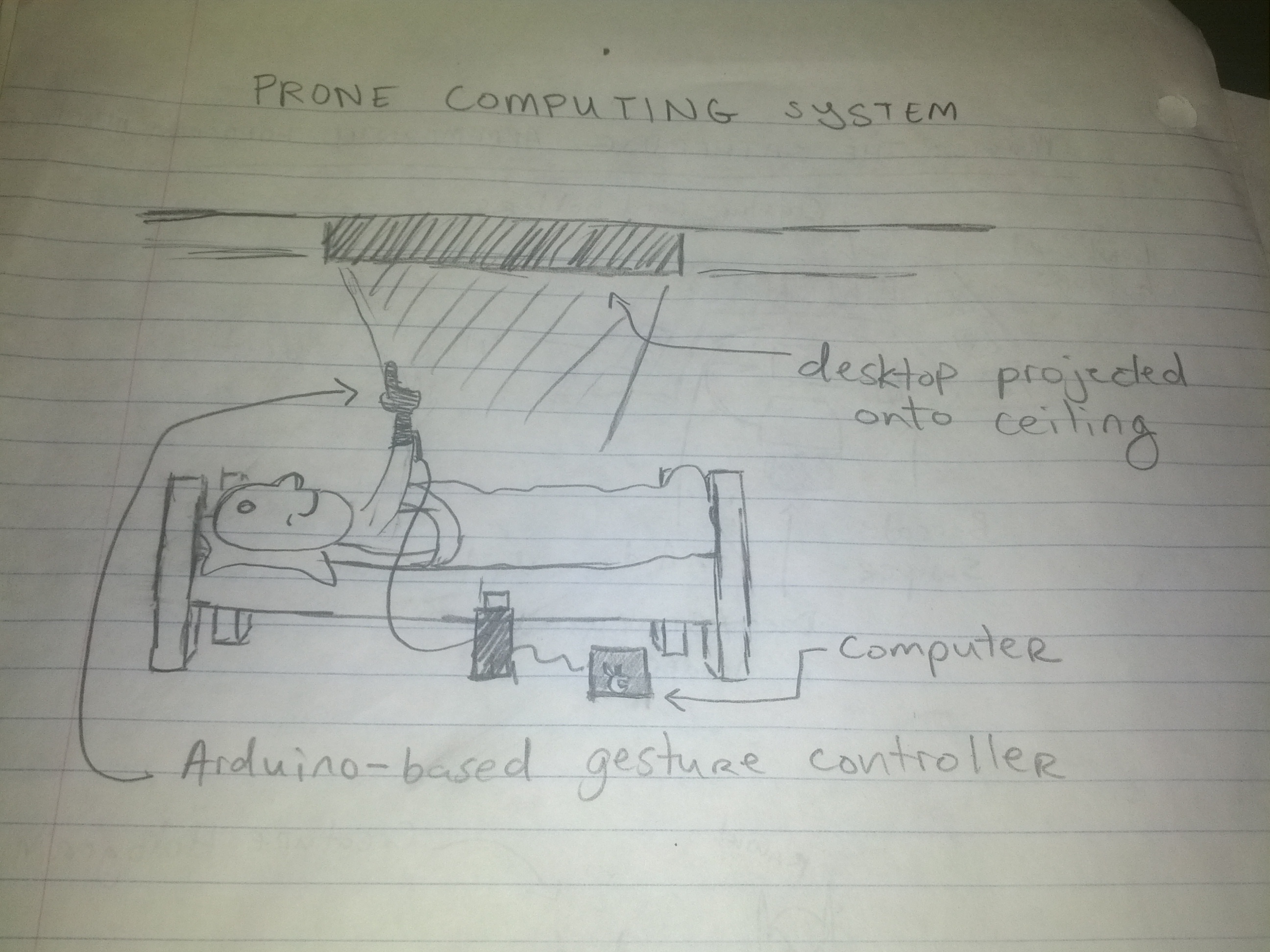

7. Interface to use basic functions of a computer while prone (e.g., in bed). The device would project the interface onto the ceiling or wall and use gestural control via Kinect or Wiimote. This avoids the common problems of using a laptop in bed: neck strain, hot surfaces, sharp corners, no surface for a mouse, etc.

8. A silent alarm clock that raises your shade for those who prefer to wake up with natural light, but want to do so later than the sun rises.

9. Voice recognition system to open doors. Allows for secure access by multiple people.

10. Make a self-control type device using the Kinect that locks users out of banned applications (e.g., World of Warcraft) or specific websites until they perform some physical task, for example, a specific number of push-ups or a yoga routine.

11. A frisbee-throwing robot to help users practice Ultimate Frisbee skills. This allows users to practice catching when another human isn’t available, and it can throw consistently at specific speeds, heights, etc.

12. A system that actuates a laser or other toy to exercise your cat or another pet in your absence.

13. A similar device could use a treadmill to exercise your dog or other larger pet. For motivation, the animal could receive a treat for running for a certain time or distance.

14. A voice-controlled kitchen helper that can automatically measure and dispense frequently-used ingredients.

15. A system that listens on a certain phone/Google Voice number for text messages and calls and lets you remotely turn on arbitrary electronic appliances by connecting them to mains power, for example, to start coffee brewing 30 minutes before you arrive home.

16. A device that aids disabled users in basic computer use by automatically scrolling based on the position of the user’s eyes and by recognizing eye gestures for basic commands.

17. An Arduino-based system that makes the entire bathroom process hands-free (turning on the sink, dispensing soap, opening the door, etc.), since people don’t want to get their hands dirty again after washing them.

18. Go-to-sleep button: press it when you want to sleep, and it does everything you should do before bed (e.g., hibernates the computer, turns the lights off, sets the alarm clock). Then, when your alarm goes off, everything turns back on!

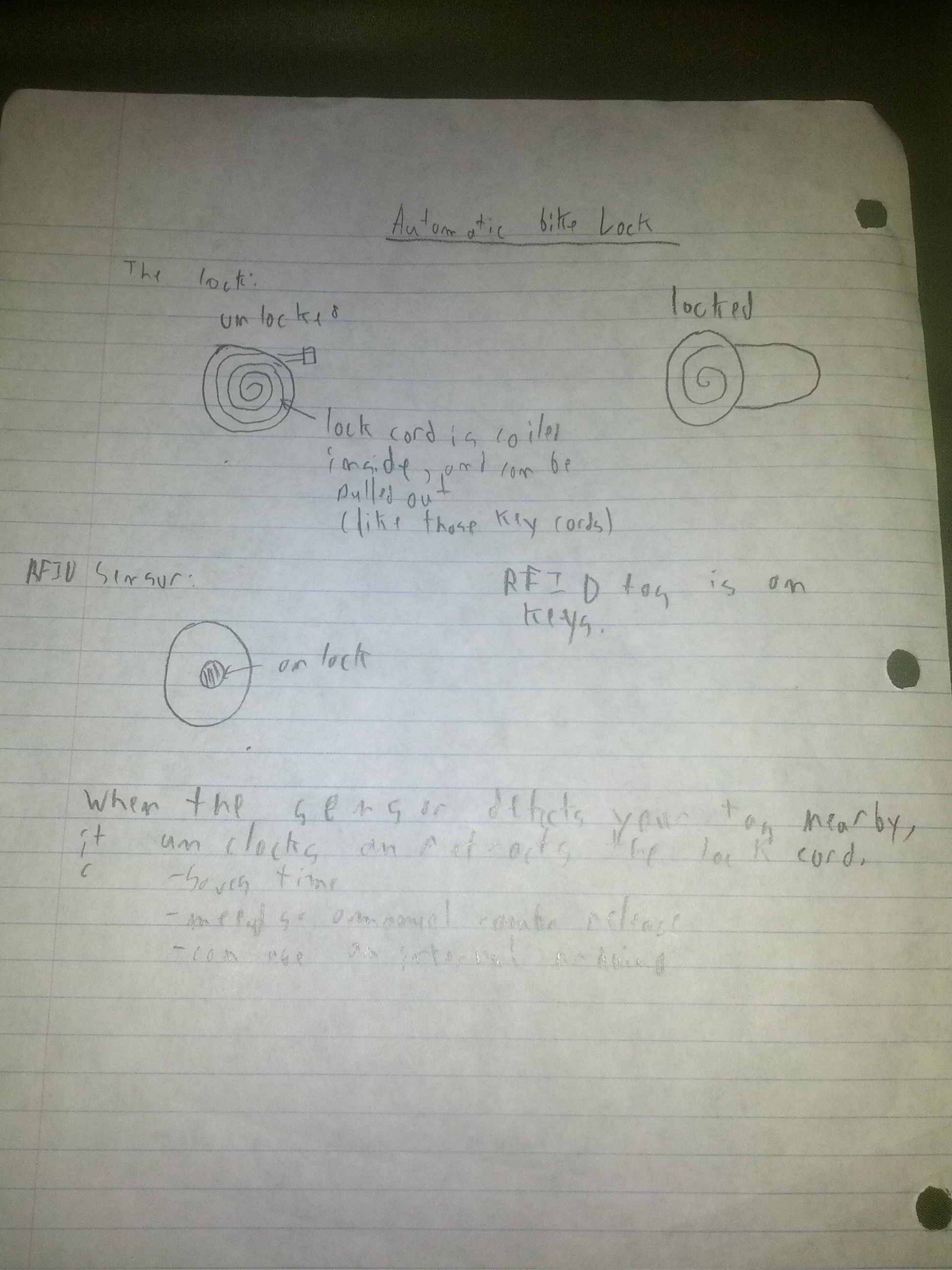

19. Automatic bike lock — when you put the kickstand down, the bike automatically locks itself. Alternatively, a coiled lock system that automatically retracts the lock when you get close to it.

20. Mixology robot: When you go up to it and place an order verbally, it mixes the drink for you (makes much more complicated drinks for parties without hiring a bartender).

21. Heart rate sensor that controls the speed of a treadmill and plays ambient music with appropriate (and motivational) BPM.

22. Proximity sensors that can be attached to objects, so if you lose one of those objects, you can use the range sensor to find it.

23. Automatic transcription of music — you sing something and the device outputs the pitches and rhythms that you sang.

24. An interface that personalizes your entire house — scan your fingerprint and it does things like set the temperature, turn on certain lights in the house, etc.

25. System that uses RFID tags to track small informal transactions between friends so that money is kept even (e.g., Joe buys Frank a coffee and it gets logged, so maybe next time Frank will know to pay for Joe).

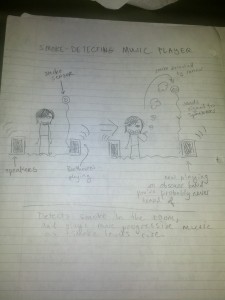

26. Smoke-controlled music player that plays progressively more progressive/alternative music as the amount of smoke in the room increases.

27. A weather sensor that detects how bright or dry it is outside and dispenses sunscreen if it is very bright or lotion if it is very dry.

28. Alarm clock that syncs with your Google Calendar and wakes you up before your first appointment of the day, even if you forget to manually set an alarm. Also detects if you are awake already and won’t wake you up.

29. Freestyle rapping/poetry companion device that automatically performs voice recognition (very quickly) and suggests rhymes and/or insults based on previous words.

30. Musical routing system that automatically routes instrument inputs to amplifiers based on a performance schedule without the need to plug/unplug.

31. Silent band practice system with individual headphone mixes.

32. Party robot – allows attendees to vote by text on which of a few songs to play next, displays live tallies and automatically beat-matches and crossfades between songs to ensure that there are never silent moments.

33. Biometric sensor that detects which finger is being held to the sensor and opens certain applications and/or performs particular actions associated with each finger (e.g., different workspaces, a “gaming” finger, a multimedia finger…)

34. Alarm clock that is somehow synced with your sleep cycle (through movement, for example) in order to wake you up at the end of a sleep cycle before a certain time.

35. Fingerprint system that protects food/resources from roommates. Can also be used to “book” shared appliances like the oven or washing machine.

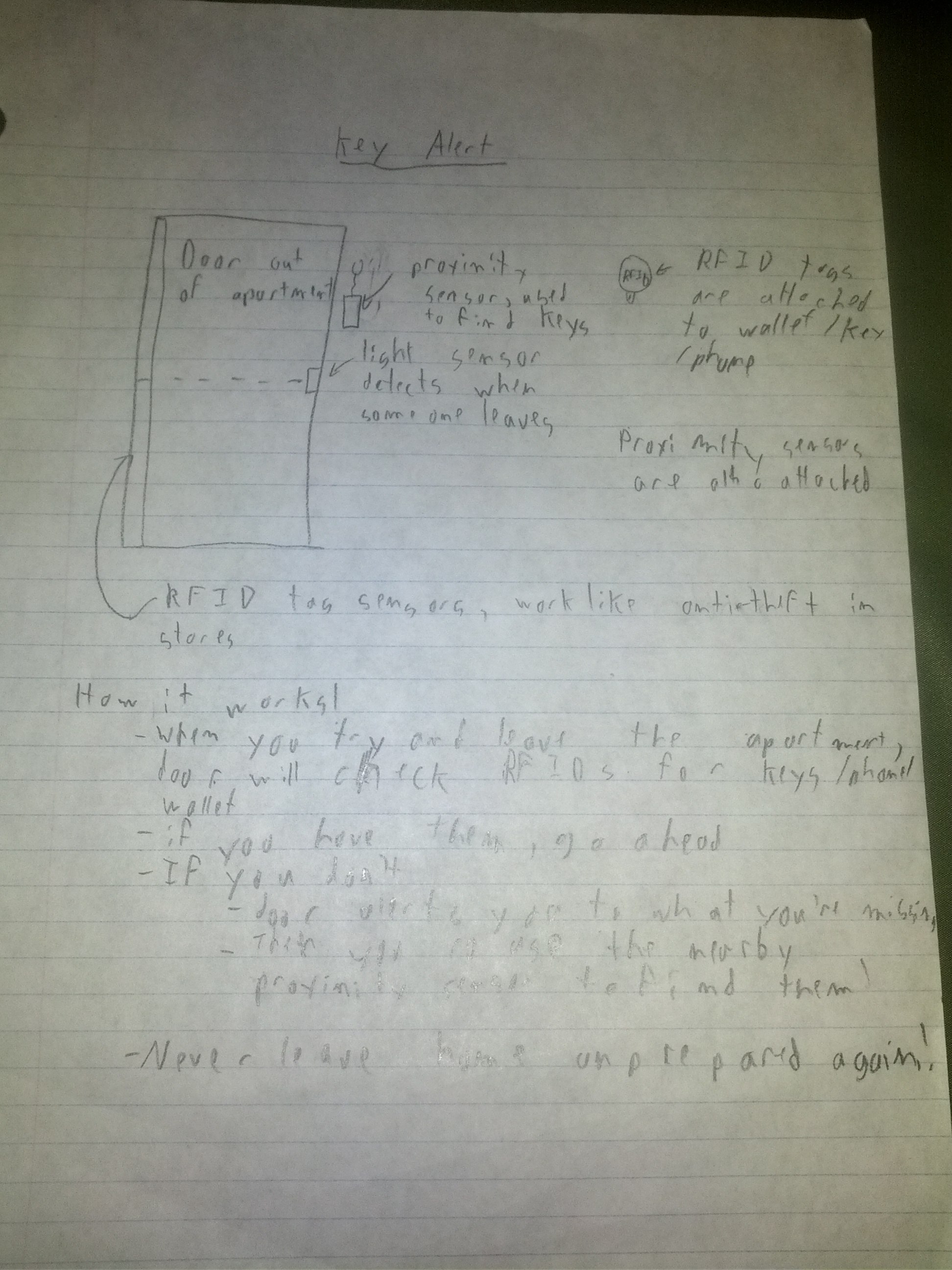

36. A credit-card or key fob form-factor sensor system that detects if you try to leave your room without your keys, wallet, or phone. If you forget them, it beeps and/or flashes a light to alert you.

37. A better way to flip through channels: it could use a Kinect with certain gestures, a trackpad for scrolling, or blink detection. This would be much easier than pressing the channel up/down buttons on a remote.

38. Chair that detects your posture (e.g., how much you’re slouching, where your shoulders are, how much of your back is in contact with your chair) and automatically adjusts itself (with motorized cushions) to accommodate you.

39. Use a Kinect and projector to make an interactive desktop (like Jarvis from Iron Man).

40. Fingerprint scanning bike lock.

41. Shoes that inflate (or let in more air, like Nike Air pockets) when you’re in the air (e.g., when you jump) to cushion your landing.

42. Smartphone keyboard that adjusts based on how you’re holding it — if you’re holding it with one hand, for example, then the keyboard will automatically adjust to make it easier to type.

43. Television device that can sense when a laptop or other device with a screen is in front of it, then gives you the option of projecting that screen onto the TV, almost like a wireless external monitor.

44. External monitors that have a sense of physical location relative to other monitors that they are hooked up to — so if you have one monitor on the left and one on the right, you can swap their position and the mouse would still move correctly from the left screen to the right screen.

45. A device that enables any screen to be projected into a bigger size while maintaining any special properties it has (such as a touch screen) — a smartphone screen could be projected onto a table and essentially turned into a tabletop tablet.

46. A device in your shoes that measures how straight your steps are and sends the results to your phone. This might be helpful for detecting when you’ve had too much to drink.

47. A glove that can measure body temperature and control the room temperature accordingly. Additionally, gestures can be used to turn on speakers, turn lights off, etc.

48. Glasses/goggles with an infrared sensor that provide a heads-up display identifying living (and other warm) things in your field of view, classifying them based on their general shape and size (could be useful for detecting wildlife or stalkers).

49. Toilet that keeps track of the size/consistency/color of your stools, rating them on the Bristol stool scale and letting you know of potential health problems.

50. Either sensing gloves or a flat keyboard without a display that can be used to perform typing motions in the absence of a laptop, display, or proper keyboard — useful for situations where it’s not feasible to have a laptop out, or (for example) in a class where laptops are banned.

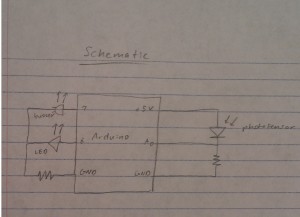

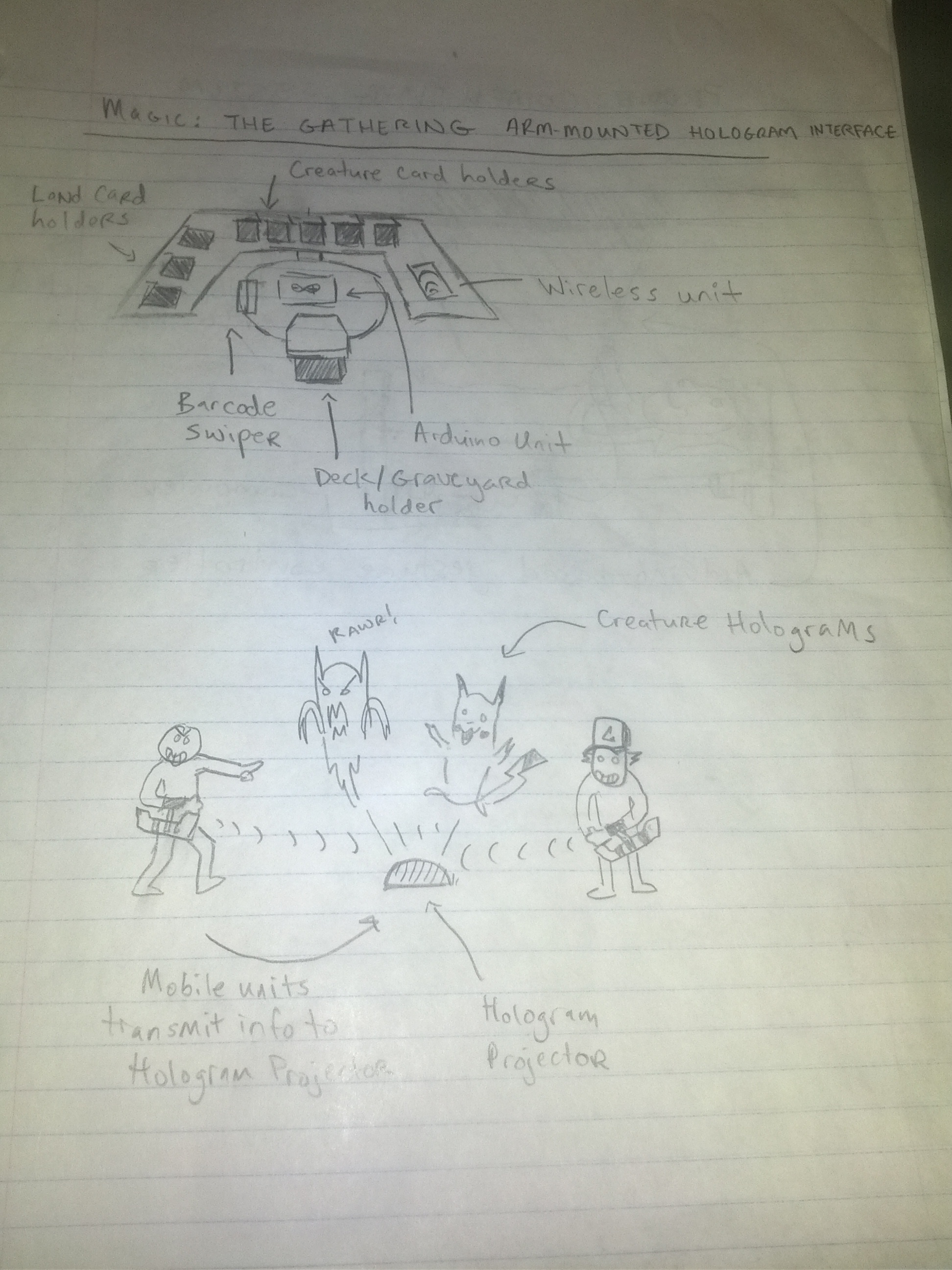

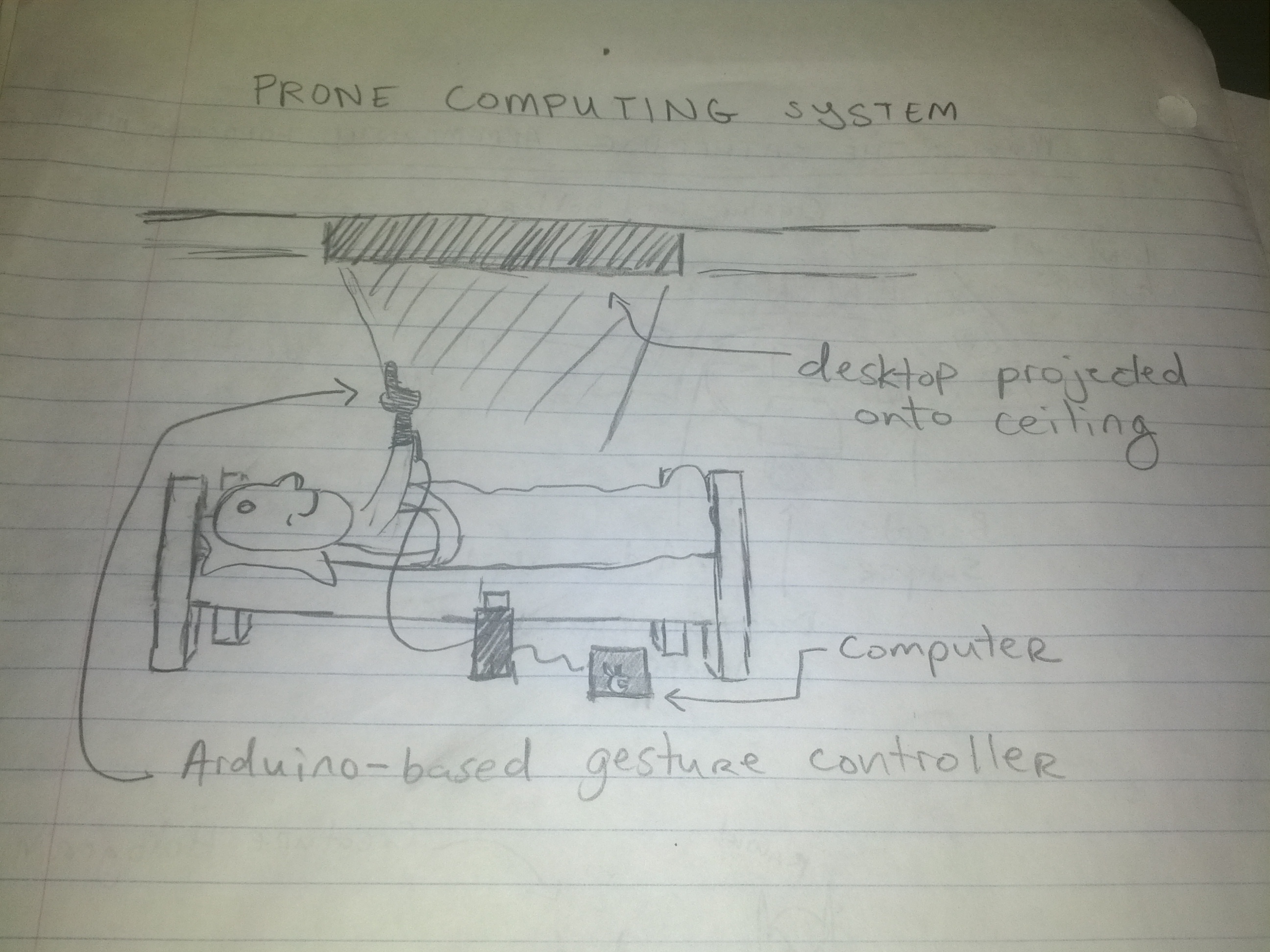

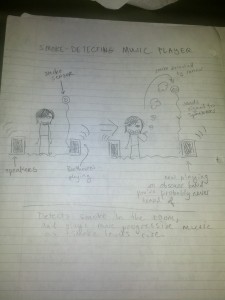

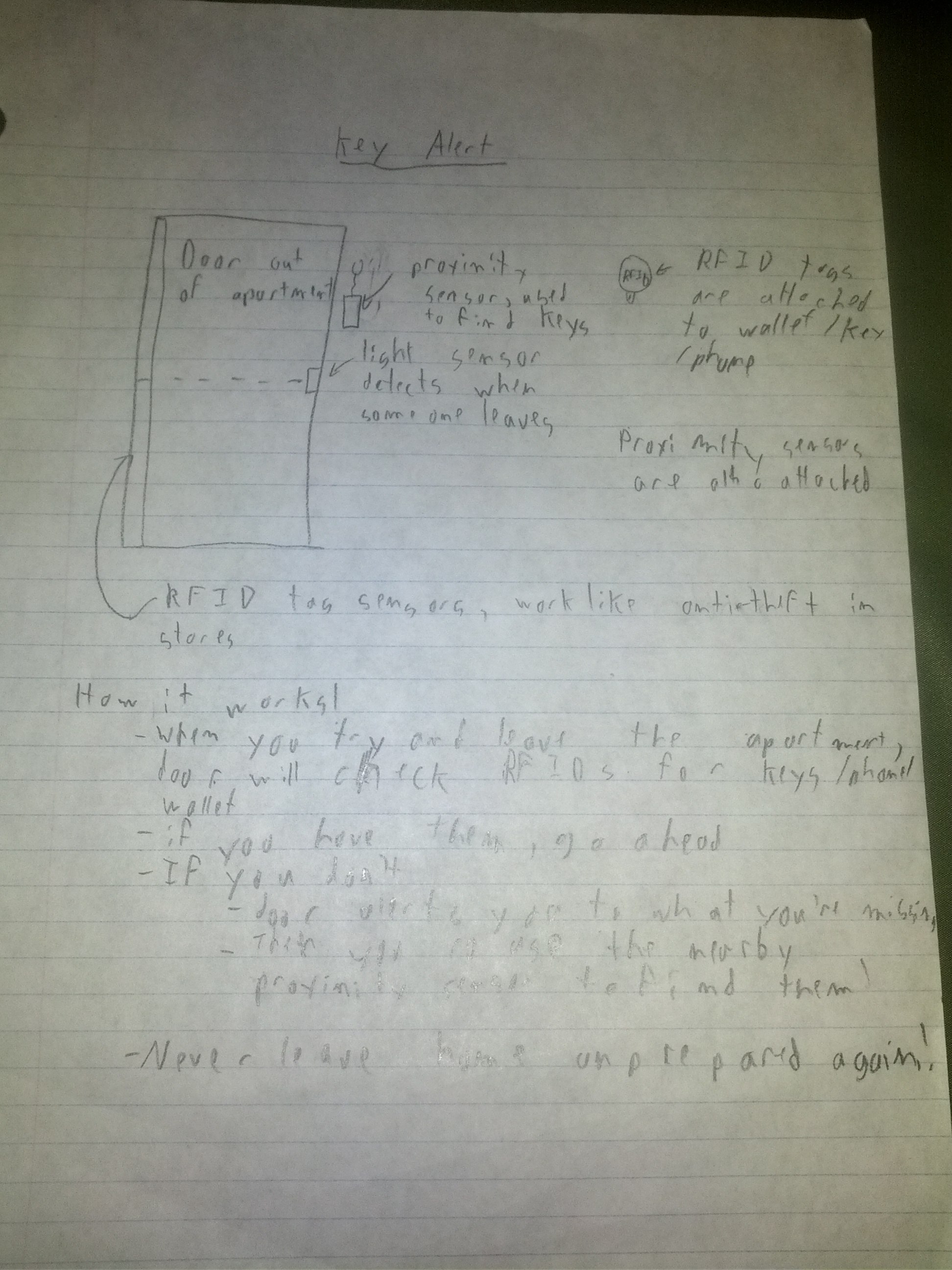

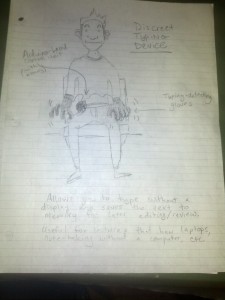

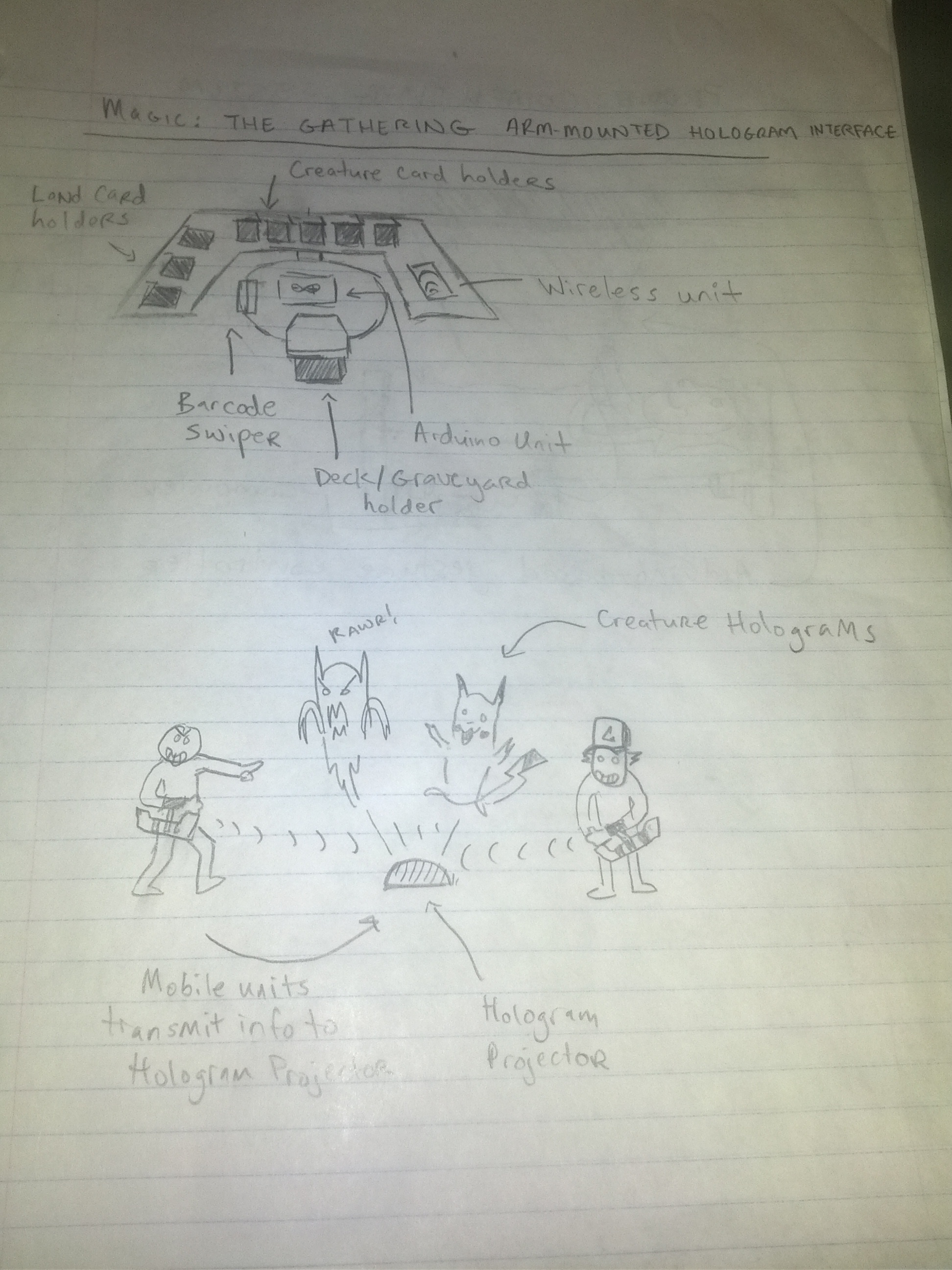

Brainstorming Sketches:

1) Interface for Magic: The Gathering or a similar game that maintains an internal representation of the game, enforces rules, and provides graphical feedback on game state to users.

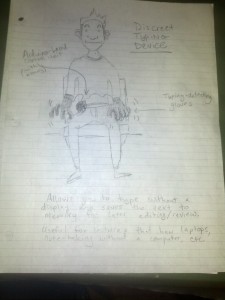

7) Interface to use basic functions of a computer while prone.

10) Make a self-control type device using the Kinect that locks users out of banned applications or specific websites until they perform some physical task.

19) Automatic bike lock: a coiled lock system that automatically retracts the lock when you get close to it.

26) Smoke-controlled music player.

32) Party robot – allows attendees to vote by text on which of a few songs to play next.

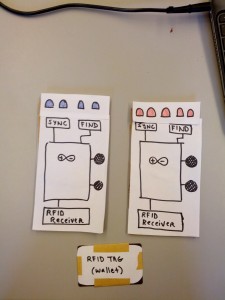

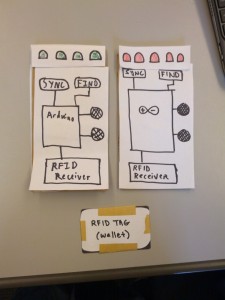

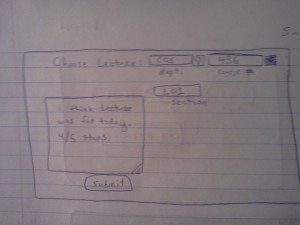

36) A credit-card or key fob form-factor sensor system that detects if you try to leave your room without your keys, wallet, or phone.

50) Sensing gloves that can be used to perform typing motions in the absence of a laptop, display, or proper keyboard.

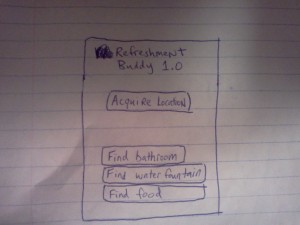

Our idea:

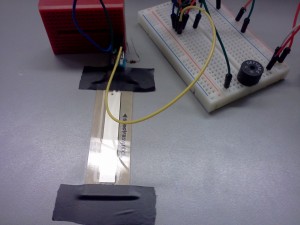

We chose to work on the key alert system, which will alert users if they try to leave their house/room without their keys/wallet/phone. We chose this idea because it solves a common problem: leaving home unprepared and getting locked out, or worse. The proximity sensor will also allow users to find their lost necessities with ease. Currently, this kind of locating is usually possible only for cell phones, through a desktop or web app that makes the phone ring or reports its GPS coordinates. With our system, however, all a user needs to do is grab the proximity finder hanging nearby and walk through her house until she finds the missing item(s). This project is somewhat open-ended; for example, the proximity technology could be carried outside of one’s home and used to find lost objects anywhere. This project also lets us explore designing user interfaces that make minimal use of traditional interface elements.

Target User Group:

This project is aimed primarily at people who have self-locking doors — a type of lock extremely common on college campuses. The primary issue with these doors is that if you leave your room without your key, you will promptly be locked out, often requiring a call to security and having to wait for their arrival. This is a massive inconvenience that costs the user time, comfort, and — in some cases — money. With our system, students (or even the university itself) would be able to install a very simple system and feel secure in their preparedness. This user group can quite often be disorganized, hurried, and stressed; these issues can compound to make remembering the little things, such as one’s keys, quite difficult.

Problem Description & Context:

The high-level goal of this project is to let users feel confident that they haven’t forgotten anything when they leave the house; in particular, we are addressing the problem of forgetful students getting locked out by self-locking doors. A technical solution could improve the situation by alerting students when they try to leave their room without their keys. This is superior to a non-technical solution, such as simply leaving a reminder note, because the automated system will never “forget” to alert the user, whereas a student might forget to leave or read a note. Thus, it is important that the system be as automatic and simple as possible; after all, the goal of this project is to make users’ lives easier, not more difficult. In addition, we need to consider that the system will be installed in dorms or other buildings that the user does not actually own. As such, our solution must not require a destructive installation; rather, it should be simple to install and remove. Since our target user group lives in a busy, high-stress environment, we do not want the solution to require much maintenance on their part; instead, it should be more of an “install-once-and-forget-about-it” solution. A related problem has been addressed by beeper systems that help users find their keys, but our goal is not just to help users keep track of their keys; it is to protect them from absentmindedness in general.

Platform:

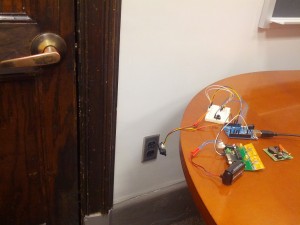

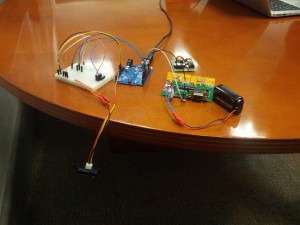

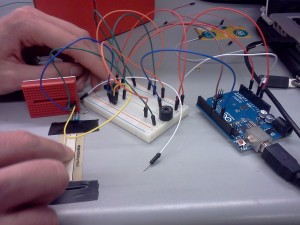

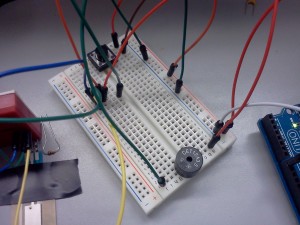

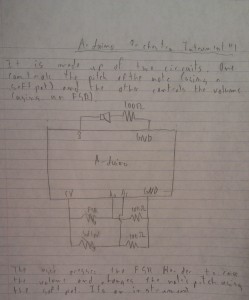

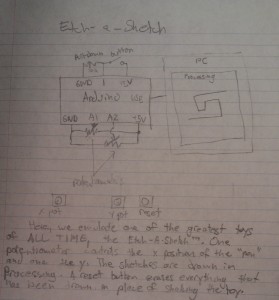

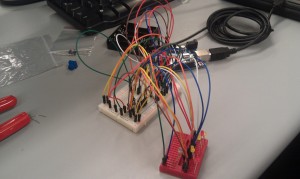

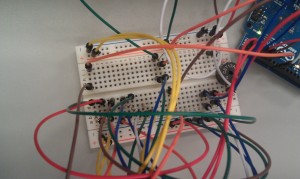

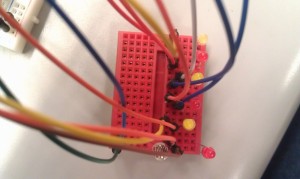

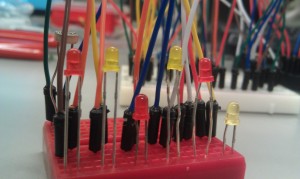

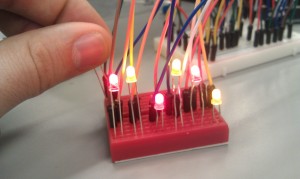

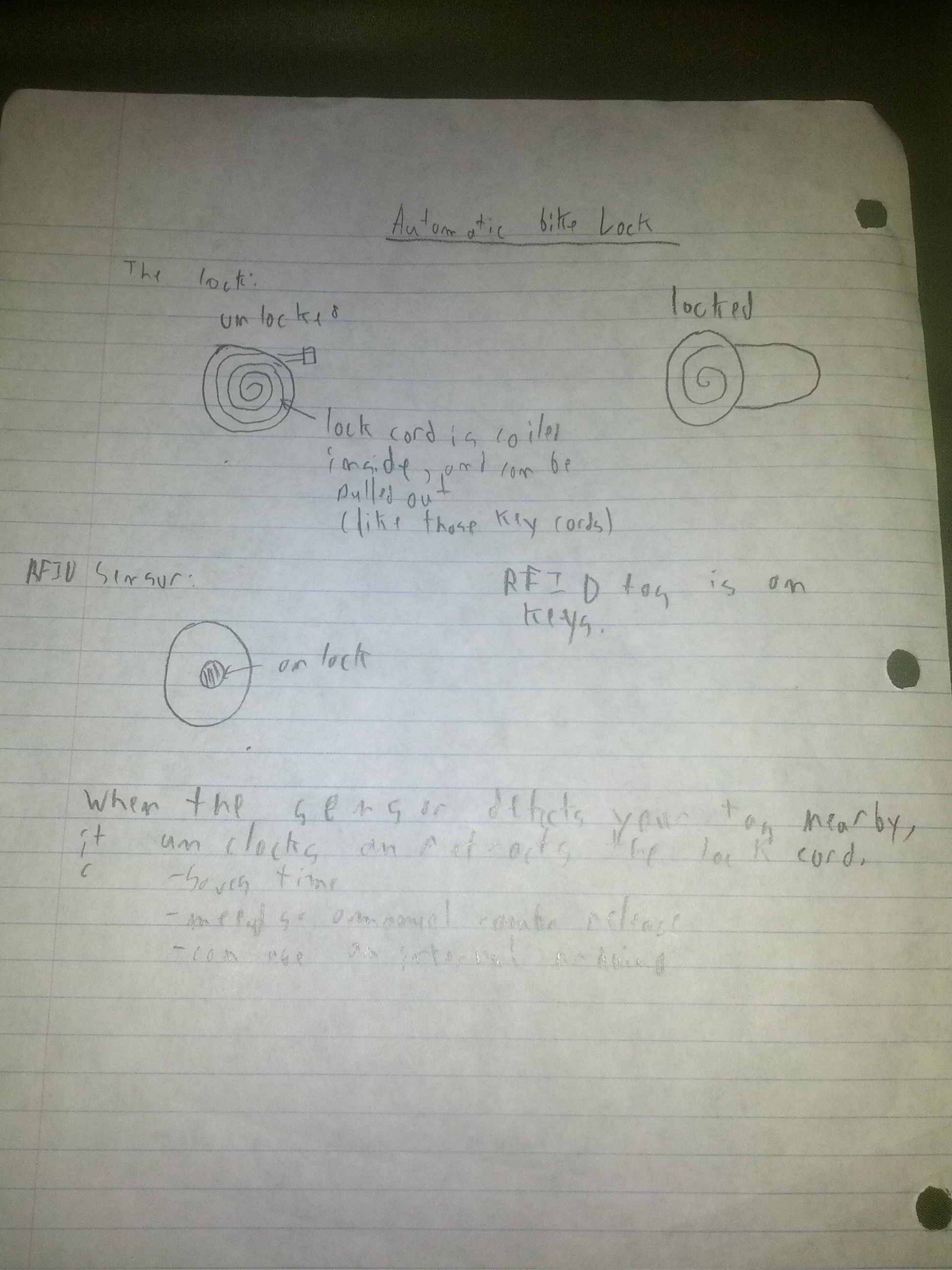

We intend to build our device around the Arduino platform. This platform seems appropriate for an automated system that is meant to be small, simple, and generally unobtrusive. Relying on a desktop/laptop/mobile app would make less sense, since such devices would be useless whenever powered down or (in the case of mobile devices) lost or left elsewhere. For sensors, RFID tags or similar devices may be suitable for short-range proximity detection. For example, the system could detect when the door is opened but an RFID tag (associated with keys/wallet) is not present.

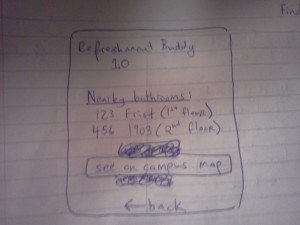

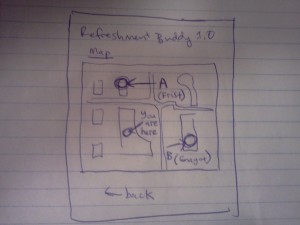

More Sketches: