Group # 21

Group Members & Contributions

- Shubhro Saha — Blog Post, Interviews

- Andrew Cheong — Blog Post, Interviews

- Miles Yucht — Blog Post

- David Dohan — Blog Post

Problem & Solution Overview

The problem we aim to solve is computer user authentication: verifying credentials when logging into a database, a web application, or a touch-screen cash register, for example. The prevailing solution is to prompt users for a username/password combination. That solution, while dominant, assumes keyboard-based human-machine interaction. As interfaces gradually migrate to touch-screen and voice-based interaction, the keyboard is becoming less important as an input device. From an accessibility point of view, some individuals find learning to type on a keyboard extremely difficult. Security-wise, passwords can be cracked by brute-force input methods. Our solution is to authenticate by means of a combination of hand gestures performed in a “black box” and detected by a Leap Motion sensor. This solution is more difficult to attack by algorithmic methods because it requires a human hand, and it is more natural, and potentially faster, than typing on a keyboard.

Description of Contextual Inquiry Users

Our target user group is composed of individuals who are required to sign in and out of accounts on a regular basis. Usually, signing in requires the user to type a username or swipe a card to identify themselves, and then to type a password or provide a PIN. We interviewed three such users: a student, a cashier, and a librarian. The student uses her laptop for many purposes, including academic work and socializing. Being at a university, she tends to carry her laptop around in public. While she expressed irritation with complex passwords for internet accounts that she visits less frequently, she appears to be content with her current passwords. She expressed concern about the public acceptability of carrying a box for verification or of making hand gestures at a computer. This suggests that HandShake might be most appropriate for stationary computers. The cashier uses an ID card to swipe into a cash register. Her primary concern was the security of the register: only authorized people should be able to access it. She also expressed concern about keeping track of her ID card, since it is so valuable for her work. HandShake removes the need to keep a physical key or card while maintaining security by identifying one’s hand as well as the gestures. The librarian must access the library network frequently when checking books in and out and when adding new resources to the library, and must enter long passwords to authenticate each time. HandShake removes the need to memorize long passwords and eases the librarian’s tasks.

Contextual Inquiry Interview Descriptions

Procedure. In pairs, we sought out interviewees in their “natural habitats”. We asked each of them the following questions to understand their experiences with, and openness to, alternative authentication schemes:

- On a day-to-day basis, how often do you log in to a computer system?

- Do you find keyboard logins annoying?

- Do you find it annoying that passwords require so many special characters?

- Would you consider an alternative approach to logging in?

- In an ideal world, how would YOU like to log in to such a system?

- Would you appreciate walking up to the computer system and having it log you in automatically?

- (Introduce our product as a derivative of that idea.)

- Do you see problems in using our product?

- Would you feel comfortable using the product?

- Would you find a handshake easier to remember than a text password?

Common themes. Most of our interviewees found current text passwords frustrating when they must contain a set number of letters and symbols. All of them value speed of authentication, and all were willing to consider alternative methods like HandShake. However, a common concern was the uniqueness of the handshakes generated.

Student. The student most often signs in when she opens her laptop and when she visits internet accounts such as Facebook. She estimates that she signs into her laptop approximately three times a day and into a total of three different internet accounts. When asked about the current username/password approach and the complexity of special characters, numbers, and mixed case, she said that it is annoying, especially for accounts that she uses less frequently and whose passwords she often forgets. She also explained that she would be open and willing to try a simpler technique for signing into an account. When we described the hand-gesture approach, she initially expressed concern about how unusual it would be to make gestures at one’s computer, but was reassured that the gestures would be made inside a box, which provides more security and looks less out of place. While portability and on-the-go use of HandShake would be a problem for a student, she believes it could be very appropriate for stationary desktop computers such as home computers.

Cashier. She has her own ID card that she swipes to sign into her cash register. She does so at least three times a day during her 8-hour daily shift. Her manager gave her permission to access that register and no other registers in Frist. She feels “50-50” about the responsibility of having to carry a card. While she understands the security protocol, she sometimes worries about losing the card and being “written up” for a replacement. When asked about her openness to alternative authentication schemes, she gave a positive response. She was OK with gesturing to a cash register to authenticate, and liked the idea of HandShake, as long as the system recognizes each individual’s hand reliably. Reliability was the dominant theme of her answers. When asked about other concerns regarding HandShake, she said she had none and that she would find hand gestures easier.

Librarian. We spoke to a biological sciences librarian. She purchases science materials and speaks to students one-on-one, primarily to support research and learning. In this work she often has to log in to resources, although anything that the library owns or the university subscribes to is authenticated automatically when accessed through the Princeton University network. She finds longer passwords annoying because they are harder to remember. She would definitely consider alternative methods of authentication. Anything not requiring numbers or symbols is great; she loves using a phrase in her text passwords. When presented with the idea of HandShake, she was open to it, but had concerns about the uniqueness of the passwords created. From her perspective, there are “only so many gestures you can make”.
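
The librarian’s uniqueness concern can be made concrete with a little arithmetic. If a handshake is an ordered sequence of gestures drawn from a fixed vocabulary, the number of possible handshakes is the vocabulary size raised to the sequence length. The sketch below is illustrative only: the vocabulary of 10 gestures and the sequence lengths are assumptions, not measurements of what a Leap Motion sensor can distinguish, and `sequence_count` and `entropy_bits` are hypothetical helper names.

```python
import math


def sequence_count(vocabulary_size: int, length: int) -> int:
    """Count ordered sequences of `length` symbols drawn from a vocabulary."""
    return vocabulary_size ** length


def entropy_bits(count: int) -> float:
    """Bits needed to index `count` equally likely possibilities."""
    return math.log2(count)


ASSUMED_GESTURES = 10  # hypothetical vocabulary size, not a measured value

for length in (3, 4, 6):  # hypothetical handshake lengths
    n = sequence_count(ASSUMED_GESTURES, length)
    print(f"{length} gestures: {n} handshakes, {entropy_bits(n):.1f} bits")

# Reference points: a 4-digit PIN and an 8-character password over the
# 94 printable non-space ASCII characters.
pin_4 = sequence_count(10, 4)
password_8 = sequence_count(94, 8)
print(f"4-digit PIN: {pin_4}, 8-char password: {password_8}")
```

Under these assumed numbers, a four-gesture handshake has exactly as many combinations as a four-digit PIN, so a longer sequence or a larger gesture vocabulary would be needed to approach the space of a typed password.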

Answers to 11 Task Analysis Questions

- Who is going to use the system?

- What tasks do they now perform?

- What tasks are desired?

  - We want to devise an approach that allows users of this product to sign in and out with less time, less effort, and more security. After identifying the user (either through facial recognition or by having the user select an option), HandShake allows the user to present a series of hand gestures inside a black box as his/her password. This requires no typing and no clicking, just simple hand motions. Hand gestures come naturally to people, which may make them a comfortable fit for a routine task such as signing in and out of an account.

- How are the tasks learned?

  - When HandShake is adopted, rather than simply presenting username and password text fields, the system can prompt users to identify themselves by selecting from a list of IDs, and then to insert their hand into HandShake and perform the gestures that verify their identity. A simple tutorial can be shown to first-time users and hidden once users are comfortable with the tasks.

- Where are the tasks performed?

  - The tasks are performed in front of systems where authentication is required. This depends on the user, but in the cases we focus on, a librarian might authenticate at a computer to access a database, a cashier would authenticate at a register, and a student would authenticate at a computer cluster terminal.

- What’s the relationship between user & data?

  - Anyone with potential access to the system (i.e., anyone with a valid username) should be able to submit a handshake. The username can be selected by tapping on-screen (which works well for a list of recent users on the same computer), or facial recognition can identify the individual when he/she approaches HandShake and issue a handshake prompt to authenticate.

  - The data users access after authenticating is outside the scope of our problem. We are concerned only with the steps up to successful authentication. Indeed, much of the data and many of the privileges obtained after authentication may be sensitive and/or personal.

- What other tools does the user have?

- How do users communicate with each other?

- How often are the tasks performed?

  - Users might perform the same tasks multiple times a day, depending on how often they authenticate with the systems concerned. For example, a cashier needs to authenticate with his/her employee credentials every time he/she changes registers. On the other hand, a student logs into Facebook much less frequently because the system leaves the user authenticated for some period by default.

- What are the time constraints on the tasks?

  - Usually, users authenticate with a system to obtain privileged access to data and actions. Authentication should take no more than 10 seconds. Ideally, performing a handshake is faster than typing a username and password.

- What happens when things go wrong?

  - If the user forgets his/her handshake, the system provides a means of “resetting” the handshake after authenticating a different way (mother’s maiden name, text message confirmation, etc.).

  - If the correct handshake is performed but the system does not recognize it, the user should reset the handshake to a clearer one.

Description of Three Tasks

The three tasks users might perform are the following (in ascending order of difficulty):

Current method for the first two tasks: users currently authenticate by typing a username/password combination. The difficulty of this varies widely by individual and by device. For example, new computer users have difficulty typing on a keyboard, so authentication takes some time. Likewise, most mobile phone users can probably relate to the annoying experience of authenticating into mobile apps and web sites on a tiny keyboard.

- User Profile Selection / Handshake Authentication: In this scheme, most applicable to students at a university computer cluster, the user approaches the system and selects the user profile he/she wishes to authenticate into. This can happen in one of two ways: (a) the profile is automatically detected by facial recognition, or (b) the profile is selected from a list of possible/recent users on the screen. Then, the user performs his/her secret handshake sequence in a “black box” of sorts that contains a Leap Motion sensor. If the handshake is correct, the system logs the user in. Otherwise, the user is given another try. We anticipate that performing a secret handshake will be easier and faster for users, especially for new computer users and individuals on mobile devices.

- Card Swipe / Handshake Authentication: As an alternative to selecting a user profile on the screen, some contexts might find it appropriate to select user profiles by swiping an identification card. This is especially true at supermarkets and convenience stores, where users already have such cards to perform common authentication tasks around the store. As a means of confirming the cardholder’s identity, the user then performs a secret handshake as described in the first task above. From the cashier’s perspective, we anticipate that the authentication process will be faster with a handshake; time is of the essence when serving customers in this context.

- Handshake Reset: In this task, the user resets his/her secret handshake sequence, usually for one of two reasons: (1) they forgot their previous handshake, or (2) they seem to remember the handshake, but the system is not recognizing it correctly. In both cases, the user must verify their identity through other means before resetting the handshake. For example, the user might receive a text message containing a secret code to type into the system. Or, the user might be asked for personal information previously set during user creation (such as mother’s maiden name). A combination of these secondary authentication schemes would be the best solution. Though seemingly cumbersome, we want this reset process to be as robust as possible. These procedures are something users are already familiar with from other web applications.
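
The Handshake Reset task can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the system’s implementation: the `Account` record, the `reset_handshake` function, and the representation of a handshake as a tuple of gesture names are all hypothetical. It requires both secondary checks (text-message code and security answer) to pass, following the suggestion above that a combination is best.

```python
import hmac
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass
class Account:
    """Hypothetical user record; a real system would store hashes, not plaintext."""
    username: str
    handshake: Tuple[str, ...]
    security_answer: str  # e.g. mother's maiden name, set at account creation


def _matches(expected: str, given: str) -> bool:
    """Case-insensitive comparison that does not leak timing information."""
    a = expected.strip().lower().encode()
    b = given.strip().lower().encode()
    return hmac.compare_digest(a, b)


def reset_handshake(
    account: Account,
    sent_code: str,
    typed_code: str,
    typed_answer: str,
    new_handshake: Sequence[str],
) -> bool:
    """Replace the stored handshake only if both secondary checks pass."""
    if not new_handshake:
        return False
    code_ok = _matches(sent_code, typed_code)
    answer_ok = _matches(account.security_answer, typed_answer)
    if not (code_ok and answer_ok):
        return False
    account.handshake = tuple(new_handshake)
    return True


acct = Account("alice", ("fist", "wave"), "Smith")
ok = reset_handshake(acct, "123456", "123456", "smith", ["palm", "fist", "point"])
print(ok, acct.handshake)
```

Requiring both checks trades some convenience for robustness, which matches the stated goal of making the reset process as robust as possible.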

Interface Design

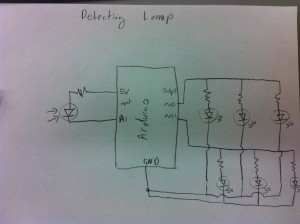

Text Description of System Functionality

When a user approaches the system, it detects the user’s identity by facial recognition. It then confirms this identity by presenting the proposed identity, offering the user the option to change it, and asking the user to enter his/her handshake. If the handshake is correct, the system authenticates the user. Otherwise, the user can try again a limited number of times. This idea differs from existing systems because, for many people, a hand gesture can be easier to remember than a text password, and it is harder to break by automated brute-force input because entering it requires a physical hand. Other security systems rely on different modes of verification, such as inserting a physical key, using biometrics, or providing a password of some sort. A sequence of hand gestures combines the concept of a physical key with one’s biometrics. Physical keys are often difficult to manage because one must always carry them around, while it can safely be assumed that most people will have hands. Passwords have become difficult to manage as increasing safety precautions require more complex passwords with special characters, both cases, and numbers.
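
The flow described above (propose an identity, let the user change it, then allow a limited number of handshake attempts) can be sketched as follows. This is a simplified illustration under assumptions: the limit of three attempts is our placeholder for “a limited number of times”, the function names are hypothetical, and exact tuple comparison stands in for whatever tolerance-based matching real sensor data would require.

```python
from typing import Callable, Optional, Sequence

MAX_ATTEMPTS = 3  # assumed value; the description only says "limited"


def choose_profile(detected: Optional[str], override: Optional[str]) -> Optional[str]:
    """Use the face-recognition guess unless the user picks another profile."""
    return override or detected


def authenticate(
    stored: Sequence[str],
    read_handshake: Callable[[], Sequence[str]],
    max_attempts: int = MAX_ATTEMPTS,
) -> bool:
    """Return True as soon as one attempt matches the stored handshake."""
    for _ in range(max_attempts):
        attempt = tuple(read_handshake())
        # Exact match is a simplification of real gesture recognition.
        if attempt == tuple(stored):
            return True
    return False


# Simulated sensor input: the first attempt is wrong, the second is right.
attempts = iter([("fist",), ("palm", "fist")])
profile = choose_profile(detected="alice", override=None)
print(profile, authenticate(("palm", "fist"), lambda: next(attempts)))
```

Capping the number of attempts is what limits repeated guessing; what happens after the cap is reached (lockout, fallback to the reset procedure) is left open here.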

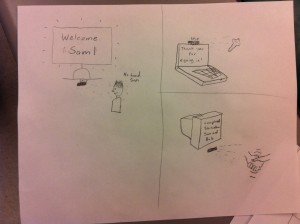

Three Storyboards for Our User Tasks

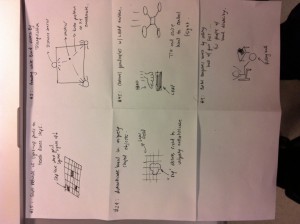

Sketches of System Itself