Observations:

The bulk of my observations were made in various classrooms before and after class, although I did devote some time to observing students in transit between classes. For the most part, students’ activities were indicative of idleness, such as socializing and resting; which activity in particular depended primarily on the size of the group the student was in. In larger groups (3 or more students), students were most often chatting with friends. At one point or another before class, nearly every student that I observed took out materials to take notes; likewise, after class, people packed up their things. The activities of students in small groups (alone or with one other person) were much more varied, including texting, eating, playing games on their computers, and checking email or social media. After class, students were observed standing up and stretching, and occasionally a student was still asleep at the end of a lecture.

In between classes, the set of activities was narrower, limited to things that could be accomplished on a mobile device or in person. Most often, students were simply walking with their bags, sometimes with a phone in hand. When asked, these students said they were most often checking Facebook or texting friends. In groups, students often chatted (varying from somewhat quiet to quite raucous), typically with at most two members of the group using their mobile phones at one time. Additionally, the occasional student ran to make it to class on time before the bell rang.

On reflection, I decided to focus my brainstorming on ways to keep students more active during this intermediary period. I postulated that being more active before and between lectures would help students pay more attention and perhaps make them less likely to fall asleep during lecture.

Brainstorming:

The following are my one-line ideas for the brainstorming component of the project:

1. Remind yourself of assignments/projects/readings/etc. for classes

2. In real time analyze what people are talking about right after class

3. Check out what’s for lunch today at your respective dining hall/eating club

4. Calculate the fastest path between two classes for high efficiency walking

5. Sleepy tracker – monitor wakefulness during the day as a function of sleep/naps

6. Princeton trivia game – cool facts you never knew about Princeton

7. Fun music player – plays bassline/guitar/drums, and you can play along with it

8. Save the day’s lecture slides to your computer

9. Jeopardy-style game about lecture, featuring material covered in class

10. Reminder to make sure you don’t leave any of your belongings behind

11. The 5-minute trainer makes a short workout to do before sitting down for an hour+

12. Add student events in the next three days to your calendar

13. Social game where you score points by interacting with classmates

14. Determine how many students are present so the teacher can begin lecture

15. Scavenger-hunt style game where you get points by going to places around campus

Ideas to prototype:

The ideas I’m picking to design prototypes for are ideas 11 and 4. The idea of a small personal trainer is interesting because, by the nature of lecture, we often spend a long time sitting down, and many people find they can stay more focused for longer after exercising a bit. A mapping app could help a student like those I observed running between classes get to class sooner, and even allow them to see exactly how fast they need to go to make it to class on time.

Prototypes:

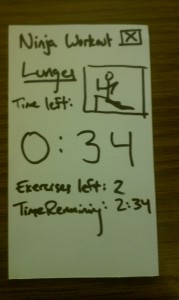

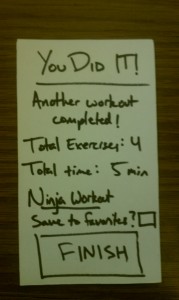

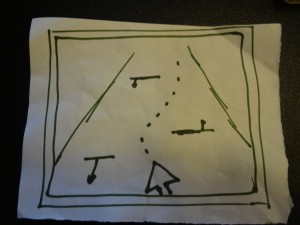

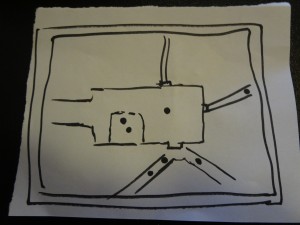

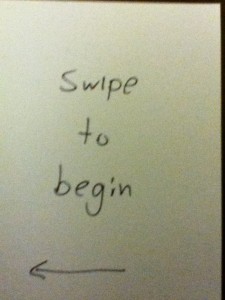

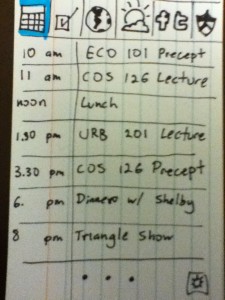

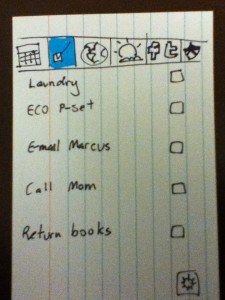

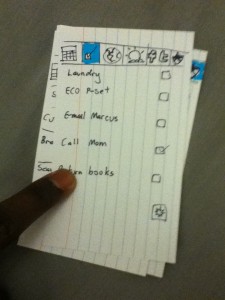

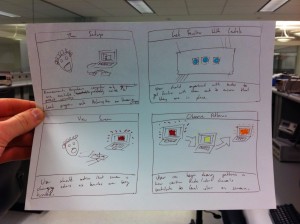

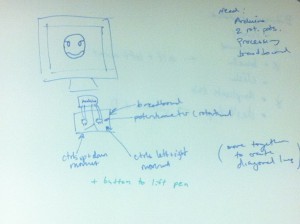

For the workout prototype, I decided to use the smartphone form factor because the app needs to be on a device that is widely available and also extremely portable, and the smartphone fits both of these needs. When running the workout app prototype, the user is presented with a starting screen, from which they can start a random workout, check out their list of favorited workouts, see their friends’ usage of the app, and adjust their own personal settings. Pressing the random workout button immediately brings the user to a workout confirmation page that shows the duration and intensity of the workout, from which they can continue on to the workout. The workout duration is automatically calculated based on the starting time for the class and the current time. The workout screen presents one exercise at a time, showing the time remaining on the exercise, the time remaining in the workout, and the number of exercises remaining. Upon finishing or cancelling the workout, the user is brought to the workout completion page, where the workout is logged and the user is given the option to favorite the workout.
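As a rough sketch of how that duration calculation might work (the paper prototype does not specify it), the snippet below subtracts the current time and a "finish early" buffer from the class start time. The function name `workout_minutes`, the 2-minute default buffer, and the 5-minute cap (borrowed from the "5-minute trainer" idea) are my own assumptions for illustration.

```python
from datetime import datetime, timedelta


def workout_minutes(now, class_start, buffer_minutes=2, max_minutes=5):
    """Return the whole minutes available for a workout (0 if there is no time).

    buffer_minutes stands in for the "time to end the workout before lecture"
    setting; max_minutes is an assumed cap, not something the prototype defines.
    """
    available = class_start - now - timedelta(minutes=buffer_minutes)
    minutes = int(available.total_seconds() // 60)
    # Clamp to the range [0, max_minutes] so late arrivals get no workout.
    return max(0, min(minutes, max_minutes))


if __name__ == "__main__":
    start = datetime(2013, 2, 25, 11, 0)
    print(workout_minutes(datetime(2013, 2, 25, 10, 56), start))
```

With these assumed defaults, a student who opens the app four minutes before class would be offered a two-minute workout, and one who arrives with a minute to spare would be offered none.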

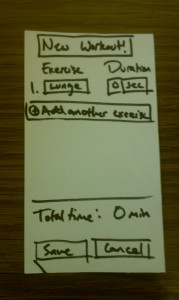

Additionally, the user can view their favorite workouts, and the number of times they’ve completed each one, on the Favorite Workouts page. The user can start any of their favorite workouts, or they can design a new workout. When making a new workout, the user can rename the current workout, type in an exercise and a duration for the exercise, and add/remove exercises. The total time for the workout is tallied at the bottom of the screen.

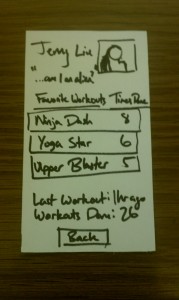

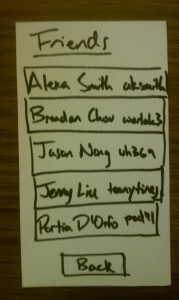

Clicking on the friends link on the home page brings up a list of friends. Clicking on one of those friends brings up their profile, where one can view that friend’s favorite workouts, how many workouts they have completed, and the time of their last workout. Clicking on one of these workouts leads back to the workout confirmation screen, enabling one to try a friend’s workout.

Clicking on the settings button enables one to change their personal settings. These include the default difficulty of the workout, how long before lecture the workout should end, the username, the sound level, and whether to use vibrate.

————————————————————————————–

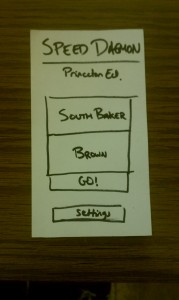

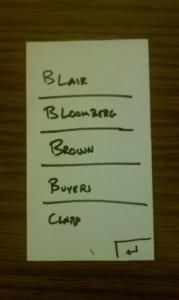

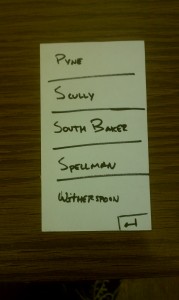

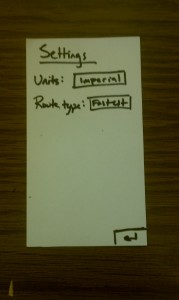

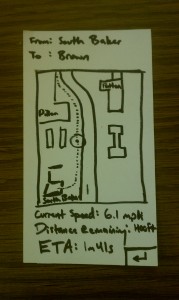

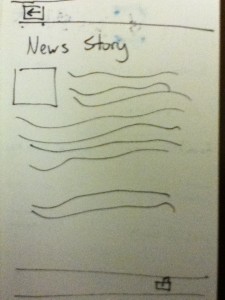

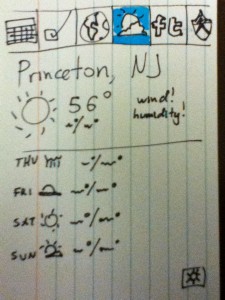

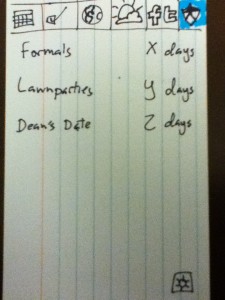

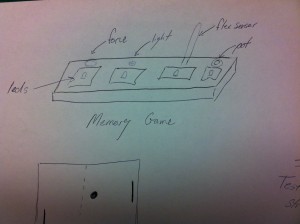

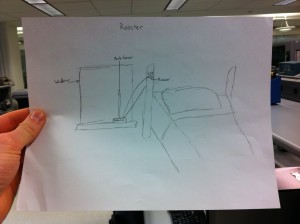

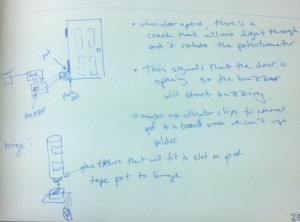

For the mapping app, I decided to go with a much simpler layout, since the functionality of this program is quite a bit more limited. I figured that it ought to help a user accomplish the single task of getting from point A to point B as quickly as possible. As such, picking points A and B should be very easy. On the home page, one can choose the starting point and the destination by pressing the corresponding buttons, then immediately ask for directions or change the app’s settings. The only setting that can be modified is the route type, which can be “Fastest,” “Shortest distance,” or “Late Meal,” the last of which directs the student towards Frist en route to the destination.

Once a path is requested, a map screen is loaded, displaying the starting and ending locations, the path to follow, the current location of the user via GPS, the user’s current speed, the remaining distance, and the time to arrive at the destination.
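The numbers on the map screen could be derived with simple arithmetic. The sketch below is only an illustration under my own assumptions (metres and seconds as units, and the hypothetical function names `eta_seconds` and `required_speed`); the paper prototype itself does not define how these values are computed.

```python
def eta_seconds(remaining_m, speed_mps):
    """Estimated seconds to arrive at the current speed, or None if not moving."""
    if speed_mps <= 0:
        return None
    return remaining_m / speed_mps


def required_speed(remaining_m, seconds_until_class):
    """Speed in m/s needed to arrive exactly on time, or None if already late."""
    if seconds_until_class <= 0:
        return None
    return remaining_m / seconds_until_class


if __name__ == "__main__":
    # 300 m to go at a 1.5 m/s walk, with 2 minutes until class starts.
    print(eta_seconds(300, 1.5))
    print(required_speed(300, 120))
```

Comparing the required speed against the user’s current speed is what would let the app tell a student whether they need to speed up to make it to class on time.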

Evaluation:

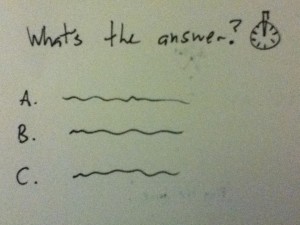

I completed testing with three real users. For the mapping app, I introduced the app to users as they were about to leave one hall en route to another; in one instance, I also asked a user to simply play around with the app and describe the experience, rather than try to extract any useful information from it. Before each test, I mentioned to the user that every area of the screen with a black box around it was an interactive component, encouraging them to touch those points and see what happens. Nobody that I asked to demo my prototype had ever used a paper prototype before, so they needed a short acclimation period to become accustomed to it. After that period, most people were able to navigate the interface with relative ease. However, some users felt that they had exhausted the possibilities of the app rather quickly and became bored with it after a short time. One user suggested the possibility of viewing others on the map who were also using the application. Still, there was overwhelming appreciation for the “Late Meal” setting, which I meant to be more humorous than functional.

Insight:

I found that during the actual evaluation of prototypes, it was far more useful to give the user a task than to simply let the user play with the application, especially since both of these applications are designed to accomplish a very specific task. I saw this when I gave one user the app without asking him to use it to find the shortest path between two places. Without a task, this user felt very undirected and said that he could see how the application would be useful for him but didn’t enjoy the experience of using it.

Additionally, most people left me with the impression that they walked away unsatisfied with what the app provided them. In the next redesign, I would change the design to emphasize the final result, the calculated route. Because this app targets a very particular user group, the set of people who are interested in getting places efficiently, I may well have picked testers outside this group, which could explain why the reviews were more negative than I had hoped. However, this does indicate to me that I’m going to have to make the app more enjoyable or useful for people beyond this group if I want to garner more interest in it.