Lab 14

Stephen: wrote up the descriptions of the interviewed users, planned most of the contextual inquiry sections, and helped conduct interviews.

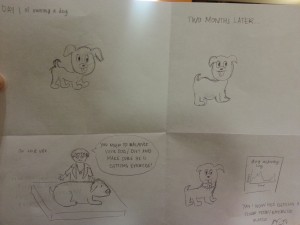

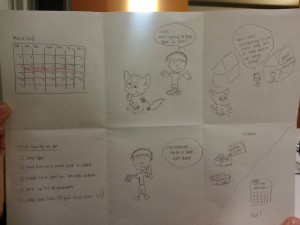

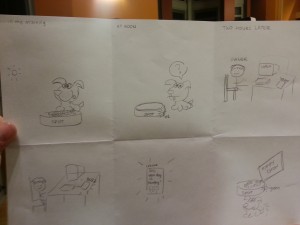

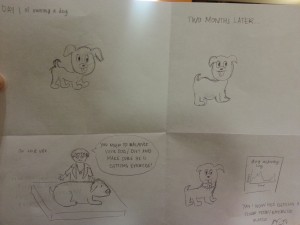

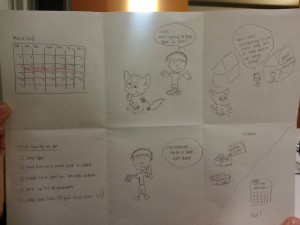

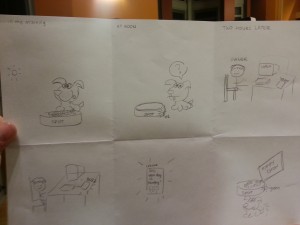

Karena: drew the three storyboards, helped with the task analysis questions, and worked on writing up the interface design questions.

Jean: helped conduct interviews, helped with the contextual inquiry writeups, and wrote up the tasks for the users.

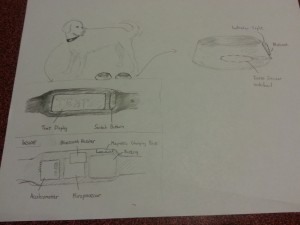

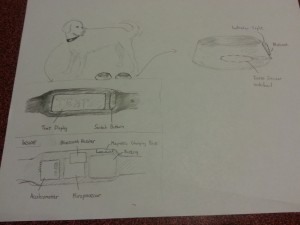

Eugene: drew the pictures, answered the task analysis questions, and helped conduct the interviews.

Problem and solution overview

We are addressing the problem of taking care of a dog, which involves tasks that are often shared between multiple people, completed and monitored by routine and memory, and sometimes entrusted to others when owners leave their dogs for extended periods of time. These tasks, the most important of which are feeding, exercising, and monitoring a dog’s location, are currently done through imprecise measures, cannot be monitored over long periods of time, and are periodically forgotten. We propose a system with two devices: one that attaches to a dog’s food and water bowl and another that goes on its collar. Together they detect the dog’s food and water intake, how much exercise or activity it has gotten, and its location, and aggregate this data for viewing on a mobile device. The system alerts the owner when the dog has not been fed according to schedule, tracks whether the dog has gotten enough activity over time, and shows its location, so owners can check up on it when they are not home.

Description of users you observed in the contextual inquiry

Our target user group is dog-owners who are concerned about their dogs’ health and who must spend time away from their household due to business, vacation, etc. They might share responsibility for the dog with others, and when they travel they must leave their dog at home with either a neighbor or a paid caretaker to look after it. We chose this target group because they would benefit the most from our idea and have a strong current need that must be resolved. Our first interviewee was a high-school student who owns a beagle. She shares the responsibilities for the dog with her sister, and says she forgets to feed her dog about once every two weeks. When she travels with her family, they usually ask her neighbor to look after the dog. Our second user is a graduate student who lives on campus with his dog. He is its primary caretaker, but he has to leave it inside while he teaches classes and does work in the lab. He says his lab schedule is often unpredictable and runs over time, so he cannot follow a regular routine and is concerned his dog doesn’t get enough activity. Our last interviewee was a stay-at-home mother whose kids have all moved out of the house. She owns a dog (and two cats) and is its primary caretaker. She usually completes all of the tasks involved in taking care of her dog right before and right after work. She is very routine-driven and rarely forgets to take care of her dog, but she becomes extremely stressed when she is away from home because she worries about whether it is OK. This makes it hard for her to visit her kids or go on vacation for extended periods of time.

CI interview descriptions

We conducted several interviews in a variety of locations. Our general approach was twofold. First, we observed dog-owners as they walked around campus with their dogs, took notes on our observations, and eventually approached them. Second, we asked some preliminary questions of people we knew who have dogs at home, and then asked if we could talk to the primary caretakers in their family. The graduate student we interviewed was someone we had observed walking his dog around our dorm, and whom we approached and asked questions.

All of our users cared deeply about their dog’s well-being and felt that their dog was an important part of their life. All of our users were also busy and reported forgetting to feed their dog at least periodically. The high-schooler we interviewed was unique in that she was the only person who shared responsibility for her dog. She also mentioned that her dog often has other medical needs that must be handled on a recurring schedule, which suggested additional functionality for our interface, such as another button that would allow for checking up on personalized activities like giving medicine. The graduate student we interviewed was unique because he had a more unpredictable schedule than the other users and had the most trouble following a routine, so he would probably benefit the most from a mobile device. The stay-at-home mom we interviewed was unique in that she didn’t really have many issues with feeding or exercising her dog. She was also unique in how anxious she said she got when she was away from her dog. She said that this actually limits how long she can leave the house, so feedback from the system would allow her to feel more relaxed during holidays and on vacations. It makes sense that all of the owners we interviewed cared about their dog and were interested in improving their dog’s lifestyle. However, it is clear that different lifestyles and ages (students or working adults) lead to different issues in taking care of a pet.

Answers to 11 task analysis questions

1. Who is going to use the system?

Our target user group: dog-owners who have a vested interest in the well-being of their dog yet are too busy to look after it sufficiently.

2. What tasks do they now perform?

Our current target user group feeds the dog, gives the dog water, walks the dog, and must make sure the dog stays within the appropriate boundaries (by putting up fences, etc.). If the dog owner must leave for vacation, they must make arrangements with someone for their dog to be taken care of while they are gone.

3. What tasks are desired?

One desired task is to set reminders for the user to feed the dog, or to allow multiple people to feed a dog with little overlap. Another task would be to check up on the dog to know whether it is getting sufficient exercise and staying healthy, relative to what it is eating. Also, it would be helpful to easily transition between users, so that if a user is going away for vacation, their dogsitter can easily know when to feed the dog, while the user can know whether their dog is being taken care of appropriately.

4. How are the tasks learned?

The tasks are very visual, and therefore, easy to learn. The system is automated and serves as a friendly reminder to perform tasks. As soon as the user becomes familiar with how the reminders/updates about his/her dog work, he/she will learn how to respond to them, and therefore, learn the tasks.

5. Where are the tasks performed?

The tasks are mainly performed within the household: feeding the dog, giving the dog water, or walking outside around the house. The task of checking up on the dog while away from the household can be done from any location.

6. What’s the relationship between the user and data?

The user will receive data about their dog (charts about fitness level and dietary intake) and the location of their dog through a mobile app connected to the bowl-collar system. The user can also receive alerts if any of these levels are outside a reasonable range. Given certain data, the user may change their behavior (giving less food, exercising more, etc.).

7. What other tools does the user have?

Users will also most likely have mobile phones that they can use in conjunction with this system. They will probably also have calendars, either electronic or not, that will be used to schedule important events for their dog. We can facilitate interaction amongst these tools by having the mobile phone, email, etc. all connect to this app.

8. How do users communicate with each other?

The users of the system communicate implicitly with one another. For instance, the job of feeding the dog becomes a shared task under this system; if one person forgets, all the owners of the dog will get notified about the dog being hungry, and they can respond to this reminder. Thus, the responsibility of feeding the dog becomes a shared responsibility.

9. How often are the tasks performed?

Two of the tasks are performed on a daily basis. The activity monitor senses the motion of the dog, and how active it has been, in real time. Meanwhile, the food reminders occur whenever the user has forgotten to feed the dog; this will vary from user to user. Finally, the task that serves to assure the user that the dog has been fed while the owner is away will be performed when the user has left for an extended period of time; this also varies depending on the user. The GPS tracking system will be used as frequently as the dog escapes from the backyard.

10. What are the time constraints on the tasks?

The time constraints on the tasks are not very strict. As long as the reminder that the dog has not been fed is sent in a timely fashion (within 1 hour), the system should be useful to the user. When the user is getting updates (while on vacation) about the well-being of his/her dog, timing might be a little more relevant. Still, the data can be sent with a 1-2 hour grace period.

11. What happens when things go wrong?

When things go wrong (perhaps the weighing system is not calibrated well enough and the food is constantly setting off alerts, or maybe the activity monitor is not outputting the relevant data), the user will get unreliable data that could harm the pet or simply annoy the user. Also, if the collar were to be removed by accident, important data would be missing (the user wouldn’t be able to locate the dog, etc.).

Description of three tasks

Task 1: Checking who last fed the dog and when, and deciding when/whether to feed their dog.

Currently, this task is done mainly through routine and memory. Dog owners typically have some kind of system set up with family members, apartment-mates, etc., where they split up responsibility for feeding their dog. They have a routine for how much, how many times a day, and at what times they feed their dog, and they remember to do this task by habit (maybe feeding their dog when they eat). An owner might feed their dog twice a day in the same amount (a measuring cup), once in the morning and once in the evening. If multiple people share responsibility for feeding the dog, they might communicate orally, by texting, etc., to ask each other whether they have fed the dog. This task is currently not very difficult, as it becomes habitual over time, but coordinating with multiple family members may pose intermittent problems, and most users report periodically forgetting to feed their dog. Using our proposed system, coordinating this task with multiple people would be much easier, as the user would only need to check the dog bowl to see whether it is necessary to feed their pet. In addition, the number of times the user forgets to feed their dog would be reduced, as the system would ping their mobile device when the usual feeding schedule has not been followed.

Task 2: Checking and regulating the activity of your dog

Currently, dog-owners check and regulate their dog’s activity through routine, memory, and some measure of guesswork. This is a moderately difficult task. Owners usually have a routine of how many times per day or week they take their dog on a walk, and they might adjust this according to their schedule (taking a shorter route when they are busier, etc.). If they leave their dog outside for extended periods of time, they might guess how much activity it has gotten and count this time in lieu of other forms of activity such as walking. In addition, activity is monitored and adjusted using relatively recent remembered “data”, such as whether the dog got less activity on a certain day or week (it is harder to remember long-term activity levels and trends). This might lead a pet to get less activity than needed over an extended period of time and lead to weight gain, etc. Using our proposed system, checking and regulating a dog’s activity would be much easier, as owners would not have to rely on memory. They would not have to guess how much activity a dog gets when it is left alone outside, and thus would have a more accurate holistic view of its activity. In addition, users could easily access long-term data about their dog’s activity level, and therefore see trends over a period of several weeks or months and adjust their schedule accordingly to avoid giving their pet excessive or insufficient exercise.
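The long-term trend view described for this task could be computed roughly as follows. This is only a sketch under our own assumptions: the collar is assumed to report one total of active minutes per day, and the function names and the 60-minute daily target are hypothetical placeholders, not values from our interviews.

```python
from collections import defaultdict
from datetime import date


def weekly_activity_minutes(daily_minutes):
    """Average active minutes per recorded day, grouped by (ISO year, ISO week)."""
    buckets = defaultdict(list)
    for day, minutes in daily_minutes.items():
        iso = day.isocalendar()
        buckets[(iso[0], iso[1])].append(minutes)
    # Sort by week so the result reads as a chronological trend.
    return {week: sum(vals) / len(vals) for week, vals in sorted(buckets.items())}


def weeks_below_target(daily_minutes, target_minutes=60.0):
    """Weeks whose daily average fell below the (hypothetical) activity target."""
    weekly = weekly_activity_minutes(daily_minutes)
    return [week for week, avg in weekly.items() if avg < target_minutes]


# Example with made-up readings from the collar.
log = {
    date(2024, 1, 1): 70,
    date(2024, 1, 2): 90,
    date(2024, 1, 8): 30,
    date(2024, 1, 9): 50,
}
low_weeks = weeks_below_target(log)
```

Averaging per week rather than per day smooths over a single busy day, which matches the kind of multi-week trend that owners said they cannot track from memory.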

Task 3: Taking care of your dog when you are away from home for extended periods of time.

Currently, users deal with this problem using a variety of methods. Typically, they leave their dog in the care of someone they know, usually a neighbor, friend, or family member. They might give their dogsitter a key to their house so that they can go in every day to feed, walk, and check up on their dog, or they might have the dogsitter take the dog to their own home to take care of it. They usually leave written or oral instructions about how much and how often to feed their dog, how often to let it out, and how much and how often to exercise it. These dogsitters might have varying experience taking care of pets, and the owner might check up on the status of their dog by calling or texting the dogsitter periodically. Overall, this is currently a difficult and stressful task, as many owners worry whether their dog is being taken care of correctly, and they might not know how responsible or trustworthy their dogsitter is. Using our proposed system, this task would become much easier for both the owner and whoever has responsibility for the dog while the owner is away. Owners would be able to check the status of their dog remotely, and easily see whether their pet has eaten, been let outside, and been walked. In addition, even dogsitters with very little experience taking care of dogs would find it easier to complete this task, as they would easily be able to see when the dog has not been fed enough and when they are deviating from its usual schedule. With mobile pings, they would also be notified when they forget to feed the dog, which might be helpful because feeding is not part of their regular schedule and is thus not habitual.

Interface Design

1. Text description of the functionality of the system

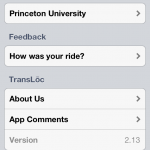

The pet-care system has three main components: a dog water and food bowl with weight sensors and an LED system, a motion-detecting sensor on the collar of the dog which includes a GPS tracking system, and an interface that allows the users to get data and reminders from the system. The weight sensor tracks how much the dog has been fed, and the user is notified when they have forgotten to feed the dog or when the dog has not been eating. The user can also use the system to monitor how often the dog has been exercising and to give an amount of food that is proportional to the dog’s exercise. When an owner leaves his or her dog in the care of a neighbor or a friend, the system allows the owner to get updates about the dog’s activities. The GPS system attached to the collar of the dog will notify the user of the dog’s location. Together, these functions help ensure the safety, health, and attention that a dog needs from its owner. The existing device that most resembles this system is called Tagg. Tagg, however, features a GPS tracking system and does not have the additional functionality of ensuring that the user’s dog is fed and getting sufficient exercise. Furthermore, our system is fully automated through the bowl and collar devices. This allows the system to cause little interference in the pet-owner’s life, and makes it easy to use.
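One way the bowl's weight readings could be turned into a missed-feeding reminder is sketched below. It is an illustration under assumptions of our own, not a finished design: `BowlReading`, `feeding_times`, `missed_feedings`, the 30-gram refill threshold, and the one-hour grace window are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class BowlReading:
    time: datetime
    grams: float  # weight of food currently in the bowl


def feeding_times(readings, min_refill_grams=30.0):
    """Times at which the bowl weight jumped up, which we treat as a feeding."""
    times = []
    for prev, cur in zip(readings, readings[1:]):
        if cur.grams - prev.grams >= min_refill_grams:
            times.append(cur.time)
    return times


def missed_feedings(readings, scheduled, now, grace=timedelta(hours=1)):
    """Scheduled slots whose grace window has passed with no refill near them."""
    fed = feeding_times(readings)
    missed = []
    for slot in scheduled:
        deadline = slot + grace
        if now < deadline:
            continue  # still inside the grace window, too early to alert
        if not any(slot - grace <= t <= deadline for t in fed):
            missed.append(slot)
    return missed


# Example with made-up readings: fed in the morning, not in the evening.
day = datetime(2024, 1, 1)
readings = [
    BowlReading(day.replace(hour=7), 0.0),
    BowlReading(day.replace(hour=8, minute=5), 100.0),
    BowlReading(day.replace(hour=12), 20.0),
    BowlReading(day.replace(hour=19), 20.0),
]
schedule = [day.replace(hour=8), day.replace(hour=18)]
alerts = missed_feedings(readings, schedule, now=day.replace(hour=20))
```

Each slot in `alerts` would trigger a ping to every owner or dogsitter linked to the bowl. Detecting a refill from a jump in weight, rather than from a button press, keeps the system automated in the way described above.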

2. Three storyboards

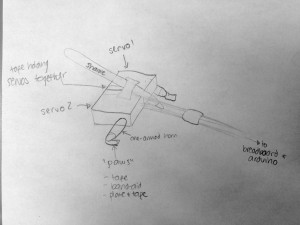

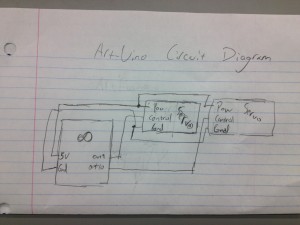

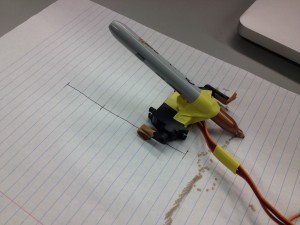

3. A few sketches of the system itself

A schematic of the actual device, showing the weight-sensing bowl and the text display. It also shows the components: switch button, text display, accelerometer, microprocessor, battery, magnetic charging point, and a Bluetooth receiver.

A potential interface for the mobile app that would accompany the device. Its functionality is described above.